Next: Logistic Regression and Binary Up: Regression Analysis and Classification Previous: Bayesian Linear Regression

The goal of nonlinear least squares (LS) regresion is to

model the nonlinear relationship between the dependent variable

![${\bf x}=[x_1,\cdots,x_d]^T$](img491.svg)

![${\bf\theta}=[\theta_1,\cdots,\theta_M]^T$](img501.svg)

|

(187) |

for the model to fit the

training dataset

for the model to fit the

training dataset  , so that, its output

, so that, its output

matches the ground truth labeling

matches the ground truth labeling  in the training set,

i.e., the residual

in the training set,

i.e., the residual

is ideally zero:

where

is ideally zero:

where

is the predicted output by the regression function based on the

nth data point

is the predicted output by the regression function based on the

nth data point  . We see that the regression problem

is equivalent to solving a nonlinear equation system of

. We see that the regression problem

is equivalent to solving a nonlinear equation system of  equations for the

equations for the  unknown parameters in

unknown parameters in

. As

typically there are many more data samples than the unknown

parameters, i.e.,

. As

typically there are many more data samples than the unknown

parameters, i.e.,  , the equation

, the equation

is over-overconstrained without an exact solution. We therefore

consider the the optimal parameter

is over-overconstrained without an exact solution. We therefore

consider the the optimal parameter

that minimizes

the sum-of-squared error based on the residual

that minimizes

the sum-of-squared error based on the residual  :

To do so, we first consider the gradient descent method based

on the gradient of this error function:

:

To do so, we first consider the gradient descent method based

on the gradient of this error function:

is the

is the  Jacobian matrix

Jacobian matrix

of

of

. The mth component

of the gradient vector is

. The mth component

of the gradient vector is

is

the element in the nth row and mth column of

is

the element in the nth row and mth column of  ,

which is simply the negative version of Jacobian of

,

which is simply the negative version of Jacobian of

:

:

|

(192) |

The optimal parameters in

|

(193) |

properly may

be tricky, as it depends on the specific landscape of the

error hypersurface

properly may

be tricky, as it depends on the specific landscape of the

error hypersurface

. If

. If  is too large, a minimum may be skipped over, but if

is too large, a minimum may be skipped over, but if  is too large, the convergence of the iteration may be too

slow.

is too large, the convergence of the iteration may be too

slow.

This gradient method is also called

batch gradient descent (BGD), as the gradient in

Eq. (190) is calculated based on the entire batch

of all

|

(194) |

based on one data point is

the same as the true gradient (scaled by

based on one data point is

the same as the true gradient (scaled by  ) based on

all

) based on

all  data points in the dataset

data points in the dataset

![$\displaystyle E[ {\bf g}_n({\bf\theta}) ]

=\sum_{n=1}^N P({\bf x}_n) {\bf g}_n(...

...{N}\sum_{n=1}^N \frac{dr_n}{d{\bf\theta}}\;r_n

=\frac{1}{N}{\bf g}({\bf\theta})$](img913.svg) |

(195) |

As the data are typically noisy, the SGD gradient based on

only one data point may be in a direction very different

from the true gradient based on the mean of all

Some trade-off between BGD and SGD can be made so that the

gradient is estimated based on more than one but less than

all

Alternatively, the optimal parameter

![[*]](crossref.png) ) in

Chapter 2:

) in

Chapter 2:

.

Here

.

Here

in the pseudo-inverse is actually an

approximation of the Hessian

in the pseudo-inverse is actually an

approximation of the Hessian

of the error

function

of the error

function

, as shown in the example

in a previous chapter,

and

, as shown in the example

in a previous chapter,

and

is the gradient of the error function given in Eq. (190).

So the iteration above is essentially:

is the gradient of the error function given in Eq. (190).

So the iteration above is essentially:

|

(197) |

as the second order derivative of the error function

as the second order derivative of the error function

is approximated by the Jacobian

is approximated by the Jacobian

as the first order derivative of the

regression function

as the first order derivative of the

regression function

, making

the implementation computationally easier.

, making

the implementation computationally easier.

In the special case of linear regression function

![$\displaystyle \hat{\bf y}={\bf f}({\bf X},{\bf w})

=\left[\begin{array}{c}f({\b...

...nd{array}\right]{\bf w}

=[{\bf x}_1,\cdots,{\bf x}_N]^T{\bf w}={\bf X}^T{\bf w}$](img925.svg) |

(198) |

![${\bf w}=[w_0,\,w_1,\cdots,w_d]^T$](img926.svg) and

and

![${\bf x}_i=[1, x_{i1},\cdots,x_{id}]^T$](img927.svg) are both augmented

are both augmented  dimensional vectors and

dimensional vectors and

![${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$](img498.svg) .

The Taylor series is

.

The Taylor series is

|

(199) |

|

(200) |

|

|

|

|

|

|

||

|

|

(201) |

can be found in a single

step without iteration, same as that in Eq. (116).

can be found in a single

step without iteration, same as that in Eq. (116).

The Matlab code for the essential parts of the algorithm is

listed below, where data(:,1) and data(:,2) are the

observed data containing

NLregression is called to estimate the

function parameters:

sym x; % symbolic variables

a=sym('a',[3 1]); % symbolic parameters

f=@(x,a)a(1)*exp(a(2)*x)+a(3)| % symbolic function

[a er]=NLregression(data(:,1),data(:,2),f,3); % call regression

The code below estimates the

function [a er]=NLregression(x,y,f,M) % nonlinear regression

N=length(x); % number of data samples

a=sym('a',[M 1]); % M symbolic parameters

F=[];

for i=1:N

F=[F; f(x(i),a)]; % evaluate function at N given data points

end

J=jacobian(F,a); % symbolic Jacobian

J=matlabFunction(J); % convert to a true function

F=matlabFunction(F);

a1=ones(M,1); % initial guesses for M parameters

n=0; % iteration index

da=1;

while da>tol % terminate if no progress made

n=n+1;

a0=a1; % update parameters

J0=J(a0(1),a0(2)); % evaluate Jacobian based on a0

Jinv=pinv(J0); % pseudo inverse of Jacobian

r=y-F(a0(1),a0(2)); % residual

a1=a0+Jinv*r; % Newton iteration

er=norm(r); % residual error

da=norm(a0-a1); % progress

end

end

where tol is a small value for tollerance, such as  .

.

Example 1

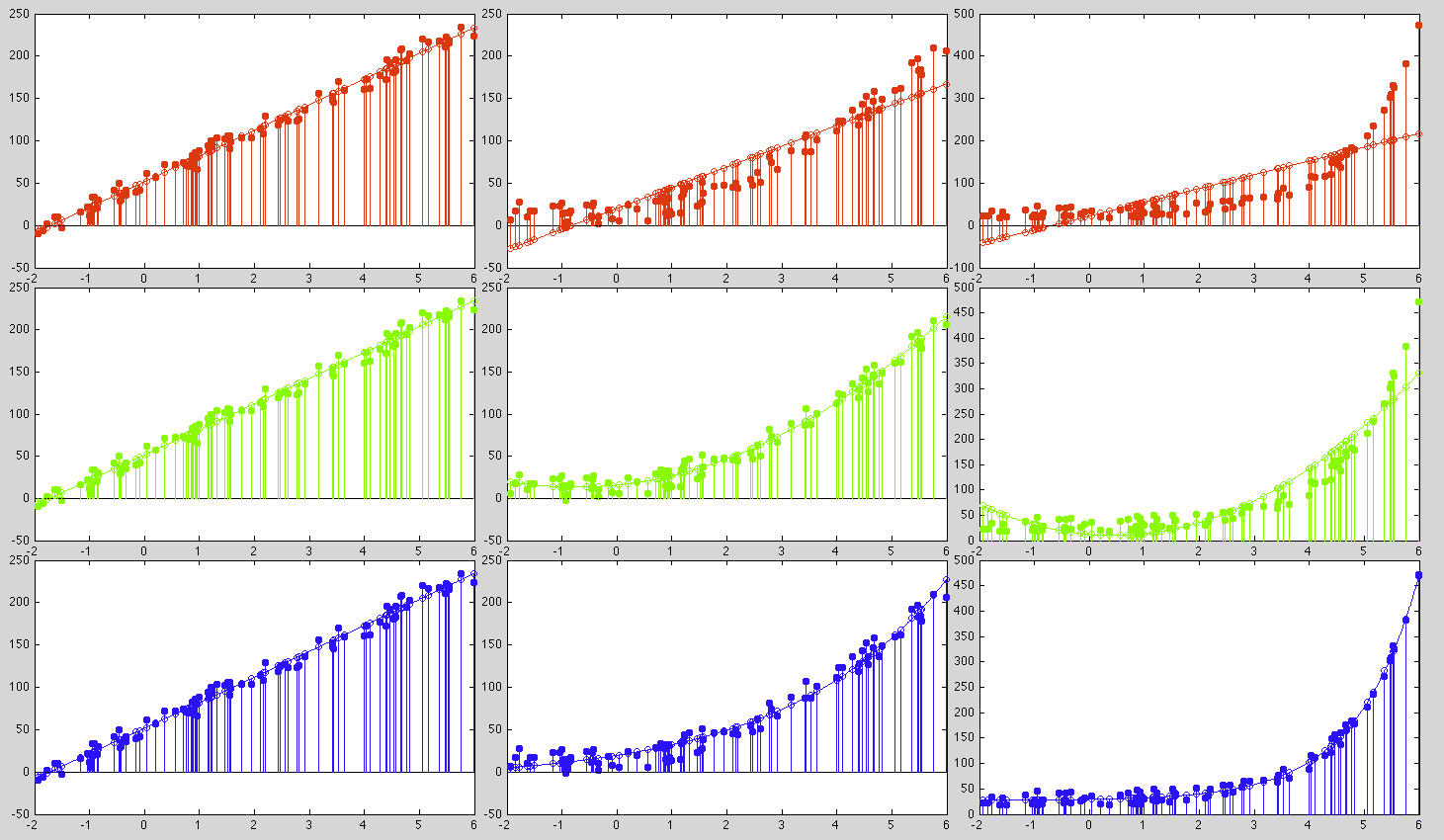

Consider three sets of data (shown as the solid dots in the three columns in the plots below) are fitted by three different models (shown as the open circles in the plots):

These are the parameters for the three models to fit each of the three datasets:

|

We see that the first dataset can be fitted by all three models equally

well with similar error (when

Example 2

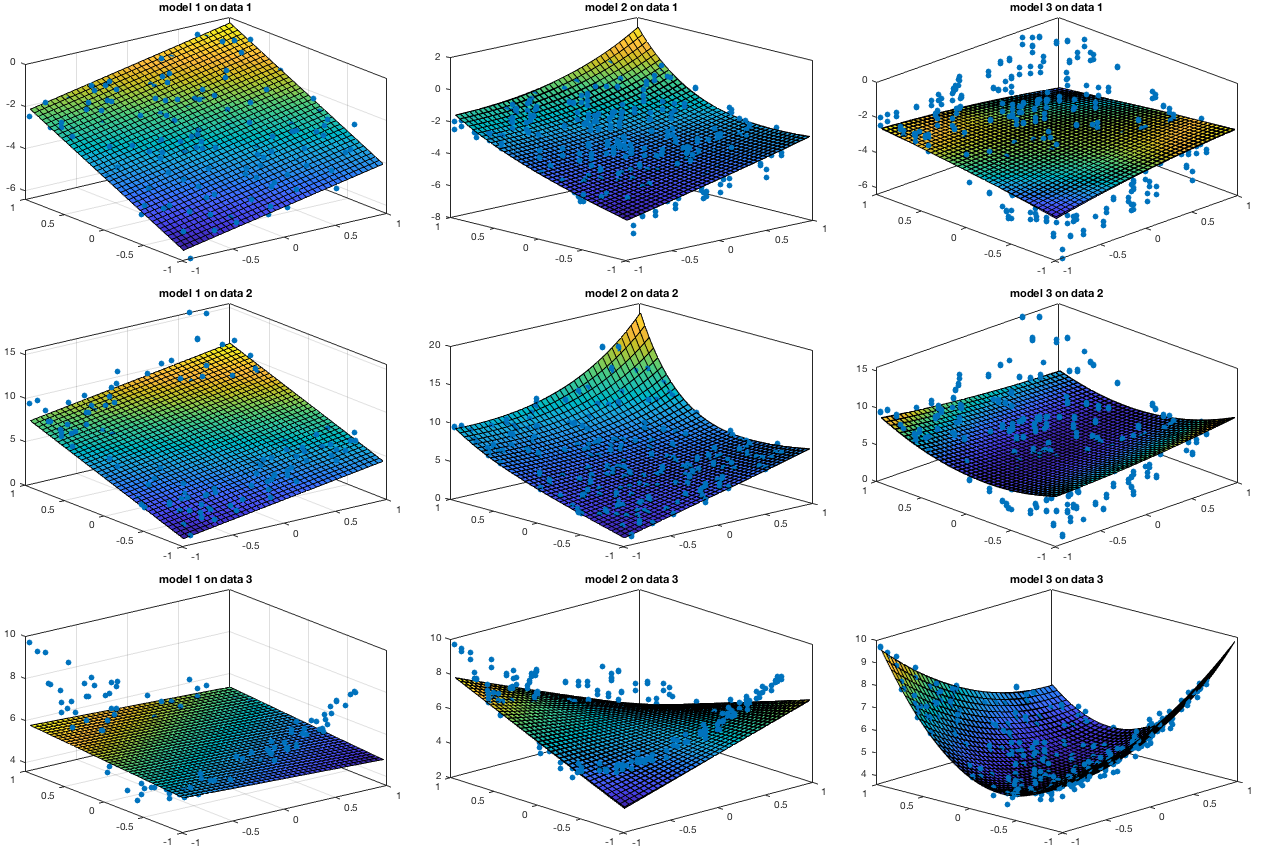

Based on the following three functions with four parameters

|

|

|

|

|

|

|

|

|

|

|

(202) |

Then each of three datasets are fitted by the three functions as models while the optimal parameters are found by the Gauss-Newton method.

|

The Levenberg-Marquardt algorithm (LMA) or

damped least-squares method is a method widely

used to minimize a sum-of-squares error

Specifically, the LMA modifies Eq. (196)

![$\displaystyle {\bf\theta}_{n+1}={\bf\theta}_n

+{\bf J}^-_f({\bf\theta}_n)[ {\bf...

...\bf J}_n^T{\bf J}_n)^{-1}{\bf J}_n^T

[ {\bf y}-{\bf f}({\bf X},{\bf\theta}_n) ]$](img950.svg) |

(203) |

:

where

:

where

is the non-negative damping factor, which is to

be adjusted at each iteration. We recognize that this is actaully

similar to Eq. (144) for ridge regression.

is the non-negative damping factor, which is to

be adjusted at each iteration. We recognize that this is actaully

similar to Eq. (144) for ridge regression.

In each iterative step, if

|

(205) |

is updated along the negative direction of

the gradient in Eq. (190):

is updated along the negative direction of

the gradient in Eq. (190):

|

(206) |

.

.

By adjusting the parameter