
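Newton's method discussed above is based on the Hessian matrix ${\bf H}$ of the function to be minimized, i.e., on its second order derivatives. When the Hessian is unavailable or too expensive to compute and invert, the gradient descent method can be used instead, as it requires only the gradient.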

We first consider the minimization of a single-variable function $f(x)$. From any initial point $x_0$, we move to a nearby point $x_1$ in the direction opposite to the derivative $f'(x_0)$:

$$x_1=x_0-\delta\,f'(x_0) \tag{46}$$

where $\delta>0$ is the step size. No matter whether the derivative $f'(x_0)\ne 0$ is positive or negative, the function value $f(x_1)$ (approximated by the first two terms of its Taylor series) is always reduced if the positive step size $\delta$ is small enough:

$$f(x_1)=f(x_0-\delta\,f'(x_0))\approx f(x_0)-\delta\,[f'(x_0)]^2<f(x_0) \tag{47}$$

This step can be carried out iteratively,

$$x_{n+1}=x_n-\delta\,f'(x_n) \tag{48}$$

until eventually reaching a point $x$ at which $f'(x)=0$ and no further progress can be made, i.e. a local minimum of the function is obtained.
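The single-variable iteration can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the test function $f(x)=(x-3)^2$, the fixed step size `delta=0.1`, the tolerance `tol`, and the name `gradient_descent_1d` are all hypothetical choices, not part of the original notes.

```python
def gradient_descent_1d(df, x0, delta=0.1, tol=1e-8, max_iter=10_000):
    """Iterate x_{n+1} = x_n - delta * f'(x_n) until |f'(x_n)| < tol.

    df    : callable returning the derivative f'(x)
    delta : fixed positive step size (assumed small enough to converge)
    Returns the final x and the number of steps taken.
    """
    x = x0
    for n in range(max_iter):
        slope = df(x)
        if abs(slope) < tol:        # f'(x) is (nearly) zero: stop
            return x, n
        x = x - delta * slope       # move against the sign of the derivative
    return x, max_iter


# Hypothetical test function f(x) = (x - 3)^2, with derivative 2 (x - 3)
f = lambda x: (x - 3.0) ** 2
df = lambda x: 2.0 * (x - 3.0)

x_min, steps = gradient_descent_1d(df, x0=0.0)
print(x_min, steps, f(x_min))
```

With a step size that is too large for the function at hand, the same loop can overshoot and diverge, which is why the choice of $\delta$ matters.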

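This simple method can be generalized to minimize a multi-variable objective function $f({\bf x})=f(x_1,\cdots,x_N)$, with the derivative $f'(x)$ replaced by the gradient vector ${\bf g}({\bf x})$ of the function. Specifically, the gradient descent method (also called the steepest descent or downhill method) carries out the following iteration:

$${\bf x}_{n+1}={\bf x}_n-\delta\,{\bf g}_n \tag{49}$$

where ${\bf g}_n={\bf g}({\bf x}_n)$, until eventually ${\bf g}_n={\bf 0}$ and the minimum of the function $f({\bf x})$ is reached.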
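The multi-variable iteration has the same shape as the single-variable one, with the derivative replaced by the gradient vector. The sketch below uses plain Python lists; the function name `gradient_descent`, the fixed step size, the stopping tolerance, and the test function $f(x_1,x_2)=(x_1-1)^2+2(x_2+2)^2$ are assumptions made for illustration only.

```python
def gradient_descent(grad, x0, delta=0.1, tol=1e-8, max_iter=10_000):
    """Iterate x_{n+1} = x_n - delta * g_n until the gradient norm is below tol.

    grad  : callable mapping a list x to the gradient list g(x)
    delta : fixed positive step size (an assumption; no line search here)
    Returns the final point and the number of steps taken.
    """
    x = list(x0)
    for n in range(max_iter):
        g = grad(x)
        if sum(gi * gi for gi in g) ** 0.5 < tol:   # ||g|| is (nearly) zero
            return x, n
        x = [xi - delta * gi for xi, gi in zip(x, g)]
    return x, max_iter


# Hypothetical test function f(x1, x2) = (x1 - 1)^2 + 2 (x2 + 2)^2
def grad_f(x):
    return [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 2.0)]


x_min, steps = gradient_descent(grad_f, [0.0, 0.0])
print(x_min, steps)
```

Only the gradient is evaluated in each step; no second order derivatives are computed or inverted.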

Comparing the gradient descent method with Newton's method, we see that here the Hessian matrix is no longer needed. The iteration simply follows the search direction ${\bf d}_n=-{\bf g}_n$, the negative of the local gradient.

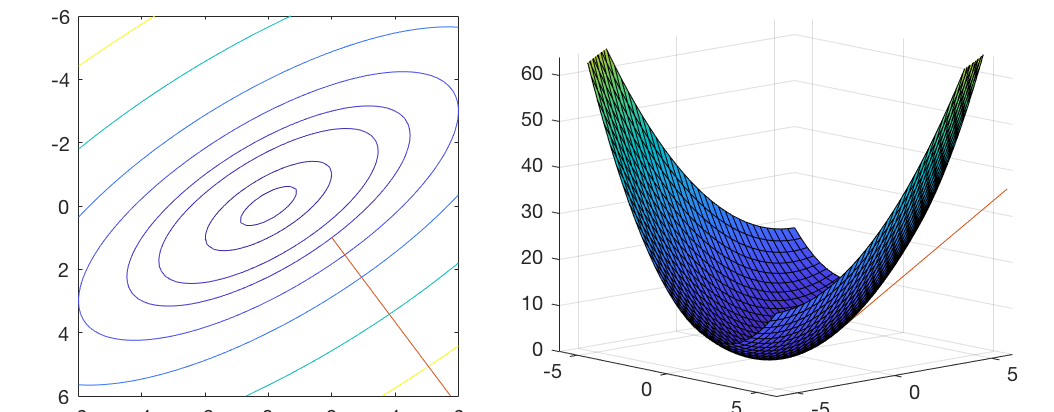

As the gradient descent method relies only on the gradient vector of the objective function without any information contained in the second order derivatives in the Hessian matrix, it does not have as much information as Newton's method and therefore may not be as efficient. For example, when the function is quadratic, as discussed before, Newton's method can find the solution in a single step from any initial guess, but it may take the gradient descent method many steps to reach the solution, because it always follows the negative direction of the local gradient, which typically does not lead to the solution directly. However, for the same reason, the gradient descent method is computationally less expensive as the Hessian matrix is not used.

Example:

Consider a two-variable quadratic function in the following general form:

$$f({\bf x})=\frac{1}{2}{\bf x}^T{\bf Ax}+{\bf b}^T{\bf x}+c
=\frac{1}{2}[x_1\;x_2]\left[\begin{array}{cc}a_{11} & a_{12}\\ a_{21} & a_{22}\end{array}\right]
\left[\begin{array}{c}x_1\\ x_2\end{array}\right]
+[b_1\;b_2]\left[\begin{array}{c}x_1\\ x_2\end{array}\right]+c$$

where ${\bf A}$ is a symmetric positive semidefinite matrix, i.e., ${\bf A}^T={\bf A}$ and ${\bf x}^T{\bf Ax}\ge 0$ for all ${\bf x}$. Specifically, if we let

$${\bf A}=\left[\begin{array}{cc}2 & 1\\ 1 & 1\end{array}\right],\;\;\;\;\;
{\bf b}=\left[\begin{array}{c}0 \\ 0\end{array}\right],\;\;\;\;\; c=0$$

the function becomes

$$f(x_1,x_2)=\frac{1}{2}[x_1\;x_2]\left[\begin{array}{cc}2&1\\ 1&1\end{array}\right]
\left[\begin{array}{c}x_1\\ x_2\end{array}\right]=\frac{1}{2}(2x_1^2+2x_1x_2+x_2^2)$$

which has its minimum $f=0$ at $x_1=x_2=0$.

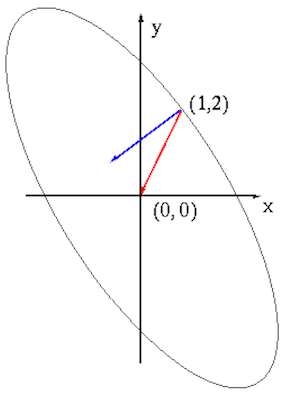

We assume the initial guess is ${\bf x}_0=[1,\;2]^T$, at which the gradient is ${\bf g}_0=[4,\;3]^T$. The gradient, the Hessian matrix, and its inverse are:

$${\bf g}=\left[\begin{array}{c}2x_1+x_2\\ x_1+x_2\end{array}\right],\;\;\;\;
{\bf H}=\left[\begin{array}{rr}2&1\\ 1&1\end{array}\right],\;\;\;\;
{\bf H}^{-1}=\left[\begin{array}{rr}1&-1\\ -1&2\end{array}\right]$$

By Newton's method, the search direction is ${\bf d}_0=-{\bf H}^{-1}{\bf g}_0=-[1,\;2]^T$ (the red arrow in the figure). The iteration is:

$${\bf x}_1={\bf x}_0-{\bf H}^{-1}{\bf g}_0=\left[\begin{array}{r}1\\ 2\end{array}\right]
-\left[\begin{array}{rr}1&-1\\ -1&2\end{array}\right]\left[\begin{array}{r}4\\ 3\end{array}\right]
=\left[\begin{array}{r}0\\ 0\end{array}\right]$$

which reaches the solution in a single step.

By the gradient descent method, the search direction is ${\bf d}_0=-{\bf g}_0=-[4,\;3]^T$, perpendicular to the contour of the function. The first iteration is:

$${\bf x}_1={\bf x}_0-\delta{\bf g}_0
=\left[\begin{array}{r}1\\ 2\end{array}\right]-\delta\left[\begin{array}{r}4\\ 3\end{array}\right]
=\left[\begin{array}{r}1-4\delta\\ 2-3\delta\end{array}\right]$$

We then need to carry out line minimization (to be considered later) to find the step size $\delta$, and then continue the iteration.
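The numbers in this example can be checked with a short Python sketch. The matrix ${\bf A}$, its inverse, and the initial guess ${\bf x}_0=[1,\;2]^T$ are taken from the example; the fixed step size `delta = 0.1` and the stopping tolerance are assumptions that stand in for the line minimization, which is treated later.

```python
A = [[2.0, 1.0], [1.0, 1.0]]        # Hessian H = A of f(x) = x^T A x / 2
H_inv = [[1.0, -1.0], [-1.0, 2.0]]  # inverse of H, as given in the example


def matvec(M, v):
    """Product of a 2x2 matrix M and a 2-vector v."""
    return [M[0][0] * v[0] + M[0][1] * v[1],
            M[1][0] * v[0] + M[1][1] * v[1]]


def f(x):
    Ax = matvec(A, x)
    return 0.5 * (x[0] * Ax[0] + x[1] * Ax[1])


def grad(x):
    return matvec(A, x)             # g = A x, since b = 0


x0 = [1.0, 2.0]
g0 = grad(x0)                       # [4.0, 3.0]

# Newton's method: one step x1 = x0 - H^{-1} g0
step = matvec(H_inv, g0)            # [1.0, 2.0]
x_newton = [x0[0] - step[0], x0[1] - step[1]]   # [0.0, 0.0]

# Gradient descent with an assumed fixed step size instead of line minimization
delta = 0.1
x = x0[:]
n_steps = 0
while n_steps < 10_000:
    g = grad(x)
    if (g[0] ** 2 + g[1] ** 2) ** 0.5 < 1e-6:
        break
    x = [x[0] - delta * g[0], x[1] - delta * g[1]]
    n_steps += 1

print("Newton:", x_newton, "after 1 step")
print("Gradient descent:", x, "after", n_steps, "steps")
```

With this fixed step size, gradient descent needs many iterations to approach the origin that Newton's method reaches in one; a step size chosen by line minimization would change the count but not the contrast between the two methods.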

The figure below shows the search direction of Newton's method (red), which reaches the solution in a single step, based on not only the gradient ${\bf g}_0$ but also the second order information in the Hessian ${\bf H}$.

In the gradient descent method, we also need to determine a proper step size $\delta$.