The Newton-Raphson method discussed above for solving a single-variable equation $f(x)=0$ can be generalized to the case of multivariate equation systems containing $M$ equations of $N$ variables in ${\bf x}=[x_1,\cdots,x_N]^T$:

$$ {\bf f}({\bf x})=\left[\begin{array}{c} f_1({\bf x}) \\ \vdots\\ f_M({\bf x}) \end{array}\right] =\left[\begin{array}{c}0\\ \vdots\\ 0\end{array}\right]={\bf 0} \tag{95} $$

To solve the equation system, we first consider the Taylor series expansion of each of the $M$ functions in the neighborhood of the initial point ${\bf x}_0=[x_{01},\cdots,x_{0N}]^T$:

$$ f_m({\bf x})=f_m({\bf x}_0)+\sum_{n=1}^N \frac{\partial f_m({\bf x}_0)}{\partial x_n}\,(x_n-x_{0n})+O(\|{\bf x}-{\bf x}_0\|^2),\qquad m=1,\cdots,M \tag{96} $$

where $O(\|{\bf x}-{\bf x}_0\|^2)$ represents the second and higher order terms in the series beyond the linear term, which can be neglected if $\|{\bf x}-{\bf x}_0\|$ is small. These $M$ equations can be expressed in matrix form

$$ {\bf f}({\bf x})\approx{\bf f}({\bf x}_0)+{\bf J}({\bf x}_0)\,\Delta{\bf x}={\bf f}_0+{\bf J}_0\,\Delta{\bf x} \tag{97} $$

where $\Delta{\bf x}={\bf x}-{\bf x}_0$, while ${\bf f}_0={\bf f}({\bf x}_0)$ and ${\bf J}_0={\bf J}({\bf x}_0)$ are the function and its Jacobian matrix, both evaluated at ${\bf x}_0$. We further consider solving the equation system ${\bf f}({\bf x})={\bf 0}$ in the following two cases:

$M=N$: The number of equations is the same as the number of unknowns, so the Jacobian ${\bf J}$ is a square matrix and its inverse ${\bf J}^{-1}$ exists in general. In the special case where ${\bf f}({\bf x})$ is linear, the Taylor series contains only the first two terms while all higher order terms are zero, and the approximation in Eq. (97) becomes exact. To find the root ${\bf x}^*$ satisfying ${\bf f}({\bf x}^*)={\bf 0}$, we set ${\bf f}({\bf x})$ in Eq. (97) to zero and solve the resulting equation for ${\bf x}$ to get

$$ {\bf x}^*={\bf x}_0+\Delta{\bf x}={\bf x}_0-{\bf J}_0^{-1}{\bf f}_0 \tag{98} $$

As ${\bf f}({\bf x})$ is nonlinear in general, it can only be approximated by the first two terms of the Taylor series; consequently the result above is only an approximation of the optimal solution. But this approximation can be improved iteratively to approach the optimal solution ${\bf x}^*$, with ${\bf f}_n={\bf f}({\bf x}_n)$ and ${\bf J}_n={\bf J}({\bf x}_n)$ evaluated at the current estimate:

$$ {\bf x}_{n+1}={\bf x}_n+\Delta{\bf x}_n={\bf x}_n-{\bf J}_n^{-1}{\bf f}_n \tag{99} $$

where we have defined $\Delta{\bf x}_n=-{\bf J}_n^{-1}{\bf f}_n$ as the increment, which can also be denoted by ${\bf d}_n$ to represent the search or Newton direction. The iteration moves ${\bf x}$ in the N-D space spanned by $\{x_1,\cdots,x_N\}$ from some initial guess ${\bf x}_0$ along such a path that all function values $f_m({\bf x})$ ($m=1,\cdots,M$) are reduced. As in the univariate case, a scaling factor $\delta$ can be used to control the step size of the iteration

$$ {\bf x}_{n+1}={\bf x}_n+\delta\,\Delta{\bf x}_n={\bf x}_n-\delta\,{\bf J}_n^{-1}{\bf f}_n \tag{100} $$

When $\delta<1$, the step size becomes smaller and the convergence of the iteration is slower; however, we will have a better chance of not skipping a solution, which may happen if ${\bf f}({\bf x})$ is not smooth and the step size is too big.

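The algorithm is listed below:

- Select ${\bf x}_0$ and set $n=0$
- Obtain ${\bf f}_0={\bf f}({\bf x}_0)$ and ${\bf J}_0={\bf J}({\bf x}_0)$
- Obtain ${\bf J}_0^{-1}$
- While $\|{\bf f}_n\|$ exceeds a chosen tolerance do
  - Update ${\bf x}_{n+1}={\bf x}_n-{\bf J}_n^{-1}{\bf f}_n$
  - Obtain ${\bf f}_{n+1}={\bf f}({\bf x}_{n+1})$ and ${\bf J}_{n+1}={\bf J}({\bf x}_{n+1})$, then ${\bf J}_{n+1}^{-1}$, and increment $n$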
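The iteration for the square case, including the optional step-size factor of Eq. (100), can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `newton_raphson`, the parameters `step`, `tol`, and `max_iter`, and the two-equation test system are all introduced here for illustration. The Newton direction is obtained with `numpy.linalg.solve` rather than by forming the inverse Jacobian explicitly, which is numerically preferable but mathematically the same step.

```python
import numpy as np

def newton_raphson(f, jac, x0, step=1.0, tol=1e-10, max_iter=50):
    """Solve f(x) = 0 for a square system (as many equations as unknowns)."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        fx = f(x)
        if np.linalg.norm(fx) < tol:      # stop once the residual is small
            break
        # Newton direction: solve J dx = -f instead of computing J^{-1}
        dx = np.linalg.solve(jac(x), -fx)
        x = x + step * dx                 # step < 1 gives a damped iteration
    return x

# Hypothetical test system (an assumption): x1^2 + x2^2 = 4 and x1 = x2
def f(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]])

def jac(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0]])

root = newton_raphson(f, jac, [1.0, 0.5])
print(root)
```

For this test system the iteration started at $[1,\,0.5]^T$ approaches the root with both components equal to $\sqrt{2}$.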

$M>N$: There are more equations than unknowns, i.e., equation ${\bf f}({\bf x})={\bf 0}$ is an over-constrained system, and the Jacobian ${\bf J}$ is an $M\times N$ non-square matrix without an inverse, i.e., no solution exists for the equation ${\bf f}({\bf x})={\bf 0}$ in general. But we can still seek an optimal solution ${\bf x}^*$ that minimizes the following sum-of-squares error:

$$ \varepsilon({\bf x})=\frac{1}{2}\|{\bf f}({\bf x})\|^2=\frac{1}{2}\sum_{m=1}^M f_m^2({\bf x}) \tag{101} $$

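The gradient vector of $\varepsilon({\bf x})$ is:

$$ {\bf g}_\varepsilon({\bf x})=\frac{d}{d{\bf x}}\varepsilon({\bf x})=\left[\frac{\partial\varepsilon}{\partial x_1},\cdots,\frac{\partial\varepsilon}{\partial x_N}\right]^T \tag{102} $$

The nth component of ${\bf g}_\varepsilon({\bf x})$ is

$$ g_n=\frac{\partial\varepsilon}{\partial x_n}=\frac{1}{2}\sum_{m=1}^M\frac{\partial}{\partial x_n}f_m^2({\bf x})=\sum_{m=1}^M f_m({\bf x})\,\frac{\partial f_m({\bf x})}{\partial x_n}=\sum_{m=1}^M f_m({\bf x})\,J_{mn} \tag{103} $$

where $J_{mn}=\partial f_m({\bf x})/\partial x_n$ is the component in the mth row and nth column of the Jacobian matrix ${\bf J}({\bf x})$ of ${\bf f}({\bf x})$. Now the gradient can be written as

$$ {\bf g}_\varepsilon({\bf x})={\bf J}^T({\bf x})\,{\bf f}({\bf x}) \tag{104} $$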
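The gradient expression of Eq. (104) can be checked numerically. The sketch below assumes the error is defined with a factor of one half, so that its gradient is exactly ${\bf J}^T{\bf f}$; the three-equation, two-unknown system and the names `f`, `jac`, and `eps` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical over-determined system (an assumption): M = 3 equations, N = 2 unknowns
def f(x):
    return np.array([x[0] ** 2 - x[1], x[0] + x[1] - 2.0, x[0] * x[1] - 1.0])

def jac(x):
    # Row m, column n holds the partial derivative of f_m with respect to x_n
    return np.array([[2.0 * x[0], -1.0],
                     [1.0, 1.0],
                     [x[1], x[0]]])

def eps(x):
    # Sum-of-squares error with the factor 1/2 assumed above
    return 0.5 * np.sum(f(x) ** 2)

x = np.array([0.7, 1.3])
g_analytic = jac(x).T @ f(x)              # gradient written as J^T f

# Central-difference gradient of eps, one coordinate direction at a time
h = 1e-6
g_numeric = np.array([(eps(x + h * e) - eps(x - h * e)) / (2.0 * h)
                      for e in np.eye(2)])
print(g_analytic, g_numeric)
```

The two vectors agree up to the truncation and rounding error of the finite difference.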

If ${\bf f}({\bf x})$ is linear and can therefore be represented as the sum of the first two terms of its Taylor series in Eq. (97), then the gradient is:

$$ {\bf g}_{\varepsilon}({\bf x})={\bf J}^T({\bf x})\,{\bf f}({\bf x})={\bf J}^T({\bf x}_0)\,[{\bf f}({\bf x}_0)+{\bf J}({\bf x}_0)\Delta {\bf x}]={\bf J}_0^T( {\bf f}_0+{\bf J}_0\Delta{\bf x}) \tag{105} $$

where ${\bf x}_0$ is any chosen initial guess. If we assume ${\bf x}^*={\bf x}_0+\Delta{\bf x}$ is the optimal solution at which $\varepsilon({\bf x})$ is minimized and ${\bf g}_\varepsilon({\bf x})$ is zero:

$$ {\bf g}_\varepsilon({\bf x}^*)={\bf J}_0^T({\bf f}_0+{\bf J}_0\Delta{\bf x})={\bf 0} \tag{106} $$

then the increment $\Delta{\bf x}$ can be found by solving the equation

$$ \Delta{\bf x}=-({\bf J}_0^T{\bf J}_0)^{-1}{\bf J}_0^T{\bf f}_0=-{\bf J}_0^-{\bf f}_0 \tag{107} $$

Here ${\bf J}_0^-=({\bf J}_0^T{\bf J}_0)^{-1}{\bf J}_0^T$ is the pseudo-inverse of the non-square matrix ${\bf J}_0$. Now the optimal solution can be found as:

$$ {\bf x}^*={\bf x}_0+\Delta{\bf x}={\bf x}_0-{\bf J}_0^-{\bf f}_0 \tag{108} $$

However, as ${\bf f}({\bf x})$ is nonlinear in general, the sum of the first two terms of its Taylor series is only an approximation. Consequently the result ${\bf x}^*$ above is not the optimal solution, but an estimate which can be improved by carrying out this step iteratively:

$$ {\bf x}_{n+1}={\bf x}_n+\Delta{\bf x}_n={\bf x}_n-({\bf J}_n^T{\bf J}_n)^{-1}{\bf J}_n^T{\bf f}_n={\bf x}_n-{\bf J}_n^-{\bf f}_n \tag{109} $$

This iteration will converge to ${\bf x}^*$ at which ${\bf g}_\varepsilon({\bf x}^*)={\bf 0}$, and the squared error $\varepsilon({\bf x})$ is minimized.
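The pseudo-inverse iteration for the over-constrained case can be sketched in the same way. The function name `gauss_newton`, the stopping rule on the step length, and the consistent three-equation test system below are assumptions made for illustration; `numpy.linalg.pinv` is used for the pseudo-inverse of the non-square Jacobian.

```python
import numpy as np

def gauss_newton(f, jac, x0, tol=1e-10, max_iter=100):
    """Iterate x <- x - pinv(J(x)) f(x) for a system with more equations than unknowns."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        dx = -np.linalg.pinv(jac(x)) @ f(x)   # least-squares increment
        x = x + dx
        if np.linalg.norm(dx) < tol:          # stop when the update stalls
            break
    return x

# Hypothetical system (an assumption): three equations, two unknowns,
# all satisfied exactly at x = [1, 1]
def f(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1], x[0] * x[1] - 1.0])

def jac(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0],
                     [x[1], x[0]]])

x_star = gauss_newton(f, jac, [2.0, 0.5])
print(x_star)
```

Because the three test equations are mutually consistent, the minimizer of the squared error is also an exact root here; for an inconsistent system the same loop would instead settle at a point where ${\bf J}^T{\bf f}$ vanishes.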

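In particular, for a linear equation system ${\bf f}({\bf x})={\bf A}{\bf x}-{\bf b}={\bf 0}$, the Jacobian is simply ${\bf J}={\bf A}$, and the optimal solution is

$$ {\bf x}^*={\bf x}_0-{\bf A}^-({\bf A}{\bf x}_0-{\bf b})={\bf x}_0-{\bf A}^-{\bf A}{\bf x}_0+{\bf A}^-{\bf b}={\bf A}^-{\bf b}=({\bf A}^T{\bf A})^{-1}{\bf A}^T{\bf b} \tag{110} $$

i.e., the optimal solution can be found from any initial guess in a single step. This result is the same as that in Eq. (7).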
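The single-step property for a linear system can be illustrated with a small example. The matrix `A`, the vector `b`, and the helper `one_step` are hypothetical; the only requirement assumed is that `A` has full column rank, so that its pseudo-inverse times `A` is the identity.

```python
import numpy as np

# Hypothetical over-determined linear system A x = b (3 equations, 2 unknowns)
A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
b = np.array([1.0, 2.0, 4.0])
A_pinv = np.linalg.pinv(A)

def one_step(x0):
    # One pseudo-inverse step with f(x) = A x - b and Jacobian J = A
    return x0 - A_pinv @ (A @ x0 - b)

x_a = one_step(np.array([0.0, 0.0]))
x_b = one_step(np.array([10.0, -3.0]))
print(x_a, x_b)
```

Both starting points lead to the same least-squares solution $[4/3,\;7/3]^T$ after one step, since the dependence on the initial guess cancels.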

Comparing Eqs. (99) and (109), we see that the two algorithms are essentially the same, with the only difference that the regular inverse ${\bf J}^{-1}$ is used when $M=N$, but the pseudoinverse ${\bf J}^-$ is used when $M>N$ and ${\bf J}^{-1}$ does not exist.

The Newton-Raphson method assumes the availability of the analytical expressions of all partial derivatives $J_{mn}=\partial f_m({\bf x})/\partial x_n$ in the Jacobian matrix ${\bf J}$. However, when this is not the case, $J_{mn}$ needs to be approximated by the forward or central difference (secant) method:

$$ J_{mn}\approx\frac{f_m(x_1,\cdots,x_n+h,\cdots,x_N)-f_m(x_1,\cdots,x_n,\cdots,x_N)}{h}\approx\frac{f_m(x_1,\cdots,x_n+h,\cdots,x_N)-f_m(x_1,\cdots,x_n-h,\cdots,x_N)}{2h} $$

where $h$ is a small increment.
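A finite-difference Jacobian of this kind can be sketched as below. The function name `numerical_jacobian`, the default increment, the `central` switch, and the test function `g` are assumptions for illustration; in practice the increment should be chosen with the scale of the variables in mind.

```python
import numpy as np

def numerical_jacobian(f, x, h=1e-6, central=True):
    """Approximate the M x N Jacobian of f at x by finite differences."""
    x = np.asarray(x, dtype=float)
    f0 = np.asarray(f(x), dtype=float)
    J = np.empty((f0.size, x.size))
    for n in range(x.size):
        e = np.zeros_like(x)
        e[n] = h                                      # perturb only the nth variable
        if central:
            J[:, n] = (f(x + e) - f(x - e)) / (2.0 * h)   # central difference
        else:
            J[:, n] = (f(x + e) - f0) / h                 # forward difference
    return J

# Hypothetical test function (an assumption) with a known analytical Jacobian
def g(x):
    return np.array([x[0] ** 2 * x[1], np.sin(x[0]) + x[1]])

x = np.array([1.0, 2.0])
J_exact = np.array([[2.0 * x[0] * x[1], x[0] ** 2],
                    [np.cos(x[0]), 1.0]])
J_central = numerical_jacobian(g, x)
J_forward = numerical_jacobian(g, x, central=False)
print(J_central)
```

The central difference costs two function evaluations per variable instead of one, but its error shrinks with the square of the increment rather than linearly.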

Example 1

Example 2

With ${\bf x}_0=[1,\;2,\;3]^T$, we get a root:

With ${\bf x}_0=[0,\;0,\;0]^T$, we get another root:

Broyden's method

In the Newton-Raphson method, two main operations are carried out in each iteration: (a) evaluate the Jacobian matrix ${\bf J}_n={\bf J}({\bf x}_n)$ and (b) obtain its inverse ${\bf J}_n^{-1}$. To avoid the expensive computation of these operations, we can consider using Broyden's method, one of the quasi-Newton methods, which approximates the inverse of the Jacobian ${\bf J}_{n+1}^{-1}$ from the ${\bf J}_n^{-1}$ in the previous iteration step, so that it can be updated iteratively from the initial ${\bf J}_0^{-1}$.

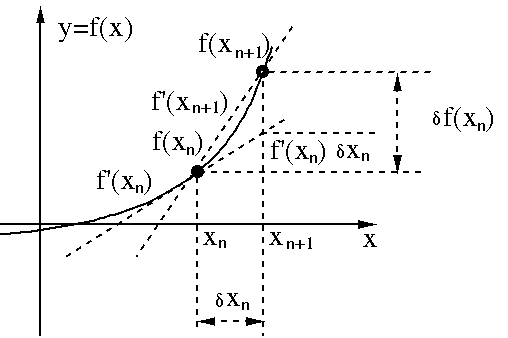

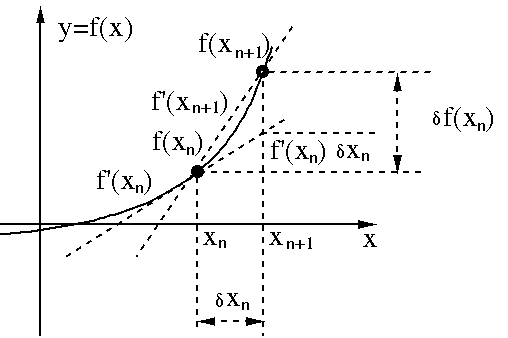

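We first consider in the single-variable case how to estimate the next derivative $\hat{f}'_{n+1}$ from the current $\hat{f}'_n$ by the secant method:

$$ \hat{f}'_{n+1}=\frac{f(x_{n+1})-f(x_n)}{x_{n+1}-x_n}=\frac{\delta f_n}{\delta x_n}=\hat{f}'_n+\frac{\delta f_n-\hat{f}'_n\,\delta x_n}{\delta x_n}=\hat{f}'_n+\frac{\delta f_n-\delta\hat{f}_n}{\delta x_n}=\hat{f}'_n+\delta\hat{f}'_n $$

where

- $\delta f_n=f(x_{n+1})-f(x_n)$ is the true increment of the function over the interval $\delta x_n=x_{n+1}-x_n$;
- $\delta\hat{f}_n=\hat{f}'_n\,\delta x_n$ is the estimated increment of the function based on the previous derivative $\hat{f}'_n$;
- $\delta\hat{f}'_n$ is the estimated increment of the derivative:

$$ \delta\hat{f}'_n=\frac{\delta f_n-\delta\hat{f}_n}{\delta x_n}=\frac{\delta f_n-\hat{f}'_n\,\delta x_n}{\delta x_n} \tag{113} $$

These equations indicate that the derivative $\hat{f}'_{n+1}$ in the $(n+1)$th step can be estimated by adding the estimated increment $\delta\hat{f}'_n$ to the derivative $\hat{f}'_n$ in the current $n$th step.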

Having obtained $\hat{f}'_n$, we can use the same iteration as in the Newton-Raphson method to find $x_{n+1}$:

$$ x_{n+1}=x_n-\frac{f(x_n)}{\hat{f}'_n} \tag{114} $$
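The single-variable scheme can be sketched as follows: each pass takes a Newton-like step with the current derivative estimate and then corrects that estimate by the increment of Eq. (113). The function name `secant_quasi_newton`, the parameters `d0`, `tol`, and `max_iter`, and the test equation are assumptions for illustration.

```python
def secant_quasi_newton(f, x0, d0, tol=1e-12, max_iter=100):
    """Find a root of f using a derivative estimate d that is updated by the secant rule."""
    x, d = float(x0), float(d0)
    fx = f(x)
    for _ in range(max_iter):
        if abs(fx) < tol:
            break
        x_new = x - fx / d                 # Newton-like step with the estimated derivative
        f_new = f(x_new)
        dx, df = x_new - x, f_new - fx     # true increments of x and f
        d = d + (df - d * dx) / dx         # add the estimated derivative increment
        x, fx = x_new, f_new
    return x

# Hypothetical test equation (an assumption): x^2 - 2 = 0, started at x0 = 1
# with the exact derivative f'(1) = 2 as the initial estimate
root = secant_quasi_newton(lambda x: x * x - 2.0, 1.0, 2.0)
print(root)
```

Note that the updated estimate `d + (df - d * dx) / dx` simplifies to `df / dx`, the secant slope; writing it as a correction to the previous estimate is what carries over to the multi-variable case.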

This method for the single-variable case can be generalized to the multi-variable case for solving ${\bf f}({\bf x})={\bf 0}$. Following the way we estimate the increment of the derivative of a single-variable function in Eq. (113), here we can estimate the increment of the Jacobian of a multi-variable function:

$$ \delta\hat{\bf J}_n=\frac{(\delta{\bf f}_n-\hat{\bf J}_n\,\delta{\bf x}_n)\,\delta{\bf x}_n^T}{\|\delta{\bf x}_n\|^2} \tag{115} $$

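where $\delta{\bf x}_n={\bf x}_{n+1}-{\bf x}_n$ and $\delta{\bf f}_n={\bf f}({\bf x}_{n+1})-{\bf f}({\bf x}_n)$. Now in each iteration, we can update the estimated Jacobian as well as the estimated root:

$$ \hat{\bf J}_{n+1}=\hat{\bf J}_n+\delta\hat{\bf J}_n,\qquad {\bf x}_{n+1}={\bf x}_n-\hat{\bf J}_n^{-1}{\bf f}({\bf x}_n) \tag{116} $$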

The algorithm can be further improved so that computing the inverse of the Jacobian $\hat{\bf J}_{n+1}$ is avoided. Specifically, consider the inverse Jacobian:

$$ \hat{{\bf J}}_{n+1}^{-1}=\left( \hat{{\bf J}}_n+\delta\hat{\bf J}_n\right)^{-1}=\left[\hat{\bf J}_n+\frac{(\delta{\bf f}_n-\hat{\bf J}_n\delta{\bf x}_n)\delta{\bf x}^T_n}{\vert\vert\delta {\bf x}_n\vert\vert^2} \right]^{-1} \tag{117} $$

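We can apply the Sherman-Morrison formula:

$$ ({\bf A}+{\bf u}{\bf v}^T)^{-1}={\bf A}^{-1}-\frac{{\bf A}^{-1}{\bf u}{\bf v}^T{\bf A}^{-1}}{1+{\bf v}^T{\bf A}^{-1}{\bf u}} \tag{118} $$

to the right-hand side of Eq. (117) by defining

$$ {\bf A}=\hat{\bf J}_n,\qquad {\bf u}=\frac{\delta{\bf f}_n-\hat{\bf J}_n\delta{\bf x}_n}{\|\delta{\bf x}_n\|^2},\qquad {\bf v}=\delta{\bf x}_n \tag{119} $$

and rewrite it as:

$$ \hat{\bf J}_{n+1}^{-1}=\hat{\bf J}_n^{-1}+\frac{(\delta{\bf x}_n-\hat{\bf J}_n^{-1}\delta{\bf f}_n)\,\delta{\bf x}_n^T\hat{\bf J}_n^{-1}}{\delta{\bf x}_n^T\hat{\bf J}_n^{-1}\delta{\bf f}_n} \tag{120} $$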
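The equivalence between inverting the rank-one-updated Jacobian directly and updating the inverse through the Sherman-Morrison formula can be checked numerically. The matrix `J` and the vectors `dx` and `df` below are hypothetical values chosen so that every denominator is safely nonzero.

```python
import numpy as np

# Hypothetical current Jacobian estimate (diagonally dominant, hence invertible)
J = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])
dx = np.array([1.0, -2.0, 0.5])                 # increment of x
df = J @ dx + np.array([0.1, -0.2, 0.3])        # increment of f, slightly off J dx

# Rank-one update of the Jacobian, followed by an explicit inversion
J_next = J + np.outer(df - J @ dx, dx) / (dx @ dx)
direct = np.linalg.inv(J_next)

# The same inverse obtained from the previous inverse alone (Sherman-Morrison)
J_inv = np.linalg.inv(J)
updated = J_inv + np.outer(dx - J_inv @ df, dx @ J_inv) / (dx @ J_inv @ df)

print(np.max(np.abs(direct - updated)))
```

Here `dx @ J_inv` plays the role of the row vector $\delta{\bf x}_n^T\hat{\bf J}_n^{-1}$, and the update costs only matrix-vector products once the previous inverse is known.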

We see that the next $\hat{\bf J}_{n+1}^{-1}$ can be iteratively estimated directly from the previous $\hat{\bf J}_n^{-1}$, thereby avoiding computing the inverse of $\hat{\bf J}_{n+1}$ altogether. The algorithm is listed below:

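- Select ${\bf x}_0$
- Find ${\bf f}_0={\bf f}({\bf x}_0)$, ${\bf J}_0={\bf J}({\bf x}_0)$, and $\hat{\bf J}_0^{-1}={\bf J}_0^{-1}$
- Set $n=0$
- While $\|{\bf f}_n\|$ exceeds a chosen tolerance do
  - ${\bf x}_{n+1}={\bf x}_n-\hat{\bf J}_n^{-1}{\bf f}_n$, ${\bf f}_{n+1}={\bf f}({\bf x}_{n+1})$
  - $\delta{\bf x}_n={\bf x}_{n+1}-{\bf x}_n$, $\delta{\bf f}_n={\bf f}_{n+1}-{\bf f}_n$
  - Update $\hat{\bf J}_{n+1}^{-1}$ by Eq. (120) and increment $n$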
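Broyden's method with the inverse update can be sketched as below. The function name `broyden`, the parameters `tol` and `max_iter`, and the test system are assumptions for illustration; the exact Jacobian is inverted once at the starting point to initialize the estimate, and no further Jacobian evaluations or inversions are performed.

```python
import numpy as np

def broyden(f, x0, J0_inv, tol=1e-10, max_iter=100):
    """Solve f(x) = 0, updating an estimate of the inverse Jacobian at each step."""
    x = np.asarray(x0, dtype=float)
    J_inv = np.asarray(J0_inv, dtype=float)
    fx = f(x)
    for _ in range(max_iter):
        if np.linalg.norm(fx) < tol:
            break
        dx = -J_inv @ fx                    # quasi-Newton step
        x_new = x + dx
        f_new = f(x_new)
        df = f_new - fx
        J_inv_df = J_inv @ df
        # Rank-one (Sherman-Morrison) update of the inverse Jacobian estimate
        J_inv = J_inv + np.outer(dx - J_inv_df, dx @ J_inv) / (dx @ J_inv_df)
        x, fx = x_new, f_new
    return x

# Hypothetical test system (an assumption): x1^2 + x2^2 = 4 and x1 = x2
def f(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]])

x0 = np.array([1.0, 0.5])
J0 = np.array([[2.0 * x0[0], 2.0 * x0[1]],
               [1.0, -1.0]])                # exact Jacobian at the starting point only
root = broyden(f, x0, np.linalg.inv(J0))
print(root)
```

Compared with the Newton-Raphson iteration, each pass needs one function evaluation and a few matrix-vector products, at the price of somewhat slower convergence.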


The Jacobian matrices of Example 1 and Example 2 are, respectively:

![${\bf J}=\left[\begin{array}{ccc} 3 & x_3\sin(x_2 x_3) & x_2\... ...x_2\,e^{-x_1 x_2} & -x_1\,e^{-x_1 x_2} & 20 \end{array} \right]$](img498.svg)

![$\displaystyle {\bf J}=\left[\begin{array}{ccc} 2x_1-2 & 2x_2 & -1\\ x_2^2-1 & ... ..._2-3+x_3 & x_2 \\ x_3^2+x_2 & x_3^2+x_1 & 2x_1x_3-3+2x_2x_3 \end{array}\right]$](img501.svg)


