
The previously discussed dynamic programming methods find the optimal policy based on the assumption that the MDP model of the environment is completely available, i.e., the dynamics of the MDP in terms of its state transition and reward mechanism are known. A given policy $\pi$ can therefore be evaluated and improved by direct calculation based on the model.

Now we consider the optimization problem with an unknown MDP model of the environment. In this model-free problem, called control, the state transition and reward probabilities of the MDP model are unknown, so the value functions can no longer be calculated directly based on Eqs. (21) and (22) as in model-based planning. Instead, we need to learn the value functions by sampling the environment, i.e., by repeatedly running a large number of episodes of the stochastic process of the environment while following some given policy, and estimating the value functions as the average of the actual returns received during the sampling process. This method is generally referred to as the Monte Carlo (MC) method. At the same time, the given policy can also be improved to gradually approach optimality.

This approach is called general policy iteration (GPI), as illustrated in Eq. (40) below, similar to the policy iteration (PI) algorithm for model-based planning illustrated in Eq. (37). In general, all model-free control algorithms are based on GPI, in which the two alternating processes of policy evaluation and policy improvement are carried out iteratively. GPI can also be illustrated in a way similar to Fig. 1.3 for PI.

While GPI and PI, illustrated respectively in Eqs. (40) and (37), may look similar to each other, there are some essential differences between the two, as listed below.

- In model-free control, policy evaluation estimates the action value function $q_\pi(s,a)$, instead of the state value function $v_\pi(s)$ as in model-based planning. This is because without a specific MDP model the state value function can no longer be calculated, while the action value function can still be estimated based on the actual rewards received while sampling the environment. Similar to how we improve the policy by taking a greedy action to achieve a higher state value in model-based planning, here we improve the policy by taking an action different from that dictated by the given policy $\pi$ to achieve a higher action value based on some $\epsilon$-greedy method, as discussed below.

- In model-based planning, the action value $q_\pi(s,a)$ is calculated from the known MDP model (Eq. (27)) during policy improvement. However, here in model-free control, we have to learn the action value $q_\pi(s,a)$, as a function of action $a$ as well as state $s$, by sampling the environment. Such an estimated action value based only on some partial sample data is inevitably noisy, especially in the early stage of learning when many of the states have not yet been visited. We therefore need to explore all actions at each state to better learn the value function, as well as to exploit the greedy action to improve the policy.

This issue of exploration versus exploitation can be addressed by the $\epsilon$-greedy method with a parameter $\epsilon\in[0,\;1]$: the greedy action is taken with probability $1-\epsilon$, and with probability $\epsilon$ an action is instead chosen uniformly at random from all available actions, so that every action keeps a nonzero chance of being explored.

For example, if there are four available actions, the greedy action may be chosen with probability $1-\epsilon+\epsilon/4$, while each of the remaining three non-greedy actions may be chosen with probability $\epsilon/4$. Such a policy describes the actual behavior of the agent, and is therefore called the behavior policy, which may differ from the target policy being evaluated and improved.
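The $\epsilon$-greedy selection described above can be sketched in Python as follows. This is a minimal illustration rather than a definitive implementation: the function names, the action value estimates in `q_estimates`, and the choice $\epsilon=0.2$ are all assumptions made for the example, and the uniform draw over all actions (including the greedy one) is one common convention.

```python
import random

def epsilon_greedy_probs(q_values, epsilon):
    """Selection probability of each action under an epsilon-greedy rule."""
    n = len(q_values)
    greedy = max(range(n), key=lambda a: q_values[a])  # ties go to the lowest index
    probs = [epsilon / n] * n          # every action gets epsilon / n
    probs[greedy] += 1.0 - epsilon     # the greedy action gets the rest
    return probs

def epsilon_greedy_action(q_values, epsilon, rng=random):
    """Sample one action: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))   # explore: uniform over all actions
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit

# Hypothetical estimates for four actions at one state (assumed values).
q_estimates = [0.1, 0.5, 0.2, 0.3]
probs = epsilon_greedy_probs(q_estimates, epsilon=0.2)
action = epsilon_greedy_action(q_estimates, epsilon=0.2)
```

With these assumed numbers, the greedy action (index 1) receives probability $1-\epsilon+\epsilon/4$ and each of the other three actions receives $\epsilon/4$.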

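We further note that a larger value of $\epsilon$ puts more weight on exploration, while a smaller value puts more weight on exploitation.

Similar to how we proved the policy improvement theorem in Eq. (30), stating that the greedy policy is no worse than the original policy, we can show that the $\epsilon$-greedy policy $\pi'$ based on $q_\pi$ is no worse than any policy $\pi$ satisfying $\pi(a|s)\ge\epsilon/|A|$ for all actions $a$, where $|A|$ is the number of actions and $\epsilon<1$:

$$\begin{aligned}
\sum_a \pi'(a|s)\,q_\pi(s,a)
&=\frac{\epsilon}{|A|}\sum_a q_\pi(s,a)+(1-\epsilon)\max_a q_\pi(s,a)\\
&\ge\frac{\epsilon}{|A|}\sum_a q_\pi(s,a)+(1-\epsilon)\sum_a\frac{\pi(a|s)-\epsilon/|A|}{1-\epsilon}\,q_\pi(s,a)\\
&=\sum_a \pi(a|s)\,q_\pi(s,a)=v_\pi(s)
\end{aligned}\tag{42}$$

The inequality holds because the maximum action value $\max_a q_\pi(s,a)$ is no less than the average of action values $q_\pi(s,a)$ over all $a$ weighted by some normalized coefficients adding up to 1:

$$\max_a q_\pi(s,a)\ge\sum_a\frac{\pi(a|s)-\epsilon/|A|}{1-\epsilon}\,q_\pi(s,a),
\qquad
\sum_a\frac{\pi(a|s)-\epsilon/|A|}{1-\epsilon}=\frac{1-\epsilon}{1-\epsilon}=1\tag{43}$$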
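The argument that a maximum is no less than any weighted average with nonnegative weights adding up to 1 can be checked numerically. The sketch below assumes the common form of the $\epsilon$-greedy rule (probability $\epsilon/|A|$ for every action plus $1-\epsilon$ for the greedy one); the action values `q`, the policy `pi`, and $\epsilon=0.2$ are hypothetical numbers chosen only for illustration.

```python
def eps_greedy_value(q, eps):
    """Expected action value when acting epsilon-greedily with respect to q."""
    n = len(q)
    return eps / n * sum(q) + (1.0 - eps) * max(q)

def policy_value(pi, q):
    """Expected action value when acting according to the probabilities pi."""
    return sum(p * x for p, x in zip(pi, q))

# Assumed numbers: four actions at one state, epsilon = 0.2.
q = [1.0, 3.0, 2.0, 0.0]
eps = 0.2
pi = [0.4, 0.1, 0.3, 0.2]   # every probability is at least eps / 4 = 0.05

# Normalized coefficients used in the weighted average.
weights = [(p - eps / len(q)) / (1.0 - eps) for p in pi]
weighted_avg = sum(w * x for w, x in zip(weights, q))

old_value = policy_value(pi, q)        # value under the original policy
new_value = eps_greedy_value(q, eps)   # value under the epsilon-greedy policy
```

Here the weights are nonnegative and sum to 1, the weighted average does not exceed `max(q)`, and consequently `new_value` is no less than `old_value`.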

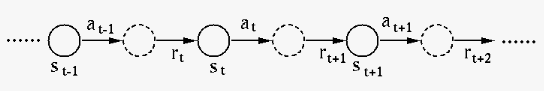

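The iterative process of the GPI method for model-free control is illustrated in the accompanying figure, similar to the PI method for model-based planning illustrated in Fig. 1.3, but with the state value $v_\pi(s)$ replaced by the action value $q_\pi(s,a)$. Also, here the estimated action value is only a noisy approximation of the true one, as it is based on a limited number of samples.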

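The main task in model-free control is to evaluate the state and action value functions given in Eqs. (21) and (22) while following a given policy $\pi$. As the MDP model is unknown, these expected returns are estimated by running multiple episodes under $\pi$, each visiting a sequence of states until termination, and averaging the sample returns:

$$v_\pi(s)=E_\pi[\,G_t\,|\,s_t=s\,]\approx\frac{1}{N}\sum_{n=1}^N G_n\tag{45}$$

where $G_1,\dots,G_N$ are the returns following visits to state $s$ (or to the state-action pair $(s,a)$ when estimating $q_\pi(s,a)$) actually received from these episodes.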

The sequence of states visited in an episode is called a trajectory, and the trajectories of different episodes are in general different from one another due to the random nature of the environment. In the following sections we will consider specific algorithms for the implementation of the general model-free control discussed above.
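Estimating a state value as the average of sampled returns can be sketched as follows. The environment here is purely hypothetical (a five-state chain with terminal states 0 and 4, a reward of 1 on reaching state 4, and a 20% chance that a move is reversed), as are the function names and the discount factor; it only serves to show trajectories differing between episodes and returns being averaged.

```python
import random

def sample_episode(policy, rng, start=2, slip=0.2, gamma=0.9):
    """Run one episode on the toy chain; return (trajectory, discounted return)."""
    state, trajectory, rewards = start, [start], []
    while state not in (0, 4):                # states 0 and 4 are terminal
        move = policy(state)                  # -1 (left) or +1 (right)
        if rng.random() < slip:               # random environment: move reversed
            move = -move
        state += move
        trajectory.append(state)
        rewards.append(1.0 if state == 4 else 0.0)
    g = 0.0
    for r in reversed(rewards):               # discounted return from the start
        g = r + gamma * g
    return trajectory, g

def mc_state_value(policy, episodes, seed=0):
    """Average the returns from the start state over many sampled episodes."""
    rng = random.Random(seed)
    returns = [sample_episode(policy, rng)[1] for _ in range(episodes)]
    return sum(returns) / len(returns)

def always_right(state):
    return 1

trajectory, g = sample_episode(always_right, random.Random(1))
estimate = mc_state_value(always_right, episodes=5000)
```

Each call to `sample_episode` may yield a different trajectory, and `estimate` approaches the expected return of the start state as the number of episodes grows.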

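To prepare for specific discussion of the GPI methods, we first consider the general problem of estimating the value of a random variable $x$ as the running average $\hat\mu_n$ of its samples $x_1,\dots,x_n$, updated incrementally whenever a new sample $x_n$ becomes available:

$$\begin{aligned}
\hat\mu_n&=\frac{1}{n}\sum_{i=1}^n x_i
=\frac{1}{n}\left(x_n+\sum_{i=1}^{n-1}x_i\right)
=\frac{1}{n}\left(x_n+(n-1)\,\hat\mu_{n-1}\right)\\
&=\hat\mu_{n-1}+\frac{1}{n}\left(x_n-\hat\mu_{n-1}\right)
\end{aligned}\tag{46}$$

More generally, the factor $1/n$ can be replaced by a step size $\alpha$:

$$\hat\mu_n=\hat\mu_{n-1}+\alpha\,(x_n-\hat\mu_{n-1})=(1-\alpha)\,\hat\mu_{n-1}+\alpha\,x_n\tag{47}$$

which follows the general pattern

$$\begin{aligned}
\text{newEstimate}&=\text{oldEstimate}+\text{stepSize}\,(\text{target}-\text{oldEstimate})\\
&=\text{oldEstimate}+\text{stepSize}\times\text{error}\\
&=\text{oldEstimate}+\text{increment}
\end{aligned}\tag{48}$$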

Here the error is the difference between the old average $\hat\mu_{n-1}$ and the latest sample $x_n$, and the second term is the increment, i.e., this error weighted by the step size $\alpha\in[0,\;1]$, which controls how samples are weighted differently. In the extreme case when $\alpha=1$, the new average equals the latest sample, $\hat\mu_n=x_n$, with no contribution from any previous samples; on the other hand, when $\alpha$ is close to 0, $\hat\mu_n\approx\hat\mu_{n-1}$, and the most recent sample has little contribution. We can therefore properly adjust $\alpha$ to fit our specific need. For example, if we gradually reduce $\alpha$, then the estimated average will become stabilized once enough samples have been collected. On the other hand, if we let $\alpha$ stay constant, the more recent samples are weighted more heavily than the earlier ones. Such a strategy is suitable when estimating parameters of a nonstationary system, such as an MDP with varying behaviors when the policy is being modified continuously.
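The effect of the step size can be sketched with a small Python example. The function name, the sample stream (whose mean jumps from 0 to 1 halfway through, mimicking a nonstationary system), and the constant step size 0.1 are assumptions made for illustration only.

```python
def incremental_estimates(samples, step_size):
    """Apply newEstimate = oldEstimate + stepSize * (target - oldEstimate).

    step_size is a function mapping the sample index n (starting at 1)
    to the step size used for that update.
    """
    estimate = 0.0
    history = []
    for n, x in enumerate(samples, start=1):
        alpha = step_size(n)
        estimate += alpha * (x - estimate)   # increment = step size * error
        history.append(estimate)
    return history

# Hypothetical nonstationary stream: fifty samples of 0, then fifty of 1.
samples = [0.0] * 50 + [1.0] * 50

# Decreasing step size 1/n reproduces the plain average of all samples.
sample_average = incremental_estimates(samples, lambda n: 1.0 / n)[-1]

# Constant step size weights recent samples more heavily.
recency_weighted = incremental_estimates(samples, lambda n: 0.1)[-1]
```

With the step size $1/n$ the final estimate is the overall mean of the stream, while with the constant step size it stays close to the most recent level, since older samples are discounted by a factor of $1-\alpha$ at every update.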

Specifically, in model-free control, Eq. (47) can be used to iteratively update the estimated value functions while sampling the environment. Both the state and action values, as the expected return, are estimated as the average of all previous sample returns, and they are updated incrementally when a new sample return