
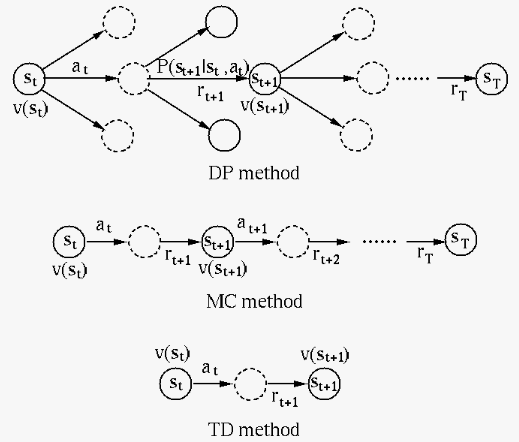

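The temporal difference (TD) method is a combination of the MC method considered above and the bootstrapping DP method based on the Bellman equation. The main difference between the TD and MC methods is the target. The return $G_t$ used by the MC method is available only at the end of an episode. The TD method replaces it with the TD target, an estimate of the return formed from the immediate reward $r_{t+1}$ and the current estimate of the value of the next state $s_{t+1}$:

$$G_t=r_{t+1}+\gamma\,G_{t+1}\approx r_{t+1}+\gamma\,v(s_{t+1}) \tag{51}$$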

Again, we first consider the simpler problem of policy evaluation. As in the MC method, we estimate the value function $v_\pi(s)$ of a given policy $\pi$ iteratively. Each update uses the TD target in place of the return:

$$v(s_t)\leftarrow v(s_t)+\alpha\left[r_{t+1}+\gamma\,v(s_{t+1})-v(s_t)\right]=v(s_t)+\alpha\,\delta_t \tag{52}$$

Here $\alpha$ is the step size. The quantity $\delta_t$, called the TD error, is the difference between the TD target and the previous estimate:

$$\delta_t=r_{t+1}+\gamma\,v(s_{t+1})-v(s_t) \tag{53}$$

In this expression, $v(s_{t+1})$ is the current estimate of the value of the next state (bootstrapping). It can be shown that this TD iteration converges to the true value $v_\pi(s)$. The convergence holds in the mean if the constant step size $\alpha$ is sufficiently small, and with probability 1 if $\alpha$ decreases over time according to the usual stochastic approximation conditions.
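A single TD update can be checked by hand. The sketch below is a minimal Python illustration of the update and TD error above, using a dictionary as the value table. The function name `td0_update`, the state labels, and the numbers are made up for the example.

```python
def td0_update(v, s, r, s_next, alpha, gamma, next_is_terminal=False):
    """Apply one TD(0) update to the value table `v` in place; return the TD error."""
    # TD target: immediate reward plus the discounted current estimate of v(s').
    # A terminal next state contributes 0.
    target = r + (0.0 if next_is_terminal else gamma * v[s_next])
    delta = target - v[s]      # TD error: TD target minus the previous estimate
    v[s] += alpha * delta      # move v(s) a fraction alpha of the way toward the target
    return delta


# Worked example with made-up numbers: v(A) = 0, v(B) = 1, r = 0.5, alpha = 0.1, gamma = 0.9
v = {"A": 0.0, "B": 1.0}
delta = td0_update(v, "A", r=0.5, s_next="B", alpha=0.1, gamma=0.9)
print(delta, v["A"])
```

By hand, the TD target is $0.5+0.9\times 1=1.4$. The TD error is therefore $1.4$, and $v(A)$ moves from 0 to $0.1\times 1.4=0.14$. The value of $B$ is not changed by this step.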

Here is the pseudocode for policy evaluation using the TD method:

- Input: the policy $\pi$ to be evaluated
- Initialize $v(s)$ for all $s$ arbitrarily, with $v(s)=0$ for the terminal state
- Choose a step size $\alpha\in(0,\;1]$
- Repeat (for each episode):
    - Initialize $s$
    - While $s$ is not terminal (for each step):
        - Take action $a$ given by $\pi$ for $s$, get reward $r$ and next state $s'$
        - $v(s)=v(s)+\alpha[r+\gamma v(s')-v(s)]$
        - $s=s'$
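To make the pseudocode concrete, here is a minimal Python sketch of TD policy evaluation. It assumes a standard textbook test problem that is not part of the text above: a random walk over nonterminal states 1 to 5, with terminal states 0 and 6. The evaluated policy moves left or right with equal probability, and every episode starts in state 3. The only nonzero reward is +1 on reaching state 6, and $\gamma=1$. Under these assumptions the true values are $v_\pi(k)=k/6$, so the estimates can be checked. The function name `td0_random_walk` and its default parameters are illustrative choices.

```python
import random


def td0_random_walk(num_episodes=10000, alpha=0.01, gamma=1.0, seed=0):
    """TD(0) evaluation of the equiprobable policy on a 5-state random walk."""
    rng = random.Random(seed)
    v = [0.0] * 7              # states 0..6; v[0] and v[6] are terminal and stay 0
    for _ in range(num_episodes):
        s = 3                  # each episode starts in the middle state
        while s not in (0, 6):                    # for each step until termination
            s_next = s + rng.choice((-1, 1))      # policy: left or right with probability 1/2
            r = 1.0 if s_next == 6 else 0.0       # +1 only when exiting on the right
            # v(s) = v(s) + alpha [r + gamma v(s') - v(s)]
            v[s] += alpha * (r + gamma * v[s_next] - v[s])
            s = s_next
    return v[1:6]              # estimates for the nonterminal states 1..5


estimates = td0_random_walk()
true_values = [k / 6 for k in range(1, 6)]
for k, (est, true) in enumerate(zip(estimates, true_values), start=1):
    print(f"v({k}) ~ {est:.3f}   true {true:.3f}")
```

With a constant step size, the estimates keep fluctuating around $v_\pi(s)$. A smaller $\alpha$ reduces the fluctuation, but learning is slower.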

The TD algorithm updates the value function at every step of an episode, whereas the MC method updates the estimated value function only once, at the end of each episode. TD therefore uses the sample data more frequently and efficiently, and its estimates have lower variance. On the other hand, the TD method may be biased compared with the first-visit MC method. This is because its target depends on the current estimates of the value function, which are initialized arbitrarily.

Building on the TD method for model-free policy evaluation, we now consider the TD method for model-free control. This method gradually learns the optimal policy by updating the Q-values $q(s,a)$ of all state-action pairs.

The Q-values can be stored in a state-action table. The table is updated iteratively by the TD method while running many episodes of the unknown MDP. Over time, it approaches the maximum Q-value achievable by taking each action in each state. The optimal action in each state is then the one with the highest Q-value.

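This method has two different flavors:

- The on-policy algorithm updates the Q-value by following the current policy, such as an $\varepsilon$-greedy policy.
- The off-policy algorithm updates the Q-value based on a policy (the greedy policy) different from the one used to choose the actions.

In the on-policy algorithm, the Q-value $q(s_t,a_t)$ of the current state-action pair is updated toward the TD target formed by the next state-action pair $(s_{t+1},a_{t+1})$, where $a_{t+1}$ is chosen by the same policy:

$$q(s_t,a_t)\leftarrow q(s_t,a_t)+\alpha\left[r_{t+1}+\gamma\,q(s_{t+1},a_{t+1})-q(s_t,a_t)\right] \tag{54}$$

This algorithm is called SARSA because it updates the Q-value based on five quantities: the current state $s_t$, the current action $a_t$, the reward $r_{t+1}$, the next state $s_{t+1}$, and the next action $a_{t+1}$. Together these form the quintuple $(s,a,r,s',a')$.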

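Here is the pseudocode of the SARSA algorithm:

- Initialize $q(s,a)$ for all $s$ and $a$ arbitrarily, with $q(s,a)=0$ for terminal $s$
- Choose a step size $\alpha\in(0,\;1]$ and a small $\varepsilon>0$
- Let $\pi$ denote the $\varepsilon$-greedy policy based on $q$
- Repeat (for each episode):
    - Initialize $s$
    - Choose $a$ according to $\pi$ based on $q(s,\cdot)$
    - While $s$ is not terminal (for each step):
        - Take action $a$, get reward $r$ and next state $s'$
        - Choose $a'$ according to $\pi$ based on $q(s',\cdot)$
        - $q(s,a)=q(s,a)+\alpha[r+\gamma q(s',a')-q(s,a)]$
        - $s=s'$, $a=a'$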
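The SARSA pseudocode above can be sketched in Python as follows. The environment is a hypothetical example introduced only for illustration. It is a corridor of cells 0 to 5 with a wall at cell 0, two actions (left and right), a reward of $-1$ per step, and cell 5 as the terminal goal. The helper names `step`, `epsilon_greedy`, and `sarsa` are likewise hypothetical. Q-values start at 0, which is optimistic here because all true values are negative, so the greedy choice itself drives early exploration.

```python
import random

N_STATES = 6          # hypothetical corridor: cells 0..5, cell 5 is the terminal goal
LEFT, RIGHT = 0, 1


def step(s, a):
    """Move one cell (a wall at cell 0); every step costs a reward of -1."""
    s_next = max(0, s - 1) if a == LEFT else s + 1
    return -1.0, s_next, s_next == N_STATES - 1


def epsilon_greedy(q, s, epsilon, rng):
    """With probability epsilon pick a random action, otherwise a greedy one."""
    if rng.random() < epsilon:
        return rng.choice((LEFT, RIGHT))
    return max((LEFT, RIGHT), key=lambda a: q[s][a])   # ties go to LEFT


def sarsa(num_episodes=2000, alpha=0.05, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q(terminal, .) is never updated, stays 0
    for _ in range(num_episodes):
        s, done = 0, False
        a = epsilon_greedy(q, s, epsilon, rng)   # choose a from s
        while not done:
            r, s_next, done = step(s, a)                      # take a, observe r and s'
            a_next = epsilon_greedy(q, s_next, epsilon, rng)  # choose a' from s'
            # SARSA update: the target uses the action a' the policy will actually take
            q[s][a] += alpha * (r + gamma * q[s_next][a_next] - q[s][a])
            s, a = s_next, a_next
    return q


q = sarsa()
greedy = ["R" if q[s][RIGHT] > q[s][LEFT] else "L" for s in range(N_STATES - 1)]
print(greedy)
```

The target uses the action $a'$ actually chosen by the $\varepsilon$-greedy policy. As a result, the learned Q-values approximate those of the $\varepsilon$-greedy policy itself rather than those of the optimal policy. In this corridor, the greedy action with respect to them is still to move right in every cell.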

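Expected SARSA is a variation of SARSA. It updates the Q-value at state $s_t$ using the expected value of the Q-values at the next state $s_{t+1}$, taken over all actions and weighted by the probabilities of the current policy $\pi$. This replaces the Q-value of the single sampled action $a_{t+1}$:

$$q(s_t,a_t)\leftarrow q(s_t,a_t)+\alpha\left[r_{t+1}+\gamma\sum_{a}\pi(a\mid s_{t+1})\,q(s_{t+1},a)-q(s_t,a_t)\right] \tag{55}$$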
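The expected SARSA target in Eq. (55) only needs the action probabilities of the current policy at $s_{t+1}$. The sketch below assumes, as an illustration, that $\pi$ is $\varepsilon$-greedy over a finite set of actions, with ties broken toward the first maximizer. The function names are hypothetical, and the Q-values in the example are made up.

```python
def epsilon_greedy_probs(q_values, epsilon):
    """Action probabilities of an epsilon-greedy policy (ties go to the first maximizer)."""
    n = len(q_values)
    probs = [epsilon / n] * n                     # exploration mass spread over all actions
    greedy = max(range(n), key=lambda a: q_values[a])
    probs[greedy] += 1.0 - epsilon                # remaining mass on the greedy action
    return probs


def expected_sarsa_target(r, q_next, gamma, epsilon):
    """r + gamma * sum_a pi(a|s') q(s', a), with pi epsilon-greedy with respect to q_next."""
    probs = epsilon_greedy_probs(q_next, epsilon)
    return r + gamma * sum(p * qv for p, qv in zip(probs, q_next))


def expected_sarsa_update(q_sa, r, q_next, alpha, gamma, epsilon):
    """Move q(s, a) a fraction alpha toward the expected SARSA target."""
    return q_sa + alpha * (expected_sarsa_target(r, q_next, gamma, epsilon) - q_sa)


# Made-up example: three actions at s' with Q-values 2, 4, 0
target = expected_sarsa_target(r=1.0, q_next=[2.0, 4.0, 0.0], gamma=0.9, epsilon=0.3)
greedy_target = expected_sarsa_target(r=1.0, q_next=[2.0, 4.0, 0.0], gamma=0.9, epsilon=0.0)
new_q = expected_sarsa_update(3.0, r=1.0, q_next=[2.0, 4.0, 0.0], alpha=0.5, gamma=0.9, epsilon=0.3)
print(target, greedy_target, new_q)
```

By hand, the example gives action probabilities $0.1, 0.8, 0.1$ and an expected next Q-value of $3.4$. The target is then $1+0.9\times 3.4=4.06$. Setting $\varepsilon=0$ makes $\pi$ greedy, and the target reduces to $1+0.9\times 4=4.6$. This is the max-based target of Q-learning in Eq. (56).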

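Like SARSA, the Q-learning algorithm estimates the Q-value $q(s_t,a_t)$ by a TD target. However, its target uses the maximum Q-value at the next state, which is the value of the greedy action. This holds regardless of which action the $\varepsilon$-greedy policy actually takes next. Q-learning is therefore an off-policy algorithm:

$$q(s_t,a_t)\leftarrow q(s_t,a_t)+\alpha\left[r_{t+1}+\gamma\max_{a'}q(s_{t+1},a')-q(s_t,a_t)\right] \tag{56}$$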

The pseudocode of the Q-learning algorithm is listed below.

- Initialize $q(s,a)$ for all $s$ and $a$ arbitrarily, with $q(s,a)=0$ for terminal $s$
- Choose a step size $\alpha\in(0,\;1]$ and a small $\varepsilon>0$
- Repeat (for each episode):
    - Initialize $s$
    - While $s$ is not terminal (for each step):
        - Choose $a$ according to the $\varepsilon$-greedy policy $\pi$ based on $q(s,\cdot)$
        - Take action $a$, get reward $r$ and next state $s'$
        - $q(s,a)=q(s,a)+\alpha[r+\gamma\max_{a'}q(s',a')-q(s,a)]$
        - $s=s'$
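Here is a minimal Python sketch of the pseudocode above, whose update uses $\max_{a'}q(s',a')$. The environment is a hypothetical corridor introduced only for illustration: cells 0 to 5, a wall at cell 0, a reward of $-1$ per step, and cell 5 as the terminal goal. The names `step` and `q_learning` and the default parameters are illustrative assumptions. Actions are chosen $\varepsilon$-greedily, but the update uses the greedy action at $s'$, which is what makes the method off-policy.

```python
import random

N_STATES = 6          # hypothetical corridor: cells 0..5, cell 5 is the terminal goal
LEFT, RIGHT = 0, 1


def step(s, a):
    """Move one cell (a wall at cell 0); every step costs a reward of -1."""
    s_next = max(0, s - 1) if a == LEFT else s + 1
    return -1.0, s_next, s_next == N_STATES - 1


def q_learning(num_episodes=1000, alpha=0.1, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q(terminal, .) is never updated, stays 0
    for _ in range(num_episodes):
        s, done = 0, False
        while not done:
            # Behavior policy: epsilon-greedy with respect to the current Q-values
            if rng.random() < epsilon:
                a = rng.choice((LEFT, RIGHT))
            else:
                a = max((LEFT, RIGHT), key=lambda b: q[s][b])
            r, s_next, done = step(s, a)
            # Q-learning update: the target takes the max over actions at s' (greedy target policy)
            q[s][a] += alpha * (r + gamma * max(q[s_next]) - q[s][a])
            s = s_next
    return q


q = q_learning()
for s in range(N_STATES - 1):
    print(s, [round(x, 3) for x in q[s]])
```

The max-based target ignores the exploratory actions. As a result, the Q-values of moving right approach the optimal values $-(5-s)$, the negative number of steps to the goal. They do not approach the values of the $\varepsilon$-greedy policy that generated the data.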

Here is the comparison of the MC and TD methods in terms of their pros and cons:

- The MC method estimates $v_\pi(s)$, the expected return, by the true return $G_t$ obtained at the end of the episode, so the estimated value is updated once every episode. The TD method estimates $v_\pi(s)$ by the TD target $r_{t+1}+\gamma\,v(s_{t+1})$, so the estimated value is updated at every step of the episode.
- The MC estimate is based on sample returns $G_t$, which are unbiased samples of $v_\pi(s)$, so the estimate is unbiased. The TD method instead uses bootstrapping: it forms the TD target from the current estimate $v(s_{t+1})$, using sample data that are not necessarily i.i.d. Because the TD target depends on the initial guess of the value function, TD is more sensitive to that guess and is biased.
- The MC return $G_t$ is affected by many random events (state transitions, actions, and rewards) over the rest of the episode. In addition, the first-visit MC method uses only the sample data from the first visit to each state. MC therefore does not use the available data efficiently, and it has high variance. In contrast, the TD target depends on the random events of only a single step. The TD method also makes more frequent and efficient use of the sample data, so it has lower variance.
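The bias and variance points above can be checked numerically. The sketch below assumes the standard five-state random walk, which is an illustration and not part of the text above. It has nonterminal states 1 to 5, terminals 0 and 6, equal-probability left/right moves, reward +1 only on reaching state 6, and $\gamma=1$. Starting from the middle state 3, it samples MC returns and one-step TD targets. The TD targets are computed twice: once with the exact values $v_\pi(k)=k/6$ and once with an all-zero initial guess. The function names are hypothetical.

```python
import random
import statistics


def sample_mc_return(rng, start=3):
    """Run one random-walk episode from `start`; with gamma = 1 the return is 1 if it exits right."""
    s = start
    while s not in (0, 6):
        s += rng.choice((-1, 1))
    return 1.0 if s == 6 else 0.0


def sample_td_target(rng, v, start=3):
    """One-step TD target r + v(s') from `start` (gamma = 1), using the value table `v`."""
    s_next = start + rng.choice((-1, 1))
    r = 1.0 if s_next == 6 else 0.0
    return r + v[s_next]


rng = random.Random(0)
true_v = [0.0] + [k / 6 for k in range(1, 6)] + [0.0]   # exact v(1..5); terminal values are 0
zero_v = [0.0] * 7                                     # an arbitrary all-zero initial guess

n = 10000
mc = [sample_mc_return(rng) for _ in range(n)]
td_true = [sample_td_target(rng, true_v) for _ in range(n)]
td_zero = [sample_td_target(rng, zero_v) for _ in range(n)]

for name, xs in (("MC return", mc), ("TD target, exact v", td_true), ("TD target, v = 0", td_zero)):
    print(f"{name:20s} mean {statistics.mean(xs):.3f}  variance {statistics.variance(xs):.3f}")
```

The MC return is either 0 or 1, so its variance is about 0.25. The TD target with exact values is either $1/3$ or $2/3$, with variance about $1/36\approx 0.028$. With the all-zero initial guess, however, the TD target is always 0, although the true value of state 3 is $0.5$. The lower variance thus comes with a bias inherited from the initialization.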

Here is a summary of the dynamic programming (DP) method for model-based planning, and of the Monte Carlo (MC) and temporal difference (TD) methods for model-free control: