
TD($\lambda$) Algorithm

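The MC and TD methods considered previously can be unified by the n-step TD($\lambda$) algorithm. Recall that both the MC and TD algorithms iteratively update the value functions being estimated and the policy being improved based on Eq. (49), but they estimate the target differently: the MC method uses the actual return $G_t$, available only at the end of the episode, while the TD method uses the bootstrapped TD target $r_{t+1}+\gamma v(s_{t+1})$.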

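As a trade-off between the MC and TD methods, the n-step TD algorithm can be considered a generalization of the TD method, where the target in Eq. (49) is an n-step return, the sum of the discounted rewards in the next $n$ steps and the discounted estimated value of the state $s_{t+n}$ reached after these $n$ steps:

$$G_t^{(n)}=r_{t+1}+\gamma r_{t+2}+\cdots+\gamma^{n-1}r_{t+n}+\gamma^n v(s_{t+n})=\sum_{k=0}^{n-1}\gamma^k r_{t+k+1}+\gamma^n v(s_{t+n}) \tag{57}$$

Consider the following special cases: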

$n=1$: the 1-step return is the sum of the immediate reward and the discounted estimated value of the next state, the same as the TD target in the TD method:

$$G_t^{(1)}=r_{t+1}+\gamma v(s_{t+1}) \tag{58}$$

$n=\infty$: the n-step return is the sum of the discounted rewards from all future states up to the terminal state $s_T$ at the end of the episode, i.e., it is the return $G_t$ defined in Eq. (7), the same as in the MC method:

$$G_t^{(\infty)}=r_{t+1}+\gamma r_{t+2}+\cdots+\gamma^{T-t-1}r_T=G_t \tag{59}$$

where no bootstrapped term appears because the value $v(s_T)$ of the terminal state is zero.

$n>T-t$: all states $s_{t+n}$ beyond the terminal state $s_T$, with $t+n>T$, remain the same as the terminal state, with value $v(s_{t+n})=v(s_T)=0$ and return $G_t^{(n)}=G_t$, the same as in the MC method.
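The n-step return and its special cases can be checked numerically. The following is a minimal sketch, not part of the original notes: the function name `n_step_return` and the episode layout (`rewards[k]` holds $r_{k+1}$, `values[k]` holds the current estimate $v(s_k)$, and `values[T]` is 0 for the terminal state) are assumptions made for illustration.

```python
def n_step_return(rewards, values, t, n, gamma):
    """n-step return G_t^(n) for one recorded episode (illustrative layout).

    rewards[k] is r_{k+1}, the reward received on leaving state s_k,
    so the episode length is T = len(rewards).
    values[k] is the current estimate v(s_k), k = 0..T, with values[T] == 0.
    """
    T = len(rewards)
    end = min(t + n, T)  # steps beyond the terminal state contribute nothing
    discounted = sum(gamma ** (k - t) * rewards[k] for k in range(t, end))
    # Bootstrap from the state reached after the last counted step;
    # this term vanishes once the terminal state has been reached.
    return discounted + gamma ** (end - t) * values[end]


# Hypothetical 3-step episode used only to exercise the function.
rewards = [1.0, 2.0, 3.0]
values = [0.5, 1.0, 2.0, 0.0]
g1 = n_step_return(rewards, values, t=0, n=1, gamma=0.5)   # TD target
g2 = n_step_return(rewards, values, t=0, n=2, gamma=0.5)
g3 = n_step_return(rewards, values, t=0, n=3, gamma=0.5)   # full return G_0
g9 = n_step_return(rewards, values, t=0, n=9, gamma=0.5)   # n > T - t
```

Here `g1` is the TD target, while `g3` and `g9` both equal the MC return, since every n-step return with $n\ge T-t$ runs to the end of the episode.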

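Based on these n-step returns of different $n$, the TD($\lambda$) algorithm can be constructed. We first define the $\lambda$-return $G_t^\lambda$ as the weighted average of all n-step returns:

$$G_t^\lambda=(1-\lambda)\sum_{n=1}^\infty \lambda^{n-1}G_t^{(n)} \tag{60}$$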

where $\lambda\in[0,\,1]$. Note that the weights decay exponentially, and they are normalized due to the coefficient $1-\lambda$ (for $\lambda<1$):

$$(1-\lambda)\sum_{n=1}^\infty \lambda^{n-1}=(1-\lambda)\,\frac{1}{1-\lambda}=1 \tag{61}$$

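The $\lambda$-return $G_t^\lambda$ defined above can be expressed in two summations, the sum of the first $T-t-1$ n-step returns $G_t^{(1)},\cdots,G_t^{(T-t-1)}$, and the sum of all subsequent n-step returns $G_t^{(T-t)}=G_t^{(T-t+1)}=\cdots=G_t$, which all equal the true return because $v(s_T)=0$:

$$\begin{aligned}
G_t^\lambda&=(1-\lambda)\sum_{n=1}^{T-t-1}\lambda^{n-1}G_t^{(n)}+(1-\lambda)\sum_{n=T-t}^{\infty}\lambda^{n-1}G_t\\
&=(1-\lambda)\sum_{n=1}^{T-t-1}\lambda^{n-1}G_t^{(n)}+\lambda^{T-t-1}G_t
\end{aligned} \tag{62}$$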

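where the coefficient $\lambda^{T-t-1}$ in the second term is the sum of coefficients in the second summation (with $k=n-(T-t)$):

$$(1-\lambda)\sum_{n=T-t}^{\infty}\lambda^{n-1}=(1-\lambda)\,\lambda^{T-t-1}\sum_{k=0}^{\infty}\lambda^{k}=\lambda^{T-t-1} \tag{63}$$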

We see that in Eq. (62) all n-step returns $G_t^{(n)}$ are weighted by the exponentially decaying coefficients $(1-\lambda)\lambda^{n-1}$, except the true return $G_t$, which is weighted by $\lambda^{T-t-1}$.
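To make the two forms of the $\lambda$-return concrete, the sketch below evaluates the finite form of Eq. (62) and compares it with a truncated version of the infinite sum in Eq. (60). All names (`n_step_return`, `lambda_return`, `lambda_return_truncated`) and the episode layout are assumptions for illustration, and the truncation length `n_max` is an arbitrary choice.

```python
def n_step_return(rewards, values, t, n, gamma):
    """G_t^(n); rewards[k] is r_{k+1}, values[k] is v(s_k), values[T] == 0."""
    T = len(rewards)
    end = min(t + n, T)
    discounted = sum(gamma ** (k - t) * rewards[k] for k in range(t, end))
    return discounted + gamma ** (end - t) * values[end]


def lambda_return(rewards, values, t, lam, gamma):
    """Finite form: first T-t-1 n-step returns plus the weighted true return."""
    T = len(rewards)
    true_return = n_step_return(rewards, values, t, T - t, gamma)
    head = sum(
        (1 - lam) * lam ** (n - 1) * n_step_return(rewards, values, t, n, gamma)
        for n in range(1, T - t)
    )
    # Python evaluates 0 ** 0 as 1, which matches the convention needed
    # for lam = 0 when t = T - 1.
    return head + lam ** (T - t - 1) * true_return


def lambda_return_truncated(rewards, values, t, lam, gamma, n_max=200):
    """Infinite weighted average, truncated after n_max terms."""
    return (1 - lam) * sum(
        lam ** (n - 1) * n_step_return(rewards, values, t, n, gamma)
        for n in range(1, n_max + 1)
    )


# Hypothetical 3-step episode used only to exercise the functions.
rewards = [1.0, 2.0, 3.0]
values = [0.5, 1.0, 2.0, 0.0]
closed = lambda_return(rewards, values, t=0, lam=0.5, gamma=0.5)
series = lambda_return_truncated(rewards, values, t=0, lam=0.5, gamma=0.5)
td_case = lambda_return(rewards, values, t=0, lam=0.0, gamma=0.5)
mc_case = lambda_return(rewards, values, t=0, lam=1.0, gamma=0.5)
```

For this toy episode the closed form and the truncated series agree to within rounding, and setting `lam` to 0 or 1 gives the 1-step return and the full return respectively.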

Again consider two special cases:

$\lambda=0$: all terms in Eq. (62) are zero, except the first one with $n=1$ (taking $0^0=1$):

$$G_t^{\lambda=0}=G_t^{(1)}=r_{t+1}+\gamma v(s_{t+1}) \tag{64}$$

$\lambda=1$: the first summation is zero and

$$G_t^{\lambda=1}=G_t \tag{65}$$

i.e., TD($\lambda$) is a general algorithm of which the two special cases TD(0) and TD(1) are respectively the TD and MC methods.

In Eq. (62), , same as before, and when , , same as the TD target.

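Based on the $\lambda$-return $G_t^\lambda$, the estimated value functions are updated iteratively with $G_t^\lambda$ as the target (for the action value, the n-step returns in $G_t^\lambda$ are bootstrapped from $q$ instead of $v$):

$$v(s_t)\leftarrow v(s_t)+\alpha\left(G_t^\lambda-v(s_t)\right) \tag{67}$$

$$q(s_t,a_t)\leftarrow q(s_t,a_t)+\alpha\left(G_t^\lambda-q(s_t,a_t)\right) \tag{68}$$

This is the forward view of TD($\lambda$), which is similar to the MC method, as they are based on either $G_t$ or $G_t^\lambda$, available only at the end of each episode.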

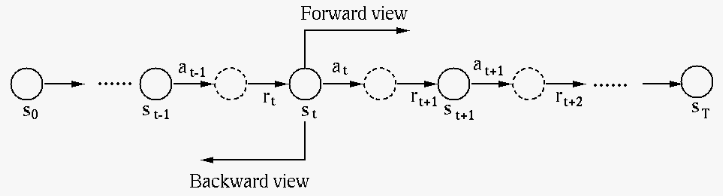

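An alternative method is the backward view of TD($\lambda$), which can be carried out at every step of the episode. Specifically, the backward view of TD($\lambda$) keeps for each state $s$ an eligibility trace $e_t(s)$, initialized to $e_0(s)=0$ and updated at every time step:

$$e_t(s)=\gamma\lambda\,e_{t-1}(s)+\mathbf{1}(s_t=s) \tag{69}$$

i.e., the trace of every state decays by a factor $\gamma\lambda$ at each step, and it is additionally incremented by 1 to become $\gamma\lambda\,e_{t-1}(s)+1$ if $s=s_t$ is currently visited.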
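The evolution of the eligibility traces can be traced by hand on a short visit sequence. The snippet below is a minimal sketch under assumed names (`update_traces`, a dictionary of traces keyed by state label); it applies the decay-then-increment rule of Eq. (69) to the hypothetical sequence A, B, A.

```python
def update_traces(traces, visited, gamma, lam):
    """Decay every trace by gamma * lam, then add 1 for the visited state."""
    new_traces = {s: gamma * lam * e for s, e in traces.items()}
    new_traces[visited] = new_traces.get(visited, 0.0) + 1.0
    return new_traces


traces = {"A": 0.0, "B": 0.0}   # all traces start at zero
history = []
for state in ["A", "B", "A"]:   # hypothetical sequence of visited states
    traces = update_traces(traces, state, gamma=1.0, lam=0.5)
    history.append(dict(traces))
```

State A is visited twice, so its trace accumulates (frequency), while the trace of B has already decayed once by the last step (recency).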

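Now the iterative update of the estimated value function in Eq. (52) is modified so that all states, instead of only the one currently visited, are updated, but to different extents based on $e_t(s)$:

$$v(s)\leftarrow v(s)+\alpha\,\delta_t\,e_t(s)\qquad\text{for all } s$$

where the TD error $\delta_t$ is the same as in TD(0), given in Eq. (53):

$$\delta_t=r_{t+1}+\gamma v(s_{t+1})-v(s_t)$$

The eligibility trace $e_t(s)$ thus determines how much of the current TD error is credited to each state, with recently and frequently visited states receiving the largest share.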

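Here is the pseudo code for the backward view of the TD($\lambda$) algorithm for policy evaluation:

    Input: policy π to be evaluated
    Initialize: v(s) = 0 for all s; parameters γ ∈ [0, 1], α ∈ (0, 1], λ ∈ [0, 1]
    loop (for each episode):
        e(s) = 0 for all s; initialize s
        while s is not terminal (for each step):
            take action a based on π(a|s), get reward r and next state s'
            δ = r + γ v(s') − v(s)
            e(s) = e(s) + 1
            for all states x:
                v(x) = v(x) + α δ e(x)
                e(x) = γ λ e(x)
            s = s'

Note that at every step the values $v(s)$ at all states are updated in proportion to their traces $e(s)$, different from the TD algorithm where only the value at the state currently visited is updated.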
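The backward-view evaluation loop can be sketched in Python as follows. This is an illustrative version, not the notes' own code: instead of sampling from a policy and an environment, it assumes the episodes have already been recorded as lists of hypothetical `(s, r, s_next, done)` tuples, which keeps the example deterministic.

```python
from collections import defaultdict


def td_lambda_evaluate(episodes, alpha, gamma, lam):
    """Backward-view TD(lambda) evaluation with accumulating traces.

    episodes: list of episodes, each a list of (s, r, s_next, done) tuples
              generated by the policy being evaluated (assumed format).
    """
    v = defaultdict(float)                 # v(s) = 0 for all states initially
    for episode in episodes:
        e = defaultdict(float)             # traces are reset for each episode
        for s, r, s_next, done in episode:
            next_value = 0.0 if done else v[s_next]   # terminal value is zero
            delta = r + gamma * next_value - v[s]     # TD error
            e[s] += 1.0                               # mark the visited state
            for x in list(e):                         # update all traced states
                v[x] += alpha * delta * e[x]
                e[x] *= gamma * lam
    return dict(v)


# Hypothetical two-step episode A -> B -> terminal with a reward of 1 at the end.
episode = [("A", 0.0, "B", False), ("B", 1.0, "T", True)]
v_td0 = td_lambda_evaluate([episode], alpha=0.5, gamma=1.0, lam=0.0)
v_mid = td_lambda_evaluate([episode], alpha=0.5, gamma=1.0, lam=0.5)
v_td1 = td_lambda_evaluate([episode], alpha=0.5, gamma=1.0, lam=1.0)
```

With `lam=0.0` only state B learns from the final reward in this first episode, while larger values of `lam` also pass part of that TD error back to A through its trace.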

Again consider two special cases:

$\lambda=0$: $e(s)=0$ for all states except the current $s_t$ being visited, i.e., TD($\lambda$) becomes TD(0), the same as the TD method.

$\lambda=1$: $e(s)$ is scaled down by a factor $\gamma$ for all states except the current $s_t$ being visited, i.e., TD($\lambda$) becomes TD(1), the same as the MC method. However, different from the MC method that updates the value function $v(s)$ at the end of each episode, here $v(s)$ is still updated at every step of the episode due to the backward view of the method.

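This backward view of the TD($\lambda$) method can also be applied to the control problem based on the action value function $q(s,a)$, with an eligibility trace $e(s,a)$ kept for each state-action pair. Here is the pseudo code for the SARSA($\lambda$) algorithm:

    Initialize: q(s, a) = 0 for all s, a; parameters γ ∈ [0, 1], α ∈ (0, 1], λ ∈ [0, 1], ε > 0
    denote the ε-greedy policy based on q by π
    loop (for each episode):
        e(s, a) = 0 for all s, a; initialize s
        choose a according to π based on q(s, ·)
        while s is not terminal (for each step):
            take action a, get reward r and next state s'
            choose a' according to π based on q(s', ·)
            δ = r + γ q(s', a') − q(s, a)
            e(s, a) = e(s, a) + 1
            for all pairs (x, u):
                q(x, u) = q(x, u) + α δ e(x, u)
                e(x, u) = γ λ e(x, u)
            s = s', a = a'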
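A runnable sketch of SARSA($\lambda$) with accumulating traces is given below. The environment is a hypothetical 4-state corridor invented for this example (actions left/right, reward 1 on reaching the terminal state), and the helper names `step`, `epsilon_greedy` and `sarsa_lambda`, the hyperparameter values, the random tie-breaking and the per-episode step cap are all assumptions rather than part of the algorithm as stated above.

```python
import random

N_STATES = 4        # states 0..3; state 3 is terminal (toy task)
ACTIONS = (0, 1)    # 0 = left, 1 = right


def step(s, a):
    """Deterministic corridor: reward 1 only when the terminal state is reached."""
    s_next = max(s - 1, 0) if a == 0 else s + 1
    done = s_next == N_STATES - 1
    return (1.0 if done else 0.0), s_next, done


def epsilon_greedy(q, s, eps, rng):
    """Random action with probability eps, otherwise greedy with random ties."""
    if rng.random() < eps:
        return rng.choice(ACTIONS)
    best = max(q[(s, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(s, a)] == best])


def sarsa_lambda(episodes=2000, alpha=0.1, gamma=0.9, lam=0.8, eps=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        e = dict.fromkeys(q, 0.0)              # reset traces for each episode
        s = 0
        a = epsilon_greedy(q, s, eps, rng)
        for _step in range(1000):              # safety cap on episode length
            r, s_next, done = step(s, a)
            a_next = epsilon_greedy(q, s_next, eps, rng)
            target = r if done else r + gamma * q[(s_next, a_next)]
            delta = target - q[(s, a)]         # on-policy TD error
            e[(s, a)] += 1.0
            for key in q:                      # update every state-action pair
                q[key] += alpha * delta * e[key]
                e[key] *= gamma * lam
            if done:
                break
            s, a = s_next, a_next
    return q


q = sarsa_lambda()
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
```

After training on this toy task the greedy policy is expected to move right in every non-terminal state; the exact action values depend on the seed and on the exploration rate, since SARSA($\lambda$) evaluates the $\epsilon$-greedy policy it follows.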

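Here is the pseudo code for the Q($\lambda$) algorithm:

    Initialize: q(s, a) = 0 for all s, a; parameters γ ∈ [0, 1], α ∈ (0, 1], λ ∈ [0, 1], ε > 0
    denote the ε-greedy policy based on q by π
    loop (for each episode):
        e(s, a) = 0 for all s, a; initialize s
        choose a according to π based on q(s, ·)
        while s is not terminal (for each step):
            take action a, get reward r and next state s'
            choose a' according to π based on q(s', ·)
            a* = argmax over b of q(s', b)
            δ = r + γ q(s', a*) − q(s, a)
            e(s, a) = e(s, a) + 1
            for all pairs (x, u):
                q(x, u) = q(x, u) + α δ e(x, u)
                if a' = a* then
                    e(x, u) = γ λ e(x, u)
                else
                    e(x, u) = 0
            s = s', a = a'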

The Q($\lambda$) algorithm is different from the SARSA($\lambda$) algorithm in two ways. First, the action value is updated based on the greedy action $a^*=\arg\max_b q(s',b)$, instead of the action $a'$ chosen by policy $\pi$; second, if the $\epsilon$-greedy policy $\pi$ happens to choose a random non-greedy action $a'\ne a^*$ with probability $\epsilon$ to explore rather than exploiting $a^*$, the eligibility traces of all state-action pairs are reset to zero. There are other versions of the algorithm which do not reset these traces.
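The trace-resetting variant described above can be sketched as follows. As before, the 4-state corridor environment, the helper names (`step`, `epsilon_greedy`, `q_lambda`), the hyperparameters, and the choice to treat an action that ties for the maximum as greedy are assumptions made for illustration only.

```python
import random

N_STATES = 4        # states 0..3; state 3 is terminal (toy task)
ACTIONS = (0, 1)    # 0 = left, 1 = right


def step(s, a):
    """Deterministic corridor: reward 1 only when the terminal state is reached."""
    s_next = max(s - 1, 0) if a == 0 else s + 1
    done = s_next == N_STATES - 1
    return (1.0 if done else 0.0), s_next, done


def epsilon_greedy(q, s, eps, rng):
    """Random action with probability eps, otherwise greedy with random ties."""
    if rng.random() < eps:
        return rng.choice(ACTIONS)
    best = max(q[(s, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(s, a)] == best])


def q_lambda(episodes=2000, alpha=0.1, gamma=0.9, lam=0.8, eps=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        e = dict.fromkeys(q, 0.0)              # reset traces for each episode
        s = 0
        a = epsilon_greedy(q, s, eps, rng)
        for _step in range(1000):              # safety cap on episode length
            r, s_next, done = step(s, a)
            a_next = epsilon_greedy(q, s_next, eps, rng)
            best = max(q[(s_next, b)] for b in ACTIONS)   # value of a*
            is_greedy = q[(s_next, a_next)] == best       # ties count as greedy
            target = r if done else r + gamma * best
            delta = target - q[(s, a)]         # off-policy TD error
            e[(s, a)] += 1.0
            for key in q:
                q[key] += alpha * delta * e[key]
                # Keep decaying traces after a greedy choice, cut them otherwise.
                e[key] = gamma * lam * e[key] if is_greedy else 0.0
            if done:
                break
            s, a = s_next, a_next
    return q


q = q_lambda()
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
```

Because the target uses the greedy value regardless of the action actually taken, the learned values on this toy task should approach those of the greedy policy itself (for example, close to 1 for moving right from state 2), unlike SARSA($\lambda$), whose values reflect the exploring policy.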