
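Given a complete MDP model in terms of the transition probabilities $P(s'\vert s,a)$ and the rewards $r(s,a)$, we want to find the optimal policy $\pi^*$, which is at least as good as any other policy at every state:

If $v_{\pi^*}(s)\ge v_\pi(s)$ for all $s\in S$ and all policies $\pi$,

Then $\pi^*$ is the optimal policy, i.e.,

$$\pi^*=\arg\max_\pi v_\pi(s)\;\;\;\;\;\;\forall s\in S \tag{26}$$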

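One way to solve this optimization problem is to use brute-force search. We enumerate all possible deterministic policies, evaluate each of them, and pick the best. However, there are $\vert A\vert^{\vert S\vert}$ deterministic policies, so this approach is impractical for all but the smallest problems.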
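The brute-force approach can be sketched as follows. The sketch assumes a hypothetical two-state, two-action MDP stored as nested Python lists: `P[a][s][s2]` holds $P(s_2\vert s,a)$ and `r[s][a]` holds $r(s,a)$. The function names `evaluate` and `brute_force` are illustrative and do not come from the text. The code evaluates each of the $\vert A\vert^{\vert S\vert}$ deterministic policies and keeps the one with the largest total value. An optimal policy maximizes $v_\pi(s)$ at every state, so it also maximizes the sum.

```python
from itertools import product

# Hypothetical 2-state, 2-action MDP (illustrative only).
# P[a][s][s2] = P(s2 | s, a); r[s][a] = r(s, a).
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay in the current state
    [[0.0, 1.0], [1.0, 0.0]],  # action 1: switch to the other state
]
r = [
    [0.0, 1.0],  # state 0: rewards for actions 0 and 1
    [2.0, 0.0],  # state 1: rewards for actions 0 and 1
]
gamma = 0.9
n_states, n_actions = len(r), len(P)


def evaluate(policy, tol=1e-10):
    """Iterate v(s) <- r(s,pi(s)) + gamma * sum_s' P(s'|s,pi(s)) v(s') to convergence."""
    v = [0.0] * n_states
    while True:
        v_new = [
            r[s][policy[s]]
            + gamma * sum(P[policy[s]][s][s2] * v[s2] for s2 in range(n_states))
            for s in range(n_states)
        ]
        if max(abs(x - y) for x, y in zip(v_new, v)) < tol:
            return v_new
        v = v_new


def brute_force():
    """Evaluate all |A|^|S| deterministic policies; keep the one with the largest total value."""
    best_pi = max(product(range(n_actions), repeat=n_states),
                  key=lambda pi: sum(evaluate(pi)))
    return list(best_pi), evaluate(best_pi)


pi_star, v_star = brute_force()
print(pi_star, [round(x, 6) for x in v_star])  # [1, 0] [19.0, 20.0]
```

Even with only 2 states and 2 actions there are 4 policies to evaluate. The count grows exponentially with the number of states.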

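A more efficient and therefore more practical way to optimize the policy is to do it iteratively. Consider the simpler task of improving upon an existing deterministic policy $\pi$ whose state values $v_\pi(s)$ are known. At each state $s$, we greedily choose the action that maximizes the action value $q_\pi(s,a)$:

$$\pi'(s)=\arg\max_a q_\pi(s,a)=\arg\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v_\pi(s')\right]\;\;\;\;\;\;\forall s\in S$$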

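This greedy method clearly yields a higher return for the single state transition from $s$, because $q_\pi(s,\pi'(s))=\max_a q_\pi(s,a)\ge q_\pi(s,\pi(s))=v_\pi(s)$. It can further be shown that the new policy is also better than the old one in the long run (the policy improvement theorem):

$$v_{\pi'}(s)\ge v_\pi(s)\;\;\;\;\;\;\forall s\in S \tag{29}$$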

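The proof is by recursively applying the greedy method to replace the old policy $\pi$ by the new policy $\pi'$ at every subsequent step:

$$\begin{aligned} v_\pi(s) &\le q_\pi(s,\pi'(s))=r(s,\pi'(s))+\gamma\sum_{s'}P(s'\vert s,\pi'(s))\,v_\pi(s') \\ &\le r(s,\pi'(s))+\gamma\sum_{s'}P(s'\vert s,\pi'(s))\,q_\pi(s',\pi'(s')) \\ &\le \cdots\le v_{\pi'}(s) \end{aligned}$$

Each inequality replaces $v_\pi$ by the larger $q_\pi(\cdot,\pi'(\cdot))$ one step further into the future. In the limit, every action is chosen by $\pi'$, and the result is $v_{\pi'}(s)$.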
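The greedy improvement step and the theorem (29) can be checked numerically. This is a minimal sketch that assumes the same hypothetical two-state, two-action MDP and list layout as above (`P[a][s][s2]`, `r[s][a]`). The helper names `q_value`, `evaluate` and `greedy_improve` are illustrative. The sketch starts from a poor policy, improves it once, and confirms that the new values are no smaller at any state.

```python
# Hypothetical 2-state, 2-action MDP (illustrative only).
# P[a][s][s2] = P(s2 | s, a); r[s][a] = r(s, a).
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay
    [[0.0, 1.0], [1.0, 0.0]],  # action 1: switch
]
r = [[0.0, 1.0], [2.0, 0.0]]
gamma = 0.9
n_states, n_actions = len(r), len(P)


def q_value(v, s, a):
    """One-step look-ahead: r(s,a) + gamma * sum_s' P(s'|s,a) v(s')."""
    return r[s][a] + gamma * sum(P[a][s][s2] * v[s2] for s2 in range(n_states))


def evaluate(policy, tol=1e-10):
    """Policy evaluation by repeated Bellman updates for the fixed policy."""
    v = [0.0] * n_states
    while True:
        v_new = [q_value(v, s, policy[s]) for s in range(n_states)]
        if max(abs(x - y) for x, y in zip(v_new, v)) < tol:
            return v_new
        v = v_new


def greedy_improve(v):
    """pi'(s) = argmax_a q(s, a), computed from the value estimate v."""
    return [max(range(n_actions), key=lambda a: q_value(v, s, a))
            for s in range(n_states)]


pi = [0, 1]                      # an arbitrary (poor) starting policy
v_pi = evaluate(pi)
pi_new = greedy_improve(v_pi)
v_new = evaluate(pi_new)
# Policy improvement theorem: v_{pi'}(s) >= v_pi(s) at every state.
print(pi_new, all(b >= a for a, b in zip(v_pi, v_new)))  # [1, 0] True
```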

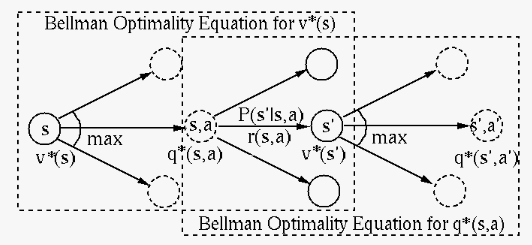

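The optimal state value $v^*(s)=v_{\pi^*}(s)$ satisfies the Bellman optimality equation:

$$v^*(s)=\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v^*(s')\right]\;\;\;\;\;\;\forall s\in S$$

The $N$ such equations for all $N$ states in $S=\{s_1,\dots,s_N\}$ can be written in vector form:

$${\bf v}^*=\max_a\left[{\bf r}_a+\gamma{\bf P}_a{\bf v}^*\right] \tag{33}$$

Here the maximum is taken element-wise, that is, separately for each state. The vectors and matrices are defined as:

$${\bf v}^*=\left[\begin{array}{c}v^*(s_1)\\ \vdots\\ v^*(s_N)\end{array}\right],\;\;\;{\bf r}_a=\left[\begin{array}{c}r(s_1,a)\\ \vdots\\ r(s_N,a)\end{array}\right],\;\;\;{\bf P}_a=\left[\begin{array}{ccc}P(s_1\vert s_1,a) & \cdots & P(s_N\vert s_1,a)\\ \vdots & \ddots & \vdots \\ P(s_1\vert s_N,a) & \cdots & P(s_N\vert s_N,a)\end{array}\right] \tag{34}$$

Row $i$ of ${\bf P}_a$ is the distribution of the next state given the current state $s_i$ and action $a$. Each row therefore sums to 1, which makes ${\bf P}_a$ a stochastic matrix.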
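The vector form (33) can be checked on a small case. This is a minimal sketch that assumes the same hypothetical two-state, two-action MDP as above. Here `P[a]` is the matrix ${\bf P}_a$ of (34), and `r[s][a]` gives $r(s,a)$. The values $v^*=[19,20]$ were computed by hand for this example. Staying in $s_2$ forever earns $2/(1-0.9)=20$, and switching from $s_1$ earns $1+0.9\cdot 20=19$. The sketch shows that $v^*$ reproduces itself under the right-hand side of (33), while the value of the "always stay" policy does not.

```python
# Hypothetical 2-state, 2-action MDP (illustrative only).
# P[a] is the N x N matrix P_a of (34): row i holds P(s_j | s_i, a).
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # P_a for action 0 (stay)
    [[0.0, 1.0], [1.0, 0.0]],  # P_a for action 1 (switch)
]
r = [[0.0, 1.0], [2.0, 0.0]]   # r[s][a] = r(s, a)
gamma = 0.9
n_states, n_actions = len(r), len(P)


def mat_vec(M, x):
    """Matrix-vector product M x for lists of lists."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def bellman_optimality_rhs(v):
    """Right-hand side of (33): element-wise max over a of r_a + gamma * P_a v."""
    per_action = []
    for a in range(n_actions):
        r_a = [r[s][a] for s in range(n_states)]
        Pv = mat_vec(P[a], v)
        per_action.append([r_a[s] + gamma * Pv[s] for s in range(n_states)])
    # Maximum over actions, taken separately for each state (component).
    return [max(per_action[a][s] for a in range(n_actions)) for s in range(n_states)]


v_star = [19.0, 20.0]                        # hand-computed optimal values
print(bellman_optimality_rhs(v_star))        # [19.0, 20.0]: a fixed point
print(bellman_optimality_rhs([0.0, 20.0]))   # [19.0, 20.0]: "always stay" is not a fixed point
```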

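This equation can be solved iteratively:

$${\bf v}_{n+1}=f({\bf v}_n)=\max_a\left[{\bf r}_a+\gamma{\bf P}_a{\bf v}_n\right] \tag{35}$$

This iteration is used in the value iteration algorithm below, which combines policy evaluation and policy improvement, to find the optimal policy. The iteration converges because the function $f$ is a contraction mapping with Lipschitz constant $\gamma<1$. That is, for any two value vectors ${\bf u}$ and ${\bf v}$:

$$\begin{aligned}\|f({\bf v})-f({\bf u})\|_\infty &=\left\|\max_a\left[{\bf r}_a+\gamma{\bf P}_a{\bf v}\right]-\max_a\left[{\bf r}_a+\gamma{\bf P}_a{\bf u}\right]\right\|_\infty \\ &\le\max_a\left\|\gamma{\bf P}_a({\bf v}-{\bf u})\right\|_\infty\le\gamma\,\max_a\|{\bf P}_a\|_\infty\,\|{\bf v}-{\bf u}\|_\infty=\gamma\,\|{\bf v}-{\bf u}\|_\infty\end{aligned} \tag{36}$$

The first inequality holds because $\vert\max_a x_a-\max_a y_a\vert\le\max_a\vert x_a-y_a\vert$ at each state. In the last step, $\|{\bf P}_a\|_\infty$ is the p-norm ($p=\infty$, the maximum absolute row sum) of a stochastic matrix, which is known to be 1. By the contraction mapping theorem, the iteration therefore converges to the unique fixed point ${\bf v}^*$ from any initial ${\bf v}_0$.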
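The contraction property (36) can also be checked empirically. This is a minimal sketch that assumes a hypothetical, randomly generated MDP with 3 states and 2 actions, using the same list layout (`P[a][s]` is row $s$ of ${\bf P}_a$, `r[s][a]` is $r(s,a)$). The names `f`, `sup_norm` and `random_distribution` are illustrative. The sketch estimates the worst observed ratio $\|f({\bf v})-f({\bf u})\|_\infty/\|{\bf v}-{\bf u}\|_\infty$ over random pairs. It then iterates (35) from two very different starting vectors to show that both reach the same fixed point.

```python
import random

# Hypothetical randomly generated MDP (illustrative only): 3 states, 2 actions.
random.seed(0)
n_states, n_actions, gamma = 3, 2, 0.9


def random_distribution(n):
    """A random probability vector (one row of a stochastic matrix)."""
    w = [random.random() + 1e-3 for _ in range(n)]
    total = sum(w)
    return [x / total for x in w]


# P[a][s] is row s of P_a, i.e. P(. | s, a); r[s][a] = r(s, a).
P = [[random_distribution(n_states) for _ in range(n_states)] for _ in range(n_actions)]
r = [[random.uniform(-1.0, 1.0) for _ in range(n_actions)] for _ in range(n_states)]


def f(v):
    """f(v) = max_a [r_a + gamma * P_a v], with the maximum taken per state."""
    return [max(r[s][a] + gamma * sum(p * x for p, x in zip(P[a][s], v))
                for a in range(n_actions))
            for s in range(n_states)]


def sup_norm(x):
    return max(abs(e) for e in x)


# Empirical check of (36): ||f(v) - f(u)||_inf / ||v - u||_inf <= gamma.
worst = 0.0
for _ in range(1000):
    u = [random.uniform(-10.0, 10.0) for _ in range(n_states)]
    v = [random.uniform(-10.0, 10.0) for _ in range(n_states)]
    num = sup_norm([a - b for a, b in zip(f(v), f(u))])
    den = sup_norm([a - b for a, b in zip(v, u)])
    worst = max(worst, num / den)

# Iterating (35) from two very different starting vectors reaches the same fixed point.
v1, v2 = [0.0] * n_states, [100.0] * n_states
for _ in range(500):
    v1, v2 = f(v1), f(v2)
print(worst <= gamma + 1e-12, sup_norm([a - b for a, b in zip(v1, v2)]) < 1e-9)  # True True
```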

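Given the complete information of the MDP model in terms of $P(s'\vert s,a)$ and $r(s,a)$, the policy iteration algorithm carries out the following two processes iteratively from some arbitrary initial policy $\pi$.

Policy evaluation: Given a deterministic policy $\pi$, find its state values $v_\pi(s)$ for all $s\in S$ by iteratively solving the Bellman equation $v_\pi(s)=r(s,\pi(s))+\gamma\sum_{s'}P(s'\vert s,\pi(s))\,v_\pi(s')$.

Policy improvement: Given $v_\pi(s)$, find an improved policy by the greedy method:

$$\pi'(s)=\arg\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v_\pi(s')\right]\;\;\;\;\;\;\;\;\forall s\in S$$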

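We see that each round of the policy iteration carries out two intertwined and interacting processes, policy evaluation and policy improvement. They run alternately, each depends on the result of the other, and each completes before the other starts. The value functions and policies therefore form a sequence $\pi_0\rightarrow v_{\pi_0}\rightarrow\pi_1\rightarrow v_{\pi_1}\rightarrow\cdots\rightarrow\pi^*\rightarrow v^*$, in which each policy is at least as good as its predecessor by (29). Since there are only finitely many deterministic policies, the iteration reaches the optimal policy in a finite number of rounds.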

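The pseudo code for the algorithm is listed below:

1. Initialize $\pi(s)$ arbitrarily and set $v(s)=0$ for all $s\in S$. Set a threshold $\epsilon$ (small positive value).
2. Policy evaluation: for all $s\in S$, compute $v'(s)=r(s,\pi(s))+\gamma\sum_{s'}P(s'\vert s,\pi(s))\,v(s')$. Then compute $\Delta=\max_s\vert v'(s)-v(s)\vert$ and set $v\leftarrow v'$. Repeat until $\Delta<\epsilon$.
3. Policy improvement: for all $s\in S$, compute $\pi'(s)=\arg\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v(s')\right]$.
4. If $\pi'=\pi$, stop and return $\pi^*=\pi$ and $v^*=v$. Otherwise set $\pi\leftarrow\pi'$ and go back to step 2.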
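The pseudo code above can be turned into a short runnable sketch. It assumes the same hypothetical list layout (`P[a][s][s2]` for $P(s_2\vert s,a)$, `r[s][a]` for $r(s,a)$), and the function name `policy_iteration` is illustrative. One deliberate choice differs slightly from a literal "$\pi'=\pi$" test. An action is changed only when the new action is strictly better, so ties between equally good actions cannot make the loop cycle.

```python
def policy_iteration(P, r, gamma, eps=1e-8):
    """Policy iteration following the pseudo code above.

    Assumed (hypothetical) layout: P[a][s][s2] = P(s2 | s, a), r[s][a] = r(s, a).
    """
    n_states, n_actions = len(r), len(P)

    def q(v, s, a):
        # r(s,a) + gamma * sum_s' P(s'|s,a) v(s')
        return r[s][a] + gamma * sum(P[a][s][s2] * v[s2] for s2 in range(n_states))

    pi = [0] * n_states          # arbitrary initial policy
    v = [0.0] * n_states
    while True:
        # Policy evaluation: iterate the Bellman equation for the fixed policy pi.
        while True:
            v_new = [q(v, s, pi[s]) for s in range(n_states)]
            delta = max(abs(x - y) for x, y in zip(v_new, v))
            v = v_new
            if delta < eps:
                break
        # Policy improvement: act greedily w.r.t. v, but change an action only if
        # it is strictly better, so ties cannot make the loop cycle.
        stable = True
        for s in range(n_states):
            best = max(range(n_actions), key=lambda a: q(v, s, a))
            if q(v, s, best) > q(v, s, pi[s]) + 1e-12:
                pi[s] = best
                stable = False
        if stable:
            return pi, v


# Hypothetical 2-state, 2-action example: action 0 = stay, action 1 = switch.
P = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
r = [[0.0, 1.0], [2.0, 0.0]]
pi_star, v_star = policy_iteration(P, r, gamma=0.9)
print(pi_star, [round(x, 4) for x in v_star])  # [1, 0] [19.0, 20.0]
```

On this example, the loop needs two rounds. The first round improves the initial policy $[0,0]$ to $[1,0]$, and the second round confirms that $[1,0]$ is stable.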

One drawback of the policy iteration method is the high computational cost of the inner iteration for policy evaluation, which runs inside the outer iteration for policy improvement. To speed up this process, we could terminate the inner iteration early, before it converges. In the extreme case, we simply combine policy evaluation and improvement into a single step. The result is the value iteration algorithm, which contains the following two steps:

Based on some initial value $v_0(s)$ for all $s\in S$ (e.g., $v_0(s)=0$), iterate the following until convergence:

$$v_{n+1}(s)=\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v_n(s')\right]\;\;\;\;\;\;\forall s\in S \tag{38}$$

Find the optimal policy by applying the greedy method to the converged $v_n(s)$:

$$\pi^*(s)=\arg\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v_n(s')\right]\;\;\;\;\;\;\forall s\in S \tag{39}$$

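Here is the pseudo code for the algorithm:

1. Initialize $v(s)=0$ for all $s\in S$. Set a threshold $\epsilon$ (small positive value).
2. For all $s\in S$, compute $v'(s)=\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v(s')\right]$. Then compute $\Delta=\max_s\vert v'(s)-v(s)\vert$ and set $v\leftarrow v'$. Repeat until $\Delta<\epsilon$.
3. For all $s\in S$, compute $\pi^*(s)=\arg\max_a\left[r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\,v(s')\right]$.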
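The value iteration pseudo code above can be sketched as follows. The sketch assumes the same hypothetical list layout (`P[a][s][s2]`, `r[s][a]`), and the function name `value_iteration` is illustrative. Each sweep computes the new vector entirely from the old one, matching the synchronous update (38). The greedy extraction (39) runs once, after convergence.

```python
def value_iteration(P, r, gamma, eps=1e-8):
    """Value iteration (38) followed by greedy policy extraction (39).

    Assumed (hypothetical) layout: P[a][s][s2] = P(s2 | s, a), r[s][a] = r(s, a).
    """
    n_states, n_actions = len(r), len(P)

    def q(v, s, a):
        # r(s,a) + gamma * sum_s' P(s'|s,a) v(s')
        return r[s][a] + gamma * sum(P[a][s][s2] * v[s2] for s2 in range(n_states))

    v = [0.0] * n_states
    while True:
        # Synchronous update: every v'(s) is computed from the old vector v.
        v_new = [max(q(v, s, a) for a in range(n_actions)) for s in range(n_states)]
        delta = max(abs(x - y) for x, y in zip(v_new, v))
        v = v_new
        if delta < eps:
            break
    # Extract the greedy policy from the converged values.
    pi = [max(range(n_actions), key=lambda a: q(v, s, a)) for s in range(n_states)]
    return pi, v


# Hypothetical 2-state, 2-action example: action 0 = stay, action 1 = switch.
P = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
r = [[0.0, 1.0], [2.0, 0.0]]
pi_star, v_star = value_iteration(P, r, gamma=0.9)
print(pi_star, [round(x, 4) for x in v_star])  # [1, 0] [19.0, 20.0]
```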

In the PI method considered above, the value functions at all states are evaluated before the policy is updated for all states, as illustrated below:

This synchronous policy iteration can be modified in two ways. The values at some, or even only one, of the states can be evaluated in place before the other state values are updated. Likewise, the policy at some, or even only one, of the states can be updated in place before the policy at the other states. The result is an asynchronous method called generalized policy iteration (GPI), which is discussed in detail in the following sections on model-free control.
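The in-place idea can be illustrated on value iteration. This sketch shows one simple asynchronous variant, in-place value iteration. Each $v(s)$ is overwritten immediately, so states visited later in the same sweep already use the new value. It assumes the same hypothetical list layout (`P[a][s][s2]`, `r[s][a]`), and the function name `in_place_value_iteration` is illustrative. The sketch only demonstrates in-place updating of state values. It is not the GPI method of the later sections.

```python
def in_place_value_iteration(P, r, gamma, eps=1e-8):
    """Asynchronous (in-place) variant of value iteration.

    Each v(s) is overwritten immediately, so later states in the same sweep
    already see the new value. Assumed (hypothetical) layout:
    P[a][s][s2] = P(s2 | s, a), r[s][a] = r(s, a).
    """
    n_states, n_actions = len(r), len(P)

    def q(v, s, a):
        # r(s,a) + gamma * sum_s' P(s'|s,a) v(s')
        return r[s][a] + gamma * sum(P[a][s][s2] * v[s2] for s2 in range(n_states))

    v = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(n_states):
            old = v[s]
            v[s] = max(q(v, s, a) for a in range(n_actions))  # in-place update
            delta = max(delta, abs(v[s] - old))
        if delta < eps:
            break
    pi = [max(range(n_actions), key=lambda a: q(v, s, a)) for s in range(n_states)]
    return pi, v


# Hypothetical 2-state, 2-action example: action 0 = stay, action 1 = switch.
P = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
r = [[0.0, 1.0], [2.0, 0.0]]
pi_star, v_star = in_place_value_iteration(P, r, gamma=0.9)
print(pi_star, [round(x, 4) for x in v_star])  # [1, 0] [19.0, 20.0]
```

Compared with the synchronous version, this variant needs only one value vector. It often propagates new information faster, because each update can use values refreshed earlier in the same sweep.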