Next: Temporal Difference (TD) Algorithms Up: Model-Free Evaluation and Control Previous: Model-Free Evaluation and Control

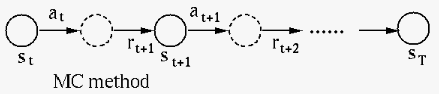

We first consider a simple problem of evaluating an

existing policy

![$v_\pi(s)=E[\,G_t\;]$](img255.svg)

of all possible next states each

weighted by the corresponding transition probability

of all possible next states each

weighted by the corresponding transition probability

, which is no longer available now in the

model-free case. We can still find the value function

based on the MC method by running multiple sample episodes

in the environment, and estimate

, which is no longer available now in the

model-free case. We can still find the value function

based on the MC method by running multiple sample episodes

in the environment, and estimate  as the average

of the actual returns

as the average

of the actual returns  , which in turn can be calculated

as the sum of discounted rewards

, which in turn can be calculated

as the sum of discounted rewards

from

the current state

from

the current state  onward to the terminal state

onward to the terminal state

, found at the end of the episode (assumed to have

finite horizon).

, found at the end of the episode (assumed to have

finite horizon).

This method has two versions, first-visit and every-visit, depending on whether only the first or every visit to a state in the trajectory of an episode is counted in calculation of the return. The pseudo code below is for first-visit version due to the if statement, which can be removed for the every-visit version.

to be evaluated

to be evaluated

![$G_t=[\;\;]$](img258.svg) (empty lists) for all

(empty lists) for all

to get sample data:

to get sample data:

(for each step)

(for each step)

(first visit)

(first visit)

![$G_t=[G_t,G]$](img265.svg) (append

(append  to list

to list  )

)

In the every-visit case, all sample returns

In the code above, the function average finds the average of all elements of a list, only when all sample returns are available at the end of each episode. A more computationally efficient method (in terms of both space and complexity) is to find the average incrementally as in Eq. (49). Based on this incremental average, policy evaluation in terms of both the state and action values can also be carried out by the code below:

to get

to get

(for each step)

(for each step)

is visited the first time

is visited the first time

to get

to get

pairs visited

pairs visited

is visited the first time

is visited the first time

We next consider the MC algorithm for model-free control,

based on the generalized policy iteration of alternating

and interacting policy evaluation and policy improvement.

Specifically, while sampling the environment by following

an existing policy

The pseudo code for this lgorithm is listed below.

, and a policy

, and a policy

soft (arbitrarily)

soft (arbitrarily)

to get

to get

is visited the first time

is visited the first time

-greedy policy

-greedy policy  is based on

action value function

is based on

action value function  (maximized by the greedy

action). Through out the iteration, the policy is modified

following the action value function, while at the same time

the action value function is modified based on the policy,

until the process converges to the desired optimality.

(maximized by the greedy

action). Through out the iteration, the policy is modified

following the action value function, while at the same time

the action value function is modified based on the policy,

until the process converges to the desired optimality.

We note that the optimal policy