
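Markov decision process (MDP) is the mathematical framework for reinforcement learning. To understand MDP, we first consider the basic concept of a Markov process or Markov chain, a stochastic model of a system that can be characterized by a set of states $S=\{s_1,\cdots,s_N\}$. The system is memoryless: given its current state, its next state is independent of all its past states,

$$P(s_{t+1}\,\vert\,s_t,\,s_{t-1},\cdots,s_0)=P(s_{t+1}\,\vert\,s_t) \tag{1}$$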

For example, if the state of a helicopter is described by its linear and angular position and velocity, from which its future position and velocity can be completely determined, then it can be modeled by a Markov process; but if the state is described only by its position, then its past positions are needed to determine its future (e.g., to find its velocity), and it is not a Markov process.

All transition probabilities $P_{ij}=P(s_{t+1}=s_j\,\vert\,s_t=s_i)$ can be organized as an $N\times N$ state transition matrix:

$${\bf P}=\left[\begin{array}{ccc} P_{11} & \cdots & P_{1N}\\ \vdots & \ddots & \vdots \\ P_{N1} & \cdots & P_{NN}\end{array}\right] \tag{2}$$

As the probabilities of the transitions from any current state $s_i$ to the $N$ possible next states $s_j$ have to sum up to 1, we have

$$\sum_{j=1}^N P_{ij}=1,\qquad (i=1,\cdots,N) \tag{3}$$

Any matrix satisfying this property is called a stochastic matrix.

In the following, our discussion is mostly concentrated on the general transition from the current state $s_t=s$ to the next state $s_{t+1}=s'$.

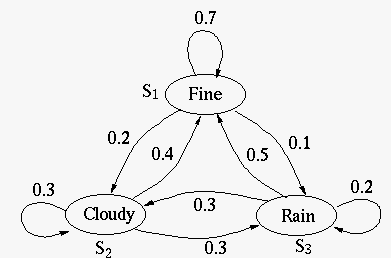

Example: Weather in Los Angeles:

$${\bf P}=\left[\begin{array}{ccc} 0.7 & 0.2 & 0.1 \\ 0.4 & 0.3 & 0.3 \\ 0.5 & 0.2 & 0.3 \end{array}\right] \tag{4}$$
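As a minimal sketch of how the matrix in Eq. (4) can be used, the Python snippet below checks that each row sums to 1 and propagates a state distribution two steps forward. The source does not say which weather condition each row stands for, so the states are simply indexed 0, 1, 2, and the starting distribution is an assumption made for illustration.

```python
# Transition matrix from Eq. (4); row i holds P(next = j | current = i).
P = [
    [0.7, 0.2, 0.1],
    [0.4, 0.3, 0.3],
    [0.5, 0.2, 0.3],
]

def is_stochastic(matrix, tol=1e-12):
    """True if all entries are non-negative and every row sums to 1."""
    return all(
        min(row) >= 0 and abs(sum(row) - 1.0) < tol
        for row in matrix
    )

def step(dist, matrix):
    """Propagate a distribution one step: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]

# Hypothetical start: the chain is in state 0 with certainty.
dist = [1.0, 0.0, 0.0]
for _ in range(2):
    dist = step(dist, P)

print(is_stochastic(P), dist)
```

After two steps the distribution is still a valid probability vector, because multiplying by a stochastic matrix preserves the total probability.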

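We next consider a Markov reward process (MRP), represented by a tuple $\langle S,\,P,\,R,\,\gamma\rangle$ of states, state transition probabilities, rewards, and a discount factor $\gamma \in[0,\;1]$.

The sequence of state transitions of an MRP from an initial state $s_0$ to a terminal state $s_T$ is called an episode.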

Both the reward

|

(5) |

The reward received after leaving the current state $s_t=s$ and arriving at the next state $s_{t+1}=s'$ is denoted by $r_{t+1}$, instead of $r_t$. The index $s'\in S$ for the summation over all states will be abbreviated to $s'$ in the subsequent discussion. The expectation of the reward at state $s$ is

$$r(s)=E[\,r_{t+1}\,\vert\,s_t=s\,] \tag{6}$$

We define the return $G_t$ as the accumulation of the discounted future rewards received after time step $t$:

$$G_t=r_{t+1}+\gamma\,r_{t+2}+\gamma^2 r_{t+3}+\cdots=\sum_{k=0}^\infty \gamma^k r_{t+k+1}=r_{t+1}+\gamma\,G_{t+1} \tag{7}$$

and the value $v(s)$ at state $s$ as the expectation of the return, which can be further expressed recursively in terms of the values $v(s')$ of all possible next states $s'$, each reached with transition probability $P(s'\vert s)=P(s_{t+1}=s'\,\vert\,s_t=s)$:

$$v(s)=E[\,G_t\,\vert\,s_t=s\,]=E[\,r_{t+1}+\gamma\,G_{t+1}\,\vert\,s_t=s\,]=r(s)+\gamma\sum_{s'}P(s'\vert s)\,v(s') \tag{8}$$

where $r(s)$ is given in Eq. (6). The value of a terminal state $s_T$ at the end of an episode is zero, as there will be no next state and thereby no more future reward.
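The accumulation of discounted rewards along one episode, together with the convention that nothing more is collected after the terminal state, can be sketched in a few lines of Python. The function name `discounted_return` and the reward list are hypothetical and chosen only for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * rewards[k], where rewards[k] plays the role of r_{t+k+1}."""
    g = 0.0  # return after the terminal state: no more future reward
    # Accumulate backward using G_t = r_{t+1} + gamma * G_{t+1}.
    for reward in reversed(rewards):
        g = reward + gamma * g
    return g

rewards = [1.0, 1.0, 1.0]                  # hypothetical rewards along one episode
G = discounted_return(rewards, gamma=0.5)  # 1 + 0.5 + 0.25
print(G)
```

Working backward from the end of the episode avoids computing powers of the discount factor explicitly and mirrors the recursive form of the return.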

This equation is called the Bellman equation, by which the value $v(s)$ of the current state is expressed recursively in terms of the values $v(s')$ of its possible next states.

The Bellman equation in Eq. (8) holds for all states $s\in S$, and can therefore be written in vector form as

$${\bf v}={\bf r}+\gamma\,{\bf P}{\bf v} \tag{9}$$

where ${\bf v}=[v(s_1),\cdots,v(s_N)]^T$, ${\bf r}=[r(s_1),\cdots,r(s_N)]^T$, and ${\bf P}$ is the stochastic matrix in Eq. (2). Solving this linear equation system of size $N$ we find

$${\bf v}=({\bf I}-\gamma{\bf P})^{-1}{\bf r} \tag{10}$$

Such a solution exists as matrix ${\bf I}-\gamma{\bf P}$ is invertible whenever $\gamma<1$. This can be shown by noting that all eigenvalues $\lambda$ of the stochastic matrix ${\bf P}$ are not greater than 1 in absolute value: $\vert\lambda\vert\le 1$ (its entries are non-negative and each row sums to 1, so $\Vert{\bf P}\Vert_\infty=1$), and all eigenvalues $\gamma\lambda$ of $\gamma{\bf P}$ are smaller than 1 in absolute value: $\vert\gamma\lambda\vert<1$, i.e., every eigenvalue $1-\gamma\lambda$ of ${\bf I}-\gamma{\bf P}$ is nonzero. Consequently the determinant $\det({\bf I}-\gamma{\bf P})$ of this coefficient matrix, as the product of all its eigenvalues, is nonzero and therefore matrix ${\bf I}-\gamma{\bf P}$ is invertible.
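A minimal sketch of the direct solution follows: it builds the coefficient matrix $({\bf I}-\gamma{\bf P})$ for the weather chain of Eq. (4) and solves $({\bf I}-\gamma{\bf P}){\bf v}={\bf r}$ by Gaussian elimination in plain Python. The reward vector `r`, the discount factor `gamma` and the helper `solve` are assumptions introduced only for this illustration; they do not come from the text.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda k: abs(M[k][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for k in range(col + 1, n):
            f = M[k][col] / M[col][col]
            for c in range(col, n + 1):
                M[k][c] -= f * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

P = [[0.7, 0.2, 0.1], [0.4, 0.3, 0.3], [0.5, 0.2, 0.3]]  # matrix of Eq. (4)
r = [1.0, 0.0, -1.0]  # hypothetical expected rewards r(s)
gamma = 0.9           # hypothetical discount factor, must be < 1
n = len(P)

# Coefficient matrix I - gamma * P.
A = [[(1.0 if i == j else 0.0) - gamma * P[i][j] for j in range(n)]
     for i in range(n)]
v = solve(A, r)

# Bellman residual: how far v is from satisfying v = r + gamma * P v.
residual = max(
    abs(v[i] - (r[i] + gamma * sum(P[i][j] * v[j] for j in range(n))))
    for i in range(n)
)
print(v, residual)
```

The residual printed at the end should be at the level of floating-point round-off, which confirms that the computed vector satisfies the Bellman equation in every state.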

Alternatively, the Bellman equation in Eq. (8) can also be solved iteratively by the general method of dynamic programming (DP), which solves a multi-stage planning problem by backward induction and finds the value function recursively. We first rewrite the Bellman equation as

$${\bf v}=B({\bf v})={\bf r}+\gamma\,{\bf P}{\bf v} \tag{11}$$

where $B({\bf v})$ is defined as a vector-valued function (an operator) applied to the vector argument ${\bf v}$. Now the Bellman equation can be solved iteratively from an arbitrary initial value, such as ${\bf v}_0={\bf 0}$:

$${\bf v}_{n+1}=B({\bf v}_n)={\bf r}+\gamma\,{\bf P}{\bf v}_n,\qquad (n=0,1,2,\cdots) \tag{12}$$

For any $\gamma<1$ this iteration will always converge to the root of the equation, the fixed point of function $B({\bf v})$, as it is a contraction mapping satisfying

$$\Vert B({\bf v}_1)-B({\bf v}_2)\Vert_\infty=\gamma\,\Vert{\bf P}({\bf v}_1-{\bf v}_2)\Vert_\infty\le\gamma\,\Vert{\bf P}\Vert_\infty\,\Vert{\bf v}_1-{\bf v}_2\Vert_\infty=\gamma\,\Vert{\bf v}_1-{\bf v}_2\Vert_\infty \tag{13}$$

That the function $B({\bf v})={\bf r}+\gamma\,{\bf P}{\bf v}$ is a contraction can also be proven by showing the norm of its Jacobian matrix, its derivative with respect to its vector argument ${\bf v}$, is smaller than 1. We first find the Jacobian matrix

$${\bf J}_B=\frac{d}{d{\bf v}}B({\bf v})=\frac{d}{d{\bf v}}\left({\bf r}+\gamma\,{\bf P}{\bf v}\right)=\gamma\,{\bf P} \tag{14}$$

and then its infinity norm, the maximum absolute row sum:

$$\Vert{\bf J}_B\Vert_\infty=\gamma\,\Vert{\bf P}\Vert_\infty=\gamma\,\max_i\sum_{j=1}^N\vert P_{ij}\vert=\gamma \tag{15}$$

which is smaller than 1 when $\gamma<1$.
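The iterative solution and the contraction property can both be checked numerically. The sketch below repeatedly applies the map ${\bf v}\mapsto{\bf r}+\gamma{\bf P}{\bf v}$ to the weather chain of Eq. (4), and also measures how much the map shrinks the distance between two arbitrary vectors in the maximum norm. The rewards `r`, the discount factor `gamma`, the test vectors and the function names are assumptions made for illustration.

```python
P = [[0.7, 0.2, 0.1], [0.4, 0.3, 0.3], [0.5, 0.2, 0.3]]  # matrix of Eq. (4)
r = [1.0, 0.0, -1.0]  # hypothetical expected rewards r(s)
gamma = 0.9           # hypothetical discount factor, must be < 1

def bellman(v):
    """Apply the map v -> r + gamma * P v."""
    n = len(v)
    return [r[i] + gamma * sum(P[i][j] * v[j] for j in range(n))
            for i in range(n)]

def max_norm_diff(u, w):
    """Infinity-norm distance between two vectors."""
    return max(abs(a - b) for a, b in zip(u, w))

# Contraction check on two arbitrary vectors: the ratio should not exceed gamma.
u, w = [5.0, -3.0, 2.0], [0.0, 4.0, -1.0]
ratio = max_norm_diff(bellman(u), bellman(w)) / max_norm_diff(u, w)

# Fixed-point iteration from the zero vector.
v = [0.0, 0.0, 0.0]
for iteration in range(1000):
    v_next = bellman(v)
    gap = max_norm_diff(v_next, v)
    v = v_next
    if gap < 1e-12:
        break

print(ratio, iteration, v)
```

Because each sweep shrinks the error by at least the factor `gamma`, the number of sweeps needed grows as `gamma` approaches 1, which is the practical cost of choosing a weakly discounted problem.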

In summary, the Bellman equation in Eq. (8) can be solved by either of the two methods in Eqs. (10) and (12), so long as $\gamma<1$.

Based on the definition of MRP, we further define a Markov decision process (MDP) as an MRP with a certain decision-making rule called a policy, denoted by $\pi(a\vert s)$, the probability of taking action $a$ in state $s$.

As there are

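Following a given policy from an initial state $s_0$, the agent goes through a sequence of states, actions and rewards, called a trajectory, until it reaches a terminal state $s_T$:

$$s_0,\;a_0,\;r_1,\;s_1,\;a_1,\;r_2,\;s_2,\;\cdots,\;s_{T-1},\;a_{T-1},\;r_T,\;s_T \tag{16}$$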

Here the state $s_t$ visited at time step $t$ of an episode can be any of the $N$ states in the state set $S$ of the MDP, not to be confused with the $t$-th state in $S$. Note that in an episode some of the states in $S$ may be visited multiple times, while some others may never be visited. Also note that due to the random nature of the MDP, following the same policy may not result in the same trajectory.
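To illustrate that one policy can produce different trajectories, the following sketch samples a few episodes from a small MDP. The MDP itself (two ordinary states, one terminal state, actions named "stay" and "go"), its probabilities, the policy and all function names are hypothetical; none of them come from the text.

```python
import random

# Hypothetical MDP: states 0 and 1 are ordinary, state 2 is terminal.
# P[s][a] lists (next_state, probability) pairs; all numbers are made up.
P = {
    0: {"stay": [(0, 0.8), (1, 0.2)], "go": [(1, 0.7), (2, 0.3)]},
    1: {"stay": [(1, 0.6), (0, 0.4)], "go": [(2, 0.9), (0, 0.1)]},
}
# Random policy: policy[s][a] is the probability of taking action a in state s.
policy = {0: {"stay": 0.5, "go": 0.5}, 1: {"stay": 0.2, "go": 0.8}}
TERMINAL = 2

def sample(options, rng):
    """Draw one item from a list of (item, probability) pairs."""
    items, probs = zip(*options)
    return rng.choices(items, weights=probs, k=1)[0]

def run_episode(s0, rng, max_steps=10_000):
    """Return the visited sequence [s_0, a_0, s_1, a_1, ..., s_T]."""
    trajectory, s = [s0], s0
    for _ in range(max_steps):
        if s == TERMINAL:
            break
        a = sample(list(policy[s].items()), rng)  # action drawn from the policy
        s = sample(P[s][a], rng)                  # next state drawn from P
        trajectory += [a, s]
    return trajectory

rng = random.Random(0)  # fixed seed so the run is repeatable
episodes = [run_episode(0, rng) for _ in range(5)]
for episode in episodes:
    print(episode)
```

Each printed list starts in state 0 and ends in the terminal state, but the length and the states visited in between generally vary from episode to episode.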

Along the trajectory of an episode, an accumulation of discounted rewards from all states visited will be received. Our goal is to find the optimal policy as a sequence of actions

In an MDP, both the next state

received after arriving at the

current is

Following a specific random policy $\pi(a\vert s)$, the agent takes an action $a$ to transit from the current state $s$ to the next state $s'$ with probability

$$P_\pi(s'\vert s)=\sum_{a\in A(s)}\pi(a\vert s)\;P(s'\vert s,a) \tag{19}$$

where $P(s'\vert s,a)$ is the probability of arriving at $s'$ after taking action $a$ in state $s$, and receives the expected reward in state $s$:

$$r_\pi(s)=E_\pi[\,r_{t+1}\,\vert\,s_t=s\,]=\sum_{a\in A(s)}\pi(a\vert s)\;r(s,a) \tag{20}$$

where $E_\pi$ denotes the expectation with respect to a certain policy $\pi$. The summation over all available actions $a\in A(s)$ in state $s$ will be abbreviated to $a$ in the following.
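The policy-weighted sum in Eq. (20) is easy to evaluate directly. The sketch below computes $r_\pi(s)$ for a tiny MDP and, as an assumption about the analogous form, averages the transition probabilities over the policy in the same way to obtain a state-to-state matrix `P_pi`. The MDP, its numbers and all names are hypothetical.

```python
# Hypothetical two-state, two-action MDP; every number below is made up.
states, actions = [0, 1], ["left", "right"]
P = {  # P[s][a][s2] = probability of moving to s2 after action a in state s
    0: {"left": [0.9, 0.1], "right": [0.2, 0.8]},
    1: {"left": [0.7, 0.3], "right": [0.1, 0.9]},
}
r = {0: {"left": 0.0, "right": 1.0}, 1: {"left": 2.0, "right": -1.0}}   # r(s, a)
pi = {0: {"left": 0.5, "right": 0.5}, 1: {"left": 0.25, "right": 0.75}}  # pi(a | s)

# Expected reward under the policy, as in Eq. (20): sum_a pi(a|s) * r(s, a).
r_pi = [sum(pi[s][a] * r[s][a] for a in actions) for s in states]

# Policy-averaged transition matrix (assumed analogous form):
# sum_a pi(a|s) * P(s2 | s, a).
P_pi = [[sum(pi[s][a] * P[s][a][s2] for a in actions) for s2 in states]
        for s in states]

print(r_pi)
print(P_pi)
```

Once `r_pi` and `P_pi` are available, the MDP under a fixed policy behaves like an MRP, so either solution method for the MRP Bellman equation can be reused unchanged.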

We further define two important functions with respect to a given policy $\pi$:

The state value function $v_\pi(s)$ is the expected return at each state $s$ of the MDP while following policy $\pi$, similar to the value function of an MRP as $v(s)$ in Eq. (8), but now depending on the action $a$ chosen by the policy as well as on the state $s$:

$$v_\pi(s)=\sum_a\pi(a\vert s)\;q_\pi(s,a) \tag{21}$$

where $q_\pi(s,a)$ is defined below.

The action value function $q_\pi(s,a)$ is the expected return of taking a specific action $a$ (chosen regardless of policy $\pi$) in state $s$, and then following $\pi$ in all subsequent states:

$$q_\pi(s,a)=r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\;v_\pi(s')=r(s,a)+\gamma\sum_{s'}P(s'\vert s,a)\sum_{a'}\pi(a'\vert s')\;q_\pi(s',a') \tag{22}$$

where $P(s'\vert s,a)$ is the probability of arriving at $s'$ after taking action $a$ in state $s$. Here $q_\pi(s,a)$ is recursively represented as a weighted sum of $q_\pi(s',a')$ over all next state-action pairs, based on Eq. (21).
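The mutual dependence of the state value and action value functions can be sketched as an iterative policy evaluation: the action values are computed from the current state values, then the state values are recomputed as the policy-weighted average of the action values, until the two agree. The MDP, the policy, the discount factor and the helper names below are hypothetical and serve only as an illustration.

```python
# Hypothetical two-state, two-action MDP; every number below is made up.
states, actions = [0, 1], ["left", "right"]
P = {  # P[s][a][s2] = probability of moving to s2 after action a in state s
    0: {"left": [0.9, 0.1], "right": [0.2, 0.8]},
    1: {"left": [0.7, 0.3], "right": [0.1, 0.9]},
}
r = {0: {"left": 0.0, "right": 1.0}, 1: {"left": 2.0, "right": -1.0}}   # r(s, a)
pi = {0: {"left": 0.5, "right": 0.5}, 1: {"left": 0.25, "right": 0.75}}  # pi(a | s)
gamma = 0.9  # assumed discount factor, < 1

def q_from_v(v):
    """q(s, a) = r(s, a) + gamma * sum_s2 P(s2 | s, a) * v(s2)."""
    return {
        s: {a: r[s][a] + gamma * sum(P[s][a][s2] * v[s2] for s2 in states)
            for a in actions}
        for s in states
    }

def v_from_q(q):
    """v(s) = sum_a pi(a | s) * q(s, a)."""
    return [sum(pi[s][a] * q[s][a] for a in actions) for s in states]

# Iterative policy evaluation: alternate the two relations until they agree.
v = [0.0 for _ in states]
for _ in range(2000):
    v = v_from_q(q_from_v(v))

q = q_from_v(v)
mismatch = max(
    abs(v[s] - sum(pi[s][a] * q[s][a] for a in actions)) for s in states
)
print(v, q, mismatch)
```

The final `mismatch` measures how well the converged values satisfy the relation between the two functions; it should be at round-off level once the iteration has settled.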

The state-action value

As a function of the state-action pair

In particular, if the policy is deterministic with

|

(23) |

, same as in Eq. (12).


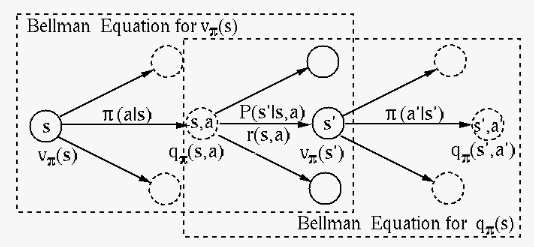

The figure above illustrates the Bellman equations in Eqs. (21) and (22), showing the bootstrapping of the state value function in the dashed box on the left, and that of the action value function in the dashed box on the right. Specifically, here the word bootstrapping means the iterative method that updates the estimated value at a state