
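Here we consider a set of methods that find the solution $x^*$ of a single-variable nonlinear equation $f(x)=0$ by iteratively searching an interval in which $x^*$ is known to lie.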

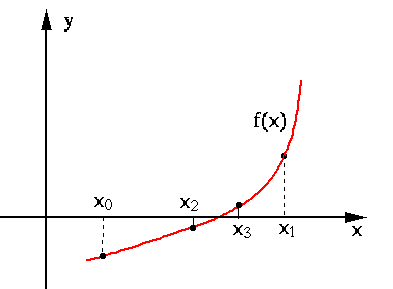

The bisection search

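This method requires two initial guesses $x_0<x_1$ satisfying $f(x_0)f(x_1)<0$. As $f(x_0)$ and $f(x_1)$ have opposite signs, a continuous function $f(x)$ must cross zero somewhere in between, i.e., $x_0<x^*<x_1$.

Given two such end points, we pick a third point $x_2$ between them and keep whichever of the two sections $[x_0,\,x_2]$ or $[x_2,\,x_1]$ still has end points of opposite signs, so that it still contains the solution. Repeating this step shrinks the search interval around $x^*$.

While any point between the two end points can be chosen for the next iteration, we want to avoid the worst possible case in which the solution always happens to be in the larger of the two sections. We therefore choose the middle point $x_2=(x_0+x_1)/2$, which halves the interval whichever section is kept:

$$x_0\leftarrow x_2\ \ \text{if } f(x_2)<0,\qquad x_1\leftarrow x_2\ \ \text{if } f(x_2)>0$$

Here, without loss of generality, we have assumed $f(x_0)<0<f(x_1)$.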

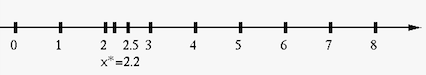

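After $n$ iterations the interval known to contain the root has been halved $n$ times, so the error $e_n$ of the estimate after the $n$th iteration is bounded in terms of the initial interval length $x_1-x_0$:

$$|e_n|\le\frac{x_1-x_0}{2^n} \tag{12}$$

Note that the error bound is halved by each iteration:

$$|e_{n+1}|\approx\frac{1}{2}\,|e_n| \tag{13}$$

so the convergence is linear (order $p=1$), and the rate of convergence is $\mu=1/2$:

$$\lim_{n\rightarrow\infty}\frac{|e_{n+1}|}{|e_n|^p}=\mu=\frac{1}{2},\qquad p=1 \tag{14}$$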

Compared to other methods to be considered later, the bisection method converges rather slowly, but one of its advantages is that no derivative of the given function is needed. This means the given function $f(x)$ needs only to be continuous; it does not need to be differentiable.

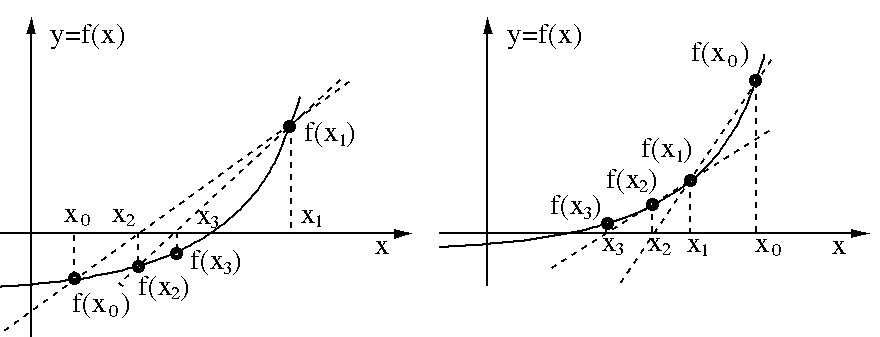
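The bisection search described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `bisect`, the tolerance `tol`, the iteration cap `max_iter`, and the test function $x^3-x-2$ are all assumptions made for the example.

```python
def bisect(f, x0, x1, tol=1e-12, max_iter=200):
    """Bisection search for a root of f between x0 and x1.

    Assumes f is continuous and f(x0), f(x1) have opposite signs.
    Returns (estimate, number of iterations used).
    """
    f0, f1 = f(x0), f(x1)
    if f0 == 0.0:
        return x0, 0
    if f1 == 0.0:
        return x1, 0
    if f0 * f1 > 0:
        raise ValueError("f(x0) and f(x1) must have opposite signs")
    for n in range(1, max_iter + 1):
        x2 = 0.5 * (x0 + x1)              # midpoint halves the interval
        f2 = f(x2)
        # stop on an exact zero or when the half-width is below tol
        if f2 == 0.0 or 0.5 * abs(x1 - x0) < tol:
            return x2, n
        if f0 * f2 < 0:                   # sign change between x0 and x2
            x1, f1 = x2, f2
        else:                             # sign change between x2 and x1
            x0, f0 = x2, f2
    return 0.5 * (x0 + x1), max_iter


# Hypothetical test function with a single root between 1 and 2
g = lambda x: x**3 - x - 2
root, steps = bisect(g, 1.0, 2.0)
print(root, steps)
```

Only the signs of the function values are used to choose the section to keep, which is why no derivative is required.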

The Secant method

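As in the bisection method, here again we assume there are two initial values $x_0$ and $x_1$ available, but they do not need to bracket the root, i.e., $f(x_0)f(x_1)<0$ is not required. The function $f(x)$ is approximated by the secant, the straight line through the two points $(x_0,\,f(x_0))$ and $(x_1,\,f(x_1))$:

$$y=f(x_1)+\frac{f(x_1)-f(x_0)}{x_1-x_0}\,(x-x_1) \tag{15}$$

At the zero crossing, $y=0$ and $x=x_2$, and we get

$$x_2=x_1-\frac{f(x_1)}{\hat{f}'(x_1)} \tag{16}$$

where $\hat{f}'(x_1)$ is the finite difference that approximates the derivative $f'(x_1)$:

$$\hat{f}'(x_1)=\frac{f(x_1)-f(x_0)}{x_1-x_0}\approx f'(x_1) \tag{17}$$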

The new value $x_2$ obtained above, as a better estimate of the root than either $x_0$ or $x_1$, is used to replace one of them:

- If $f(x_0)f(x_1)<0$, then $x_i$ ($i=0,1$) satisfying $f(x_i)f(x_2)>0$ is replaced by $x_2$ (same as the bisection method);
- If $f(x_0)f(x_1)>0$, then $x_i$ with greater $|f(x_i)|$ is replaced by $x_2$.

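The iteration can then be written in general as

$$x_{n+1}=x_n-f(x_n)\,\frac{x_n-x_{n-1}}{f(x_n)-f(x_{n-1})}=\frac{x_{n-1}f(x_n)-x_nf(x_{n-1})}{f(x_n)-f(x_{n-1})} \tag{18}$$

In case $x_{n-1}\rightarrow x_n$, the finite difference becomes the derivative $f'(x_n)$ and the iteration becomes that of the Newton-Raphson method:

$$x_{n+1}=x_n-\frac{f(x_n)}{f'(x_n)} \tag{19}$$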
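As an illustration, the secant iteration can be sketched in Python as follows. This sketch uses the simplest variant, which always keeps the two most recent estimates, rather than the replacement rule based on the signs and magnitudes of the function values. The names `secant`, `tol`, `max_iter`, and the test function are assumptions made for the example.

```python
def secant(f, x0, x1, tol=1e-12, max_iter=50):
    """Secant iteration keeping the two most recent estimates.

    Returns (estimate, number of iterations used).
    """
    f0, f1 = f(x0), f(x1)
    for n in range(1, max_iter + 1):
        if f1 == f0:
            # the secant line is horizontal and has no zero crossing
            raise ZeroDivisionError("f(x_n) == f(x_{n-1})")
        # zero crossing of the line through (x0, f0) and (x1, f1)
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        if abs(x2 - x1) < tol:
            return x2, n
        x0, f0 = x1, f1                   # discard the oldest point
        x1, f1 = x2, f(x2)
    raise RuntimeError("secant iteration did not converge")


# Hypothetical test function with a root between 1 and 2
g = lambda x: x**3 - x - 2
root, steps = secant(g, 1.0, 2.0)
print(root, steps)
```

Unlike the bisection search, this variant does not keep the root bracketed, so a poor pair of initial values can send the iteration away from the root.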

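We now consider the order of convergence of the secant method. Let $x^*$ be the root at which $f(x^*)=0$, and $e_n=x_n-x^*$ be the error of the $n$th estimate. Subtracting $x^*$ from both sides of the secant iteration we get

$$e_{n+1}=x_{n+1}-x^*=\frac{x_{n-1}f(x_n)-x_nf(x_{n-1})}{f(x_n)-f(x_{n-1})}-x^*=\frac{e_{n-1}f(x_n)-e_nf(x_{n-1})}{f(x_n)-f(x_{n-1})} \tag{20}$$

Consider the Taylor expansion of $f(x_n)$ around $x^*$:

$$f(x_n)=f(x^*+e_n)=f(x^*)+f'(x^*)e_n+\frac{1}{2}f''(x^*)e_n^2+O(e_n^3)=f'(x^*)e_n+\frac{1}{2}f''(x^*)e_n^2+O(e_n^3) \tag{21}$$

(as $f(x^*)=0$) and similarly

$$f(x_{n-1})=f'(x^*)e_{n-1}+\frac{1}{2}f''(x^*)e_{n-1}^2+O(e_{n-1}^3) \tag{22}$$

Substituting these into the expression for $e_{n+1}$ above we get

$$\begin{aligned}
e_{n+1}&=\frac{e_{n-1}[f'(x^*)e_n+\frac{1}{2}f''(x^*)e_n^2+O(e_n^3)]-e_n[f'(x^*)e_{n-1}+\frac{1}{2}f''(x^*)e_{n-1}^2+O(e_{n-1}^3)]}{[f'(x^*)e_n+\frac{1}{2}f''(x^*)e_n^2+O(e_n^3)]-[f'(x^*)e_{n-1}+\frac{1}{2}f''(x^*)e_{n-1}^2+O(e_{n-1}^3)]}\\
&=\frac{\frac{1}{2}f''(x^*)\,e_ne_{n-1}(e_n-e_{n-1})+\cdots}{f'(x^*)(e_n-e_{n-1})+\frac{1}{2}f''(x^*)(e_n^2-e_{n-1}^2)+\cdots}\\
&=\frac{\frac{1}{2}f''(x^*)\,e_ne_{n-1}+\cdots}{f'(x^*)+\frac{1}{2}f''(x^*)(e_n+e_{n-1})+\cdots}
\end{aligned} \tag{23}$$

where the first-order terms in the numerator cancel, the common factor $(e_n-e_{n-1})$ is divided out in the last step, and the dots denote higher order terms. When $n\rightarrow\infty$ and $e_n\rightarrow 0$, the lowest order terms in both the numerator and denominator become the dominant terms as all other higher order terms approach zero, and we have

$$e_{n+1}\approx\frac{f''(x^*)}{2f'(x^*)}\,e_ne_{n-1}=C\,e_ne_{n-1} \tag{24}$$

where $C=f''(x^*)/2f'(x^*)$. To find the order of convergence, we need to find $p$ in

$$|e_{n+1}|=\mu\,|e_n|^p \tag{25}$$

Applying the same relation one step earlier, $|e_n|=\mu\,|e_{n-1}|^p$, and solving for $|e_{n-1}|$ we get

$$|e_{n-1}|=\left(\frac{|e_n|}{\mu}\right)^{1/p} \tag{26}$$

From (24) we also have

$$|e_{n+1}|=|C|\,|e_n|\,|e_{n-1}| \tag{27}$$

Substituting (25) and (26) into (27) gives

$$\mu\,|e_n|^p=|C|\,|e_n|\left(\frac{|e_n|}{\mu}\right)^{1/p}=|C|\,\mu^{-1/p}\,|e_n|^{1+1/p} \tag{28}$$

For this to hold for all $n$, both the exponents and the coefficients on the two sides must match:

$$p=1+\frac{1}{p},\qquad \mu=|C|\,\mu^{-1/p} \tag{29}$$

The first condition is the quadratic equation

$$p^2-p-1=0 \tag{30}$$

whose positive root is

$$p=\frac{1+\sqrt{5}}{2}\approx 1.618 \tag{31}$$

and, as $1+1/p=p$ and $1/p=p-1$, the second condition gives

$$\mu=|C|^{1/p}=\left|\frac{f''(x^*)}{2f'(x^*)}\right|^{p-1} \tag{32}$$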

The order of convergence of the secant method is therefore $p=(1+\sqrt{5})/2\approx 1.618$, which is better than the linear convergence of the bisection search (which does not use the information of the specific function $f(x)$), but worse than the quadratic convergence of the Newton-Raphson method based on the function derivative $f'(x)$ instead of the approximation $\hat{f}'(x)$, to be considered in the next section.
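The error relation behind this result, $e_{n+1}\approx C\,e_ne_{n-1}$ with $C=f''(x^*)/2f'(x^*)$, can be checked numerically. The sketch below is only an illustration under assumed choices: the test function $f(x)=x^2-2$ (root $\sqrt{2}$, so $C=1/(2\sqrt{2})$), the initial values 1 and 2, and the helper name `secant_iterates` are not from the text above.

```python
import math


def secant_iterates(f, x0, x1, n_steps):
    """Return the list [x_0, x_1, x_2, ...] produced by the secant iteration."""
    xs = [x0, x1]
    for _ in range(n_steps):
        f0, f1 = f(xs[-2]), f(xs[-1])
        if f1 == f0:                      # horizontal secant: stop early
            break
        xs.append(xs[-1] - f1 * (xs[-1] - xs[-2]) / (f1 - f0))
    return xs


f = lambda x: x * x - 2.0                 # assumed test function
x_star = math.sqrt(2.0)
xs = secant_iterates(f, 1.0, 2.0, 5)      # x_0 ... x_6
errs = [x - x_star for x in xs]

# f'(x*) = 2 x*, f''(x*) = 2, hence C = f''/(2 f') = 1 / (2 x*)
C = 1.0 / (2.0 * x_star)

# ratios e_{n+1} / (e_n e_{n-1}) should approach C as n grows
ratios = [errs[n + 1] / (errs[n] * errs[n - 1]) for n in range(3, len(errs) - 1)]
for r in ratios:
    print(r)
print("predicted C =", C)
```

Only a few iterations are used because the errors soon reach the limit of double precision, after which the ratios are dominated by rounding noise.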

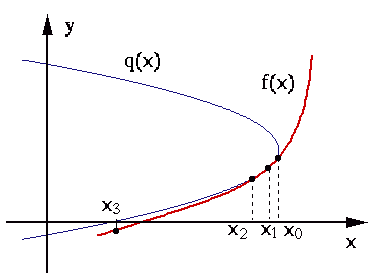

The inverse quadratic interpolation

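Similar to the secant method that approximates the given function $f(x)$ by a straight line through two points, the inverse quadratic interpolation method approximates the function by a quadratic curve through three points $(x_i,\,y_i=f(x_i))$, $i=0,1,2$.

In general, any function $y=f(x)$ can be approximated by the quadratic function $q(x)$ that passes through three such points, given by the Lagrange interpolation:

$$q(x)=\frac{(x-x_1)(x-x_2)}{(x_0-x_1)(x_0-x_2)}\,y_0+\frac{(x-x_0)(x-x_2)}{(x_1-x_0)(x_1-x_2)}\,y_1+\frac{(x-x_0)(x-x_1)}{(x_2-x_0)(x_2-x_1)}\,y_2 \tag{33}$$

However, finding the zero crossing of $q(x)$ requires solving a quadratic equation, which may have no real root. Instead, we exchange the roles of $x$ and $y$ and use the same three points to approximate the inverse (horizontal) function $x=f^{-1}(y)$ by a quadratic function $g(y)$:

$$x=g(y)=\frac{(y-y_1)(y-y_2)}{(y_0-y_1)(y_0-y_2)}\,x_0+\frac{(y-y_0)(y-y_2)}{(y_1-y_0)(y_1-y_2)}\,x_1+\frac{(y-y_0)(y-y_1)}{(y_2-y_0)(y_2-y_1)}\,x_2 \tag{34}$$

Setting $y=0$, the corresponding $x_3=g(0)$ is the zero-crossing of this curve:

$$x_3=\frac{y_1y_2}{(y_0-y_1)(y_0-y_2)}\,x_0+\frac{y_0y_2}{(y_1-y_0)(y_1-y_2)}\,x_1+\frac{y_0y_1}{(y_2-y_0)(y_2-y_1)}\,x_2 \tag{35}$$

which is taken as an estimate of the root $x^*$ of $f(x)$. This expression can then be converted into an iteration by which the next root estimate $x_{n+1}$ is computed based on the previous three estimates at $x_n$, $x_{n-1}$, and $x_{n-2}$.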
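The inverse quadratic interpolation step, evaluated at $y=0$, can be sketched in Python as below. This is a minimal illustration under stated assumptions: the names `iqi_step` and `iqi`, the stopping rule, and the test function are hypothetical, and no safeguard is included for the case where two of the three function values coincide (the division then fails).

```python
def iqi_step(f, x0, x1, x2):
    """One inverse quadratic interpolation step: Lagrange interpolation
    of x as a function of y through three points, evaluated at y = 0."""
    y0, y1, y2 = f(x0), f(x1), f(x2)
    return (x0 * y1 * y2 / ((y0 - y1) * (y0 - y2))
            + x1 * y0 * y2 / ((y1 - y0) * (y1 - y2))
            + x2 * y0 * y1 / ((y2 - y0) * (y2 - y1)))


def iqi(f, x0, x1, x2, tol=1e-12, max_iter=50):
    """Iterate iqi_step, always keeping the three most recent estimates.

    Returns (estimate, number of iterations used).
    """
    for n in range(1, max_iter + 1):
        x3 = iqi_step(f, x0, x1, x2)
        if abs(x3 - x2) < tol:
            return x3, n
        x0, x1, x2 = x1, x2, x3           # discard the oldest point
    raise RuntimeError("inverse quadratic interpolation did not converge")


# Hypothetical test function with a root between 1 and 2
g = lambda x: x**3 - x - 2
first = iqi_step(g, 1.0, 1.5, 2.0)
root, steps = iqi(g, 1.0, 1.5, 2.0)
print(first, root, steps)
```

Because the three function values appear in the denominators, a practical implementation needs a fallback (for example a secant or bisection step) whenever two of them are equal or nearly so.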

Brent's method

Brent's method combines the bisection method, secant method, and the method of inverse quadratic interpolation. In the iteration, a set of conditions is checked so that only the most suitable method under the current situation will be chosen to be used in the next iteration.