To solve a given equation $f(x)=0$, we can first convert it into an equivalent equation $g(x)=x$, and then carry out an iteration $x_{n+1}=g(x_n)$ from some initial value $x_0$. If the iteration converges to a point $x^*$, i.e., $x^*=g(x^*)$, then we also have $f(x^*)=0$, i.e., $x^*$ is also a root of the equation $f(x)=0$. Consider the following examples:
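Before the worked examples, here is a minimal sketch of the iteration itself. It assumes Python, and the equation $x-\cos x=0$ (rewritten as $x=\cos x$) is a hypothetical illustration, not one of the examples in this text. The helper name `fixed_point`, the tolerance, and the iteration cap are likewise assumptions made for the sketch.

```python
import math

def fixed_point(g, x0, tol=1e-10, max_iter=200):
    """Iterate x_{n+1} = g(x_n) until two successive values differ by less than tol."""
    x = x0
    for n in range(1, max_iter + 1):
        x_new = g(x)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("iteration did not converge within max_iter steps")

# Hypothetical equation (not from the text): f(x) = x - cos(x) = 0,
# rewritten in the equivalent form x = g(x) with g(x) = cos(x).
def f(x):
    return x - math.cos(x)

root, steps = fixed_point(math.cos, x0=1.0)
print("fixed point:", root)
print("iterations: ", steps)
print("f(root):    ", f(root))  # close to zero, so root also solves f(x) = 0
```

The stopping rule compares successive iterates, which is a common practical choice rather than a guarantee of accuracy.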

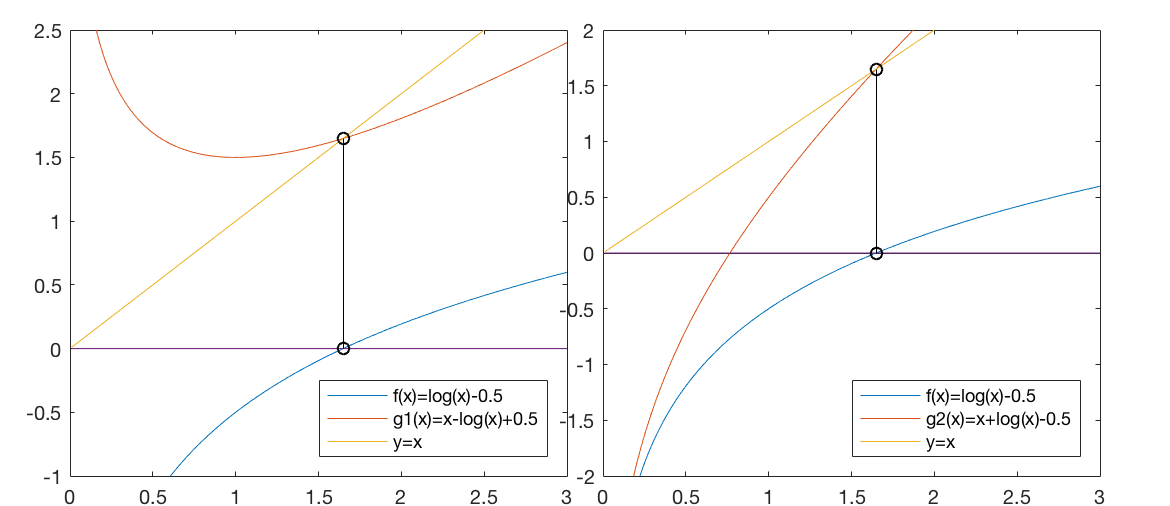

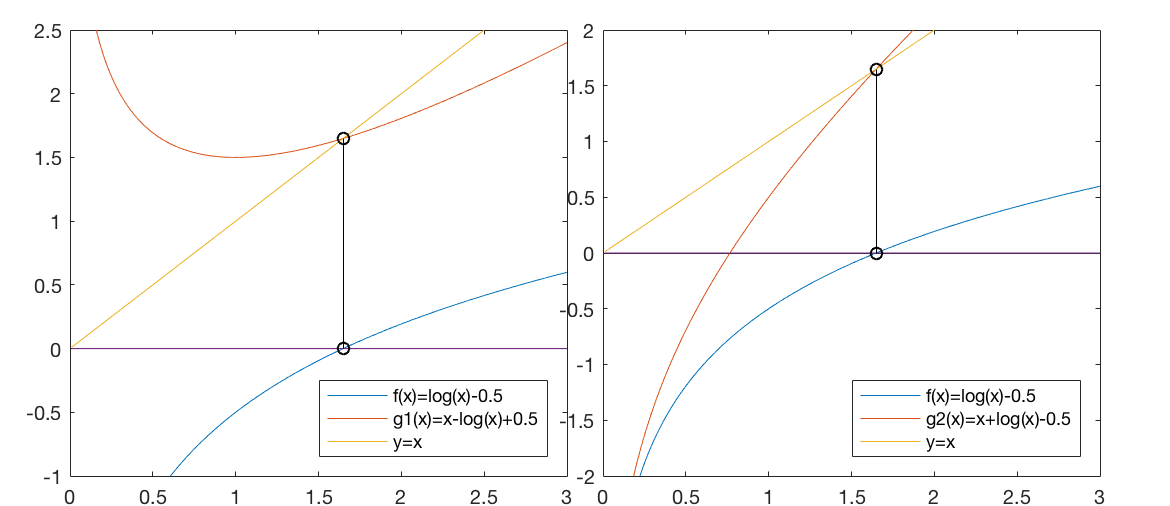

Example 1

To solve the equation

we can first construct another function

so that the equation $g(x)=x$ is indeed equivalent to the given equation $f(x)=0$. We then carry out the iteration $x_{n+1}=g(x_n)$ from some initial value $x_0$, and get:

We see that the iteration converges to a point $x^*$ satisfying $x^*=g(x^*)$, and, equivalently, $x^*$ is also the solution of the given equation $f(x)=0$.

Alternatively, we could construct a different function

which is also equivalent to $f(x)=0$. But now the iteration $x_{n+1}=g(x_n)$ no longer converges. Why does it not work?

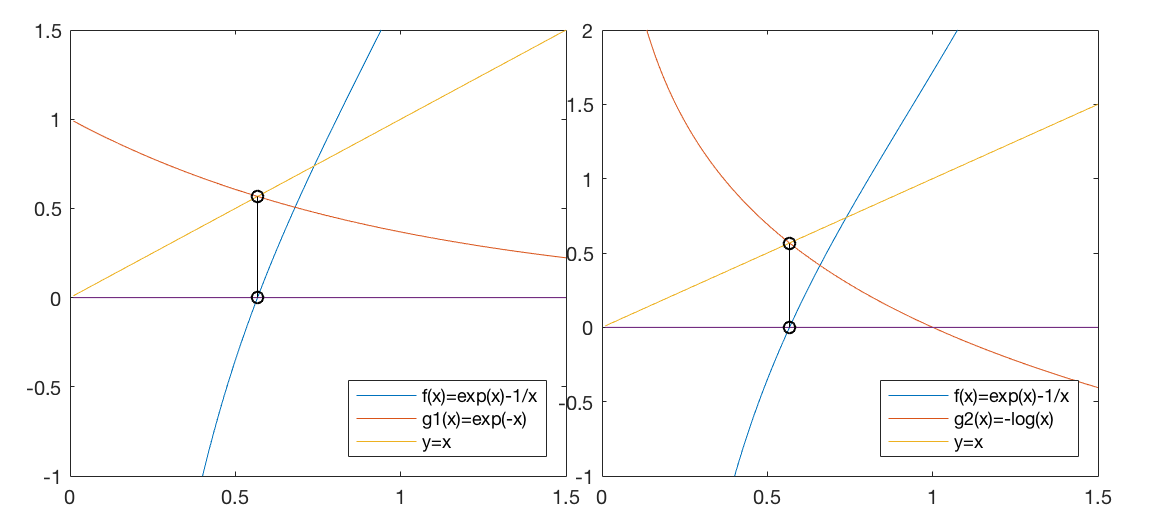

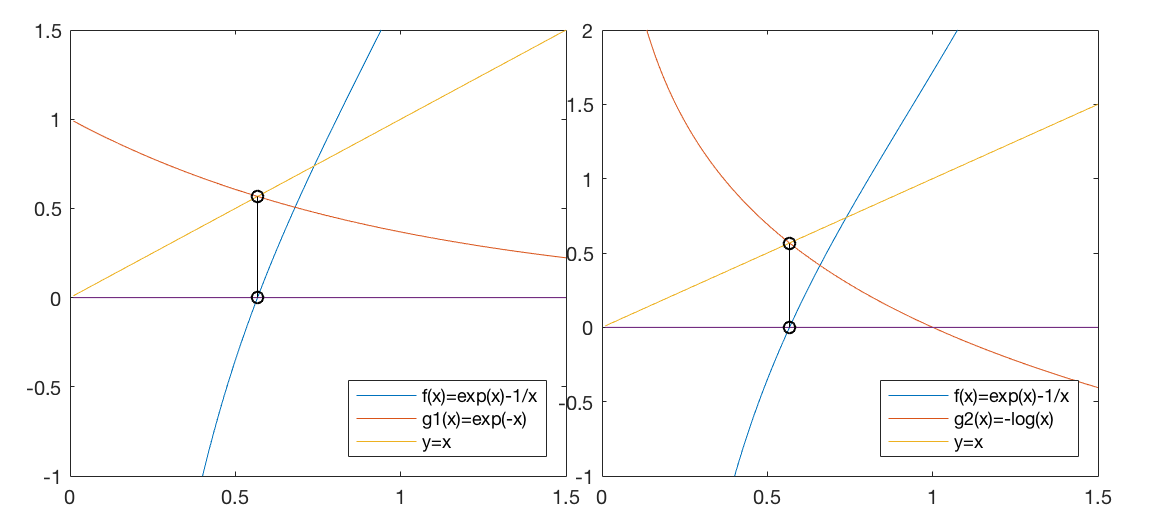

Example 2

We first convert this equation into an equivalent form

and then carry out the iteration:

which is the root of the given equation.

Alternatively, the given equation can also be converted into a different form. However, the iteration based on this function no longer converges.

Example 3

We define another equation:

and the iteration based on this equation converges to the root of the given equation.

In summary, an equation $f(x)=0$ can be solved by converting it into an equivalent form $g(x)=x$, which can then be solved iteratively to find $x^*$ satisfying $x^*=g(x^*)$ and equivalently $f(x^*)=0$. However, this iteration may or may not converge, as shown by the examples above. We need to understand the condition for the convergence of the iteration, so that we can construct the function $g(x)$ properly for the iteration $x_{n+1}=g(x_n)$ to converge.
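The dependence on the choice of $g(x)$ can be seen in a small experiment. The sketch below assumes Python and uses a hypothetical equation, $x^2-x-2=0$, which is not one of the examples above. Both rearrangements, $x=\sqrt{x+2}$ and $x=x^2-2$, are satisfied by the root $x=2$, yet only the first iteration settles there. The helper `iterate`, the starting value, and the tolerances are assumptions made for the illustration.

```python
import math

def iterate(g, x0, tol=1e-10, max_iter=50):
    """Run x_{n+1} = g(x_n); return (last value, converged flag)."""
    x = x0
    for _ in range(max_iter):
        x_new = g(x)
        if not math.isfinite(x_new):
            return x_new, False      # iterates blew up
        if abs(x_new - x) < tol:
            return x_new, True       # successive values agree
        x = x_new
    return x, False                  # iteration budget exhausted

# Hypothetical equation (not from the text): f(x) = x^2 - x - 2 = 0, root x = 2.
def g1(x):
    return math.sqrt(x + 2.0)        # rearrangement 1: x = sqrt(x + 2)

def g2(x):
    return x * x - 2.0               # rearrangement 2: x = x^2 - 2

x0 = 2.1                             # start close to the root in both cases
r1, ok1 = iterate(g1, x0)
r2, ok2 = iterate(g2, x0)
print("g1:", r1, "converged" if ok1 else "did not converge")
print("g2:", r2, "converged" if ok2 else "did not converge")
```

Starting from the same point near the same root, the two forms behave differently, which is the behavior the convergence condition has to explain.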
