Next: Control based on Function Up: Introduction to Reinforcement Learning Previous: TD() Algorithm

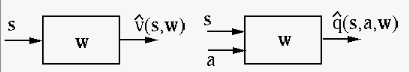

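All previously considered algorithms are based on either the state value function $v_\pi(s)$ or the action value function $q_\pi(s,a)$, stored as a table with one entry per state or state-action pair. When the state space is large or continuous, such tables become impractical, and the value function is instead approximated by a function $\hat{v}(s,{\bf w})\approx v_\pi(s)$ with a parameter vector ${\bf w}$. This approach can be considered as a regression problem to fit a continuous function $\hat{v}(s,{\bf w})$ to the true value function $v_\pi(s)$. Examples of such value function approximators include linear combinations of features, neural networks, decision trees, and nearest-neighbor methods.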

Same as in regression problems, we desire to find the optimal parameter ${\bf w}^*$ that minimizes the mean squared error:

$$J({\bf w})=\frac{1}{2}E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}))^2\right]\tag{72}$$

where the expectation $E_\pi$ is with respect to all states $s$ visited while following some policy $\pi$. The gradient vector of $J({\bf w})$ is

$$\begin{aligned}
\triangledown J({\bf w})=\frac{d}{d{\bf w}}J({\bf w})
&=\frac{1}{2}E_\pi\left[\frac{d}{d{\bf w}}\left[(v_\pi(s)-\hat{v}(s,{\bf w}))^2\right]\right]\\
&=-E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}))\triangledown\hat{v}(s,{\bf w})\right]
\end{aligned}\tag{73}$$

The optimal parameter ${\bf w}^*$ that minimizes $J({\bf w})$ can be found iteratively by the gradient descent method:

$$\begin{aligned}
{\bf w}_{n+1}&={\bf w}_n+\Delta{\bf w}={\bf w}_n-\alpha\triangledown J({\bf w})\\
&={\bf w}_n+\alpha E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}))\triangledown\hat{v}(s,{\bf w})\right]
\end{aligned}\tag{74}$$

where $\alpha$ is the step size or learning rate, and the increment is

$$\Delta{\bf w}=-\alpha\triangledown J({\bf w})=\alpha E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}))\triangledown\hat{v}(s,{\bf w})\right]\tag{75}$$

If ${\bf w}$ is updated based on only one data point at each iteration instead of the expectation over all data points, then, for this stochastic gradient descent (SGD) method, the expectation can be dropped:

$$\Delta{\bf w}=\alpha\,(v_\pi(s)-\hat{v}(s,{\bf w}))\triangledown\hat{v}(s,{\bf w})\tag{76}$$
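As a minimal sketch of this single-sample update (Python with NumPy), the code below takes one SGD step $\Delta{\bf w}=\alpha[\text{target}-\hat{v}(s,{\bf w})]\triangledown\hat{v}(s,{\bf w})$ for a hypothetical nonlinear approximator $\hat{v}(s,{\bf w})=w_0+w_1\tanh(w_2 s)$ of a scalar state $s$. The model, the function names, and the numbers are illustrative assumptions, and `target` stands in for the unknown $v_\pi(s)$, which in practice must itself be estimated.

```python
import numpy as np

def v_hat(s, w):
    """Hypothetical nonlinear approximator v_hat(s, w) = w0 + w1 * tanh(w2 * s)."""
    return w[0] + w[1] * np.tanh(w[2] * s)

def grad_v_hat(s, w):
    """Gradient of v_hat with respect to w = [w0, w1, w2]."""
    t = np.tanh(w[2] * s)
    return np.array([1.0, t, w[1] * s * (1.0 - t**2)])

def sgd_step(w, s, target, alpha):
    """One SGD step: w + alpha * (target - v_hat(s, w)) * grad v_hat(s, w)."""
    return w + alpha * (target - v_hat(s, w)) * grad_v_hat(s, w)

w0 = np.array([0.0, 1.0, 1.0])
w1 = sgd_step(w0, s=0.5, target=2.0, alpha=0.1)
# With a small step size, the prediction at s = 0.5 moves toward the target.
print(v_hat(0.5, w0), v_hat(0.5, w1))
```

Repeating such steps over many samples collected while following $\pi$ approximates gradient descent on $J({\bf w})$.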

Here the true value function $v_\pi(s)$, the expected return, is unknown and needs to be estimated by sampling the environment: either by the actual return $G_t$ at each state $s_t$ based on the MC method, or in terms of the immediate reward $r_{t+1}$ and the estimated value $\hat{v}(s_{t+1},{\bf w})$ of the next state $s_{t+1}$ based on the TD method.

Such an approach can be considered as supervised learning based on a labeled training data set: $\{(s_t,\,G_t)\}$ for the MC method, or $\{(s_t,\,r_{t+1}+\gamma\hat{v}(s_{t+1},{\bf w}))\}$ for the TD method.
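A small sketch of how the two kinds of labels could be produced from one recorded episode, assuming the convention that `rewards[t]` holds $r_{t+1}$ and that the current estimates $\hat{v}(s_{t+1},{\bf w})$ are supplied as `next_values` (0 for a terminal state). The episode, the helper names, and $\gamma=0.5$ are hypothetical.

```python
def mc_targets(rewards, gamma):
    """MC labels G_t for t = 0..T-1, where rewards[t] holds r_{t+1}.

    Uses the recursion G_t = r_{t+1} + gamma * G_{t+1} with G_T = 0.
    """
    G, targets = 0.0, []
    for r in reversed(rewards):
        G = r + gamma * G
        targets.append(G)
    return targets[::-1]

def td_targets(rewards, next_values, gamma):
    """TD labels r_{t+1} + gamma * v_hat(s_{t+1}, w).

    next_values[t] is the current estimate v_hat(s_{t+1}, w), taken as 0
    when s_{t+1} is terminal.
    """
    return [r + gamma * v for r, v in zip(rewards, next_values)]

# Hypothetical 3-step episode: states s_0, s_1, s_2, then termination.
states = ["s0", "s1", "s2"]
rewards = [1.0, 0.0, 2.0]          # r_1, r_2, r_3
next_values = [0.4, 0.3, 0.0]      # assumed v_hat(s_1), v_hat(s_2); terminal value 0
mc_data = list(zip(states, mc_targets(rewards, gamma=0.5)))
td_data = list(zip(states, td_targets(rewards, next_values, gamma=0.5)))
print(mc_data)   # [('s0', 1.5), ('s1', 1.0), ('s2', 2.0)]
print(td_data)
```

The MC labels need the whole episode, while each TD label only needs the next reward and the current estimate of the next state's value.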

In the following, we will consider the special case where the state value function is approximated by a linear function:

$$\hat{v}(s,{\bf w})=\sum_{i=1}^d w_i x_i(s)={\bf w}^T{\bf x}(s)\tag{77}$$

i.e., a linear combination of the $d$ features in the feature vector ${\bf x}(s)=[x_1(s),\cdots,x_d(s)]^T$ for state $s$, weighted by the corresponding weights in the weight vector ${\bf w}=[w_1,\cdots,w_d]^T$, which is shared by all states.

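As a simple example, if we represent each of the $N$ states $s_n$ ($n=1,\cdots,N$) by a binary (one-hot) feature vector ${\bf x}(s_n)=[x_1(s_n),\cdots,x_N(s_n)]^T$ with $x_i(s_n)=1$ for $i=n$ and $x_i(s_n)=0$ otherwise, then $\hat{v}(s_n,{\bf w})={\bf w}^T{\bf x}(s_n)=w_n$, and the optimal weight vector ${\bf w}=[w_1,\cdots,w_N]^T=[v_\pi(s_1),\cdots,v_\pi(s_N)]^T$ is simply the table of state values. In other words, the tabular methods considered previously are a special case of linear value function approximation.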
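A quick numerical check of this special case, assuming one-hot features: ${\bf w}^T{\bf x}(s_n)$ simply reads entry $n$ of ${\bf w}$, and a single-sample update at $s_n$ changes only $w_n$, which is exactly the tabular update $V(s)\leftarrow V(s)+\alpha[\text{target}-V(s)]$. The weights, target, and step size below are made up.

```python
import numpy as np

def one_hot(n, N):
    """Feature vector x(s_n) with a single 1 in position n."""
    x = np.zeros(N)
    x[n] = 1.0
    return x

def linear_sgd_step(w, x, target, alpha):
    """w <- w + alpha * (target - w^T x) * x for the linear approximator w^T x."""
    return w + alpha * (target - w @ x) * x

N = 4
w = np.array([0.5, -1.0, 2.0, 0.0])    # hypothetical current weights = value table

# w^T x(s_n) just looks up entry n of the table.
lookups = [float(w @ one_hot(n, N)) for n in range(N)]

# An update at s_2 changes only w[2], exactly like the tabular rule.
w_new = linear_sgd_step(w, one_hot(2, N), target=3.0, alpha=0.5)
print(lookups)
print(w_new)
```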

The objective function for the mean squared error of such a linear approximating function is

$$J({\bf w})=\frac{1}{2}E_\pi\left[(v_\pi(s)-{\bf w}^T{\bf x}(s))^2\right]\tag{78}$$

and its gradient is

$$\triangledown J({\bf w})=\frac{d}{d{\bf w}}J({\bf w})=-E_\pi\left[(v_\pi(s)-{\bf w}^T{\bf x}(s))\,{\bf x}(s)\right]\tag{79}$$
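The gradient in Eq. (79) can be checked numerically. The sketch below assumes a small hypothetical problem with known features ${\bf x}(s)$, true values $v_\pi(s)$, and a state distribution used to evaluate $E_\pi$ as a weighted sum, and compares the analytic gradient with central finite differences of $J({\bf w})=\frac{1}{2}E_\pi[(v_\pi(s)-{\bf w}^T{\bf x}(s))^2]$. All data are randomly generated.

```python
import numpy as np

# Hypothetical setup: 5 states with 3 features each, a state distribution d,
# and "true" values v_pi; all numbers are made up for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))          # row s is the feature vector x(s)
v_pi = rng.normal(size=5)            # assumed true values v_pi(s)
d = np.full(5, 0.2)                  # assumed state distribution under pi

def J(w):
    """J(w) = 1/2 E_pi[(v_pi(s) - w^T x(s))^2], with E_pi as a d-weighted sum."""
    err = v_pi - X @ w
    return 0.5 * np.sum(d * err**2)

def grad_J(w):
    """Analytic gradient: -E_pi[(v_pi(s) - w^T x(s)) x(s)]."""
    err = v_pi - X @ w
    return -(d * err) @ X

def numeric_grad(f, w, eps=1e-6):
    """Central finite differences, one coordinate at a time."""
    g = np.zeros_like(w)
    for i in range(len(w)):
        e = np.zeros_like(w)
        e[i] = eps
        g[i] = (f(w + e) - f(w - e)) / (2 * eps)
    return g

w = rng.normal(size=3)
print(grad_J(w), numeric_grad(J, w))
```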

If a set of $N$ states $s_n$, represented by feature vectors ${\bf x}_n={\bf x}(s_n)$, and the corresponding returns $G_n$ as samples of the true values $v_\pi(s_n)$ ($n=1,\cdots,N$) have already been collected, then the value function approximation can be carried out as an off-line algorithm in a batch manner, the same as in the linear least squares regression problem discussed in a previous chapter, and the optimal weight vector that minimizes the squared error

$${\bf w}^*=\arg\min_{\bf w}\sum_s [G(s)-\hat{v}_\pi(s,{\bf w})]^2\tag{80}$$

can be found in closed form (assuming ${\bf X}{\bf X}^T$ is invertible) as

$${\bf w}^*=({\bf X}{\bf X}^T)^{-1}{\bf X}{\bf G}\tag{81}$$

where ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$ is a $d\times N$ matrix containing all $N$ samples for the states and ${\bf G}=[G_1,\cdots,G_N]^T$ is a vector containing the corresponding returns.
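A sketch of the batch solution with NumPy, assuming ${\bf X}{\bf X}^T$ is invertible (at least $d$ linearly independent samples). It solves the normal equations $({\bf X}{\bf X}^T){\bf w}={\bf X}{\bf G}$ rather than forming the inverse explicitly, and cross-checks the result against `numpy.linalg.lstsq`; the data are synthetic.

```python
import numpy as np

def batch_weights(X, G):
    """Closed-form least squares w* = (X X^T)^{-1} X G.

    X is d x N with one feature vector x_n per column; G holds the N returns.
    Solving the linear system is numerically preferable to inverting X X^T.
    """
    return np.linalg.solve(X @ X.T, X @ G)

rng = np.random.default_rng(0)
d, N = 3, 50
X = rng.normal(size=(d, N))                  # hypothetical feature vectors
w_true = np.array([1.0, -2.0, 0.5])          # hypothetical weights
G = X.T @ w_true + 0.1 * rng.normal(size=N)  # noisy synthetic "returns"

w_star = batch_weights(X, G)
# Same answer as NumPy's least-squares solver applied to X^T w ~ G.
w_lstsq, *_ = np.linalg.lstsq(X.T, G, rcond=None)
print(w_star, w_lstsq)
```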

However, as reinforcement learning is typically an online problem, the parameter ${\bf w}$ is more commonly found iteratively. As $\triangledown\hat{v}(s,{\bf w})={\bf x}(s)$ for the linear function in Eq. (77), the gradient descent method gives

$$\begin{aligned}
{\bf w}_{n+1}&={\bf w}_n+\Delta{\bf w}={\bf w}_n+\alpha E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}_n))\triangledown\hat{v}(s,{\bf w}_n)\right]\\
&={\bf w}_n+\alpha E_\pi\left[(v_\pi(s)-{\bf w}_n^T{\bf x}(s))\,{\bf x}(s)\right]
\end{aligned}\tag{83}$$

where $\Delta{\bf w}$ is the increment in each iteration:

$$\Delta{\bf w}=\alpha E_\pi\left[(v_\pi(s)-\hat{v}(s,{\bf w}_n))\triangledown\hat{v}(s,{\bf w}_n)\right]=\alpha E_\pi\left[(v_\pi(s)-{\bf w}_n^T{\bf x}(s))\,{\bf x}(s)\right]\tag{84}$$

If ${\bf w}$ is updated whenever a new sample data point $s_t$ is available at a time step $t$, then the expectation $E_\pi$ in the equations above can be dropped, giving the online update $\Delta{\bf w}=\alpha[v_\pi(s_t)-{\bf w}^T{\bf x}(s_t)]\,{\bf x}(s_t)$.
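The expectation can be dropped because, when $s$ is drawn from the distribution underlying $E_\pi$, the single-sample increment is an unbiased estimate of the expected increment in Eq. (84). The sketch below checks this numerically for a hypothetical 4-state problem in which $v_\pi(s)$, ${\bf x}(s)$, and the state distribution are all assumed known (they are not in practice).

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(4, 2))               # hypothetical features x(s), one row per state
v_pi = np.array([1.0, -0.5, 2.0, 0.0])    # hypothetical true values
d = np.array([0.1, 0.2, 0.3, 0.4])        # hypothetical state distribution under pi
w = np.array([0.2, -0.1])
alpha = 0.1

def increment(s, w):
    """Online increment for one sample: alpha * (v_pi(s) - w^T x(s)) * x(s)."""
    return alpha * (v_pi[s] - X[s] @ w) * X[s]

# Expected increment: E_pi[...] computed as a d-weighted sum over states.
expected = sum(d[s] * increment(s, w) for s in range(len(d)))

# Average of single-sample increments for states drawn from d.
samples = rng.choice(len(d), size=100_000, p=d)
sampled = np.mean([increment(s, w) for s in samples], axis=0)
print(expected, sampled)
```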

We note that the expectation of the estimated

Specifically, the true state value function $v_\pi(s_t)$ in these updates is unknown and can be estimated in several different ways, similar to the corresponding algorithms discussed before.

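For the MC method, the value function $v_\pi(s_t)$ is replaced by the return $G_t$:

$$\Delta{\bf w}=\alpha[G_t-{\bf w}^T{\bf x}(s_t)]\,{\bf x}(s_t)$$

Here is the pseudo code for the algorithm based on the MC method, similar to that for value evaluation algorithms discussed previously:

- Initialize ${\bf w}$ arbitrarily
- Repeat for each episode:
  - Generate an episode $s_0,r_1,s_1,r_2,\cdots,r_T,s_T$ following $\pi$
  - For each $t=0,\cdots,T-1$ such that state $s_t$ is visited the first time in the episode:
    - $G_t=\sum_{k=t+1}^{T}\gamma^{k-t-1}r_k$
    - ${\bf w}={\bf w}+\alpha[G_t-{\bf w}^T{\bf x}(s_t)]\,{\bf x}(s_t)$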
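A runnable sketch of this first-visit gradient MC algorithm in Python, tested on a hypothetical 5-state random walk: from the middle state the policy moves left or right with equal probability, leaving on the right gives reward 1 and on the left reward 0, and $\gamma=1$, so $v_\pi(s)=(s+1)/6$ for $s=0,\cdots,4$. The environment, the one-hot feature map, the step size, and the episode count are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical test problem: 5-state random walk (states 0..4), start in state 2.
# True values with gamma = 1 are v_pi(s) = (s + 1) / 6.
N_STATES = 5

def features(s):
    """One-hot features (an assumption; any feature map x(s) could be used)."""
    x = np.zeros(N_STATES)
    x[s] = 1.0
    return x

def generate_episode(rng, start=2):
    """Return states [s_0..s_{T-1}] and rewards [r_1..r_T] for one episode."""
    s, states, rewards = start, [], []
    while True:
        states.append(s)
        s_next = s + (1 if rng.random() < 0.5 else -1)
        if s_next < 0 or s_next >= N_STATES:
            rewards.append(1.0 if s_next >= N_STATES else 0.0)
            return states, rewards
        rewards.append(0.0)
        s = s_next

def gradient_mc(num_episodes, alpha, gamma=1.0, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(N_STATES)
    for _ in range(num_episodes):
        states, rewards = generate_episode(rng)
        # G_t = r_{t+1} + gamma * G_{t+1}, computed backward from the end.
        returns, G = [0.0] * len(states), 0.0
        for t in reversed(range(len(states))):
            G = rewards[t] + gamma * G
            returns[t] = G
        visited = set()
        for t, s in enumerate(states):
            if s in visited:           # first-visit MC: skip repeat visits
                continue
            visited.add(s)
            x = features(s)
            w += alpha * (returns[t] - w @ x) * x
    return w

w = gradient_mc(num_episodes=5000, alpha=0.01)
print(np.round(w, 2))  # should be close to the true values (s + 1) / 6
```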

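For the TD method, the value function $v_\pi(s_t)$ is replaced by $r_{t+1}+\gamma\hat{v}(s_{t+1},{\bf w})=r_{t+1}+\gamma{\bf w}^T{\bf x}(s_{t+1})$:

$$\Delta{\bf w}=\alpha[r_{t+1}+\gamma{\bf w}^T{\bf x}(s_{t+1})-{\bf w}^T{\bf x}(s_t)]\,{\bf x}(s_t)\tag{86}$$

Here is the pseudo code for the algorithm based on the TD method, similar to that for value evaluation algorithms discussed previously:

- Initialize ${\bf w}$ arbitrarily
- Repeat for each episode:
  - Initialize state $s$
  - Repeat for each step of the episode until $s$ is terminal:
    - Take action $a$ given by $\pi$ in state $s$, get reward $r$ and next state $s'$
    - ${\bf w}={\bf w}+\alpha[r+\gamma{\bf w}^T{\bf x}(s')-{\bf w}^T{\bf x}(s)]\,{\bf x}(s)$, where ${\bf w}^T{\bf x}(s')$ is taken as 0 if $s'$ is terminal
    - $s=s'$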
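The same hypothetical 5-state random walk (true values $(s+1)/6$, $\gamma=1$) can be used to sketch the TD algorithm above. Because the target $r+\gamma{\bf w}^T{\bf x}(s')$ itself depends on ${\bf w}$ but is treated as a constant when differentiating, this update is often called semi-gradient TD(0). The environment, feature map, and parameters are again assumptions.

```python
import numpy as np

# Hypothetical 5-state random walk, start in state 2; true values (s + 1) / 6.
N_STATES = 5

def features(s):
    """One-hot features (an assumption)."""
    x = np.zeros(N_STATES)
    x[s] = 1.0
    return x

def env_step(s, rng):
    """Move left/right with equal probability; return (reward, next state or None)."""
    s_next = s + (1 if rng.random() < 0.5 else -1)
    if s_next < 0:
        return 0.0, None
    if s_next >= N_STATES:
        return 1.0, None
    return 0.0, s_next

def semi_gradient_td0(num_episodes, alpha, gamma=1.0, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(N_STATES)
    for _ in range(num_episodes):
        s = 2
        while s is not None:
            r, s_next = env_step(s, rng)
            x = features(s)
            # The value of a terminal state is 0.
            v_next = 0.0 if s_next is None else w @ features(s_next)
            w += alpha * (r + gamma * v_next - w @ x) * x
            s = s_next
    return w

w = semi_gradient_td0(num_episodes=5000, alpha=0.01)
print(np.round(w, 2))
```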

For the TD($\lambda$) method, the value function $v_\pi(s_t)$ is replaced by the $\lambda$-return $G_t^{\lambda}$, which in the forward view is available only at the end of each episode:

$$\Delta{\bf w}=\alpha[G_t^{\lambda}-{\bf w}^T{\bf x}(s_t)]\,{\bf x}(s_t)\tag{87}$$
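A sketch of computing the $\lambda$-returns of a finished episode, assuming the recursive form $G_t^\lambda=r_{t+1}+\gamma[(1-\lambda)\hat{v}(s_{t+1},{\bf w})+\lambda G_{t+1}^\lambda]$ with $G_T^\lambda=0$ and $\hat{v}=0$ at the terminal state, which is equivalent to the weighted average of $n$-step returns. With $\lambda=1$ it reproduces the MC returns, and with $\lambda=0$ the TD targets. The forward-view updates are then applied one after another at the end of the episode; the helper names and numbers are hypothetical.

```python
import numpy as np

def lambda_returns(rewards, next_values, gamma, lam):
    """lambda-returns G_t^lambda for t = 0..T-1 of one finished episode.

    rewards[t] is r_{t+1}; next_values[t] is v_hat(s_{t+1}, w) (0 if terminal).
    Recursion: G_t = r_{t+1} + gamma*((1-lam)*v_hat(s_{t+1}) + lam*G_{t+1}), G_T = 0.
    """
    G = [0.0] * len(rewards)
    g_next = 0.0
    for t in reversed(range(len(rewards))):
        G[t] = rewards[t] + gamma * ((1 - lam) * next_values[t] + lam * g_next)
        g_next = G[t]
    return G

def offline_lambda_update(w, feats, G_lam, alpha):
    """After the episode: w <- w + alpha*(G_t^lambda - w^T x(s_t)) x(s_t) for t = 0..T-1."""
    for x, g in zip(feats, G_lam):
        w = w + alpha * (g - w @ x) * x
    return w

rewards, next_values = [1.0, 0.0, 2.0], [0.4, 0.3, 0.0]
print(lambda_returns(rewards, next_values, gamma=0.5, lam=1.0))  # [1.5, 1.0, 2.0]
print(lambda_returns(rewards, next_values, gamma=0.5, lam=0.5))
w = offline_lambda_update(np.zeros(3), np.eye(3), [1.0, 2.0, 3.0], alpha=0.5)
```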

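Alternatively, in the backward view, the update is available at every step of each episode, based on the TD error $\delta_t$ and an eligibility trace vector ${\bf e}_t$ (with ${\bf e}_{-1}={\bf 0}$ at the start of each episode):

$$\delta_t=r_{t+1}+\gamma{\bf w}^T{\bf x}(s_{t+1})-{\bf w}^T{\bf x}(s_t)\tag{88}$$

$${\bf e}_t=\gamma\lambda{\bf e}_{t-1}+{\bf x}(s_t),\qquad \Delta{\bf w}=\alpha\,\delta_t\,{\bf e}_t\tag{89}$$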
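A sketch of the backward view on the same hypothetical 5-state random walk (true values $(s+1)/6$), assuming the standard accumulating eligibility trace: at each step the TD error $\delta_t=r_{t+1}+\gamma{\bf w}^T{\bf x}(s_{t+1})-{\bf w}^T{\bf x}(s_t)$ is computed, the trace is updated as ${\bf e}_t=\gamma\lambda{\bf e}_{t-1}+{\bf x}(s_t)$, and ${\bf w}$ moves by $\alpha\delta_t{\bf e}_t$. The trace is reset to zero at the start of each episode; $\lambda$, $\alpha$, and the episode count are illustrative choices.

```python
import numpy as np

# Hypothetical 5-state random walk, start in state 2; true values (s + 1) / 6.
N_STATES = 5

def features(s):
    """One-hot features (an assumption)."""
    x = np.zeros(N_STATES)
    x[s] = 1.0
    return x

def env_step(s, rng):
    """Move left/right with equal probability; return (reward, next state or None)."""
    s_next = s + (1 if rng.random() < 0.5 else -1)
    if s_next < 0:
        return 0.0, None
    if s_next >= N_STATES:
        return 1.0, None
    return 0.0, s_next

def td_lambda(num_episodes, alpha, lam, gamma=1.0, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(N_STATES)
    for _ in range(num_episodes):
        s = 2
        e = np.zeros(N_STATES)                   # eligibility trace, reset each episode
        while s is not None:
            r, s_next = env_step(s, rng)
            x = features(s)
            v_next = 0.0 if s_next is None else w @ features(s_next)
            delta = r + gamma * v_next - w @ x   # TD error
            e = gamma * lam * e + x              # accumulating trace
            w += alpha * delta * e               # update every state in the trace
            s = s_next
    return w

w = td_lambda(num_episodes=8000, alpha=0.005, lam=0.5)
print(np.round(w, 2))
```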

Given a policy $\pi$,

(90)

(91)

and  represent the same distribution, they must be identical.

Given the probability distribution $d(s)$ of the states visited while following policy $\pi$, the mean squared error of the approximation is

$$MSE({\bf w})=\sum_s d(s)[v_\pi(s)-\hat{v}_\pi(s,{\bf w})]^2\tag{92}$$

The MC method finds the weight vector ${\bf w}_{MC}$ that minimizes the mean squared error above:

$$MSE({\bf w}_{MC})=\min_{\bf w}\sum_s d(s)[v_\pi(s)-\hat{v}_\pi(s,{\bf w})]^2\tag{93}$$
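For a linear approximator and a small problem where $v_\pi(s)$ and $d(s)$ are known (an assumption; in practice they are not, which is why the MC method estimates this minimizer from sampled returns), the minimizer of Eq. (92) is a weighted least-squares solution of $({\bf X}^T{\bf D}{\bf X}){\bf w}={\bf X}^T{\bf D}{\bf v}_\pi$ with ${\bf D}=\text{diag}(d(s))$ and one row ${\bf x}(s)^T$ of ${\bf X}$ per state. The sketch below uses made-up data.

```python
import numpy as np

def mse(w, X, v_pi, d):
    """Weighted MSE: sum_s d(s) * (v_pi(s) - w^T x(s))^2; X has one row x(s)^T per state."""
    return float(np.sum(d * (v_pi - X @ w) ** 2))

def mse_minimizer(X, v_pi, d):
    """Minimizer of the weighted MSE for a linear v_hat: solves (X^T D X) w = X^T D v_pi."""
    XtD = X.T * d                      # equals X.T @ np.diag(d)
    return np.linalg.solve(XtD @ X, XtD @ v_pi)

rng = np.random.default_rng(2)
X = rng.normal(size=(6, 2))            # hypothetical: 6 states, 2 features
v_pi = rng.normal(size=6)              # hypothetical true values
d = np.array([0.3, 0.25, 0.2, 0.1, 0.1, 0.05])  # hypothetical on-policy distribution

w_best = mse_minimizer(X, v_pi, d)
# With only 2 features for 6 states, the minimum error is generally nonzero.
print(mse(w_best, X, v_pi, d))
```

States with larger $d(s)$ weigh more heavily in the fit, so the approximation is most accurate where the policy spends most of its time.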