
The method of linear regression considered previously can be generalized to model nonlinear relationships between the dependent variable $y$ and the independent variables in $\mathbf{x}=[x_1,\cdots,x_d]^T$ by a regression function that is a linear combination of $K$ nonlinear functions of $\mathbf{x}$:

$$\hat{y}=f(\mathbf{x})=\sum_{k=1}^K w_k\,\varphi_k(\mathbf{x}) \qquad (145)$$

where $\varphi_k(\mathbf{x})$ is the $k$th of the $K$ basis functions, each with its own parameters, that span the function space in which $f(\mathbf{x})$ resides. Some of the common basis functions include the following:

Polynomial functions:

$$\varphi_k(x)=x^k \qquad (146)$$

where $\varphi_k(x)$ is a $k$th-order polynomial function.

Sinusoidal functions (147), for which the regression is similar to the sine or cosine series expansion of the given function.

Equations (148) and (149) define the other two types of basis functions.

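The original $d$-dimensional data space spanned by $x_1,\cdots,x_d$ is thus mapped to a $K$-dimensional space spanned by the basis functions $\varphi_1(\mathbf{x}),\cdots,\varphi_K(\mathbf{x})$. Applying the model to all $N$ data points $\mathbf{x}_1,\cdots,\mathbf{x}_N$, we get

$$\hat{\mathbf{y}}=\boldsymbol{\Phi}\mathbf{w} \qquad (150)$$

where $\mathbf{w}=[w_1,\cdots,w_K]^T$ and $\boldsymbol{\Phi}$ is an $N\times K$ matrix of which the component in the $n$th row and $k$th column is the $k$th basis function evaluated at the $n$th data point: $\Phi_{nk}=\varphi_k(\mathbf{x}_n)$. Typically there are many more sample data points than basis functions, i.e., $N\gg K$.

As the nonlinear model is linear in the weights $\mathbf{w}$, they can be found by the least-squares method as in linear regression:

$$\mathbf{w}=\boldsymbol{\Phi}^-\mathbf{y}$$

where $\boldsymbol{\Phi}^-$ is the $K\times N$ pseudo-inverse of the $N\times K$ matrix $\boldsymbol{\Phi}$. Now we get the output of the regression model:

$$\hat{\mathbf{y}}=\boldsymbol{\Phi}\mathbf{w}=\boldsymbol{\Phi}\boldsymbol{\Phi}^-\mathbf{y} \qquad (154)$$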
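The pseudo-inverse solution can be justified in a few lines. The following is a sketch of the standard least-squares derivation, under the assumption (not stated in the text) that $\boldsymbol{\Phi}$ has full column rank so that $\boldsymbol{\Phi}^T\boldsymbol{\Phi}$ is invertible; the symbol $\varepsilon$ for the squared error is introduced here only for illustration:

```latex
\begin{align*}
\varepsilon(\mathbf{w}) &= \|\mathbf{y}-\boldsymbol{\Phi}\mathbf{w}\|^2
  = (\mathbf{y}-\boldsymbol{\Phi}\mathbf{w})^T(\mathbf{y}-\boldsymbol{\Phi}\mathbf{w}) \\
\frac{\partial\varepsilon}{\partial\mathbf{w}}
  &= -2\,\boldsymbol{\Phi}^T(\mathbf{y}-\boldsymbol{\Phi}\mathbf{w}) = \mathbf{0}
  \;\Longrightarrow\;
  \boldsymbol{\Phi}^T\boldsymbol{\Phi}\,\mathbf{w} = \boldsymbol{\Phi}^T\mathbf{y} \\
\mathbf{w} &= (\boldsymbol{\Phi}^T\boldsymbol{\Phi})^{-1}\boldsymbol{\Phi}^T\mathbf{y}
  = \boldsymbol{\Phi}^-\mathbf{y}
\end{align*}
```

When $\boldsymbol{\Phi}$ is rank deficient, the pseudo-inverse still yields a least-squares solution, namely the one of minimum norm.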

The Matlab code segment below shows the essential part of the algorithm, where `y(x)` evaluates to the vector $\mathbf{y}=[y_1,\cdots,y_N]^T$ of function values at the $N$ sample points:

    for n=1:N
        for k=1:K
            Phi(n,k)=phi(x(n),c(k));   % kth basis function at nth sample
        end
    end
    w=pinv(Phi)*y(x);   % weight vector by LS method
    yhat=Phi*w;         % reconstructed function
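To make the segment above concrete, here is a minimal self-contained sketch. The target function `f`, the Gaussian form of `phi` with width 0.1, and the evenly spaced centers `c` are all assumptions chosen for illustration; they are not taken from the notes.

```matlab
% Assumed (hypothetical) choices, not from the original notes:
f   = @(x) sin(2*pi*x);                  % function to approximate
phi = @(x,c) exp(-(x-c).^2/(2*0.1^2));   % Gaussian basis centered at c, width 0.1

N = 100;  K = 10;
x = linspace(0,1,N)';                    % N sample points (column vector)
c = linspace(0,1,K);                     % K basis-function centers
y = f(x);                                % N-by-1 vector of function values

Phi = zeros(N,K);
for k = 1:K
    Phi(:,k) = phi(x,c(k));              % kth basis function at all N samples
end

w    = pinv(Phi)*y;                      % weight vector by LS method
yhat = Phi*w;                            % reconstructed function
rmse = sqrt(mean((y-yhat).^2));          % root-mean-square approximation error
fprintf('K = %d, RMS error = %.4g\n', K, rmse);
```

Filling `Phi` one column at a time gives the same matrix as the double loop over `n` and `k`, because `phi` here operates elementwise on the whole sample vector.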

Example:

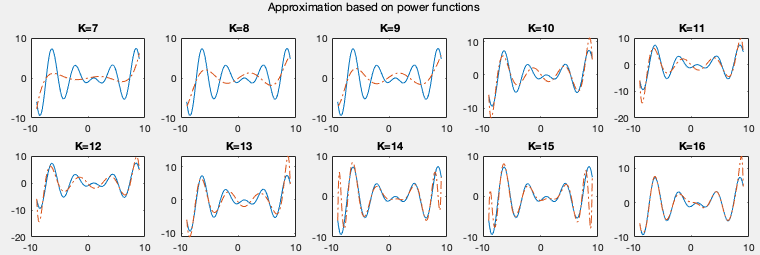

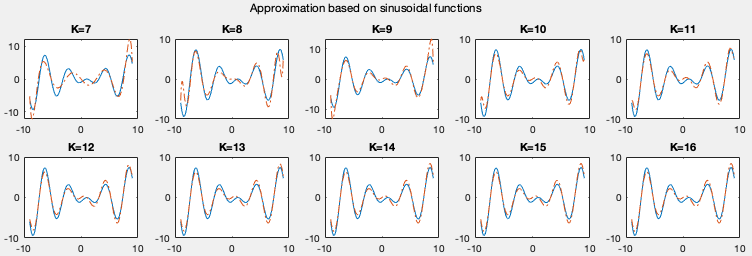

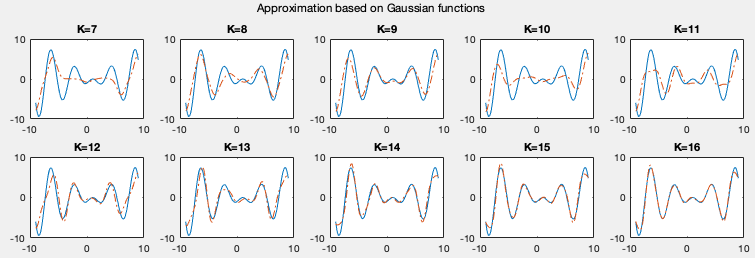

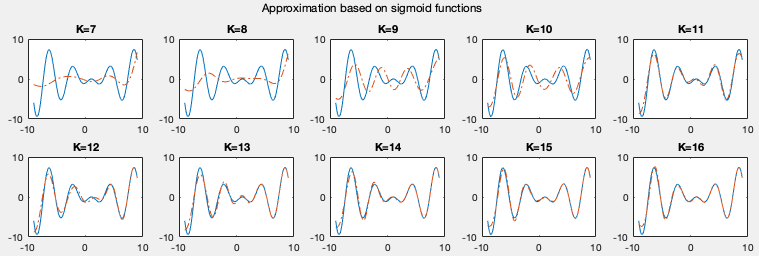

The plots above show approximations of a function using linear regression based on the four types of basis functions listed earlier. We see that the more basis functions are used, the more accurately the given function is approximated.
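The effect of the number of basis functions can also be checked numerically. The sketch below is only an illustration under assumed settings: the target `f`, the sample interval, and the list `Ks` are hypothetical, and the polynomial basis is taken to include the constant term $x^0$.

```matlab
% Assumed (hypothetical) test setup, not from the original notes
f = @(x) sin(pi*x);                  % function to approximate
N = 200;
x = linspace(-1,1,N)';               % N sample points (column vector)
y = f(x);                            % function values at the samples

Ks  = [2 4 6 8];                     % numbers of basis functions to try
err = zeros(size(Ks));
for i = 1:numel(Ks)
    K = Ks(i);
    Phi = zeros(N,K);
    for k = 1:K
        Phi(:,k) = x.^(k-1);         % monomials 1, x, ..., x^(K-1)
    end
    w = pinv(Phi)*y;                 % LS weights for this K
    err(i) = sqrt(mean((y-Phi*w).^2));   % RMS approximation error
    fprintf('K = %d, RMS error = %.3g\n', K, err(i));
end
```

Because each larger basis contains the smaller ones, the least-squares error cannot increase as `K` grows in this setup.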

Example:

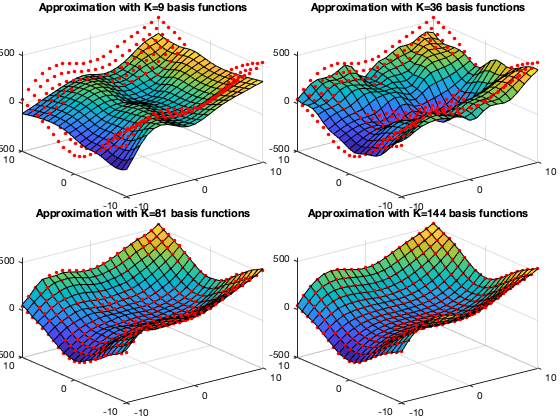

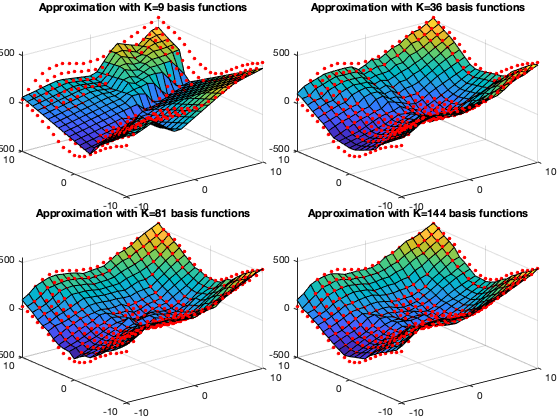

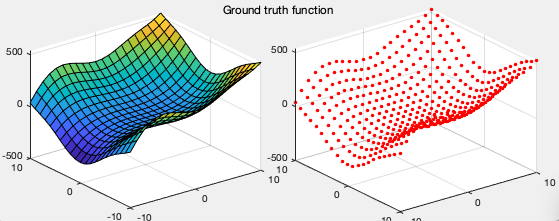

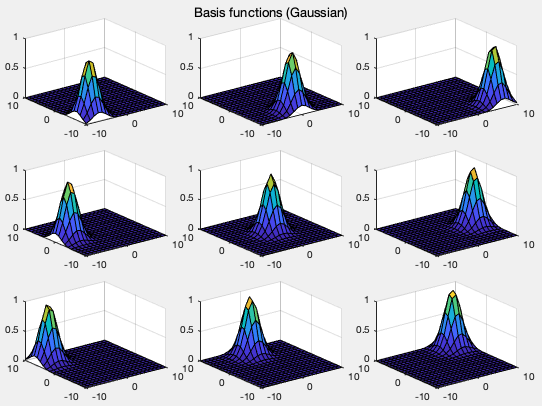

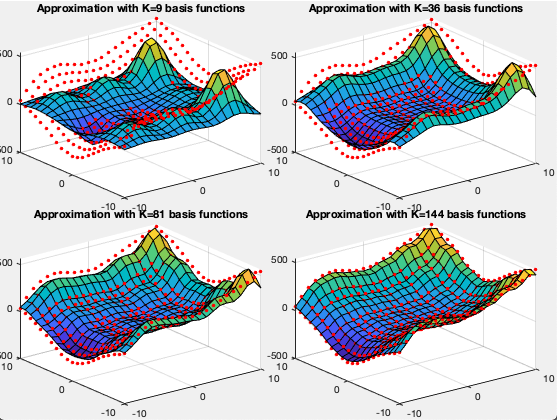

The figures above and below show a 2-D function and its approximations based on different numbers of basis functions.

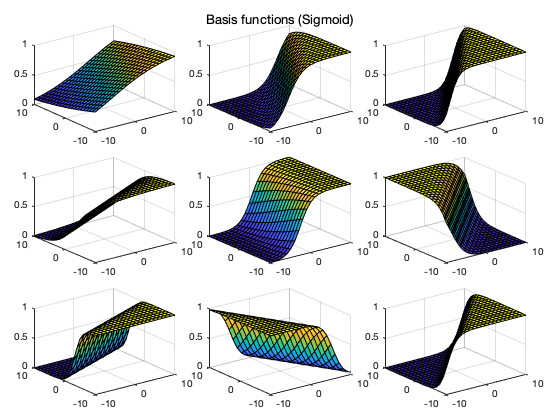

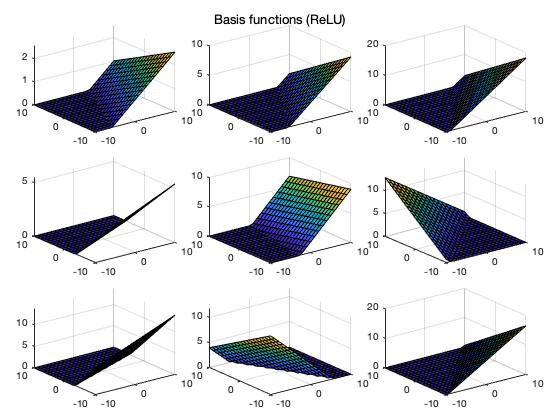

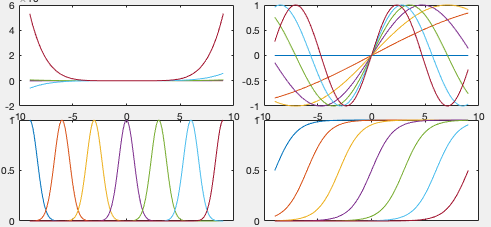

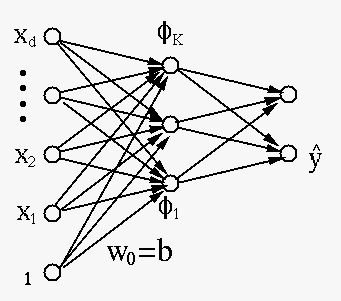

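In addition to the different types of basis functions listed above, we further consider the following basis functions:

$$\varphi_k(\mathbf{x})=g(\mathbf{w}_k^T\mathbf{x}+b_k)$$

where $\mathbf{w}_k$ and $b_k$ are the weight vector and bias of the $k$th basis function, and $g$, called the activation function, can take different forms such as the sigmoid and the rectified linear unit (ReLU):

$$g(x)=\frac{1}{1+e^{-x}} \quad\text{or}\quad g(x)=\max(0,x) \qquad (156)$$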
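As a small illustration of such a basis function, the sketch below evaluates it at a single 2-D point for both activation functions. The numerical values of `x`, `w` and `b` are hypothetical and serve only to show the calling pattern.

```matlab
sigmoid = @(z) 1./(1+exp(-z));       % logistic sigmoid, values in (0,1)
relu    = @(z) max(z,0);             % rectified linear unit
phi     = @(x,w,b,g) g(w'*x+b);      % basis function g(w'*x+b)

x = [1; -2];                         % a 2-D sample point (column vector)
w = [0.5; 0.25];                     % hypothetical weight vector
b = 0.1;                             % hypothetical bias

s = phi(x,w,b,sigmoid);              % sigmoid basis function value at x
r = phi(x,w,b,relu);                 % ReLU basis function value at x
fprintf('sigmoid: %.4f, ReLU: %.4f\n', s, r);
```

Passing the activation as an argument keeps both variants available at once, instead of redefining `g` to switch between them.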

Example:

This method of regression based on basis functions is applied to approximate the same 2-D function as in the previous example. The Matlab code segment below shows the essential part of the program for this example, where `func` is the 2-D function to be approximated:

    g=@(z) z.*(z>0);             % ReLU activation function, or
    % g=@(z) 1./(1+exp(-z));     % sigmoid activation function
    phi=@(x,w,b) g(w'*x+b);      % the basis function
    [X,Y]=meshgrid(xmin:1:xmax, ymin:1:ymax);  % define 2-D points
    nx=size(X,2);                % number of samples in first dimension
    ny=size(Y,1);                % number of samples in second dimension
    N=nx*ny;                     % total number of data samples
    F=zeros(ny,nx);              % function values on the grid
    Phi=zeros(N,K);              % K basis functions evaluated at N sample points
    W=1-2*rand(2,K);             % random initialization of weights
    b=1-2*rand(1,K);             % and biases for K basis functions
    x=zeros(2,1);                % sample point as a column vector
    n=0;
    for i=1:nx
        x(1)=X(1,i);             % first component
        for j=1:ny
            x(2)=Y(j,1);         % second component
            n=n+1;               % of the nth sample
            F(j,i)=func(x);      % function evaluated at the sample
            for k=1:K            % basis functions evaluated at the sample
                Phi(n,k)=phi(x,W(:,k),b(k));
            end
        end
    end
    y=reshape(F,N,1);            % convert function values to vector
    w=pinv(Phi)*y;               % find weights by LS method
    yhat=Phi*w;                  % reconstructed function
    Fhat=reshape(yhat,ny,nx);    % convert vector yhat to 2-D to compare w/ F

The figures below show the regression results as well as the basis functions used, based on both the sigmoid and ReLU activation functions. We note that the function is better approximated when more basis functions are used in the regression.
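The same experiment can be sketched in vectorized form. Everything specific here is an assumption made for illustration: the target `func`, the grid range and spacing, and the values in `Ks`. The random weights and biases are drawn once for the largest `K` and the first `K` columns are reused, so that each larger set of basis functions contains the smaller ones.

```matlab
% Hypothetical target function and settings, chosen only for illustration
func = @(x1,x2) sin(x1).*cos(x2);
g    = @(z) 1./(1+exp(-z));                % sigmoid activation function

[X,Y] = meshgrid(-3:0.25:3, -3:0.25:3);    % 2-D sample grid
P = [X(:) Y(:)];                           % N-by-2 matrix of sample points
N = size(P,1);                             % total number of samples
y = func(P(:,1), P(:,2));                  % N-by-1 vector of function values

Kmax = 40;
W = 1-2*rand(2,Kmax);                      % random weights in (-1,1)
b = 1-2*rand(1,Kmax);                      % random biases in (-1,1)
PhiAll = g(P*W + repmat(b,N,1));           % N-by-Kmax basis function values

Ks  = [5 10 20 40];                        % numbers of basis functions to try
err = zeros(size(Ks));
for i = 1:numel(Ks)
    Phi = PhiAll(:,1:Ks(i));               % use the first K basis functions
    w = pinv(Phi)*y;                       % LS weights
    err(i) = sqrt(mean((y-Phi*w).^2));     % RMS approximation error
    fprintf('K = %d, RMS error = %.3g\n', Ks(i), err(i));
end
Yhat = reshape(Phi*w, size(X));            % last fit on the grid, comparable to func(X,Y)
```

Since the weights are random, the printed errors differ from run to run; the error for the largest `K` should typically be well below that for the smallest.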