Reinforcement learning (RL) can be considered one of the three basic machine learning paradigms, alongside supervised learning (e.g., regression and classification) and unsupervised learning (e.g., clustering) discussed previously. The goal of RL is for the algorithm, a software agent, to learn how to make a sequence of decisions, called a policy, for a specific task in a given environment, so as to receive the maximum reward from the environment.

Different from supervised learning for either regression or classification based on a given training dataset composed of a set of i.i.d. sample points

Specifically, RL as a sequential method depends on the dynamics of the environment, which is modeled by a Markov decision process (MDP), a stochastic system of multiple states with probabilistic state transitions and rewards. If the MDP model of the environment, in terms of its state transition and reward probabilities, is known, the agent only needs to find the optimal policy, i.e., what action to take at each state of the MDP to achieve the maximum accumulated reward as the consequence of its actions. The task in this model-based case is called planning. On the other hand, if the MDP of the environment is unknown, the agent needs to learn the environment by repeatedly running the dynamic process of the MDP based on some initial policy, gradually evaluating the received rewards and improving the policy until it eventually reaches optimality. The task in this model-free case is called control.
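To make the planning case concrete, here is a minimal sketch in Python. It assumes a hypothetical two-state, two-action MDP whose transition probabilities and rewards are fully known, and it uses value iteration, one common planning method chosen here only for illustration, to find an optimal policy. The table `P`, the discount factor `GAMMA`, and the function names are all assumptions introduced for this example rather than anything defined in the text.

```python
# Hypothetical toy MDP (an assumption for illustration only).
# P[s][a] is a list of (probability, next_state, reward) outcomes.
P = {
    0: {0: [(1.0, 0, 0.0)],                  # state 0, action 0: stay, no reward
        1: [(0.8, 1, 1.0), (0.2, 0, 0.0)]},  # state 0, action 1: usually reach state 1
    1: {0: [(1.0, 1, 2.0)],                  # state 1, action 0: stay, reward 2
        1: [(1.0, 0, 0.0)]},                 # state 1, action 1: go back to state 0
}
GAMMA = 0.9  # assumed discount factor for accumulated future rewards


def q_value(V, s, a, gamma=GAMMA):
    """Expected return of taking action a in state s, then following values V."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def value_iteration(tol=1e-10, gamma=GAMMA):
    """Planning with a known model: repeat Bellman optimality backups until stable."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            best = max(q_value(V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place update of the state value
        if delta < tol:
            break
    # The greedy policy picks, in each state, the action with the highest value.
    policy = {s: max(P[s], key=lambda a: q_value(V, s, a, gamma)) for s in P}
    return V, policy


V, policy = value_iteration()
print("state values:", V)
print("greedy policy:", policy)
```

In the model-free case the table `P` would not be available to the agent, so the same values would instead have to be estimated from sampled transitions and rewards obtained by interacting with the environment.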

Depending on the action taken by the agent in the current state

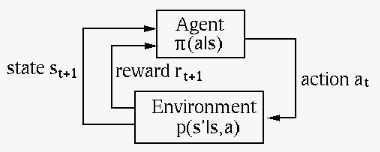

The figure below illustrates the basic concepts of RL, where the agent makes a decision in terms of the action