
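All RL algorithms previously considered are based on either the state value function or the action value function, and the policy is only indirectly derived from them by the greedy or $\epsilon$-greedy method.

Previously we approximated the state value function $v_\pi(s)$ by a parameterized model $\hat{v}_\pi(s,{\bf w})$. Here we directly model the policy itself as a parameterized function:

$$\pi(a\vert s,{\bf\theta})=P(a_t=a\,\vert\,s_t=s,\,{\bf\theta}) \tag{110}$$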

Here ${\bf\theta}=[\theta_1,\cdots,\theta_d]^T$ represents the parameters of the model. As the dimensionality $d$ is typically smaller than the number of states and actions, such a parameterized policy model is suitable in cases where the state and action spaces are large or even continuous.

As a specific example, the policy model can be based on the soft-max function:

$$\pi(a\vert s,{\bf\theta})=\frac{e^{h(s,a,{\bf\theta})}}{\sum_b e^{h(s,b,{\bf\theta})}} \tag{111}$$

satisfying $\sum_a\pi(a\vert s,{\bf\theta})=1$. Here the summation is over all possible actions, and $h(s,a,{\bf\theta})$ is the preference of action $a$ in state $s$, which can be a parameterized function such as a simple linear function $h(s,a,{\bf\theta})={\bf\theta}^T{\bf x}(s,a)$ of a feature vector ${\bf x}(s,a)$, or a neural network with weights represented by ${\bf\theta}$, the same as how the value functions are approximated in Section 1.5. According to this policy, an action with a higher preference $h(s,a,{\bf\theta})$ has a higher probability of being chosen.
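As a minimal sketch of the soft-max policy with linear preferences, the Python code below computes the action probabilities in a single state. The function name `softmax_policy`, the feature vectors in `features`, and the parameter values are hypothetical, chosen only for illustration.

```python
import math

def softmax_policy(theta, features):
    """Return the soft-max action probabilities for one state.

    theta:    list of d policy parameters
    features: one feature vector x(s, a) per action (hypothetical values)
    """
    # Linear preference of each action: h(s, a, theta) = theta^T x(s, a)
    prefs = [sum(t * x for t, x in zip(theta, xa)) for xa in features]
    # Subtract the largest preference for numerical stability;
    # this does not change the resulting probabilities
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

theta = [0.5, -1.0]                               # d = 2 parameters
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three actions
probs = softmax_policy(theta, features)
print(probs)
```

The preferences here are 0.5, -1.0 and -0.5, so the first action receives the highest probability and the second the lowest, while the three probabilities add up to one.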

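Different from how we find the parameter ${\bf w}$ of a value-function model by minimizing an approximation error, the parameter ${\bf\theta}$ of the policy model is found by maximizing an objective function $J(\theta)$ that measures how good the policy is.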

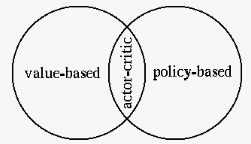

The value-function based methods considered previously and the policy-based methods considered here are summarized below, together with the actor-critic method, as the combination of the two:

In value-function based methods, the policy is derived by the $\epsilon$-greedy method based on the value function learned during sampling the environment as a supervised learning process.

The advantages of policy-based RL include better convergence properties, effectiveness in high-dimensional or continuous action spaces, and the ability to learn stochastic policies. Its disadvantages are that it may get stuck at a local optimum, and that its gradient estimates have high variance.

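How good a policy is may be measured by different objective functions, all related to the values or rewards associated with the policy being evaluated, depending on the environment of the specific problem.

For an episodic environment with start state $s_0$, the objective can be the value of the start state, $J(\theta)=v_\pi(s_0)$. For a continuing environment, the objective can be either the average state value or the average reward per time step:

$$J_{avV}(\theta)=\sum_s d_\pi(s)\,v_\pi(s) \tag{112}$$

$$J_{avR}(\theta)=\sum_s d_\pi(s)\sum_a\pi(a\vert s,{\bf\theta})\,r(s,a) \tag{113}$$

where $d_\pi(s)$ is the stationary distribution of all states under policy $\pi$.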

We find the optimal parameter ${\bf\theta}^*$ for the policy model $\pi(a\vert s,{\bf\theta})$ by solving the maximization problem:

$${\bf\theta}^*=\arg\max_\theta J(\theta) \tag{114}$$

This problem can be solved iteratively by the gradient ascent method, $\theta_{t+1}=\theta_t+\alpha\,\triangledown_\theta J(\theta_t)$, where $\alpha$ is the step size and the gradient is:

$$\triangledown_\theta J(\theta)=\frac{d}{d{\theta}}J(\theta)=\left[\frac{\partial J}{\partial\theta_1},\cdots,\frac{\partial J}{\partial\theta_d}\right]^T \tag{115}$$

Here we use $t$ for the index of the iteration, based on the assumption that a new sample point is available at every time step of an episode while sampling the environment following policy $\pi$.
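The gradient ascent iteration can be illustrated on a toy problem. The sketch below assumes a single state with three actions, hypothetical expected rewards `rewards`, and preferences equal to the parameters themselves, so that the objective $J(\theta)=\sum_a\pi(a\vert\theta)\,r(a)$ and its gradient $\partial J/\partial\theta_k=\pi_k\,(r_k-J)$ are available in closed form. The step size and the number of iterations are arbitrary choices.

```python
import math

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def objective(theta, rewards):
    # J(theta) = sum_a pi(a | theta) r(a) for a single-state problem
    return sum(p * r for p, r in zip(softmax(theta), rewards))

def gradient(theta, rewards):
    # dJ/dtheta_k = pi_k (r_k - J) when the preferences are theta itself
    probs = softmax(theta)
    j = sum(p * r for p, r in zip(probs, rewards))
    return [p * (r - j) for p, r in zip(probs, rewards)]

rewards = [1.0, 2.0, 3.0]   # hypothetical expected rewards of the actions
theta = [0.0, 0.0, 0.0]     # start from the uniform policy
alpha = 0.5                 # step size (assumed)
history = [objective(theta, rewards)]
for t in range(500):
    g = gradient(theta, rewards)
    # Gradient ascent step: theta <- theta + alpha * grad J(theta)
    theta = [th + alpha * gk for th, gk in zip(theta, g)]
    history.append(objective(theta, rewards))

final_probs = softmax(theta)
print(history[0], history[-1], final_probs)
```

Starting from the uniform policy with $J=2$, the iteration shifts probability toward the action with the largest reward, so the objective approaches its maximum of 3.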

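While it is conceptually straightforward to see how the gradient ascent method can be used to find the optimal parameter ${\bf\theta}^*$, computing the gradient $\triangledown_\theta J(\theta)$ is not trivial, as $J(\theta)$ depends on both the actions selected and the distribution of the states visited while following the policy. The policy gradient theorem expresses this gradient in a form that can be estimated from samples.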

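Proof of policy gradient theorem:

Taking the gradient of the state value function $v_\pi(s)=\sum_a\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)$ with respect to ${\bf\theta}$, we get

$$\begin{aligned}
\triangledown_\theta v_\pi(s)
&=\sum_a\left[\triangledown_\theta\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)+\pi(a\vert s,{\bf\theta})\,\triangledown_\theta q_\pi(s,a)\right]\\
&=\sum_a\left[\triangledown_\theta\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)+\pi(a\vert s,{\bf\theta})\,\triangledown_\theta\sum_{s',r}p(s',r\vert s,a)\left(r+v_\pi(s')\right)\right]\\
&=\sum_a\left[\triangledown_\theta\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)+\pi(a\vert s,{\bf\theta})\sum_{s'}p(s'\vert s,a)\,\triangledown_\theta v_\pi(s')\right]\\
&=\sum_a\triangledown_\theta\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)+\sum_{s'}\sum_a\pi(a\vert s,{\bf\theta})\,p(s'\vert s,a)\,\triangledown_\theta v_\pi(s')
\end{aligned}$$

Here neither the reward $r$ nor the transition probability $p(s',r\vert s,a)$ is a function of ${\bf\theta}$, $q_\pi(s,a)$ is expanded as in Eq. (17) with $\gamma=1$ assumed for simplicity, $\pi(a\vert s,{\bf\theta})$ is independent of $s'$ and moved inside the summation over $s'$, and $\triangledown_\theta v_\pi(s)$ is expressed as a recursive function in terms of $\triangledown_\theta v_\pi(s')$.

We further define

$$\phi(s)=\sum_a\triangledown_\theta\pi(a\vert s,{\bf\theta})\,q_\pi(s,a) \tag{119}$$

and the probability $Pr(s\rightarrow x,k)$ of transitioning from state $s$ to state $x$ in $k$ steps while following policy $\pi$, with $Pr(s\rightarrow s,0)=1$ and $Pr(s\rightarrow s',1)=\sum_a\pi(a\vert s,{\bf\theta})\,p(s'\vert s,a)$, and get:

$$\begin{aligned}
\triangledown_\theta v_\pi(s)
&=\phi(s)+\sum_{s'}Pr(s\rightarrow s',1)\,\triangledown_\theta v_\pi(s')\\
&=\phi(s)+\sum_{s'}Pr(s\rightarrow s',1)\left[\phi(s')+\sum_{s''}Pr(s'\rightarrow s'',1)\,\triangledown_\theta v_\pi(s'')\right]\\
&=\phi(s)+\sum_{s'}Pr(s\rightarrow s',1)\,\phi(s')+\sum_{s''}Pr(s\rightarrow s'',2)\,\triangledown_\theta v_\pi(s'')\\
&=\cdots=\sum_x\sum_{k=0}^\infty Pr(s\rightarrow x,k)\,\phi(x)
\end{aligned}$$

The gradient of the objective $J(\theta)=v_\pi(s_0)$ can be written as

$$\begin{aligned}
\triangledown_\theta J(\theta)&=\triangledown_\theta v_\pi(s_0)=\sum_s\sum_{k=0}^\infty Pr(s_0\rightarrow s,k)\,\phi(s)=\sum_s\eta(s)\,\phi(s)\\
&=\left(\sum_{s'}\eta(s')\right)\sum_s\frac{\eta(s)}{\sum_{s'}\eta(s')}\,\phi(s)\propto\sum_s\mu(s)\sum_a q_\pi(s,a)\,\triangledown_\theta\pi(a\vert s,{\bf\theta})
\end{aligned} \tag{125}$$

Here we treat $\sum_{s'}\eta(s')$ as a constant independent of state $s$, and we also defined

$$\eta(s)=\sum_{k=0}^\infty Pr(s_0\rightarrow s,k) \tag{126}$$

as the sum of the probabilities of reaching state $s$ in any number of steps from the start state $s_0$, and $\mu(s)=\eta(s)/\sum_{s'}\eta(s')$ as a normalized version of $\eta(s)$ representing the probability distribution of visiting state $s$ while following policy $\pi$. Eq. (125) can be further written as

$$\triangledown_\theta J(\theta)\propto\sum_s\mu(s)\sum_a\pi(a\vert s,{\bf\theta})\,q_\pi(s,a)\,\frac{\triangledown_\theta\pi(a\vert s,{\bf\theta})}{\pi(a\vert s,{\bf\theta})}=E_\pi\left[q_\pi(s,a)\,\triangledown_\theta\ln\pi(a\vert s,{\bf\theta})\right] \tag{128}$$

where $E_\pi$ denotes the expectation over all actions $a$ in each state $s$ weighted by $\pi(a\vert s,{\bf\theta})$ and all states $s$ weighted by $\mu(s)$.

Q.E.D.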

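We see that the gradient $\triangledown_\theta J(\theta)$ is expressed in terms of the gradient of the policy $\triangledown_\theta\pi(a\vert s,{\bf\theta})$ only, and does not involve the derivative of the state distribution.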

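We further note that Eq. (125) still holds if an arbitrary bias term $b(s)$, independent of the action $a$, is subtracted from $q_\pi(s,a)$:

$$\triangledown_\theta J(\theta)\propto\sum_s\mu(s)\sum_a\left(q_\pi(s,a)-b(s)\right)\triangledown_\theta\pi(a\vert s,{\bf\theta}) \tag{131}$$

with the same state distribution $\mu(s)$ as in Eq. (125), because $\sum_a b(s)\,\triangledown_\theta\pi(a\vert s,{\bf\theta})=b(s)\,\triangledown_\theta\sum_a\pi(a\vert s,{\bf\theta})=b(s)\,\triangledown_\theta 1=0$. The expectation in Eq. (128) then becomes

$$\triangledown_\theta J(\theta)\propto E_\pi\left[\left(q_\pi(s,a)-b(s)\right)\triangledown_\theta\ln\pi(a\vert s,{\bf\theta})\right] \tag{132}$$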
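That an action-independent bias term leaves the gradient unchanged can be checked numerically. The sketch below assumes a single state with a tabular soft-max policy whose preferences are the parameters themselves; the values in `theta` and `q` and the helper names are hypothetical.

```python
import math

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def policy_jacobian(theta):
    # d pi_a / d theta_k = pi_a (delta_ak - pi_k) for preferences h_a = theta_a
    probs = softmax(theta)
    n = len(theta)
    return [[probs[a] * ((1.0 if a == k else 0.0) - probs[k])
             for k in range(n)] for a in range(n)]

def gradient_with_bias(theta, q, b):
    # sum_a (q_a - b) * grad pi_a, the inner sum of the policy gradient
    jac = policy_jacobian(theta)
    n = len(theta)
    return [sum((q[a] - b) * jac[a][k] for a in range(n)) for k in range(n)]

theta = [0.2, -0.4, 1.0]   # hypothetical policy parameters
q = [1.0, 0.5, 2.0]        # hypothetical action values in this state
g_no_bias = gradient_with_bias(theta, q, 0.0)
g_bias = gradient_with_bias(theta, q, 5.0)
print(g_no_bias)
print(g_bias)
```

The two printed vectors agree up to rounding error, since the rows of the Jacobian sum to zero over the actions. In practice the bias term is chosen to reduce the variance of sampled estimates, which this exact computation does not show.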

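Specifically, for a soft-max policy model as given in Eq. (111), with $h(s,a,{\bf\theta})$ denoting the preference of action $a$ in state $s$, we have

$$\begin{aligned}
\triangledown_\theta\ln\pi(a\vert s,{\bf\theta})
&=\triangledown_\theta\left[h(s,a,{\bf\theta})-\ln\sum_b e^{h(s,b,{\bf\theta})}\right]\\
&=\triangledown_\theta h(s,a,{\bf\theta})-\frac{\sum_b e^{h(s,b,{\bf\theta})}\,\triangledown_\theta h(s,b,{\bf\theta})}{\sum_c e^{h(s,c,{\bf\theta})}}\\
&=\triangledown_\theta h(s,a,{\bf\theta})-\sum_b\pi(b\vert s,{\bf\theta})\,\triangledown_\theta h(s,b,{\bf\theta})
\end{aligned} \tag{133}$$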
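For linear preferences the gradient of each preference is simply its feature vector, so the gradient of the log-policy is the feature vector of the chosen action minus the policy-weighted average feature vector. The sketch below compares this expression against a central finite difference; the feature vectors, parameter values and function names are hypothetical.

```python
import math

def softmax_probs(theta, features):
    # Linear preferences h(s, a) = theta^T x(s, a), one feature vector per action
    prefs = [sum(t * x for t, x in zip(theta, xa)) for xa in features]
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def score(theta, features, a):
    # grad ln pi(a|s) = x(s, a) - sum_b pi(b|s) x(s, b)
    probs = softmax_probs(theta, features)
    d = len(theta)
    mean_x = [sum(p * xb[k] for p, xb in zip(probs, features)) for k in range(d)]
    return [features[a][k] - mean_x[k] for k in range(d)]

def numeric_score(theta, features, a, eps=1e-6):
    # Central finite difference of ln pi(a|s) with respect to each parameter
    grad = []
    for k in range(len(theta)):
        plus = list(theta)
        plus[k] += eps
        minus = list(theta)
        minus[k] -= eps
        diff = (math.log(softmax_probs(plus, features)[a])
                - math.log(softmax_probs(minus, features)[a]))
        grad.append(diff / (2 * eps))
    return grad

theta = [0.5, -1.0]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
analytic = score(theta, features, a=2)
numeric = numeric_score(theta, features, a=2)
print(analytic)
print(numeric)
```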

We list a set of popular policy-based algorithms below, based on either the MC or the TD methods used in the previous algorithms.

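In the REINFORCE (Monte Carlo policy gradient) algorithm, the action value function $q_\pi(s_t,a_t)$ in the policy gradient is estimated by the return $G_t$ actually obtained in an episode sampled by the MC method, and the parameter is updated at each time step by:

$$\theta_{t+1}=\theta_t+\alpha\,\gamma^t\,G_t\,\triangledown_\theta\ln\pi(a_t\vert s_t,{\bf\theta}_t) \tag{134}$$

The factor $\gamma^t$ is included as the expression for $\triangledown_\theta J(\theta)$ in Eq. (128) assumed $\gamma=1$ for simplicity.

Here is the pseudo code for the algorithm:

- Initialize ${\bf\theta}$
- Loop (for each episode):
  - Generate an episode $s_0,a_0,r_1,\cdots,s_{T-1},a_{T-1},r_T$ following $\pi(\cdot\vert\cdot,{\bf\theta})$
  - Loop for each state $s_t$ visited ($t=0,\cdots,T-1$):
    - $G_t=\sum_{k=t+1}^T\gamma^{k-t-1}r_k$
    - ${\bf\theta}={\bf\theta}+\alpha\,\gamma^t\,G_t\,\triangledown_\theta\ln\pi(a_t\vert s_t,{\bf\theta})$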
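The Monte Carlo update can be sketched in Python as follows, assuming a tabular soft-max policy with one preference `theta[s][a]` per state-action pair. The demonstration environment, with a single state, one-step episodes and a reward of 1 for action 1 only, is a hypothetical toy problem, and the step size is an arbitrary choice.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_update(theta, episode, alpha, gamma):
    """Update theta in place from one episode of (state, action, reward)
    triples, where the reward is the one received after the action."""
    # Compute the returns G_t backwards from the end of the episode
    returns = [0.0] * len(episode)
    g = 0.0
    for t in reversed(range(len(episode))):
        g = episode[t][2] + gamma * g
        returns[t] = g
    # One gradient step per state visited
    for t, (s, a, _) in enumerate(episode):
        probs = softmax(theta[s])
        for k in range(len(theta[s])):
            # d ln pi(a|s) / d theta[s][k] for tabular preferences
            grad_ln_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[s][k] += alpha * (gamma ** t) * returns[t] * grad_ln_pi
    return theta

# Toy demonstration: one state, two actions, action 1 is rewarded
rng = random.Random(0)
theta = [[0.0, 0.0]]
for _ in range(200):
    probs = softmax(theta[0])
    a = rng.choices([0, 1], weights=probs)[0]
    r = 1.0 if a == 1 else 0.0
    reinforce_update(theta, [(0, a, r)], alpha=0.5, gamma=1.0)
final_probs = softmax(theta[0])
print(final_probs)
```

As rewards in this toy problem are never negative and only action 1 is rewarded, every update with a nonzero return raises the probability of action 1. In general the sampled returns make the updates noisy, which is the high variance mentioned earlier.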

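As its name suggests, the actor-critic algorithm is based on two approximation function models, the first for the policy $\pi(a\vert s,{\bf\theta})$ (the actor) parameterized by ${\bf\theta}$, and the second for the value function $\hat{v}_\pi(s,{\bf w})$ (the critic) parameterized by ${\bf w}$.

Specifically, in Eq. (132), the action value function $q_\pi(s,a)$ is replaced by the TD target $r_{t+1}+\gamma\hat{v}_\pi(s',{\bf w})$ and the bias term by $\hat{v}_\pi(s,{\bf w})$, so that both parameters are updated based on the same TD error:

$$\begin{aligned}
{\bf w}_{t+1}&={\bf w}_t+\alpha_w\left[(r_{t+1}+\gamma\hat{v}_\pi(s',{\bf w})-\hat{v}_\pi(s,{\bf w}))\,\triangledown_w\hat{v}_\pi(s,{\bf w})\right]\\
\theta_{t+1}&=\theta_t+\alpha_\theta\left[(r_{t+1}+\gamma\hat{v}_\pi(s',{\bf w})-\hat{v}_\pi(s,{\bf w}))\,\triangledown_\theta\ln\pi(a\vert s,{\bf\theta})\right]
\end{aligned} \tag{135}$$

Here is the pseudo code for the algorithm:

- Set step sizes $\alpha_\theta$ and $\alpha_w$, initialize ${\bf\theta}$ and ${\bf w}$
- Loop (for each episode):
  - Initialize state $s$
  - While $s$ is not terminal (for each step):
    - Take action $a$ following $\pi(\cdot\vert s,{\bf\theta})$, find reward $r$ and next state $s'$
    - $\delta=r+\gamma\hat{v}_\pi(s',{\bf w})-\hat{v}_\pi(s,{\bf w})$, with $\hat{v}_\pi(s',{\bf w})=0$ if $s'$ is terminal
    - ${\bf w}={\bf w}+\alpha_w\,\delta\,\triangledown_w\hat{v}_\pi(s,{\bf w})$ (value function)
    - ${\bf\theta}={\bf\theta}+\alpha_\theta\,\delta\,\triangledown_\theta\ln\pi(a\vert s,{\bf\theta})$ (policy gradient)
    - $s=s'$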
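A single step of the two updates in Eq. (135) can be sketched as below, assuming tabular models for both the critic (`w[s]` is the estimated value of state `s`, so its gradient is 1 for the visited state) and the actor (`theta[s][a]` are soft-max preferences). The function name, the transition and the step sizes are hypothetical.

```python
import math

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def actor_critic_step(theta, w, s, a, r, s_next, terminal,
                      alpha_theta, alpha_w, gamma):
    """Apply one actor-critic update in place and return the TD error."""
    # TD error, with the value of a terminal next state taken as zero
    v_next = 0.0 if terminal else w[s_next]
    delta = r + gamma * v_next - w[s]
    # Action probabilities under the current policy, before any update
    probs = softmax(theta[s])
    # Critic: the gradient of a tabular value w[s] with respect to w[s] is 1
    w[s] += alpha_w * delta
    # Actor: d ln pi(a|s) / d theta[s][k] = 1[k == a] - pi(k|s)
    for k in range(len(theta[s])):
        grad_ln_pi = (1.0 if k == a else 0.0) - probs[k]
        theta[s][k] += alpha_theta * delta * grad_ln_pi
    return delta

theta = [[0.0, 0.0], [0.0, 0.0]]   # two states, two actions
w = [0.0, 1.0]                     # current value estimates
delta = actor_critic_step(theta, w, s=0, a=1, r=1.0, s_next=1,
                          terminal=False, alpha_theta=0.2, alpha_w=0.1,
                          gamma=0.5)
print(delta, w, theta)
```

In this call the TD error is $1+0.5\times 1-0=1.5$, so the value of state 0 rises by 0.15, the preference of the action taken rises by 0.15, and that of the other action falls by 0.15.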

Here is the pseudo code for the algorithm:

- While $s$ is not terminal (for each step):
  - Take action $a$ following $\pi(\cdot\vert s,{\bf\theta})$, find reward $r$ and next state $s'$

Following similar steps, the policy gradient theorem for environments with continuous state and action spaces can also be proven:

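$$\triangledown_\theta J(\theta)\propto\int_S\mu(s)\int_A q_\pi(s,a)\,\triangledown_\theta\pi(a\vert s,{\bf\theta})\,da\,ds \tag{136}$$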