
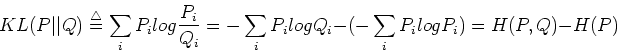

The KL-divergence between two distributions $p(x)$ and $q(x)$ is defined as

$$KL(p\|q) = \int p(x)\log\frac{p(x)}{q(x)}\,dx = -\int p(x)\log q(x)\,dx + \int p(x)\log p(x)\,dx = H(p,q) - H(p)$$

where $H(p) = -\int p(x)\log p(x)\,dx$ is the entropy of distribution $p$, $H(p,q) = -\int p(x)\log q(x)\,dx$ is the cross-entropy of distributions $p$ and $q$, and their difference, also called relative entropy, represents the divergence or difference between the two distributions.

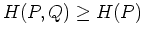
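As a small numerical illustration, the sketch below evaluates the discrete form of the KL-divergence, $\sum_i p_i \log(p_i/q_i)$, and checks that it equals the cross-entropy minus the entropy. The function names and the example vectors `p` and `q` are illustrative assumptions, not part of the original notes, and the code assumes $q_i > 0$ wherever $p_i > 0$.

```python
import math

def entropy(p):
    # H(p) = -sum_i p_i log p_i; terms with p_i = 0 contribute nothing
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i log q_i (assumes q_i > 0 wherever p_i > 0)
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    # KL(p||q) = sum_i p_i log(p_i / q_i), in nats
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example distributions over three outcomes
p = [0.5, 0.3, 0.2]
q = [0.4, 0.4, 0.2]

kl = kl_divergence(p, q)
gap = cross_entropy(p, q) - entropy(p)
print(kl, gap)  # the two values agree up to floating-point rounding
```

Note that the divergence is not symmetric: `kl_divergence(q, p)` generally differs from `kl_divergence(p, q)`.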

According Gibbs' inequality,

, and their difference, also called relative

entropy, represents the divergence or difference between the two distributions.

According Gibbs' inequality,

, with the equality holds

, with the equality holds

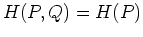

if and only if

if and only if  ,

,

.

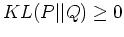

.
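One standard way to see why the KL-divergence is non-negative, offered here as a sketch rather than as the derivation used in these notes, relies on the elementary bound $\log t \le t - 1$, which holds for all $t > 0$ with equality only at $t = 1$. Writing $p(x)$ and $q(x)$ for the two densities and integrating over the region where $p(x) > 0$:

$$-KL(p\|q) = \int p(x)\log\frac{q(x)}{p(x)}\,dx \le \int p(x)\left(\frac{q(x)}{p(x)} - 1\right)dx = \int q(x)\,dx - \int p(x)\,dx \le 1 - 1 = 0$$

Equality requires $q(x)/p(x) = 1$ wherever $p(x) > 0$, that is, $p = q$.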

Ruye Wang

2018-03-26
