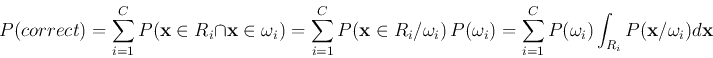

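First consider the case of two classes, $C_1$ and $C_2$. Let $P(\mathbf{x}\in R_i,\ \mathbf{x}\in C_j)$ denote the joint probability that $\mathbf{x}$ belongs to $C_j$ but is in $R_i$ ($i\neq j$). Here $R_i$ is the region of the feature space that the classifier assigns to class $C_i$. The total probability of error (misclassification) is then:

$$
P(\text{error}) = P(\mathbf{x}\in R_1,\ \mathbf{x}\in C_2) + P(\mathbf{x}\in R_2,\ \mathbf{x}\in C_1)
= \int_{R_1} p(\mathbf{x}|C_2)\,P(C_2)\,d\mathbf{x} + \int_{R_2} p(\mathbf{x}|C_1)\,P(C_1)\,d\mathbf{x}
$$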

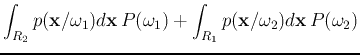

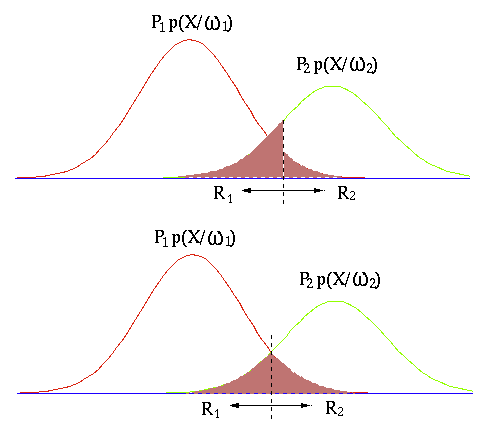
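To make the two error terms concrete, the sketch below evaluates $P(\text{error})$ for a one-dimensional example. It assumes Gaussian class-conditional densities and a simple threshold rule ($x < t$ is assigned to $C_1$, so $R_1 = (-\infty, t)$ and $R_2 = [t, \infty)$). These assumptions are for illustration only; the derivation above holds for any densities and regions. The helper names (`normal_pdf`, `error_probability`, `error_by_integration`) are hypothetical. The error is computed once in closed form via the Gaussian CDF and once by numerically integrating the two integrals term by term.

```python
import math

def normal_pdf(x, mu, sigma):
    """Gaussian density, used here as an assumed p(x|C_i)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def normal_cdf(x, mu, sigma):
    """Gaussian cumulative distribution function via math.erf."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def error_probability(t, mu1, s1, p1, mu2, s2, p2):
    """Closed-form P(error) for the rule: x < t -> C1 (R1), x >= t -> C2 (R2)."""
    # P(x in R1, x in C2) = integral over R1 of p(x|C2) P(C2)
    term_r1 = p2 * normal_cdf(t, mu2, s2)
    # P(x in R2, x in C1) = integral over R2 of p(x|C1) P(C1)
    term_r2 = p1 * (1.0 - normal_cdf(t, mu1, s1))
    return term_r1 + term_r2

def error_by_integration(t, mu1, s1, p1, mu2, s2, p2, lo=-20.0, hi=20.0, n=200_000):
    """Midpoint-rule approximation of the same two integrals."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * h
        if x < t:   # x falls in R1: misclassified if it came from C2
            total += normal_pdf(x, mu2, s2) * p2 * h
        else:       # x falls in R2: misclassified if it came from C1
            total += normal_pdf(x, mu1, s1) * p1 * h
    return total

if __name__ == "__main__":
    # Threshold t, then (mean, std, prior) for C1 and for C2.
    args = (1.0, 0.0, 1.0, 0.5, 2.0, 1.0, 0.5)
    print(error_probability(*args), error_by_integration(*args))
```

With equal priors, unit variances, means 0 and 2, and $t = 1$, both functions return approximately $0.1587$, the standard normal tail probability beyond one standard deviation.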

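It follows that the Bayes classifier is optimal. It assigns each $\mathbf{x}$ to the class with the larger value of $p(\mathbf{x}|C_i)P(C_i)$. As a result, at every point the integrand that contributes to $P(\text{error})$ is the smaller of the two terms $p(\mathbf{x}|C_1)P(C_1)$ and $p(\mathbf{x}|C_2)P(C_2)$. Its decision boundaries, where $p(\mathbf{x}|C_1)P(C_1) = p(\mathbf{x}|C_2)P(C_2)$, therefore guarantee that the classification error is minimized.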
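The optimality argument can be checked numerically in a special case. The sketch below assumes two one-dimensional Gaussian classes with equal variance $\sigma^2$ and means $\mu_1 < \mu_2$. These assumptions are for illustration and are not taken from the derivation above. Under them, solving $p(x|C_1)P(C_1) = p(x|C_2)P(C_2)$ gives the boundary $t^* = \frac{\mu_1+\mu_2}{2} + \frac{\sigma^2 \ln(P(C_1)/P(C_2))}{\mu_2-\mu_1}$. The code then scans other thresholds and confirms that none of them yields a smaller error. Function and variable names are hypothetical.

```python
import math

def normal_pdf(x, mu, sigma):
    """Gaussian density, used as an assumed p(x|C_i)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def normal_cdf(x, mu, sigma):
    """Gaussian cumulative distribution function via math.erf."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def error_probability(t, mu1, mu2, sigma, p1, p2):
    """P(error) for the rule x < t -> C1, x >= t -> C2 (assumes mu1 < mu2)."""
    return p2 * normal_cdf(t, mu2, sigma) + p1 * (1.0 - normal_cdf(t, mu1, sigma))

def bayes_threshold(mu1, mu2, sigma, p1, p2):
    """Point where p(x|C1)P(C1) = p(x|C2)P(C2) for equal-variance Gaussians."""
    return 0.5 * (mu1 + mu2) + sigma ** 2 * math.log(p1 / p2) / (mu2 - mu1)

# Illustrative parameters: unequal priors shift the boundary toward mu2.
mu1, mu2, sigma, p1, p2 = 0.0, 2.0, 1.0, 0.7, 0.3
t_star = bayes_threshold(mu1, mu2, sigma, p1, p2)

# At the Bayes boundary the two weighted densities coincide.
print(p1 * normal_pdf(t_star, mu1, sigma), p2 * normal_pdf(t_star, mu2, sigma))

# Scan thresholds from -2 to 4 in steps of 0.01 and find the best one on the grid.
grid = [-2.0 + 0.01 * k for k in range(601)]
errors = [error_probability(t, mu1, mu2, sigma, p1, p2) for t in grid]
best_t = grid[errors.index(min(errors))]
print(t_star, best_t, error_probability(t_star, mu1, mu2, sigma, p1, p2), min(errors))
```

The grid minimizer lands within one grid step of $t^*$, and no scanned threshold does better than $t^*$. This matches the argument that the Bayes boundary keeps only the smaller weighted density in the error integrand.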

Next consider the multi-class case. As there are many different ways to make a wrong classification and only one way to get it right, consider