SVD Theorem: An $M\times N$ matrix ${\bf A}$ of rank $R$ can be diagonalized by two unitary matrices ${\bf U}$ and ${\bf V}$:

$${\bf U}^*{\bf A}{\bf V}={\bf\Sigma}$$

where

- ${\bf U}_{M\times M}=[{\bf u}_1,\ldots,{\bf u}_M]$ is a unitary $M\times M$ matrix (${\bf U}^*{\bf U}={\bf I}$) composed of the left singular vectors satisfying ${\bf A}{\bf A}^*{\bf u}_m=\lambda_m{\bf u}_m$ $(m=1,\ldots,M)$, or in matrix form ${\bf A}{\bf A}^*{\bf U}={\bf U}({\bf\Sigma}{\bf\Sigma}^*)$.

- ${\bf V}_{N\times N}=[{\bf v}_1,\ldots,{\bf v}_N]$ is a unitary $N\times N$ matrix (${\bf V}^*{\bf V}={\bf I}$) composed of the right singular vectors satisfying ${\bf A}^*{\bf A}{\bf v}_n=\lambda_n{\bf v}_n$ $(n=1,\ldots,N)$, or in matrix form ${\bf A}^*{\bf A}{\bf V}={\bf V}({\bf\Sigma}^*{\bf\Sigma})={\bf V}{\bf\Lambda}$.

- ${\bf\Sigma}$ is an $M\times N$ diagonal matrix with $R$ non-zero singular values $\sigma_k=\sqrt{\lambda_k}$ $(k=1,\ldots,R)$ of ${\bf A}$ along its diagonal.
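The statement of the theorem can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the random $4\times 3$ complex test matrix and all variable names are illustrative choices, not part of the original notes.

```python
import numpy as np

rng = np.random.default_rng(0)
M, N = 4, 3
# Illustrative complex test matrix (an assumption, not from the notes)
A = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))

# full_matrices=True returns U (M x M), the singular values s, and Vh = V^* (N x N)
U, s, Vh = np.linalg.svd(A, full_matrices=True)
V = Vh.conj().T

# Build the M x N diagonal matrix Sigma from the singular values
Sigma = np.zeros((M, N))
Sigma[:len(s), :len(s)] = np.diag(s)

# U and V are unitary
unitary_U = np.allclose(U.conj().T @ U, np.eye(M))
unitary_V = np.allclose(V.conj().T @ V, np.eye(N))

# U^* A V = Sigma
diagonalized = np.allclose(U.conj().T @ A @ V, Sigma)

# Columns of U and V are eigenvectors of A A^* and A^* A, respectively
left_ok = np.allclose(A @ A.conj().T @ U, U @ (Sigma @ Sigma.T))
right_ok = np.allclose(A.conj().T @ A @ V, V @ (Sigma.T @ Sigma))

print(unitary_U, unitary_V, diagonalized, left_ok, right_ok)
```

Note that `np.linalg.svd` returns ${\bf V}^*$ rather than ${\bf V}$, so the conjugate transpose is taken once to recover ${\bf V}$.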

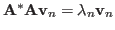

Proof: Let $\lambda_n$ and ${\bf v}_n$ be the $n$th eigenvalue and the corresponding normalized eigenvector of the $N\times N$ matrix ${\bf A}^*{\bf A}$, satisfying the following eigenequation:

$${\bf A}^*{\bf A}{\bf v}_n=\lambda_n{\bf v}_n\;\;\;\;(n=1,\ldots,N)$$

As ${\bf A}^*{\bf A}$ is Hermitian (symmetric if ${\bf A}$ is real), its eigenvalues $\lambda_n$ are real and its normalized eigenvectors are orthonormal: ${\bf v}_i^*{\bf v}_j=\delta_{ij}$, i.e., the eigenvector matrix defined as ${\bf V}=[{\bf v}_1,\ldots,{\bf v}_N]$ is unitary (orthogonal if ${\bf A}$ is real):

$${\bf V}^*{\bf V}={\bf I}$$

The eigenequation can be written in matrix form:

$${\bf A}^*{\bf A}{\bf V}={\bf V}{\bf\Lambda}$$

where ${\bf\Lambda}=diag[\lambda_1,\cdots,\lambda_N]$.

Pre-multiplying ${\bf A}$ on both sides of ${\bf A}^*{\bf A}{\bf v}_n=\lambda_n{\bf v}_n$ we get

$${\bf A}{\bf A}^*({\bf A}{\bf v}_n)=\lambda_n({\bf A}{\bf v}_n)$$

We see that ${\bf A}{\bf v}_n$ is the eigenvector of the Hermitian matrix ${\bf A}{\bf A}^*$ corresponding to its eigenvalue $\lambda_n$, with norm:

$$||{\bf A}{\bf v}_n||^2=({\bf A}{\bf v}_n)^*({\bf A}{\bf v}_n)={\bf v}_n^*{\bf A}^*{\bf A}{\bf v}_n=\lambda_n{\bf v}_n^*{\bf v}_n=\lambda_n$$

In particular $\lambda_n\ge 0$, and as ${\bf A}^*{\bf A}$ has the same rank $R$ as ${\bf A}$, exactly $R$ of the eigenvalues are non-zero; we order them so that $\lambda_1\ge\cdots\ge\lambda_R>0$. For these we define the normalized eigenvector of ${\bf A}{\bf A}^*$ as

$${\bf u}_n=\frac{{\bf A}{\bf v}_n}{\sqrt{\lambda_n}}\;\;\;\;(n=1,\ldots,R)$$

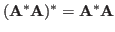

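As ${\bf A}{\bf A}^*$ is Hermitian, its normalized eigenvectors are orthonormal, which can also be shown as:

$${\bf u}_i^*{\bf u}_j=\frac{{\bf v}_i^*{\bf A}^*{\bf A}{\bf v}_j}{\sqrt{\lambda_i\lambda_j}}=\frac{\lambda_j\,{\bf v}_i^*{\bf v}_j}{\sqrt{\lambda_i\lambda_j}}=\delta_{ij}$$

We define ${\bf U}=[{\bf u}_1,\ldots,{\bf u}_M]$, which is also unitary. When $R<M$, the remaining columns ${\bf u}_{R+1},\ldots,{\bf u}_M$ are any orthonormal eigenvectors of ${\bf A}{\bf A}^*$ for the eigenvalue zero, i.e., an orthonormal basis of the null space of ${\bf A}^*$.

Now we have, for the element in the $i$th row and $j$th column of ${\bf U}^*{\bf A}{\bf V}$,

$${\bf u}_i^*{\bf A}{\bf v}_j=\sqrt{\lambda_j}\,{\bf u}_i^*{\bf u}_j=\sigma_j\,\delta_{ij}\;\;\;\;(j\le R)$$

while ${\bf A}{\bf v}_j={\bf 0}$ for $j>R$, i.e.,

$${\bf U}^*{\bf A}{\bf V}={\bf\Sigma}$$

Q.E.D.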
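The construction used in the proof can be followed step by step in code. The sketch below assumes NumPy and a real random $5\times 3$ test matrix (an illustrative choice); the tolerance `tol` and the use of a complete QR factorization to fill in the remaining $M-R$ columns of ${\bf U}$ are assumptions made for this example, not part of the notes.

```python
import numpy as np

rng = np.random.default_rng(1)
M, N = 5, 3
A = rng.standard_normal((M, N))            # real example matrix (assumption)

# Eigen-decomposition of the Hermitian N x N matrix A^* A
lam, V = np.linalg.eigh(A.conj().T @ A)    # eigh returns eigenvalues in ascending order
order = np.argsort(lam)[::-1]              # reorder to descending
lam, V = lam[order], V[:, order]

# Count the non-zero eigenvalues (tolerance is an illustrative choice)
tol = 1e-12 * lam.max()
R = int(np.sum(lam > tol))
sigma = np.sqrt(lam[:R])

# u_n = A v_n / sqrt(lambda_n) for the R non-zero eigenvalues
U_R = (A @ V[:, :R]) / sigma               # divides column n by sigma[n]

# Complete U with an orthonormal basis of the orthogonal complement of span(U_R)
Q, _ = np.linalg.qr(U_R, mode="complete")
U = np.hstack([U_R, Q[:, R:]])

# M x N diagonal matrix of singular values
Sigma = np.zeros((M, N))
Sigma[:R, :R] = np.diag(sigma)

residual = np.linalg.norm(U.conj().T @ A @ V - Sigma)
print(R, residual)
```

In practice a library SVD routine is preferable to forming ${\bf A}^*{\bf A}$ explicitly, since squaring the matrix also squares its condition number; the code above only mirrors the proof.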

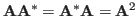

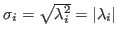

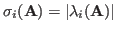

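When ${\bf A}$ is Hermitian (symmetric if real), i.e., ${\bf A}^*={\bf A}$, then if ${\bf A}{\bf v}_n=\lambda_n{\bf v}_n$ (with $\lambda_n$ here denoting an eigenvalue of ${\bf A}$ itself) we also have

$${\bf A}^*{\bf A}{\bf v}_n={\bf A}^2{\bf v}_n=\lambda_n^2{\bf v}_n$$

i.e., the singular values $\sigma_n$ of ${\bf A}$ are the absolute values of its eigenvalues, $\sigma_n=\sqrt{\lambda_n^2}=|\lambda_n|$, and for $\lambda_n\ne 0$ the left singular vectors agree with the eigenvectors up to sign: ${\bf u}_n={\bf A}{\bf v}_n/\sigma_n=(\lambda_n/|\lambda_n|)\,{\bf v}_n$.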
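As a quick numerical illustration of the Hermitian case, the sketch below (assuming NumPy; the random symmetric matrix is an illustrative choice) compares the singular values of a real symmetric, generally indefinite matrix with the absolute values of its eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(2)
B = rng.standard_normal((4, 4))
A = B + B.T                                   # real symmetric, generally indefinite

eigvals = np.linalg.eigvalsh(A)               # real eigenvalues, ascending
sing = np.linalg.svd(A, compute_uv=False)     # singular values, descending

# Sort |eigenvalues| in descending order to match the SVD ordering
abs_sorted = np.sort(np.abs(eigvals))[::-1]
match = np.allclose(sing, abs_sorted)
print(match)
```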

The SVD equation ${\bf U}^*{\bf A}{\bf V}={\bf\Sigma}$ can be considered as the forward SVD transform. Pre-multiplying ${\bf U}$ and post-multiplying ${\bf V}^*$ on both sides, we get the inverse transform:

$${\bf A}={\bf U}{\bf\Sigma}{\bf V}^*=\sum_{k=1}^R \sigma_k[{\bf u}_k{\bf v}_k^*]$$

by which the original matrix ${\bf A}$ is represented as a linear combination of $R$ matrices $[{\bf u}_k{\bf v}_k^*]$ weighted by the singular values $\sigma_k$ $(k=1,\ldots,R)$. We can rewrite both the forward and inverse SVD transform as a pair of forward and inverse transforms:

$$\left\{\begin{array}{ll}{\bf\Sigma}={\bf U}^*{\bf A}{\bf V} & \mbox{(forward)}\\ {\bf A}={\bf U}{\bf\Sigma}{\bf V}^* & \mbox{(inverse)}\end{array}\right.$$
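The inverse transform, written as a weighted sum of the $R$ outer products ${\bf u}_k{\bf v}_k^*$, can be sketched as follows. NumPy is assumed, and the small $2\times 3$ example matrix is a hypothetical choice.

```python
import numpy as np

# Hypothetical 2 x 3 example matrix of rank 2
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

U, s, Vh = np.linalg.svd(A)        # A = U Sigma V^*, with Vh = V^*
R = np.linalg.matrix_rank(A)

# Row k of Vh is v_k^*, so np.outer(U[:, k], Vh[k, :]) is the matrix u_k v_k^*
A_sum = sum(s[k] * np.outer(U[:, k], Vh[k, :]) for k in range(R))

print(np.allclose(A_sum, A))
```

Only the first $R$ columns of ${\bf U}$ and rows of ${\bf V}^*$ enter the sum; the remaining singular vectors are multiplied by zero singular values.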

Given the SVD of an $M\times N$ matrix ${\bf A}={\bf U}{\bf\Sigma}{\bf V}^*$, its pseudo-inverse can be found to be

$${\bf A}^-={\bf V}{\bf\Sigma}^-{\bf U}^*$$

where ${\bf\Sigma}^-$ is the $N\times M$ pseudo-inverse of ${\bf\Sigma}$, composed of the reciprocals $1/\sigma_k$ of the $R$ singular values along the diagonal.
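The pseudo-inverse formula can be sketched in NumPy as below. The rank-1 example matrix and the relative cutoff `1e-10` for deciding which singular values count as non-zero are assumptions made for illustration; the result is compared with NumPy's own `np.linalg.pinv` using the same cutoff.

```python
import numpy as np

# Hypothetical rank-1 example: every row is a multiple of [1, 2]
A = np.array([[1.0, 2.0],
              [2.0, 4.0],
              [3.0, 6.0]])
M, N = A.shape

U, s, Vh = np.linalg.svd(A, full_matrices=True)

# Treat singular values below a relative cutoff as zero (cutoff is an assumption)
tol = 1e-10 * s.max()
R = int(np.sum(s > tol))

# N x M pseudo-inverse of Sigma: reciprocals of the R non-zero singular values
Sigma_pinv = np.zeros((N, M))
Sigma_pinv[:R, :R] = np.diag(1.0 / s[:R])

# A^- = V Sigma^- U^*
A_pinv = Vh.conj().T @ Sigma_pinv @ U.conj().T

# Two of the defining properties of the pseudo-inverse, plus a library comparison
cond1 = np.allclose(A @ A_pinv @ A, A)
cond2 = np.allclose(A_pinv @ A @ A_pinv, A_pinv)
same_as_numpy = np.allclose(A_pinv, np.linalg.pinv(A, rcond=1e-10))
print(R, cond1, cond2, same_as_numpy)
```

Zero singular values are left as zeros in ${\bf\Sigma}^-$ rather than inverted, which is why the cutoff matters for rank-deficient matrices.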

The $M\times N$ matrix ${\bf A}={\bf U}{\bf\Sigma}{\bf V}^*$ can be considered as a linear transformation that converts a vector ${\bf x}$ to another vector ${\bf y}={\bf A}{\bf x}={\bf U}{\bf\Sigma}{\bf V}^*{\bf x}$ in three steps:

- Rotate vector ${\bf x}$ by the unitary matrix ${\bf V}^*$: ${\bf y}_1={\bf V}^*{\bf x}$

- Scale each dimension $k$ of ${\bf y}_1$ by a factor of $\sigma_k$ $(k=1,\ldots,R)$: ${\bf y}_2={\bf\Sigma}{\bf y}_1$

- Rotate vector ${\bf y}_2$ by the unitary matrix ${\bf U}$: ${\bf y}={\bf U}{\bf y}_2$

The figure below illustrates the transformation of the three vertices of a triangle in 2-D space by a matrix ${\bf A}={\bf U}{\bf\Sigma}{\bf V}^*$, which first rotates the vertices by 45 degrees CCW, then scales them horizontally and vertically by factors of 3 and 2, respectively, and finally rotates them CW by 30 degrees.
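The rotate, scale, rotate decomposition described above can be reproduced numerically. In this sketch (assuming NumPy) the angles and scale factors are those stated in the text, while the triangle vertices and the helper `rotation` are hypothetical, since the notes do not give the coordinates used in the figure.

```python
import numpy as np

def rotation(theta_deg):
    """2-D rotation matrix; positive angles rotate counter-clockwise."""
    t = np.deg2rad(theta_deg)
    return np.array([[np.cos(t), -np.sin(t)],
                     [np.sin(t),  np.cos(t)]])

Vh = rotation(45.0)            # V^*: rotate 45 degrees CCW
Sigma = np.diag([3.0, 2.0])    # scale horizontally by 3, vertically by 2
U = rotation(-30.0)            # U: rotate 30 degrees CW
A = U @ Sigma @ Vh

# Hypothetical triangle, one vertex per column (not taken from the notes)
X = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0]])

Y1 = Vh @ X        # step 1: rotate
Y2 = Sigma @ Y1    # step 2: scale each dimension
Y = U @ Y2         # step 3: rotate

same = np.allclose(Y, A @ X)                 # the three steps equal one product A X
sing = np.linalg.svd(A, compute_uv=False)    # singular values of the composite matrix
print(same, sing)
```

Because both rotations are orthogonal, the singular values of the composite matrix are exactly the two scale factors.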

Ruye Wang

2015-04-27


