
The training process consists of a forward pass (shown above) and a backward pass, the back propagation of the error. The following is the 2-step training process for a particular pattern pair, an input pattern and its desired output. The process is repeated many times, in random order, for all pattern pairs.
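The two steps are not written out in this section, so the following is only a sketch of the standard gradient-descent form of back propagation for one hidden layer, under stated assumptions: $g$ is a logistic sigmoid (so $g' = g(1-g)$), weights and thresholds are updated after every pattern pair with a hypothetical learning rate `eta`, and they start uniformly random in $[-1, 1]$. The names `forward`, `train_step`, `train`, `wh`, `Th`, `wo`, `To` are illustrative, standing for $w^h_{jk}$, $T^h_j$, $w^o_{ij}$, $T^o_i$.

```python
import math
import random

def g(a):
    # Activation function g; a logistic sigmoid is assumed here.
    return 1.0 / (1.0 + math.exp(-a))

def forward(x, wh, Th, wo, To):
    # Hidden outputs z_j = g(sum_k wh[j][k] * x[k] + Th[j])
    z = [g(sum(w * xk for w, xk in zip(row, x)) + t) for row, t in zip(wh, Th)]
    # Actual outputs y'_i = g(sum_j wo[i][j] * z[j] + To[i])
    yp = [g(sum(w * zj for w, zj in zip(row, z)) + t) for row, t in zip(wo, To)]
    return z, yp

def train_step(x, y, wh, Th, wo, To, eta):
    """One 2-step update for a single pattern pair (x, y); modifies weights in place."""
    # Step 1: forward pass.
    z, yp = forward(x, wh, Th, wo, To)
    # Step 2: backward pass. With error 1/2 * sum_i (y_i - y'_i)^2 and a sigmoid g,
    # g'(a) = g(a) * (1 - g(a)), giving the output-layer deltas:
    d_o = [(yi - ypi) * ypi * (1.0 - ypi) for yi, ypi in zip(y, yp)]
    # Back-propagate the deltas through the (not yet updated) output weights.
    d_h = [z[j] * (1.0 - z[j]) * sum(d_o[i] * wo[i][j] for i in range(len(wo)))
           for j in range(len(z))]
    # Gradient-descent updates of output-layer weights and thresholds.
    for i in range(len(wo)):
        for j in range(len(z)):
            wo[i][j] += eta * d_o[i] * z[j]
        To[i] += eta * d_o[i]
    # Gradient-descent updates of hidden-layer weights and thresholds.
    for j in range(len(wh)):
        for k in range(len(x)):
            wh[j][k] += eta * d_h[j] * x[k]
        Th[j] += eta * d_h[j]

def train(pairs, L, eta=0.5, epochs=5000, seed=0):
    """Repeat the 2-step update over all pattern pairs, in random order, many times."""
    rng = random.Random(seed)
    N, M = len(pairs[0][0]), len(pairs[0][1])
    wh = [[rng.uniform(-1, 1) for _ in range(N)] for _ in range(L)]
    Th = [rng.uniform(-1, 1) for _ in range(L)]
    wo = [[rng.uniform(-1, 1) for _ in range(L)] for _ in range(M)]
    To = [rng.uniform(-1, 1) for _ in range(M)]
    order = list(range(len(pairs)))
    for _ in range(epochs):
        rng.shuffle(order)  # a fresh random order of the pattern pairs each sweep
        for p in order:
            x, y = pairs[p]
            train_step(x, y, wh, Th, wo, To, eta)
    return wh, Th, wo, To

# Usage: learn logical AND (4 pattern pairs) with L = 2 hidden nodes.
pairs = [([0, 0], [0]), ([0, 1], [0]), ([1, 0], [0]), ([1, 1], [1])]
wh, Th, wo, To = train(pairs, L=2)
for x, y in pairs:
    print(x, y, forward(x, wh, Th, wo, To)[1])
```

The hidden deltas are computed before the output weights change, so both layers are updated from the same forward pass, as a per-pattern gradient step requires.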

The actual output of the ith output node corresponding to the pth input pattern is:

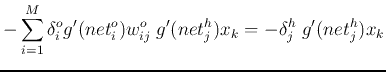
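A minimal sketch of this forward computation, $z_j = g\left(\sum_k w^h_{jk}x_k + T^h_j\right)$ followed by $y'_i = g\left(\sum_j w^o_{ij}z_j + T^o_i\right)$, assuming a logistic sigmoid for $g$ (the activation function is not fixed in this section). The lists `wh`, `Th`, `wo`, `To` are hypothetical names for $w^h_{jk}$, $T^h_j$, $w^o_{ij}$, $T^o_i$; the weights in the usage line are chosen so the result can be checked by hand.

```python
import math

def g(a):
    # Logistic sigmoid, assumed here as the activation function g.
    return 1.0 / (1.0 + math.exp(-a))

def forward(x, wh, Th, wo, To):
    """Return (z, y_prime) for one input pattern x.

    wh[j][k] ~ w^h_{jk}, Th[j] ~ T^h_j  (hidden layer, L nodes)
    wo[i][j] ~ w^o_{ij}, To[i] ~ T^o_i  (output layer, M nodes)
    """
    # Hidden node outputs: z_j = g(sum_k w^h_{jk} x_k + T^h_j)
    z = [g(sum(w * xk for w, xk in zip(row, x)) + t) for row, t in zip(wh, Th)]
    # Actual outputs: y'_i = g(sum_j w^o_{ij} z_j + T^o_i)
    y_prime = [g(sum(w * zj for w, zj in zip(row, z)) + t) for row, t in zip(wo, To)]
    return z, y_prime

# Hand-checkable case: N = 2 inputs, L = 1 hidden node, M = 1 output.
# Hidden net input 1*2 - 1*2 + 0 = 0, so z = g(0) = 0.5;
# output net input 2*0.5 - 1 = 0, so y' = g(0) = 0.5.
z, y_prime = forward(x=[2.0, 2.0], wh=[[1.0, -1.0]], Th=[0.0], wo=[[2.0]], To=[-1.0])
print(z, y_prime)  # → [0.5] [0.5]
```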

The squared error between the desired outputs $y_i$ and the actual outputs $y'_i$, summed over the $M$ output nodes, is

$$
\begin{aligned}
\frac{1}{2}\sum_{i=1}^M(y_i-y'_i)^2
&=\frac{1}{2}\sum_{i=1}^M\left[y_i-g\left(\sum_{j=1}^L w_{ij}^{o}z_j+T^o_i\right)\right]^2\\
&=\frac{1}{2}\sum_{i=1}^M\left[y_i-g\left(\sum_{j=1}^L w_{ij}^o\,g\left(\sum_{k=1}^N w_{jk}^hx_k+T^h_j\right)+T^o_i\right)\right]^2
\end{aligned}
$$
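As a numerical sanity check on the chain of equalities for the squared error, the sketch below evaluates its three forms: from the actual outputs $y'_i$, from the hidden outputs $z_j$, and fully expanded in terms of the inputs $x_k$. It assumes a logistic sigmoid for $g$ and uses random weights; all function and variable names are hypothetical. The three values should agree up to floating-point rounding.

```python
import math
import random

def g(a):
    # Activation function; a logistic sigmoid is assumed for illustration.
    return 1.0 / (1.0 + math.exp(-a))

def error_from_outputs(y, yp):
    # 1/2 * sum_i (y_i - y'_i)^2
    return 0.5 * sum((yi - ypi) ** 2 for yi, ypi in zip(y, yp))

def error_from_hidden(y, z, wo, To):
    # 1/2 * sum_i [y_i - g(sum_j w^o_ij z_j + T^o_i)]^2
    return 0.5 * sum(
        (y[i] - g(sum(wo[i][j] * z[j] for j in range(len(z))) + To[i])) ** 2
        for i in range(len(y)))

def error_from_inputs(y, x, wh, Th, wo, To):
    # Fully expanded form: each z_j replaced by g(sum_k w^h_jk x_k + T^h_j)
    total = 0.0
    for i in range(len(y)):
        a_i = To[i]
        for j in range(len(wh)):
            a_i += wo[i][j] * g(sum(wh[j][k] * x[k] for k in range(len(x))) + Th[j])
        total += (y[i] - g(a_i)) ** 2
    return 0.5 * total

# Random network with N = 3 inputs, L = 4 hidden nodes, M = 2 outputs.
rng = random.Random(0)
N, L, M = 3, 4, 2
x = [rng.uniform(-1, 1) for _ in range(N)]
y = [rng.uniform(0, 1) for _ in range(M)]
wh = [[rng.uniform(-1, 1) for _ in range(N)] for _ in range(L)]
Th = [rng.uniform(-1, 1) for _ in range(L)]
wo = [[rng.uniform(-1, 1) for _ in range(L)] for _ in range(M)]
To = [rng.uniform(-1, 1) for _ in range(M)]

z = [g(sum(wh[j][k] * x[k] for k in range(N)) + Th[j]) for j in range(L)]
yp = [g(sum(wo[i][j] * z[j] for j in range(L)) + To[i]) for i in range(M)]
e1 = error_from_outputs(y, yp)
e2 = error_from_hidden(y, z, wo, To)
e3 = error_from_inputs(y, x, wh, Th, wo, To)
print(e1, e2, e3)  # the three forms agree up to floating-point rounding
```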

Applications of BP networks include:

A story related (maybe) to neural networks

An elementary school teacher asked her class to give examples of the great technological inventions of the 20th century. One kid said it was the telephone, because it lets you talk to someone far away. Another kid said it was the airplane, because an airplane can take you anywhere in the world. Then the teacher saw little Johnny eagerly waving his hand at the back of the classroom. “What do you think is the greatest invention, Johnny?” “The thermos!” The teacher was very puzzled. “Why do you think it's the thermos? All it does is keep hot things hot and cold things cold.” “But,” Johnny answered, “how does it know when to keep things hot and when to keep them cold?”

Don't you sometimes wonder “How does a neural network know ...?”

Well, it does not. It is just a monkey-see-monkey-do type of learning, like this...