
The KLT can be applied to a set of $N$ images in either of two ways. In the first, a $K$-dimensional vector is formed from all $K$ pixels of each of the $N$ images (by concatenating its rows or columns). These $N$ vectors, one per image, form the columns of a $K\times N$ data array ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$ and are treated as samples of a $K$-dimensional random vector ${\bf x}$, whose covariance matrix can be estimated (assuming zero mean) as:

$$ {\bf\Sigma}=\frac{1}{N}\sum_{n=1}^N{\bf x}_n{\bf x}_n^T =\frac{1}{N}[{\bf x}_1,\cdots,{\bf x}_N]\left[\begin{array}{c}{\bf x}_1^T\\ \vdots\\ {\bf x}_N^T\end{array}\right] =\frac{1}{N}\left({\bf X}{\bf X}^T\right)_{K\times K} \tag{86} $$

Alternatively, an $N$-dimensional vector can be formed by the pixels at the same position (the same row and column of the image array) from all $N$ images. There are $K$ such vectors, one for each of the $K$ pixels in the images. These vectors are the rows of the $K\times N$ array ${\bf X}$ defined above, or the columns of ${\bf X}^T$. The covariance matrix of these $N$-dimensional vectors can be estimated (again assuming zero mean) as the $N\times N$ matrix:

$$ \frac{1}{K}\left({\bf X}^T{\bf X}\right)_{N\times N} \tag{87} $$

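As shown previously, these two different covariance matrices share the same non-zero eigenvalues (up to their constant normalization factors). The eigenequations for ${\bf X}^T{\bf X}$ and ${\bf X}{\bf X}^T$ are:

$$ {\bf X}^T{\bf X}\,{\bf v}=\lambda{\bf v},\;\;\;\;\;\;{\bf X}{\bf X}^T{\bf u}=\mu{\bf u} \tag{88} $$

Pre-multiplying ${\bf X}^T$ on both sides of the second equation we get

$$ {\bf X}^T{\bf X} [{\bf X}^T{\bf u}]=\mu [{\bf X}^T{\bf u}]. \tag{89} $$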

We see that any non-zero eigenvalue $\mu$ of ${\bf X}{\bf X}^T$ is also an eigenvalue of ${\bf X}^T{\bf X}$, with eigenvector ${\bf X}^T{\bf u}$ when normalized. The two covariance matrices, proportional to ${\bf X}{\bf X}^T$ and ${\bf X}^T{\bf X}$ respectively, have the same rank $\min(N,K)$ (if ${\bf X}$ is not degenerate) and therefore the same number of non-zero eigenvalues. Consequently, the KLT can be carried out based on either matrix with the same effects in terms of signal decorrelation and energy compaction. As the number of pixels in an image is typically much greater than the number of images, $K\gg N$, we will take the second approach above, treating the pixels at the same position in all $N$ images as a sample of an $N$-dimensional random signal vector, and carry out the KLT based on the $N\times N$ covariance matrix ${\bf X}^T{\bf X}/K$.
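As a quick numerical check of the relationship between ${\bf X}{\bf X}^T$ and ${\bf X}^T{\bf X}$, the following minimal sketch uses a small synthetic data array. The sizes `K` and `N`, the random data, and all variable names are assumptions made for illustration only; the constant normalization factors are omitted because they only rescale the eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(0)
K, N = 64, 5                      # assumed sizes: K pixels per image, N images
X = rng.standard_normal((K, N))   # synthetic stand-in for the K x N data array

big = X @ X.T                     # K x K matrix, as in Eq. (86) without the 1/N factor
small = X.T @ X                   # N x N matrix

mu, U = np.linalg.eigh(big)       # eigenvalues returned in ascending order
lam, V = np.linalg.eigh(small)

# The N largest eigenvalues of the K x K matrix match those of the N x N matrix;
# the remaining K - N eigenvalues are zero up to round-off.
shared = np.allclose(mu[-N:], lam)
rest_zero = np.allclose(mu[:-N], 0.0, atol=1e-8)

# Map an eigenvector u of X X^T to an eigenvector of X^T X, as in Eq. (89).
u = U[:, -1]
v = X.T @ u
v /= np.linalg.norm(v)            # normalize X^T u
residual = np.linalg.norm(small @ v - mu[-1] * v)

print(shared, rest_zero, residual)
```

In this sketch the eigen-decomposition of the small $N\times N$ matrix gives the same non-zero eigenvalues as the much larger $K\times K$ matrix, which is the practical reason for preferring it when $K\gg N$.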

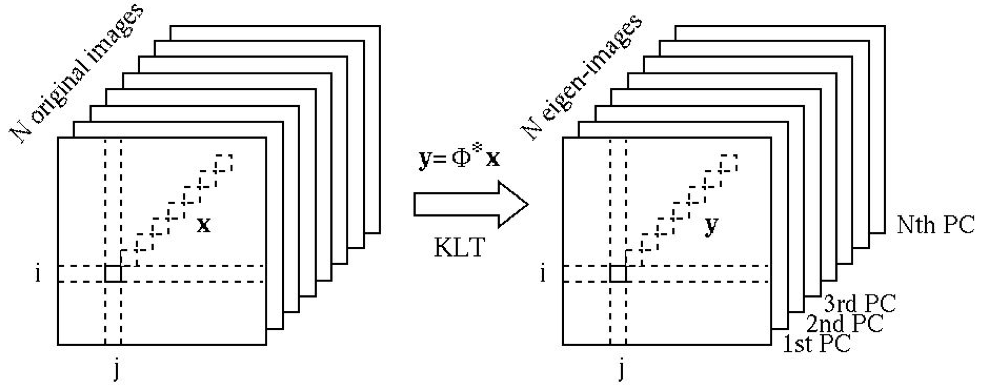

We can now carry out the KLT to each of the

We now consider some such applications.

In remote sensing, images of the surface of either the Earth or other planets such as Mars are taken by a multispectral camera system on board a satellite, for various studies (e.g., geology, geography, etc.). The camera system has an array of

These sensors will produce a set of

A sequence of

It is interesting to observe that the first eigen-image, corresponding to the greatest eigenvalue (left panel of the third row of the figure), mostly represents the static scene of the image frames, i.e., the main variations within the image (carrying most of the energy), while the subsequent eigen-images mostly represent the motion in the video, i.e., the variation between the frames. For example, the motion of the people riding on the escalator is mostly reflected by the first few eigen-images following the first one, while the motion of the escalator stairs is mostly reflected in the subsequent eigen-images.
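The following sketch illustrates how eigen-images of this kind could be computed from a stack of frames using the $N\times N$ matrix ${\bf X}^T{\bf X}/K$. It does not use the video discussed above: the frames are synthetic (an assumed static background plus small random frame-to-frame variation), no mean is removed (matching the zero-mean assumption made earlier), and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
rows, cols, N = 16, 16, 10        # assumed frame size and number of frames
K = rows * cols

# Synthetic frames: a static scene plus small frame-to-frame variation.
background = rng.uniform(0.5, 1.0, size=(rows, cols))
frames = np.stack([background + 0.05 * rng.standard_normal((rows, cols))
                   for _ in range(N)])

X = frames.reshape(N, K).T        # K x N: one column per frame, one row per pixel
cov = X.T @ X / K                 # N x N covariance estimate (zero mean assumed)

eigvals, V = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1] # sort by decreasing eigenvalue
eigvals, V = eigvals[order], V[:, order]

Y = X @ V                         # KLT of the N-dimensional vector at every pixel
eigen_images = Y.T.reshape(N, rows, cols)

# Reconstruct all frames from the first M principal components only.
M = 3
X_hat = Y[:, :M] @ V[:, :M].T
rel_err = np.linalg.norm(X - X_hat) / np.linalg.norm(X)

# Correlation between the first eigen-image and the static background
# (absolute value, since the sign of an eigenvector is arbitrary).
corr = abs(np.corrcoef(eigen_images[0].ravel(), background.ravel())[0, 1])
print(eigvals[0] / eigvals.sum(), rel_err, corr)
```

In this synthetic setting the first eigen-image is nearly proportional to the static background, and the squared relative reconstruction error equals the fraction of energy in the discarded eigenvalues.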

The

We see that, due to the spatial correlation between nearby pixels, the covariance matrix before the KLT (left) can be modeled by a squared exponential function, while the covariance matrix after the KLT (middle) is diagonal, i.e., the components are completely decorrelated, and the energy is highly compacted into a small number of principal components (here the first component), as also clearly shown in the comparison of the energy distribution before and after the KLT (right).
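The decorrelation and energy compaction described here can be reproduced on a model covariance matrix. The sketch below is not the data shown in the figure: it assumes a one-dimensional signal of `n` samples with a squared exponential covariance of an arbitrarily chosen `length_scale`, and all names are hypothetical.

```python
import numpy as np

n = 32                            # assumed signal dimension
length_scale = 4.0                # assumed correlation length, in samples
idx = np.arange(n)

# Squared exponential covariance: nearby samples are strongly correlated.
C = np.exp(-(idx[:, None] - idx[None, :]) ** 2 / (2.0 * length_scale ** 2))

eigvals, Phi = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1] # sort by decreasing eigenvalue
eigvals, Phi = eigvals[order], Phi[:, order]

# Covariance after the KLT y = Phi^T x is Phi^T C Phi, which is diagonal.
C_y = Phi.T @ C @ Phi
off_diag = C_y - np.diag(np.diag(C_y))

energy_before = np.diag(C) / np.trace(C)   # evenly spread over all n components
energy_after = eigvals / eigvals.sum()     # concentrated in the leading components

print(np.max(np.abs(off_diag)))
print(energy_before[:4])
print(energy_after[:4])
```

The total energy (the trace of the covariance matrix) is preserved by the transform; only its distribution over the components changes.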

Twenty images of faces (

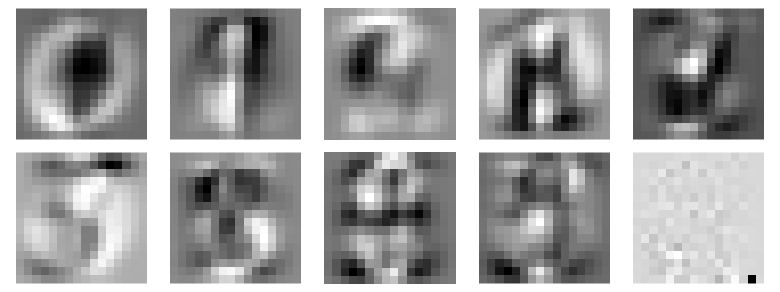

The eigen-images after KLT:

Percentage of energy contained in the

| Component | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Energy (%) | 48.5 | 11.6 | 6.1 | 4.6 | 3.8 | 3.7 | 2.6 | 2.5 | 1.9 | 1.9 | 1.8 | 1.6 | 1.5 | 1.4 | 1.3 | 1.2 | 1.1 | 1.1 | 0.9 | 0.8 |
| Cumulative energy (%) | 48.5 | 60.1 | 66.2 | 70.8 | 74.6 | 78.3 | 81.0 | 83.5 | 85.4 | 87.3 | 89. | 90.7 | 92.2 | 93.6 | 94.9 | 96.1 | 97.2 | 98.2 | 99.2 | 100.0 |
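A table of this kind can be used to decide how many principal components to keep for a given energy target. The sketch below uses the rounded per-component percentages from the table; the helper `components_needed` is a hypothetical name introduced here, and because the inputs are rounded to one decimal, the cumulative sums can differ from the tabulated cumulative values by about 0.1.

```python
import numpy as np

# Per-component energy percentages, copied from the table (rounded values).
energy_pct = np.array([48.5, 11.6, 6.1, 4.6, 3.8, 3.7, 2.6, 2.5, 1.9, 1.9,
                       1.8, 1.6, 1.5, 1.4, 1.3, 1.2, 1.1, 1.1, 0.9, 0.8])

# Cumulative fraction of the total energy kept by the first m components.
cumulative = np.cumsum(energy_pct) / energy_pct.sum()

def components_needed(cumulative_fraction, target):
    """Smallest number of leading components whose cumulative energy reaches target."""
    m = int(np.searchsorted(cumulative_fraction, target)) + 1
    return min(m, len(cumulative_fraction))

m90 = components_needed(cumulative, 0.90)
kept_15 = cumulative[14]          # fraction of energy kept by the first 15 components

print(m90, round(float(kept_15), 3))
```

With these rounded values, 12 components are needed to reach 90% of the energy, and the first 15 components keep a little under 95%.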

Reconstructed faces using about 95% of the total energy (15 out of 20 components):

The goal here is to recognize handwritten digits from 0 to 9 in an image, such as those in the figure below, containing

Specifically, we use the eigenvectors corresponding to the

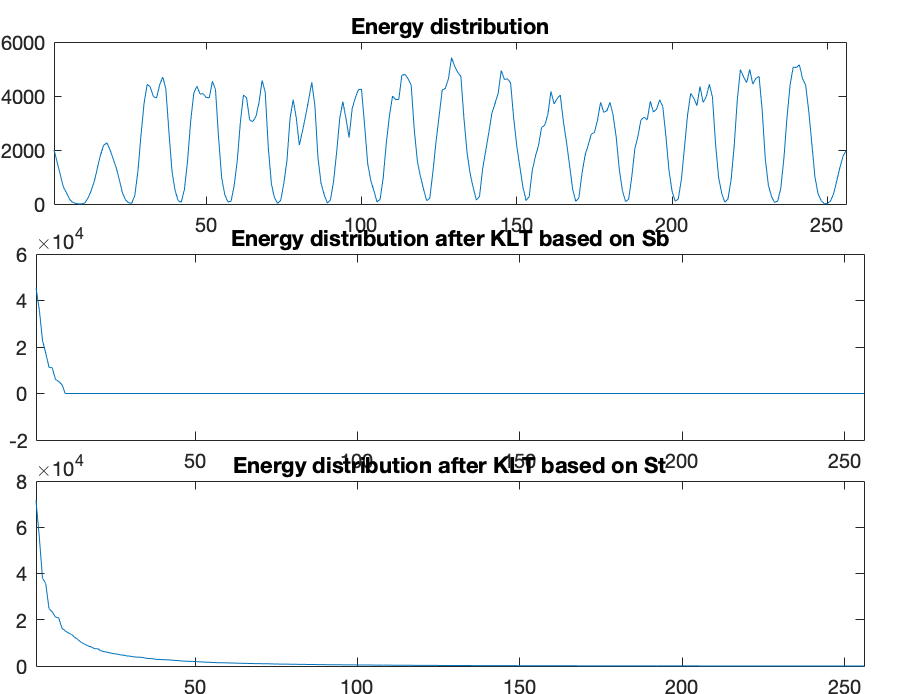

The energy distribution over all

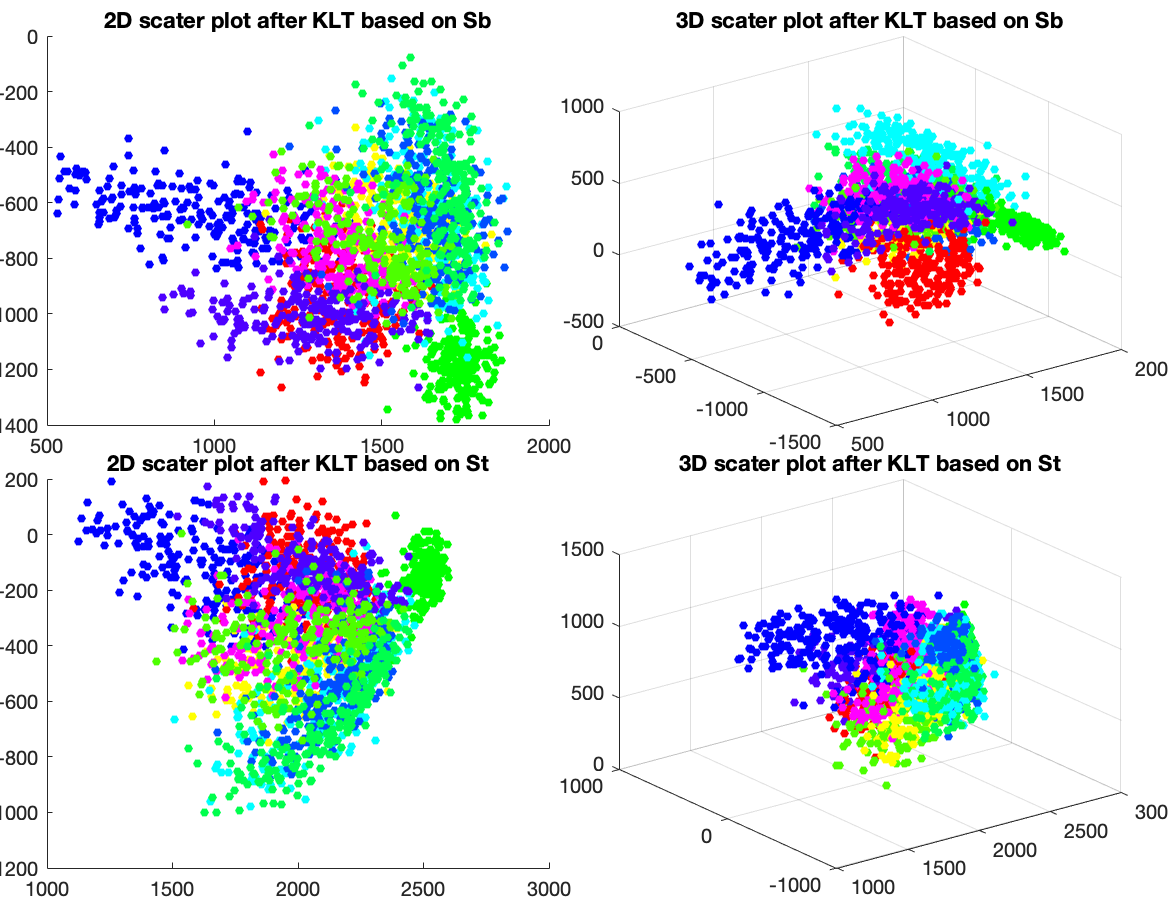

For the KLT based on

The corresponding

If we only keep the first two or three principal components (corresponding to the greatest eigenvalues) after the KLT, the dataset can be visualized as shown in the figure below. The sample points in each of the ten different classes are color-coded. It can be seen that even when the dimensions are much reduced from