The Runge-Kutta methods

Compared with all previously discussed methods for solving a first order DE $y'(t)=f(t,y(t))$, the Runge-Kutta (RK) methods can achieve higher accuracy, i.e., the order of the error term in the step size $h$ is higher than that of all previously discussed methods, due to the much improved approximation of the slope of the secant $k=(y_{n+1}-y_n)/h$ between the two end points $(t_n,\,y_n)$ and $(t_{n+1},\,y_{n+1})$.

The general iteration of the RK methods takes the following form:

$$y_{n+1}=y_n+h\,k \tag{226}$$

where the slope of the secant $k$ is approximated as the average of $s$ estimations $k_1,\dots,k_s$ of the slope weighted respectively by $b_1,\dots,b_s$:

$$k=\sum_{i=1}^s b_i\,k_i \tag{227}$$

These $s$ estimated slopes are obtained recursively, i.e., the ith estimate $k_i$ is based on all previous estimates $k_1,\dots,k_{i-1}$:

$$k_i=f\Big(t_n+c_ih,\;\; y_n+h\sum_{j=1}^{i-1}a_{ij}k_j\Big) \tag{228}$$

where $i=1,\dots,s$, $c_1=0$, and $k_1=f(t_n,y_n)$ is the slope of the tangent at $t_n$.

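This iteration can be expressed in terms of $s$, $a_{ij}$, $b_i$, and $c_i$:

$$y_{n+1}=y_n+h\sum_{i=1}^s b_i\,k_i \tag{229}$$

where

$$\begin{aligned}
k_1&=f(t_n,\;y_n)\\
k_2&=f(t_n+c_2h,\;y_n+h\,a_{21}k_1)\\
k_3&=f(t_n+c_3h,\;y_n+h(a_{31}k_1+a_{32}k_2))\\
&\;\;\vdots\\
k_s&=f(t_n+c_sh,\;y_n+h(a_{s1}k_1+\cdots+a_{s,s-1}k_{s-1}))
\end{aligned}$$

Specifically, when $s=1$ (with $b_1=1$), we have the forward Euler method:

$$y_{n+1}=y_n+h\,k_1=y_n+h\,f(t_n,y_n) \tag{231}$$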

The problem of iteratively solving the DE $y'(t)=f(t,y(t))$ can also be viewed as an integral problem which can be solved by certain quadrature rules:

$$y(t+h)=y(t)+\int_t^{t+h} f(\tau,y(\tau))\,d\tau \approx y(t)+h\sum_{i=1}^s b_i\,k_i \tag{232}$$

where $k_i$ is the estimated function value $f(t,y)$ at some point inside the interval $[t,\,t+h]$, and $b_i$ is the corresponding weight.

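In general, an s-stage RK method with free parameters $a_{ij}$ ($1\le j<i\le s$), $b_i$ ($i=1,\dots,s$), and $c_i$ ($i=2,\dots,s$) can be arranged in the form of a Butcher tableau:

$$\begin{array}{c|ccccc}
0 & & & & & \\
c_2 & a_{21} & & & & \\
c_3 & a_{31} & a_{32} & & & \\
\vdots & \vdots & \vdots & \ddots & & \\
c_s & a_{s1} & a_{s2} & \cdots & a_{s,s-1} & \\ \hline
 & b_1 & b_2 & \cdots & b_{s-1} & b_s
\end{array} \tag{233}$$

The total number of parameters is $s(s-1)/2+s+(s-1)=(s^2+3s-2)/2$, i.e., 4, 8, and 13 for $s=2$, 3, and 4.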
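As a minimal sketch of how Eqs. (228) and (229) translate into code, the following Matlab script performs one explicit RK step directly from a Butcher tableau. The names A, b, c, the sample right-hand side f, and the values of t, y, and h are illustrative assumptions, not part of the text; the tableau filled in here is the classical RK4.

```matlab
% Butcher tableau of the classical RK4 (A strictly lower triangular)
A = [0 0 0 0; 1/2 0 0 0; 0 1/2 0 0; 0 0 1 0];
b = [1/6 1/3 1/3 1/6];
c = [0; 1/2; 1/2; 1];

f = @(t, y) -2*y + t;       % sample right-hand side (an assumption)
t = 0;  y = 1;  h = 0.1;    % current time, current value, step size

s = numel(b);               % number of stages
k = zeros(numel(y), s);     % column i holds the slope estimate k_i
for i = 1:s
    % y_n + h*sum_{j<i} a_ij*k_j, as in Eq. (228)
    yi = y + h * (k(:, 1:i-1) * A(i, 1:i-1).');
    k(:, i) = f(t + c(i)*h, yi);
end
y_next = y + h * (k * b.'); % weighted average of the slopes, Eq. (229)

% exact solution of y' = -2y + t, y(0) = 1, for comparison
y_exact = (t + h)/2 - 1/4 + (5/4)*exp(-2*(t + h));
fprintf('RK step: %.8f   exact: %.8f\n', y_next, y_exact);
```

Swapping in another tableau only requires changing A, b, and c, which is the main appeal of writing the step this way.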

We need to find the specific values for these parameters so that the error term of an s-stage RK method achieves the highest possible order in terms of the step size $h$. This is done by matching the coefficients of the different powers of $h$ in the RK iteration with those of the corresponding Taylor expansion of the function:

$$y(t+h)=y(t)+h\,y'(t)+\frac{h^2}{2!}y''(t)+\frac{h^3}{3!}y'''(t)+\cdots \tag{234}$$

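where the derivatives of $y(t)$ can be found as:

$$\begin{aligned}
y'&=f\\
y''&=\frac{d}{dt}f(t,y(t))=f_t+f_y\,y'=f_t+ff_y\\
y'''&=\frac{d}{dt}(f_t+ff_y)=f_{tt}+2ff_{ty}+f^2f_{yy}+f_tf_y+ff_y^2
\end{aligned}$$

where

$$f_t=\frac{\partial f}{\partial t},\quad f_y=\frac{\partial f}{\partial y},\quad
f_{tt}=\frac{\partial^2 f}{\partial t^2},\quad f_{ty}=\frac{\partial^2 f}{\partial t\,\partial y},\quad
f_{yy}=\frac{\partial^2 f}{\partial y^2} \tag{236}$$

Substituting these into the Taylor expansion of $y(t+h)$ in Eq. (234), we get different approximations of the function with truncation errors of progressively higher orders:

$$y(t+h)=y+hf+O(h^2) \tag{238}$$

$$y(t+h)=y+hf+\frac{h^2}{2}(f_t+ff_y)+O(h^3) \tag{239}$$

$$y(t+h)=y+hf+\frac{h^2}{2}(f_t+ff_y)+\frac{h^3}{6}(f_{tt}+2ff_{ty}+f^2f_{yy}+f_tf_y+ff_y^2)+O(h^4) \tag{240}$$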

These Taylor expansions are then compared with the iterations of RK methods with different numbers of stages $s$ to determine the parameters required to achieve the highest possible order for the error term.

$s=1$:

$$y_{n+1}=y_n+h\,b_1k_1=y_n+h\,b_1f(t_n,y_n) \tag{241}$$

Comparing this with the Taylor expansion in Eq. (238), we see that the two agree up to the first order term if $b_1=1$. This RK1 is simply Euler's forward method in Eq. (189) with a 2nd order error.

$s=2$:

Given $k_1=f(t_n,y_n)=f$, we express $k_2$ as the Taylor expansion of the 2-variable function $f(t,y)$:

$$k_2=f(t_n+c_2h,\;y_n+h\,a_{21}k_1)=f+h(c_2f_t+a_{21}ff_y)+O(h^2) \tag{242}$$

and the iterative step of the RK2 method becomes

$$y_{n+1}=y_n+h(b_1k_1+b_2k_2)=y_n+h(b_1+b_2)f+h^2b_2(c_2f_t+a_{21}ff_y)+O(h^3)$$

For the RK2 method to have a third order (highest possible) error, its coefficients have to match those of the Taylor expansion in Eq. (239), and we get the following three necessary and sufficient conditions for the parameters:

$$b_1+b_2=1,\qquad b_2c_2=\frac12,\qquad b_2a_{21}=\frac12 \tag{244}$$

There exist multiple solutions for this system of three equations in four unknowns. By taking $c_2$ as a free variable, the remaining three variables can be determined as shown in the Butcher tableau of the RK2:

$$\begin{array}{c|cc}
0 & & \\
c_2 & c_2 & \\ \hline
 & 1-\frac{1}{2c_2} & \frac{1}{2c_2}
\end{array} \tag{245}$$

Listed below are three such solutions corresponding to $c_2=1,\ 1/2,\ 2/3$:

- $c_2=a_{21}=1$, $b_1=b_2=1/2$, we have

  $$y_{n+1}=y_n+\frac h2(k_1+k_2),\qquad k_1=f(t_n,y_n),\qquad k_2=f(t_n+h,\;y_n+hk_1)$$

  This is the trapezoidal Euler (Heun's) method in Eq. (199), which can also be obtained by the trapezoidal rule of Newton-Cotes quadrature applied to approximate the integral in the iteration:

  $$y(t_{n+1})=y(t_n)+\int_{t_n}^{t_{n+1}} f(t)\,dt \approx y(t_n)+\frac{h}{2}[f(t_n)+f(t_n+h)] \tag{247}$$

- $c_2=a_{21}=1/2$, $b_1=0$, $b_2=1$, we have

  $$y_{n+1}=y_n+hk_2,\qquad k_1=f(t_n,y_n),\qquad k_2=f\Big(t_n+\frac h2,\;y_n+\frac h2k_1\Big)$$

  This is the midpoint method in Eq. (218).

- $c_2=a_{21}=2/3$, $b_1=1/4$, $b_2=3/4$, we have

  $$y_{n+1}=y_n+\frac h4(k_1+3k_2),\qquad k_1=f(t_n,y_n),\qquad k_2=f\Big(t_n+\frac{2h}3,\;y_n+\frac{2h}3k_1\Big)$$

  This is called Ralston's method.
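The one-parameter RK2 family can be tried out numerically. The sketch below assumes the family is parameterized by $c_2$ with $a_{21}=c_2$, $b_2=1/(2c_2)$, and $b_1=1-b_2$ (which satisfies the three conditions for RK2), and uses the sample equation $y'=-y$, $y(0)=1$, chosen here only for illustration. Halving the step size should reduce the global error by a factor close to 4 for each of the three members.

```matlab
f  = @(t, y) -y;            % sample ODE y' = -y, y(0) = 1 (an assumption)
T  = 1;                     % integrate over [0, T]
N  = [20 40];               % two step counts; h is halved between them
c2list = [1, 1/2, 2/3];     % Heun (trapezoidal), midpoint, Ralston
ratio  = zeros(1, 3);
for m = 1:3
    c2 = c2list(m);  a21 = c2;
    b2 = 1/(2*c2);   b1 = 1 - b2;
    err = zeros(1, 2);
    for q = 1:2
        h = T/N(q);  t = 0;  y = 1;
        for n = 1:N(q)
            k1 = f(t, y);
            k2 = f(t + c2*h, y + h*a21*k1);
            y  = y + h*(b1*k1 + b2*k2);
            t  = t + h;
        end
        err(q) = abs(y - exp(-T));  % global error at t = T
    end
    ratio(m) = err(1)/err(2);       % close to 4 for a second order method
    fprintf('c2 = %.3f: error ratio = %.2f\n', c2, ratio(m));
end
```

For this linear equation all three members produce the same iterates, so the ratios coincide; on a nonlinear right-hand side they would differ slightly while keeping the same order.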

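$s=3$:

Given $k_1=f(t_n,y_n)=f$, we express $k_2$ and $k_3$ by their corresponding Taylor expansions:

$$\begin{aligned}
k_2&=f(t_n+c_2h,\;y_n+h\,a_{21}k_1)\\
&=f+h(c_2f_t+a_{21}ff_y)+\frac{h^2}{2}[c_2^2f_{tt}+2c_2a_{21}ff_{ty}+a_{21}^2f^2f_{yy}]+O(h^3)\\
k_3&=f(t_n+c_3h,\;y_n+h(a_{31}k_1+a_{32}k_2))\\
&=f+h[c_3f_t+(a_{31}f+a_{32}(f+h(c_2f_t+a_{21}ff_y)))f_y]\\
&\quad+\frac{h^2}{2}[c_3^2f_{tt}+2c_3(a_{31}f+a_{32}f)f_{ty}+(a_{31}f+a_{32}f)^2f_{yy}]+O(h^3)
\end{aligned}$$

Here all terms of $h$ of order higher than two are collected in the error term $O(h^3)$. The iterative step for this RK3 method can now be written as:

$$\begin{aligned}
y_{n+1}&=y_n+h(b_1k_1+b_2k_2+b_3k_3)\\
&=y_n+hb_1f+hb_2\left(f+h(c_2f_t+a_{21}ff_y)+\frac{h^2}{2}[c_2^2f_{tt}+2c_2a_{21}ff_{ty}+a_{21}^2f^2f_{yy}]+O(h^3)\right)\\
&\quad+hb_3\Big(f+h[c_3f_t+(a_{31}f+a_{32}f+a_{32}h(c_2f_t+a_{21}f_yf))f_y]\\
&\qquad\qquad+\frac{h^2}{2}[c_3^2f_{tt}+2c_3(a_{31}f+a_{32}f)f_{ty}+(a_{31}f+a_{32}f)^2f_{yy}]+O(h^3)\Big)
\end{aligned}$$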

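For the RK3 method to have a fourth order (highest possible) error, its coefficients have to match those of the Taylor expansion in Eq. (240), which can also be written as

$$y(t+h)=y+hf+\frac{h^2}{2}(f_t+ff_y)+\frac{h^3}{6}(f_{tt}+2ff_{ty}+f^2f_{yy}+f_tf_y+ff_y^2)+O(h^4) \tag{252}$$

and we get the following necessary and sufficient conditions for the parameters:

$$\begin{aligned}
&b_1+b_2+b_3=1, & &b_2c_2+b_3c_3=\tfrac12, & &b_2a_{21}+b_3(a_{31}+a_{32})=\tfrac12,\\
&b_2c_2^2+b_3c_3^2=\tfrac13, & &b_2c_2a_{21}+b_3c_3(a_{31}+a_{32})=\tfrac13, & &b_2a_{21}^2+b_3(a_{31}+a_{32})^2=\tfrac13,\\
&b_3a_{32}c_2=\tfrac16, & &b_3a_{32}a_{21}=\tfrac16
\end{aligned}$$

Simplifying, we get the following 6 independent equations:

$$\begin{aligned}
&b_1+b_2+b_3=1, & &b_2c_2+b_3c_3=\tfrac12, & &b_2c_2^2+b_3c_3^2=\tfrac13,\\
&b_3a_{32}c_2=\tfrac16, & &a_{21}=c_2, & &a_{31}+a_{32}=c_3
\end{aligned}$$

There exist multiple solutions for this system of 6 equations in 8 variables, such as the two shown below, known as Kutta's method (left) and Heun's third order method (right):

$$\begin{array}{c|ccc}
0 & & & \\
\frac12 & \frac12 & & \\
1 & -1 & 2 & \\ \hline
 & \frac16 & \frac23 & \frac16
\end{array}
\qquad\qquad
\begin{array}{c|ccc}
0 & & & \\
\frac13 & \frac13 & & \\
\frac23 & 0 & \frac23 & \\ \hline
 & \frac14 & 0 & \frac34
\end{array} \tag{253}$$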

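The RK3 iterations corresponding to the two sets of parameters are respectively:

$$\begin{array}{ll}
k_1=f(t_n,\,y_n) & \qquad k_1=f(t_n,\,y_n)\\
k_2=f(t_n+\frac h2,\;y_n+\frac h2k_1) & \qquad k_2=f(t_n+\frac h3,\;y_n+\frac h3k_1)\\
k_3=f(t_n+h,\;y_n-hk_1+2hk_2) & \qquad k_3=f(t_n+\frac{2h}3,\;y_n+\frac{2h}3k_2)\\
y_{n+1}=y_n+\frac h6(k_1+4k_2+k_3) & \qquad y_{n+1}=y_n+\frac h4(k_1+3k_3)
\end{array} \tag{254}$$

We recognize that the first method is simply Simpson's rule of Newton-Cotes quadrature for approximating the integral in the iteration:

$$y(t_{n+1})=y(t_n)+\int_{t_n}^{t_{n+1}} f(t)\,dt \approx y(t_n)+\frac{h}{6}[f(t_n)+4f(t_n+h/2)+f(t_n+h)] \tag{255}$$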
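As a quick sanity check, the parameters of Kutta's method and Heun's third order method can be substituted into the third order conditions. The sketch below assumes the conditions take the standard form $b_1+b_2+b_3=1$, $b_2c_2+b_3c_3=1/2$, $b_2c_2^2+b_3c_3^2=1/3$, $b_3a_{32}c_2=1/6$, $a_{21}=c_2$, and $a_{31}+a_{32}=c_3$; the variable names are illustrative.

```matlab
% Each row: [c2 c3 a21 a31 a32 b1 b2 b3]
P = [1/2  1   1/2  -1  2    1/6  2/3  1/6;    % Kutta's method
     1/3  2/3 1/3   0  2/3  1/4  0    3/4];   % Heun's third order method
res = zeros(2, 6);          % residuals of the six conditions
for m = 1:2
    c2  = P(m,1);  c3  = P(m,2);
    a21 = P(m,3);  a31 = P(m,4);  a32 = P(m,5);
    b1  = P(m,6);  b2  = P(m,7);  b3  = P(m,8);
    res(m,:) = [b1 + b2 + b3 - 1, ...
                b2*c2 + b3*c3 - 1/2, ...
                b2*c2^2 + b3*c3^2 - 1/3, ...
                b3*a32*c2 - 1/6, ...
                a21 - c2, ...
                a31 + a32 - c3];
end
disp(max(abs(res), [], 2))  % largest residual per method, zero up to rounding
```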

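In a similar manner, we can derive (see the next section) the following eight necessary and sufficient conditions for the parameters of the RK4 method for it to have a 5th order error term:

$$\begin{aligned}
&b_1+b_2+b_3+b_4=1\\
&b_2c_2+b_3c_3+b_4c_4=\tfrac12\\
&b_2c_2^2+b_3c_3^2+b_4c_4^2=\tfrac13\\
&b_2c_2^3+b_3c_3^3+b_4c_4^3=\tfrac14\\
&b_3a_{32}c_2+b_4(a_{42}c_2+a_{43}c_3)=\tfrac16\\
&b_3c_3a_{32}c_2+b_4c_4(a_{42}c_2+a_{43}c_3)=\tfrac18\\
&b_3a_{32}c_2^2+b_4(a_{42}c_2^2+a_{43}c_3^2)=\tfrac1{12}\\
&b_4a_{43}a_{32}c_2=\tfrac1{24}
\end{aligned}$$

where, as for RK2 and RK3, $c_i=\sum_{j<i}a_{ij}$. There exist multiple solutions for this system of eight equations in 13 variables, such as the classical RK4 and the 3/8 rule methods listed respectively on the left and right below.

$$\begin{array}{c|cccc}
0 & & & & \\
\frac12 & \frac12 & & & \\
\frac12 & 0 & \frac12 & & \\
1 & 0 & 0 & 1 & \\ \hline
 & \frac16 & \frac13 & \frac13 & \frac16
\end{array}
\qquad\qquad
\begin{array}{c|cccc}
0 & & & & \\
\frac13 & \frac13 & & & \\
\frac23 & -\frac13 & 1 & & \\
1 & 1 & -1 & 1 & \\ \hline
 & \frac18 & \frac38 & \frac38 & \frac18
\end{array} \tag{257}$$

The RK4 iterations corresponding to the two sets of parameters are respectively:

$$\begin{array}{ll}
k_1=f(t_n,\,y_n) & \qquad k_1=f(t_n,\,y_n)\\
k_2=f(t_n+\frac h2,\;y_n+\frac h2k_1) & \qquad k_2=f(t_n+\frac h3,\;y_n+\frac h3k_1)\\
k_3=f(t_n+\frac h2,\;y_n+\frac h2k_2) & \qquad k_3=f(t_n+\frac{2h}3,\;y_n-\frac h3k_1+hk_2)\\
k_4=f(t_n+h,\;y_n+hk_3) & \qquad k_4=f(t_n+h,\;y_n+hk_1-hk_2+hk_3)\\
y_{n+1}=y_n+\frac h6(k_1+2k_2+2k_3+k_4) & \qquad y_{n+1}=y_n+\frac h8(k_1+3k_2+3k_3+k_4)
\end{array} \tag{258}$$

We recognize that the second method is simply the application of Simpson's 3/8 rule of the Newton-Cotes quadrature for approximating the integral in the iteration:

$$y(t_{n+1})=y(t_n)+\int_{t_n}^{t_{n+1}} f(t)\,dt \approx y(t_n)+\frac{h}{8}[f(t_n)+3f(t_n+h/3)+3f(t_n+2h/3)+f(t_n+h)] \tag{259}$$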
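The classical RK4 and the 3/8 rule can both be checked against the fourth order conditions in matrix form. The sketch below assumes the standard eight order conditions together with $c_i=\sum_j a_{ij}$; the array names A, B, and res are illustrative.

```matlab
A = zeros(4, 4, 2);  B = zeros(2, 4);
A(:,:,1) = [0 0 0 0; 1/2 0 0 0;  0  1/2 0 0; 0  0 1 0];  % classical RK4
B(1,:)   = [1 2 2 1]/6;
A(:,:,2) = [0 0 0 0; 1/3 0 0 0; -1/3 1  0 0; 1 -1 1 0];  % 3/8 rule
B(2,:)   = [1 3 3 1]/8;

res = zeros(1, 2);
for m = 1:2
    a = A(:,:,m);  b = B(m,:);
    c = sum(a, 2);                      % row sums give c_i
    r = [ sum(b)            - 1;        % sum b_i = 1
          b*c               - 1/2;      % sum b_i c_i
          b*c.^2            - 1/3;      % sum b_i c_i^2
          b*c.^3            - 1/4;      % sum b_i c_i^3
          b*(a*c)           - 1/6;      % sum b_i a_ij c_j
          (b.*c.')*(a*c)    - 1/8;      % sum b_i c_i a_ij c_j
          b*(a*c.^2)        - 1/12;     % sum b_i a_ij c_j^2
          b*(a*(a*c))       - 1/24 ];   % sum b_i a_ij a_jk c_k
    res(m) = max(abs(r));
end
disp(res)   % both entries are zero up to rounding
```

The same loop can be pointed at any other four-stage tableau to see whether it reaches fourth order.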

From the above we see that up to $s=4$, the order of accuracy is the same as the number of stages of the RK method, e.g., for RK4, the order of accuracy is the same as its number of stages $s=4$ (with a 5th order error term $O(h^5)$). However, this is not the case for $s>4$, as shown in the table below:

$$\begin{array}{l|cccccccc}
\text{number of stages } s & 1 & 2 & 3 & 4 & 5 & 6 & 7 & 8\\ \hline
\text{highest order of accuracy} & 1 & 2 & 3 & 4 & 4 & 5 & 6 & 6
\end{array} \tag{260}$$

The Matlab code for RK4 is shown below as a function which takes four arguments: the step size $h$, the time moment $t_n$, the current value $y_n$, and an index $k$ selecting one of several versions of the RK4 method. It generates the approximation of the next value $y_{n+1}$ with the slope estimated by the selected version, based on another function f that calculates $f(t,y)$:

    function y = RungeKutta4(h, t, y, k)
        % One RK4 step from (t, y) with step size h; k selects the tableau
        k1 = f(t, y);
        switch k
            case 1      % classical RK4
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y + h*k2/2);
                k4 = f(t+h, y + h*k3);
                y = y + h*(k1 + 2*k2 + 2*k3 + k4)/6;
            case 2      % 3/8 rule
                k2 = f(t+h/3, y + h*k1/3);
                k3 = f(t+2*h/3, y - h*k1/3 + h*k2);
                k4 = f(t+h, y + h*k1 - h*k2 + h*k3);
                y = y + h*(k1 + 3*k2 + 3*k3 + k4)/8;
            case 3      % another four-stage tableau with 3/8-rule weights
                k2 = f(t+2*h/3, y + 2*h*k1/3);
                k3 = f(t+h/3, y + h*k1/12 + h*k2/4);
                k4 = f(t+h, y - 5*h*k1/4 + h*k2/4 + 2*h*k3);
                y = y + h*(k1 + 3*k2 + 3*k3 + k4)/8;
            case 4      % variant with weights (1, 1, 3, 1)/6
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y + h*k1/6 + h*k2/3);
                k4 = f(t+h, y - h*k2/2 + 3*h*k3/2);
                y = y + h*(k1 + k2 + 3*k3 + k4)/6;
            case 5      % variant with weights (1, 3, 1, 1)/6
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y - h*k1/2 + h*k2);
                k4 = f(t+h, y + h*k2/2 + h*k3/2);
                y = y + h*(k1 + 3*k2 + k3 + k4)/6;
        end
    end
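One possible way to exercise such a step function is to march it over an interval and watch how the error shrinks as $h$ is halved. The script below is a self-contained sketch: it inlines the classical RK4 step (case 1 above) with an anonymous function instead of calling RungeKutta4, and the test equation $y'=-y$, $y(0)=1$ is an assumption made only for illustration.

```matlab
f = @(t, y) -y;             % sample ODE with exact solution exp(-t) (an assumption)
T = 1;                      % integrate over [0, T]
N = [10 20];                % step counts; h is halved between the two runs
err = zeros(1, 2);
for m = 1:2
    h = T/N(m);  t = 0;  y = 1;
    for n = 1:N(m)
        k1 = f(t, y);
        k2 = f(t + h/2, y + h*k1/2);
        k3 = f(t + h/2, y + h*k2/2);
        k4 = f(t + h,   y + h*k3);
        y  = y + h*(k1 + 2*k2 + 2*k3 + k4)/6;
        t  = t + h;
    end
    err(m) = abs(y - exp(-T));      % global error at t = T
end
ratio = err(1)/err(2);              % near 2^4 = 16 for a fourth order method
fprintf('errors: %.3e  %.3e   ratio: %.2f\n', err(1), err(2), ratio);
```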

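Example 1: Consider a simple equation $y'=\lambda y$ with a closed form solution $y(t)=y(0)\,e^{\lambda t}$.

- RK1, same as forward Euler's method,

  $$y_{n+1}=y_n+hf(t_n,y_n)=y_n\left[1+\lambda h\right] \tag{261}$$

- RK2, we choose to use $c_2=a_{21}=1/2$ and $b_1=0,\ b_2=1$:

  $$k_1=\lambda y_n,\qquad k_2=\lambda\Big(y_n+\frac h2k_1\Big)=\lambda y_n\Big[1+\frac{\lambda h}{2}\Big]$$

  Substituting these into the RK2 iteration, we get

  $$y_{n+1}=y_n+hk_2=y_n\Big[1+\lambda h+\frac{(\lambda h)^2}{2}\Big] \tag{264}$$

- RK3, we choose to use $c_2=1/2$, $c_3=1$, $a_{21}=1/2$, $a_{31}=-1$, $a_{32}=2$, and $b_1=b_3=1/6,\ b_2=2/3$:

  $$k_1=\lambda y_n,\qquad k_2=\lambda y_n\Big[1+\frac{\lambda h}{2}\Big],\qquad k_3=\lambda(y_n-hk_1+2hk_2)=\lambda y_n\left[1+\lambda h+(\lambda h)^2\right]$$

  Substituting these into the RK3 iteration, we get

  $$y_{n+1}=y_n+\frac h6(k_1+4k_2+k_3)=y_n\Big[1+\lambda h+\frac{(\lambda h)^2}{2}+\frac{(\lambda h)^3}{6}\Big] \tag{269}$$

- RK4, we choose to use $c_2=c_3=1/2$, $c_4=1$ and $a_{21}=a_{32}=1/2$, $a_{43}=1$ (all other $a_{ij}=0$), $b_1=b_4=1/6$, $b_2=b_3=1/3$:

  $$\begin{aligned}
  k_1&=\lambda y_n, & k_2&=\lambda y_n\Big[1+\frac{\lambda h}{2}\Big],\\
  k_3&=\lambda y_n\Big[1+\frac{\lambda h}{2}+\frac{(\lambda h)^2}{4}\Big], & k_4&=\lambda y_n\Big[1+\lambda h+\frac{(\lambda h)^2}{2}+\frac{(\lambda h)^3}{4}\Big]
  \end{aligned}$$

  Substituting these into the RK4 iteration, we get

  $$y_{n+1}=y_n+\frac h6(k_1+2k_2+2k_3+k_4)=y_n\Big[1+\lambda h+\frac{(\lambda h)^2}{2}+\frac{(\lambda h)^3}{6}+\frac{(\lambda h)^4}{24}\Big] \tag{273}$$

We realize that the expressions inside the brackets of the iterations of the RK methods are simply the first few terms of the Taylor expansion of $e^{\lambda h}$, i.e., the iterations above are approximations of the closed form solution $y(t_{n+1})=y(t_n)\,e^{\lambda h}$ with truncation errors of different orders.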
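The observation that the RK iterations reproduce truncated Taylor series can be checked numerically. The sketch below assumes the linear equation $y'=\lambda y$ and takes one step of forward Euler, the midpoint RK2, Kutta's RK3, and the classical RK4 from $y_n=1$; the values of lambda and h are arbitrary illustrative choices.

```matlab
lambda = -0.7;  h = 0.3;  y = 1;    % illustrative values (assumptions)
f = @(t, y) lambda*y;
z = lambda*h;

k1  = f(0, y);
y1  = y + h*k1;                             % RK1 (forward Euler)
k2  = f(h/2, y + h*k1/2);
y2  = y + h*k2;                             % RK2 (midpoint)
k3  = f(h, y - h*k1 + 2*h*k2);
y3  = y + h*(k1 + 4*k2 + k3)/6;             % RK3 (Kutta)
k3c = f(h/2, y + h*k2/2);
k4  = f(h, y + h*k3c);
y4  = y + h*(k1 + 2*k2 + 2*k3c + k4)/6;     % RK4 (classical)

% partial sums 1, 1+z, 1+z+z^2/2, ... of the Taylor series of exp(z)
taylor = cumsum(z.^(0:4) ./ factorial(0:4));
disp([y1 y2 y3 y4; taylor(2:5)])            % the two rows agree
fprintf('exp(z) = %.8f\n', exp(z));
```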

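The RK4 method considered above is in scalar form; however, it can be easily generalized to the following vector form:

$$\begin{aligned}
\mathbf{k}_1&=\mathbf{f}(t_n,\;\mathbf{y}_n)\\
\mathbf{k}_2&=\mathbf{f}(t_n+h/2,\;\mathbf{y}_n+h\,\mathbf{k}_1/2)\\
\mathbf{k}_3&=\mathbf{f}(t_n+h/2,\;\mathbf{y}_n+h\,\mathbf{k}_2/2)\\
\mathbf{k}_4&=\mathbf{f}(t_n+h,\;\mathbf{y}_n+h\,\mathbf{k}_3)\\
\mathbf{y}_{n+1}&=\mathbf{y}_n+\frac h6(\mathbf{k}_1+2\mathbf{k}_2+2\mathbf{k}_3+\mathbf{k}_4)
\end{aligned}$$

At each step, all $\mathbf{k}_1$, $\mathbf{k}_2$, $\mathbf{k}_3$, and $\mathbf{k}_4$ in vector form are computed based on $\mathbf{y}_n$ found in the previous step, and then all components of $\mathbf{y}_{n+1}$ are obtained by the last equation above.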
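In Matlab the vector form needs no extra machinery, since the same arithmetic works on column vectors. As a minimal sketch, the script below applies the classical RK4 to the sample system $y_1'=y_2$, $y_2'=-y_1$ with $\mathbf{y}(0)=[1,\,0]^T$, whose exact solution is $[\cos t,\,-\sin t]^T$; the system and the step count are assumptions chosen for illustration.

```matlab
f = @(t, y) [y(2); -y(1)];  % sample first order system (an assumption)
N = 200;  h = 2*pi/N;       % integrate over one full period
t = 0;  y = [1; 0];
for n = 1:N
    k1 = f(t, y);                   % all slopes are 2-by-1 vectors
    k2 = f(t + h/2, y + h*k1/2);
    k3 = f(t + h/2, y + h*k2/2);
    k4 = f(t + h,   y + h*k3);
    y  = y + h*(k1 + 2*k2 + 2*k3 + k4)/6;
    t  = t + h;
end
% after one period the exact solution returns to [1; 0]
fprintf('error after one period: %.2e\n', norm(y - [1; 0]));
```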

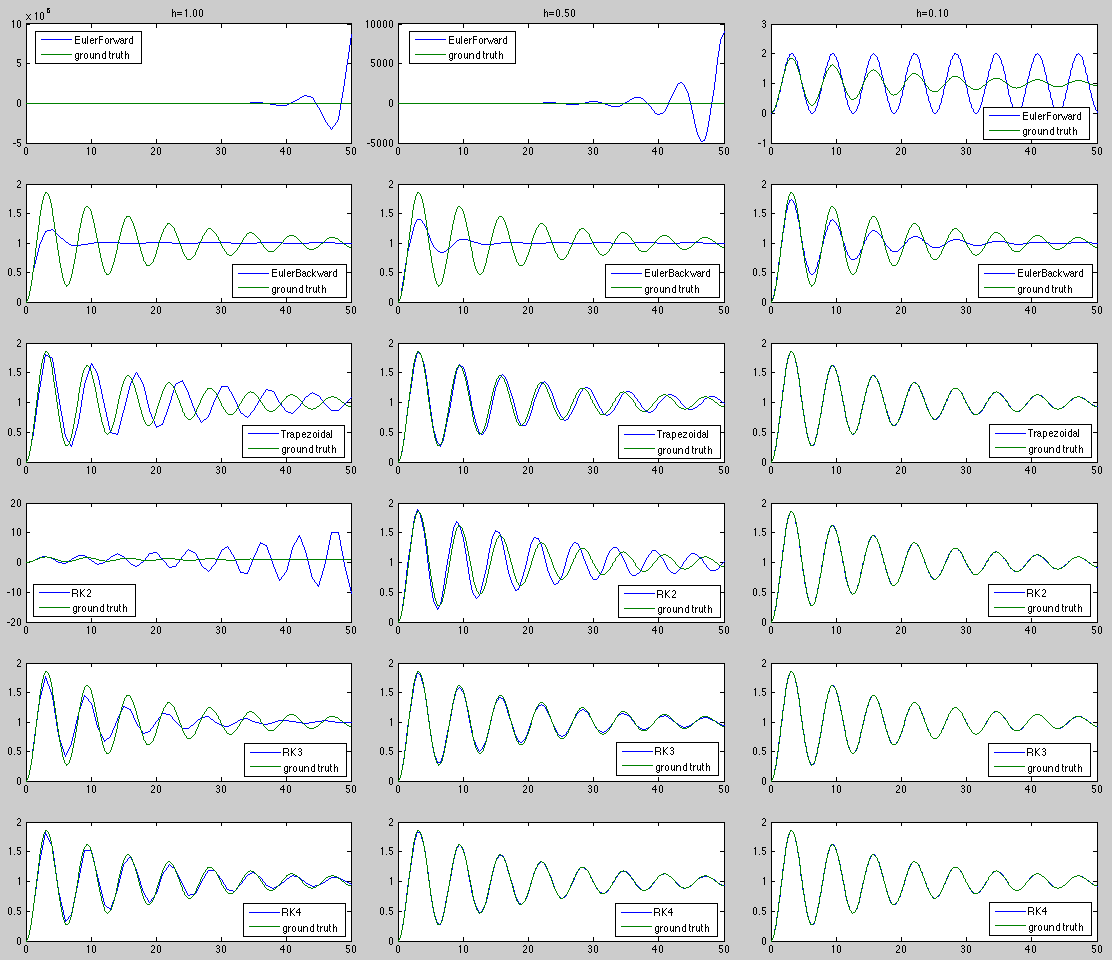

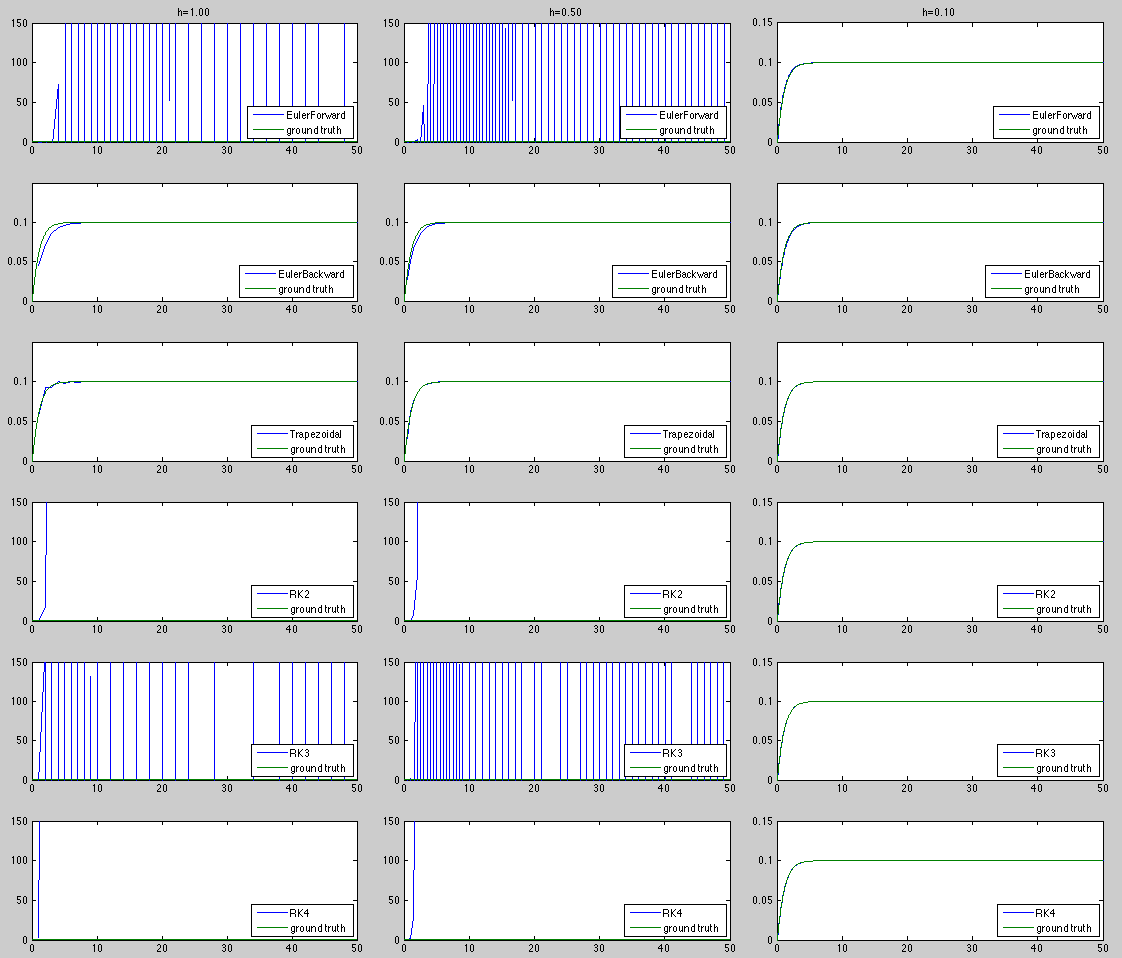

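Example 2:

Previously we considered solving the second order LCCODE analytically. We now solve this equation again, but numerically, by the RK4 method. Specifically, consider a spring-damper-mass mechanical system:

$$M\,y''(t)+C\,y'(t)+K\,y(t)=1 \tag{275}$$

with initial condition $y(0)=1$ and $y'(0)=1$. First, we convert the DE into the canonical form

$$y''(t)+2\zeta\omega_n\,y'(t)+\omega_n^2\,y(t)=\frac1M \tag{276}$$

where

$$\omega_n=\sqrt{\frac KM},\qquad \zeta=\frac{C}{2\sqrt{MK}} \tag{277}$$

This 2nd-order LCCODE can also be solved numerically. We let

$$y_1=y,\quad y_2=y',\qquad\text{so that}\qquad y_1'=y_2,\quad y_2'=-\frac KM\,y_1-\frac CM\,y_2+\frac1M \tag{278}$$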

and then solve it iteratively to get $y(t)$ in the interval $[0,\;50]$, by each of the six methods: forward and backward Euler's methods with $O(h^2)$ error, trapezoidal method with $O(h^3)$, RK2 with $O(h^3)$, RK3 with $O(h^4)$, and RK4 with $O(h^5)$.

Here we consider four different sets of parameters $M$, $C$, and $K$, each with its own pair of roots $s_{1,2}=(-\zeta\mp\sqrt{\zeta^2-1})\,\omega_n$ of the characteristic equation.

In the first case $s_1$ and $s_2$ are a complex conjugate pair, and the system is underdamped. In all other cases, both $s_1$ and $s_2$ are real and the system is overdamped: its solution contains two exponentially decaying terms $e^{s_1t}$ and $e^{s_2t}$, one decaying more quickly than the other.

The numerical solutions are plotted together with the closed-form solution as the ground truth, as shown below.

Summarizing the above, we can make the following observations:

- A smaller step size always produces a more accurate solution.

- Methods with higher order error terms are more accurate, if they are stable.

- When the ratio $|s_1/s_2|$ increases, a method may become unstable; the step size needs to be reduced to avoid a divergent solution.

- When the step size is not small enough, an explicit method may become not only inaccurate, but also unstable. A method of lower error order (forward Euler with $O(h^2)$, RK2 with $O(h^3)$) requires a smaller step size to remain stable than a method of higher error order (RK4 with $O(h^5)$).

- A small step size may be needed not necessarily for a more accurate solution, but for preventing instability.

- The implicit backward Euler and trapezoidal methods are always stable even when the step size is large, although the orders of their error terms are not as high as those of other methods such as RK3 and RK4.

- A method with a higher order error may produce a more accurate solution only if it is stable; an implicit method with a low error order may not be as accurate, but it is always stable.

- Accuracy and stability are two related but different properties of a method.
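The link between step size and stability can be made concrete with the scalar test equation $y'=\lambda y$, for which one step of each method multiplies $y_n$ by a fixed amplification factor, and the iteration stays bounded only if that factor has magnitude at most 1. The sketch below uses the standard factors for forward Euler, RK2, RK4, and backward Euler; the value $\lambda=-100$ mirrors the fast root of the $M=1$, $C=101$, $K=100$ case in the code listing, and the step size is an illustrative assumption.

```matlab
lambda = -100;  h = 0.025;          % fast decaying mode and a moderately large step
z = lambda*h;                       % z = -2.5

g_fe  = 1 + z;                              % forward Euler
g_rk2 = 1 + z + z^2/2;                      % any second order two-stage RK
g_rk4 = 1 + z + z^2/2 + z^3/6 + z^4/24;     % any fourth order four-stage RK
g_be  = 1/(1 - z);                          % backward Euler (implicit)

fprintf('forward Euler : |g| = %.3f\n', abs(g_fe));
fprintf('RK2           : |g| = %.3f\n', abs(g_rk2));
fprintf('RK4           : |g| = %.3f\n', abs(g_rk4));
fprintf('backward Euler: |g| = %.3f\n', abs(g_be));
```

With this step size the forward Euler and RK2 factors exceed 1 in magnitude, so those iterations diverge, while RK4 and backward Euler remain stable, in line with the observations listed above.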

The Matlab code for the RK methods is listed below:

    function RungeKutta
        % Compare forward/backward Euler, RK2, RK3 and RK4 on M*y'' + C*y' + K*y = 1
        global M C K
        M=10; C=1; K=10;
        % M=1; C=101; K=100;     % stiff!
        % M=1; C=1001; K=1000;   % stiff!
        figure(1)
        t0=0;                    % initial time
        y_int=[1; 1];            % initial values [y(0); y'(0)]
        tfinal=50;
        fprintf('Initial and final time: %.2f to %.2f\n',t0,tfinal);
        names={'EulerForward','EulerBackward','RK2','RK3','RK4'};
        stepCounts=[40 60 80];   % number of steps for each column of plots
        for l=1:3
            nsteps=stepCounts(l);
            h=(tfinal-t0)/nsteps;        % step size
            tout=zeros(nsteps,1);
            Y=ones(nsteps,5);            % column k+1 holds y(1) found by method k
            fprintf('\n# of steps: %4d\th=%0.3f\n',nsteps,h);
            for k=0:4
                t=t0;                    % set the variable t
                y=y_int;                 % initial conditions
                for j=1:nsteps           % go through the steps
                    switch k
                        case 0
                            y=EulerForward(h,t,y);
                        case 1
                            %y=modifiedEuler(h,t,y);
                            y=EulerBackward(h,t,y);
                        case 2
                            y=RungeKutta2(h,t,y);
                        case 3
                            y=RungeKutta3(h,t,y);
                        case 4
                            y=RungeKutta4(h,t,y,3);
                    end
                    Y(j,k+1)=y(1);       % save only the first component of y
                    t=t+h;
                    tout(j)=t;
                end
            end
            y0=SecondOrder(y_int,tout);  % closed-form ground truth
            for k=1:5
                % built-in subplot used in place of the third-party subaxis
                subplot(5,3,l+3*(k-1));
                plot(tout,y0,tout,Y(:,k))
                xlim([t0,tfinal])
                % ylim([-10 100])
                legend('ground truth',names{k})
                if k==1
                    title(sprintf('h=%.2f',h));
                end
                fprintf('\t%s:\terror=%e\n',names{k},norm((y0-Y(:,k))./y0)/nsteps)
            end
        end
    end

    function e=rr(x,y)
        % Sum of relative errors |x(i)-y(i)|/|y(i)| (helper, not called above)
        e=0;
        for i=1:length(x)
            e=e+abs(x(i)-y(i))/abs(y(i));
        end
    end

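    function y = SecondOrder(y_int,t)
        % Closed-form solution of M*y'' + C*y' + K*y = 1 (assumes zt ~= 1)
        global M C K
        zt=C/2/sqrt(M*K);            % damping ratio
        wn=sqrt(K/M);                % natural frequency
        s=sqrt(zt^2-1);              % imaginary when underdamped (zt<1)
        s1=(-zt-s)*wn;
        s2=(-zt+s)*wn;
        yp=1/(M*wn^2);               % particular (steady state) solution, equal to 1/K
        yh0=y_int(1)-yp;             % homogeneous part starts at y(0)-yp so that y(0)=y_int(1)
        c1=(s2*yh0-y_int(2))/(s2-s1);
        c2=(s1*yh0-y_int(2))/(s1-s2);
        y=c1*exp(s1*t)+c2*exp(s2*t); % homogeneous solution
        y=real(y)+yp;                % drop rounding-level imaginary part, add particular solution
    end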

    function dy = f(t, y)   % 2nd order linear constant-coefficient DE as a first order system
        global M C K
        dy = zeros(2,1);
        dy(1) = y(2);
        dy(2) = (-C*y(2)-K*y(1))/M;   % homogeneous part
        dy(2) = dy(2)+1/M;            % constant forcing term
    end

    function y = EulerForward(h, t, y)
        y = y + h*f(t, y);
    end

    function y1 = EulerBackward(h, t0, y0)
        % Backward Euler step: solve F(y1) = y0 + h*f(t0+h,y1) - y1 = 0 by Newton's method
        global M C K
        Jf=[0 1; -K/M -C/M];     % Jacobian of f with respect to y
        Jy=h*Jf-eye(2);          % Jacobian of F with respect to y1
        y1=y0;                   % initial guess
        F=y0+h*f(t0+h,y1)-y1;
        while norm(F)>0.0001
            y1=y1-Jy\F;          % Newton update (backslash instead of inv)
            F=y0+h*f(t0+h,y1)-y1;
        end
    end

    function y = modifiedEuler(h, t, y)    % trapezoidal (Heun's) method
        k1 = f(t, y);
        k2 = f(t+h, y + h*k1);
        y = y + h*(k1 + k2)/2;
    end

    function y = RungeKutta2(h, t, y)      % midpoint method
        k1 = f(t, y);
        k2 = f(t+h/2, y + h*k1/2);
        y = y + h*k2;
    end

    function y = RungeKutta3(h, t, y)      % Kutta's third order method
        k1 = f(t, y);
        k2 = f(t+h/2, y + h*k1/2);
        k3 = f(t+h, y - h*k1 + 2*h*k2);
        y = y + h*(k1 + 4*k2 + k3)/6;
        % Alternative: Heun's third order method
        % k1 = f(t, y);
        % k2 = f(t+h/3, y + h*k1/3);
        % k3 = f(t+2*h/3, y + 2*h*k2/3);
        % y = y + h*(k1 + 3*k3)/4;
    end

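    function y1 = RungeKutta2a(h, t0, y0)
        % General RK2 with free parameter a = c2 = a21, b1 = 1-1/(2a), b2 = 1/(2a)
        a=0.5;
        k1 = f(t0, y0);
        k2 = f(t0+a*h, y0 + a*h*k1);
        y1 = y0 + h*((1-1/(2*a))*k1 + k2/(2*a));   % weights already sum to 1, no extra /2
    end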

    function y = RungeKutta4(h, t, y, k)
        % One RK4 step from (t, y) with step size h; k selects the tableau
        k1 = f(t, y);
        switch k
            case 1      % classical RK4
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y + h*k2/2);
                k4 = f(t+h, y + h*k3);
                y = y + h*(k1 + 2*k2 + 2*k3 + k4)/6;
            case 2      % 3/8 rule
                k2 = f(t+h/3, y + h*k1/3);
                k3 = f(t+2*h/3, y - h*k1/3 + h*k2);
                k4 = f(t+h, y + h*k1 - h*k2 + h*k3);
                y = y + h*(k1 + 3*k2 + 3*k3 + k4)/8;
            case 3      % another four-stage tableau with 3/8-rule weights
                k2 = f(t+2*h/3, y + 2*h*k1/3);
                k3 = f(t+h/3, y + h*k1/12 + h*k2/4);
                k4 = f(t+h, y - 5*h*k1/4 + h*k2/4 + 2*h*k3);
                y = y + h*(k1 + 3*k2 + 3*k3 + k4)/8;
            case 4      % variant with weights (1, 1, 3, 1)/6
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y + h*k1/6 + h*k2/3);
                k4 = f(t+h, y - h*k2/2 + 3*h*k3/2);
                y = y + h*(k1 + k2 + 3*k3 + k4)/6;
            case 5      % variant with weights (1, 3, 1, 1)/6
                k2 = f(t+h/2, y + h*k1/2);
                k3 = f(t+h/2, y - h*k1/2 + h*k2);
                k4 = f(t+h, y + h*k2/2 + h*k3/2);
                y = y + h*(k1 + 3*k2 + k3 + k4)/6;
        end
    end
