
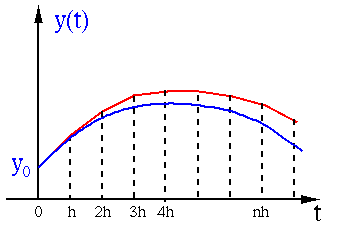

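The slope of the secant through $(t,\,y(t))$ and $(t+h,\,y(t+h))$ is $[y(t+h)-y(t)]/h$. The methods in this section differ in which derivative they use to approximate this slope.

Forward Euler method: This method uses the derivative $y'(t)=f(t,\,y(t))$ at the beginning of the interval $[t,\;t+h]$:

$$y(t+h)\approx y(t)+h\,y'(t)=y(t)+h\,f(t,\,y(t))$$

Comparing this with the Taylor expansion $y(t+h)=y(t)+h\,y'(t)+\frac{h^2}{2}\,y''(t)+O(h^3)$, we see that this method has a second order truncation error $O(h^2)$.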
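As a minimal sketch of the forward update described above, the following Python code applies it step by step. The helper names `forward_euler` and `local_error` and the test problem $y'=-y$, $y(0)=1$ are assumptions chosen for illustration, not part of the original notes.

```python
import math

def forward_euler(f, t0, y0, h, n_steps):
    """Integrate y' = f(t, y) with the explicit update y <- y + h*f(t, y)."""
    t, y = t0, y0
    ys = [y]
    for _ in range(n_steps):
        y = y + h * f(t, y)  # slope taken at the beginning of [t, t+h]
        t = t + h
        ys.append(y)
    return ys

def local_error(h):
    """Error after a single step on the illustrative problem y' = -y, y(0) = 1."""
    y1 = forward_euler(lambda t, y: -y, 0.0, 1.0, h, 1)[-1]
    return abs(y1 - math.exp(-h))

# Halving h should cut the one-step error by roughly a factor of 4,
# which is what a second order truncation error O(h^2) predicts.
print(local_error(0.1) / local_error(0.05))
```

The ratio printed at the end is only a numerical check of the $O(h^2)$ claim on one problem, not a proof.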

If the step size

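Backward Euler method: This method uses the derivative $y'(t+h)=f(t+h,\,y(t+h))$ at the end point of the interval $[t,\;t+h]$:

$$y(t+h)\approx y(t)+h\,y'(t+h)=y(t)+h\,f(t+h,\,y(t+h)) \tag{191}$$

To find the order of the truncation error, we replace $y'(t+h)$ in the expression by its Taylor expansion:

$$y'(t+h)=y'(t)+h\,y''(t)+O(h^2) \tag{192}$$

$$y(t)+h\,y'(t+h)=y(t)+h\,[y'(t)+h\,y''(t)+O(h^2)]=y(t)+h\,y'(t)+O(h^2) \tag{193}$$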

Comparing with the Taylor expansion of $y(t+h)$, the truncation error is again of second order, $O(h^2)$. As the desired function value $y(t+h)$ appears on both sides of Eq. (191), this method is implicit, instead of explicit, as $y(t+h)$ needs to be found by solving the following equation for $y(t+h)$ treated as the unknown:

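$$y(t+h)-h\,f(t+h,\,y(t+h))-y(t)=0 \tag{194}$$

This is a root-finding problem $g(x)=0$ for the unknown $x=y(t+h)$, where

$$g(x)=x-h\,f(t+h,\,x)-y(t) \tag{195}$$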

This method is more computationally expensive than the forward method, since an equation must be solved at every step. However, as an implicit method, it is far more stable than the forward method, as shown below.
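To make the extra cost of the implicit step concrete, here is a minimal sketch that solves the backward Euler equation $x = y(t) + h\,f(t+h,\,x)$ for $x = y(t+h)$. Newton's method is used here as one common choice of root finder; it is an assumption of this sketch, as are the function name `backward_euler_step` and the requirement that the caller supplies the partial derivative `dfdy`.

```python
def backward_euler_step(f, dfdy, t, y, h, tol=1e-12, max_iter=50):
    """One implicit Euler step: solve x = y + h*f(t+h, x) for x by Newton's method.

    f(t, y) is the right-hand side of y' = f(t, y);
    dfdy(t, y) is its partial derivative with respect to y (supplied by the caller).
    """
    x = y + h * f(t, y)                    # forward Euler predictor as initial guess
    for _ in range(max_iter):
        g = x - y - h * f(t + h, x)        # residual of the implicit equation
        dg = 1.0 - h * dfdy(t + h, x)      # derivative of the residual w.r.t. x
        dx = g / dg
        x -= dx                            # Newton update
        if abs(dx) < tol:
            break
    return x

# Illustrative nonlinear problem y' = -y^3 (hypothetical, not from the notes).
x = backward_euler_step(lambda t, y: -y**3, lambda t, y: -3.0 * y**2, 0.0, 1.0, 0.1)
print(x, x + 0.1 * x**3)  # the second value should be very close to y(t) = 1
```

For a linear right-hand side such as $f=-\lambda y$ the residual is linear in $x$, so a single Newton update already lands on the exact solution of the implicit equation.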

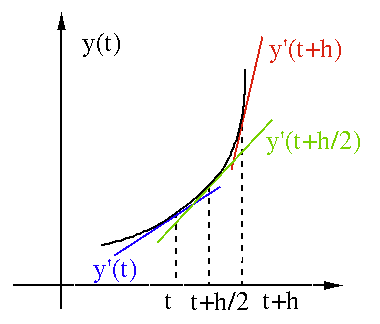

Trapezoidal method: This method uses the average of the derivatives at the beginning and end points of the interval $[t,\;t+h]$:

$$y(t+h)\approx y(t)+\frac{h}{2}\,[y'(t)+y'(t+h)] \tag{196}$$

$$y(t+h)\approx y(t)+\frac{h}{2}\,[f(t,\,y(t))+f(t+h,\,y(t+h))] \tag{197}$$

Equivalently, the integral of $y'$ over the interval is approximated by the trapezoidal rule:

$$y(t+h)=y(t)+\int_t^{t+h} y'(\tau)\,d\tau\approx y(t)+\frac{h}{2}\,[y'(t)+y'(t+h)]=y(t)+\frac{h}{2}\,[f(t,\,y(t))+f(t+h,\,y(t+h))] \tag{198}$$

|

(200) |

To find the order of the truncation error, we replace $y'(t+h)$ by its Taylor expansion $y'(t+h)=y'(t)+h\,y''(t)+O(h^2)$:

$$y(t)+\frac{h}{2}\,[y'(t)+y'(t+h)]=y(t)+\frac{h}{2}\,y'(t)+\frac{h}{2}\,[y'(t)+h\,y''(t)+O(h^2)]=y(t)+h\,y'(t)+\frac{h^2}{2}\,y''(t)+O(h^3) \tag{201}$$

This agrees with the Taylor expansion of $y(t+h)$ through the $h^2$ term, so the truncation error is $O(h^3)$, one order higher than either the forward or backward method.

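Like the backward method, the trapezoidal method is also implicit. To find $y(t+h)$, we need to solve the following equation with $y(t+h)$ treated as the unknown:

$$y(t+h)-\frac{h}{2}\,f(t+h,\,y(t+h))-y(t)-\frac{h}{2}\,f(t,\,y(t))=0 \tag{202}$$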
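The implicit trapezoidal equation can be solved in several ways. The sketch below uses a simple fixed-point iteration, which is an assumption of this example rather than the solver prescribed by the notes. It converges only when $\frac{h}{2}\,|\partial f/\partial y|<1$, so it suits small step sizes. The function name `trapezoidal_step` is hypothetical.

```python
import math

def trapezoidal_step(f, t, y, h, tol=1e-12, max_iter=200):
    """One trapezoidal step: solve x = y + (h/2)*[f(t, y) + f(t+h, x)] for x."""
    f0 = f(t, y)
    x = y + h * f0                              # forward Euler predictor
    for _ in range(max_iter):
        x_new = y + 0.5 * h * (f0 + f(t + h, x))  # fixed-point update
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("fixed-point iteration did not converge; try a smaller h")

def local_error(h):
    """One-step error on the illustrative problem y' = -y, y(0) = 1."""
    return abs(trapezoidal_step(lambda t, y: -y, 0.0, 1.0, h) - math.exp(-h))

# Halving h should cut the one-step error by roughly a factor of 8,
# as expected for a truncation error of order O(h^3).
print(local_error(0.1) / local_error(0.05))
```

For stiff problems, where the fixed-point iteration fails to contract, a Newton-type solver would be the more robust choice.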

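In summary, here is how the three methods find the increment of the function $y(t)$ over the interval $[t,\;t+h]$:

$$y(t+h)-y(t)\approx\begin{cases} h\,y'(t) & \text{forward}\\ h\,y'(t+h) & \text{backward}\\ \frac{h}{2}\,[y'(t)+y'(t+h)] & \text{trapezoidal}\end{cases} \tag{203}$$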

|

(204) |

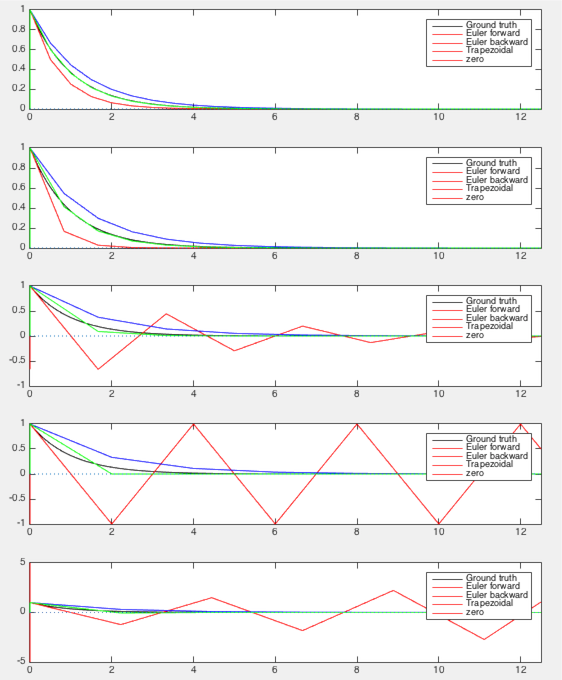

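Example: Consider a simple first order constant coefficient DE:

$$y'(t)+\lambda\,y(t)=0,\qquad\text{i.e.}\qquad y'(t)=f(t,\,y(t))=-\lambda\,y(t) \tag{205}$$

with $\lambda>0$ and initial condition $y(0)=y_0$. The closed form solution of this equation is known to be $y(t)=y_0\,e^{-\lambda t}$, which decays exponentially to zero when $t\rightarrow\infty$. This DE can be solved numerically by each of the three methods, with $y_n$ denoting the estimate of $y(t_n)$ at $t_n=nh$.

Forward method:

$$y_{n+1}=y_n+h\,f(t_n,\,y_n)=(1-h\lambda)\,y_n$$

The iteration converges to zero only if $|1-h\lambda|<1$, i.e., $-1<1-h\lambda<1$, or $0<h<2/\lambda$. If the step size is greater than $2/\lambda$, iteration will diverge.

Backward method:

$$y_{n+1}=y_n+h\,f(t_{n+1},\,y_{n+1})=y_n-h\lambda\,y_{n+1} \tag{207}$$

Solving for $y_{n+1}$ we get $y_{n+1}=y_n/(1+h\lambda)$, which converges to zero for any step size $h>0$.

Trapezoidal method:

$$y_{n+1}=y_n-\frac{h\lambda}{2}\,(y_n+y_{n+1}) \tag{209}$$

Solving for $y_{n+1}$ we get

$$y_{n+1}=\frac{1-h\lambda/2}{1+h\lambda/2}\,y_n$$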

The plots compare the three methods for several different step sizes $h$.

We make the following observations:

- For this decaying solution, the magnitude of the true slope over the interval is overestimated by $y'(t)$ used in the forward method, but underestimated by $y'(t+h)$ used by the backward method. As the average of the two, the result of the trapezoidal method coincides with the true solution more closely.
- The backward and trapezoidal methods remain convergent for every step size $h$, while the performance of the forward method is very sensitive to the step size $h$. In particular, when $h$ exceeds the limit derived above, the iteration becomes divergent.
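The sensitivity to step size can be checked numerically. The sketch below assumes the decaying test equation $y'=-\lambda y$ with $\lambda>0$, for which each method multiplies $y_n$ by a fixed factor per step. The symbol $\lambda$, the value `lam = 1.0`, the step sizes, and the function name `amplification_factors` are all illustrative assumptions.

```python
def amplification_factors(lam, h):
    """Per-step factors y[n+1] / y[n] of each method applied to y' = -lam * y."""
    z = h * lam
    return {
        "forward": 1.0 - z,                               # explicit Euler
        "backward": 1.0 / (1.0 + z),                      # implicit Euler
        "trapezoidal": (1.0 - 0.5 * z) / (1.0 + 0.5 * z),
    }

lam = 1.0  # hypothetical decay rate chosen for illustration
for h in (0.5, 1.5, 2.5):  # hypothetical step sizes on both sides of 2 / lam
    factors = amplification_factors(lam, h)
    # The iteration decays to zero only if the factor has magnitude below 1.
    status = {name: ("stable" if abs(g) < 1.0 else "unstable")
              for name, g in factors.items()}
    print(h, status)
```

With these assumed values only the forward method crosses the threshold $|1-h\lambda|=1$, at $h=2/\lambda$. The factors of the two implicit methods stay below 1 in magnitude for every $h>0$.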

In general there are two different types of approaches to estimate some value in the future, such as the next function value $y(t+h)$:

- Explicit methods use only the present and past information such as $y(t)$ and $y'(t)$. Such a method may be divergent and therefore unstable (the iteration grows out of bound);
- Implicit methods use future information such as $y'(t+h)$, as well as the present and past information such as $y(t)$ and $y'(t)$. Such a method is in general stable.

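We further consider the midpoint method, which uses the derivative at the midpoint $t+h/2$ of the interval $[t,\;t+h]$:

$$y(t+h)\approx y(t)+h\,y'(t+h/2) \tag{211}$$

$$y(t+h)\approx y(t)+h\,f(t+h/2,\,y(t+h/2)) \tag{212}$$

The Taylor expansion of $y'(t+h/2)$ is:

$$y'(t+h/2)=y'(t)+\frac{h}{2}\,y''(t)+O(h^2) \tag{213}$$

Substituting this expansion into the right-hand side of Eq. (211),

$$y(t)+h\,y'(t+h/2)=y(t)+h\,\left[y'(t)+\frac{h}{2}\,y''(t)+O(h^2)\right] \tag{214}$$

we get

$$y(t)+h\,y'(t+h/2)=y(t)+h\,y'(t)+\frac{h^2}{2}\,y''(t)+O(h^3) \tag{215}$$

This agrees with the Taylor expansion of $y(t+h)$ through the $h^2$ term, so the truncation error $O(h^3)$ is one order higher than that of either Euler's forward or backward method. This equation can be expressed iteratively: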

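$$y_{n+1}=y_n+h\,f(t_n+h/2,\,y(t_n+h/2)) \tag{216}$$

The argument $y(t_n+h/2)$ of the function on the right-hand side can be approximated by the first two terms of its Taylor expansion:

$$y(t_n+h/2)\approx y(t_n)+\frac{h}{2}\,y'(t_n)=y_n+\frac{h}{2}\,f(t_n,\,y_n) \tag{217}$$

so that the iteration becomes explicit:

$$y_{n+1}=y_n+h\,f\!\left(t_n+\frac{h}{2},\;y_n+\frac{h}{2}\,f(t_n,\,y_n)\right) \tag{219}$$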
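A minimal sketch of the midpoint iteration follows. It assumes the explicit form $y_{n+1}=y_n+h\,f(t_n+h/2,\;y_n+\frac{h}{2}f(t_n,\,y_n))$, in which the midpoint value is estimated by a half step of the forward method. The function names and the test problem $y'=-y$ are illustrative assumptions.

```python
import math

def midpoint_step(f, t, y, h):
    """One explicit midpoint step for y' = f(t, y)."""
    y_half = y + 0.5 * h * f(t, y)           # two-term Taylor estimate of y(t + h/2)
    return y + h * f(t + 0.5 * h, y_half)    # full step using the midpoint slope

def solve_midpoint(f, t0, y0, h, n_steps):
    """Apply midpoint_step repeatedly and return the list of estimates y_0..y_n."""
    t, y = t0, y0
    ys = [y]
    for _ in range(n_steps):
        y = midpoint_step(f, t, y, h)
        t += h
        ys.append(y)
    return ys

def local_error(h):
    """One-step error on the illustrative problem y' = -y, y(0) = 1."""
    return abs(midpoint_step(lambda t, y: -y, 0.0, 1.0, h) - math.exp(-h))

# Halving h should cut the one-step error by roughly a factor of 8 (order O(h^3)).
print(local_error(0.1) / local_error(0.05))
```

Unlike the backward and trapezoidal methods, this form needs no equation solving, at the cost of two evaluations of $f$ per step.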