As we see in the previous example, due to the change of the system

parameters, the problem may become difficult to solve, in the sense

that significantly reduced step size and thereby much increased

computing time will be needed for the numerical solution to remain

bounded. Such problems are said to be stiff, which is hard

to be strictly defined mathematically. One way to measure the

stiffness of a given problem is the stiffness ratio defined as

, where

, where

and

and

are

respectively the eigenvalues of the coefficient matrix

are

respectively the eigenvalues of the coefficient matrix  with most negative and least negative real part, assuming all

eigenvalues have negative real parts. In the example above, the

stiffness ratios corresponding to the four sets of parameters are

1, 10, 100, and 1000, i.e., the problem becomes progressively more

stiff measured by this particular paramter.

with most negative and least negative real part, assuming all

eigenvalues have negative real parts. In the example above, the

stiffness ratios corresponding to the four sets of parameters are

1, 10, 100, and 1000, i.e., the problem becomes progressively more

stiff measured by this particular paramter.

The stiffness of a differential equation is not strictly defined

mathematically. Some descriptive and commonly accepted criteria for

the stiffness are listed below. They are not necessarily independent

of each other, instead, they may be considered as different aspects

of the same phenomenon.

- Small step size is required for the stability rather than the

accuracy of the method used.

- Implicit methods need to be used as explicit methods require

extremely small step size and are therefore extremely slow.

- The solution contains components that decay much more quickly

than others, i.e., the components in the solution decay at very

different time scales.

- All eigenvalues of the coefficient matrix of a linear constant

coefficient DE

have negative

real part, and the stiffness ratio is large.

have negative

real part, and the stiffness ratio is large.

- All eigenvalues of the Jacobian of the

function of a

DE

function of a

DE

differ greatly in magnitude.

differ greatly in magnitude.

From the last example we also see that even if the given DE is stiff,

requiring small step size and long computing time for the numerical

methods used to remain stable, there are some methods, such as the

backward Euler's method, that are stable, as the numerical solution

they produce will not grow without bound, although the order of their

error is not necessarily high, and the step size is not necessarily

small. We therefore see that the accuracy in terms of the order of

the truncation error and the stability are two different characteristics

of a specific method. A stable method of low order of error such as the

backward Euler method may be more stable than a method with higher order

of error such as RK4. We need to have a way to measure the stability of

a specific method. To do so, we consider a simple test equation:

|

(318) |

where the initial value  is real and

is real and  is assumed to be

complex in general. The closed form solution of this equation is

is assumed to be

complex in general. The closed form solution of this equation is

, which converges if

, which converges if

:

:

|

(319) |

The stability of a specific method can now be measured by applying it to

this test equation, in terms of its region of absolute stability

(RAS), defined as the region on the complex plane, any value  in which corresponds to a bounded solution of the differential equation.

If, in particular, the entire left-hand plan

in which corresponds to a bounded solution of the differential equation.

If, in particular, the entire left-hand plan

is inside

the RAS of a method, then it is absolute stable or A-stable.

An A-stable method is always stable, i.e., the numerical solution it

generates is bounded, for any step size, so long as

is inside

the RAS of a method, then it is absolute stable or A-stable.

An A-stable method is always stable, i.e., the numerical solution it

generates is bounded, for any step size, so long as

, i.e.,

the true solution of the DE is bounded.

, i.e.,

the true solution of the DE is bounded.

Note that the RAS depends on the product  (instead of either

(instead of either

or

or  alone), because a smaller step size

alone), because a smaller step size  is needed for

a larger

is needed for

a larger

of a more rapidly decaying function

of a more rapidly decaying function

, but a greater step size

, but a greater step size  can be used for a

small

can be used for a

small

of a slowly decaying

of a slowly decaying  .

.

The RAS of a general method, such as the multi-step Adams-Moulton method

(Eq. (225)), can be obtained by applying it to the test

equation

:

:

This iteration can be viewed as a homogeneous difference equation:

|

(321) |

If we assume  , this equation becomes:

, this equation becomes:

where

|

(323) |

is the characteristic polynomial with  roots

roots

. The

solution of the test equation Eq. (320) is the linear combination

of

. The

solution of the test equation Eq. (320) is the linear combination

of  terms of

terms of  :

:

|

(324) |

where the coefficients

can be determined based on

can be determined based on

given initial conditions

given initial conditions

. For this solution to

be bounded we must have

. For this solution to

be bounded we must have  for all

for all

.

.

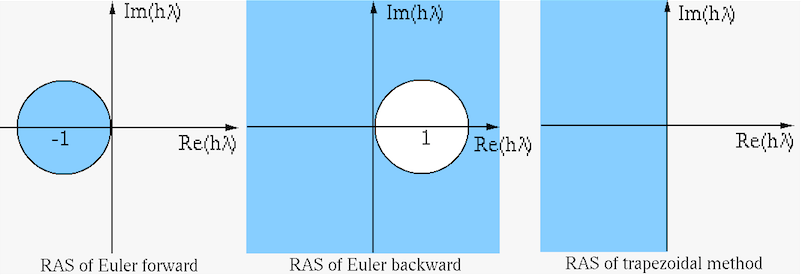

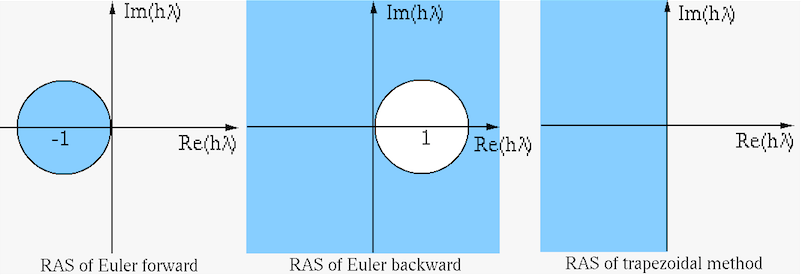

- Forward Euler's method (Eq. (206),

):

):

The characteristic equation is

|

(326) |

We must have

for the solution to be bounded.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

for the solution to be bounded.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

|

(327) |

This RAS is the unite circle centered at  , the method is

not A-stable. On the real axis, we have

, the method is

not A-stable. On the real axis, we have

, i.e.,

, i.e.,

or

or

. If

. If  , i.e., the

solution

, i.e., the

solution

exponentially decays to 0, the

numerical solution will converge only if

exponentially decays to 0, the

numerical solution will converge only if

.

.

- Backward Euler's method (Eq. (208),

):

):

|

(328) |

|

(329) |

For the solution to be bounded, we must have

,

i.e.,

,

i.e.,

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

|

(330) |

This RAS is the area outside the unite circle centered at  .

If

.

If  , i.e., the solution

, i.e., the solution

exponentially

decays to 0, the numerical solution will always converge, as no

matter how large the step size

exponentially

decays to 0, the numerical solution will always converge, as no

matter how large the step size  may be,

may be,

is always

smaller than 1. The method is A-stable.

is always

smaller than 1. The method is A-stable.

- The trapezoidal method (Eq. (210)),

):

):

|

(331) |

|

(332) |

For the solution to be bounded, we must have

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

|

(333) |

The inequality holds if

, i.e., the RAS is the entire

left-hand plan, the method is A-stable.

, i.e., the RAS is the entire

left-hand plan, the method is A-stable.

- Second order Adams-Bashforth method (Eq. (222),

):

):

|

(334) |

|

(335) |

The two roots are:

|

(336) |

For the solution to be bounded, we must have  . In particular,

on the real axis, we have

. In particular,

on the real axis, we have

|

(337) |

i.e.,

|

(338) |

Solving these inequalities, we get

.

.

Note that for methods of order  , the condition of stability can

no longer be found by solving

, the condition of stability can

no longer be found by solving

.

.

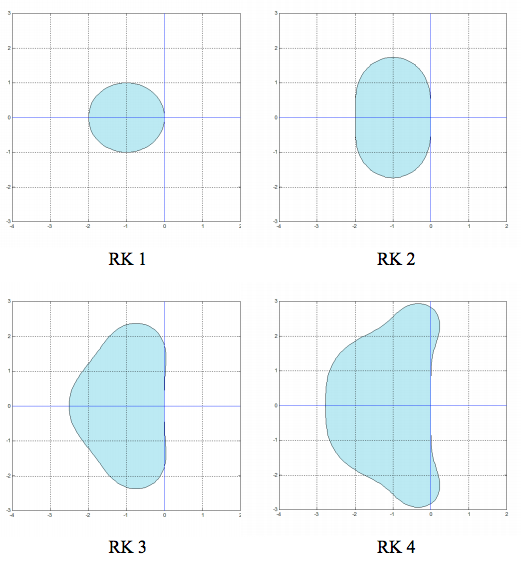

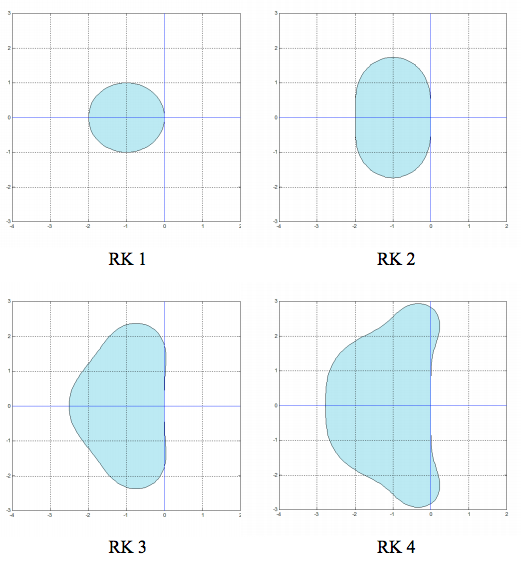

- Runge-Kutta methods:

As shown in the example in Section ![[*]](crossref.png) , the iterations of RK

methods for the test equation

, the iterations of RK

methods for the test equation

are

are

For the iteration to converge, we need to have

, i.e.,

the magnitudes of the corresponding polynomial of

, i.e.,

the magnitudes of the corresponding polynomial of  inside the

brackets must be smaller than 1. All points of

inside the

brackets must be smaller than 1. All points of  that satisfy

the inequality above form the RAS on the complex plane, as shown

here:

that satisfy

the inequality above form the RAS on the complex plane, as shown

here:

We see that although the RK methods have high order of errors (e.g.,

RK4 with  ), they are not A-stable, while the implicit backward

Euler and trapezoidal methods are both A-stable, even though they have

lower order error, as shown in the example of the the previous section.

), they are not A-stable, while the implicit backward

Euler and trapezoidal methods are both A-stable, even though they have

lower order error, as shown in the example of the the previous section.

The discussion above for single-variable linear constant coefficient DEs

can be generalized to a multi-variable linear constant coefficient DE

systems

with a general solution:

with a general solution:

|

(343) |

which in turn can be further generalized to nonlinear DE systems which can

be approximately linearized by dropping all higher order terms in its

Taylor expansion except the linear term represented by its Jacobian matrix

. Then the discussion above in terms of the constant coefficient

matrix

. Then the discussion above in terms of the constant coefficient

matrix  in a linear system can be generalized to a non-linear

system in terms of

in a linear system can be generalized to a non-linear

system in terms of  .

.

have negative

real part, and the stiffness ratio is large.

have negative

real part, and the stiffness ratio is large.

function of a

DE

function of a

DE

differ greatly in magnitude.

differ greatly in magnitude.

is real and

is real and  is assumed to be

complex in general. The closed form solution of this equation is

is assumed to be

complex in general. The closed form solution of this equation is

, which converges if

, which converges if

:

:

in which corresponds to a bounded solution of the differential equation.

If, in particular, the entire left-hand plan

in which corresponds to a bounded solution of the differential equation.

If, in particular, the entire left-hand plan

is inside

the RAS of a method, then it is absolute stable or A-stable.

An A-stable method is always stable, i.e., the numerical solution it

generates is bounded, for any step size, so long as

is inside

the RAS of a method, then it is absolute stable or A-stable.

An A-stable method is always stable, i.e., the numerical solution it

generates is bounded, for any step size, so long as

, i.e.,

the true solution of the DE is bounded.

, i.e.,

the true solution of the DE is bounded.

, this equation becomes:

, this equation becomes:

![$\displaystyle cz^n [ (1-h\lambda b_{n+m})z^m-(1+h\lambda b_{n+m-1})z^{m-1}+h\lambda\sum_{i=0}^{m-2}b_iz^i ]$](img967.svg)

roots

roots

. The

solution of the test equation Eq. (320) is the linear combination

of

. The

solution of the test equation Eq. (320) is the linear combination

of  terms of

terms of  :

:

can be determined based on

can be determined based on

given initial conditions

given initial conditions

. For this solution to

be bounded we must have

. For this solution to

be bounded we must have  for all

for all

.

.

):

):

for the solution to be bounded.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

for the solution to be bounded.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

, the method is

not A-stable. On the real axis, we have

, the method is

not A-stable. On the real axis, we have

, i.e.,

, i.e.,

or

or

. If

. If  , i.e., the

solution

, i.e., the

solution

exponentially decays to 0, the

numerical solution will converge only if

exponentially decays to 0, the

numerical solution will converge only if

.

.

):

):

,

i.e.,

,

i.e.,

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

.

If

.

If  , i.e., the solution

, i.e., the solution

exponentially

decays to 0, the numerical solution will always converge, as no

matter how large the step size

exponentially

decays to 0, the numerical solution will always converge, as no

matter how large the step size  may be,

may be,

is always

smaller than 1. The method is A-stable.

is always

smaller than 1. The method is A-stable.

):

):

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

.

Alternatively, we can reach the same result based on the direction

observation that the following ratio must be smaller than 1 for the

solution to converge:

, i.e., the RAS is the entire

left-hand plan, the method is A-stable.

, i.e., the RAS is the entire

left-hand plan, the method is A-stable.

):

):

. In particular,

on the real axis, we have

. In particular,

on the real axis, we have

.

.

![[*]](crossref.png) , the iterations of RK

methods for the test equation

, the iterations of RK

methods for the test equation

![$\displaystyle y_{n+1}=[1+h\lambda+(h\lambda)^2/2]y_n$](img1016.svg)

![$\displaystyle y_{n+1}=[1+h\lambda+(h\lambda)^2/2+(h\lambda)^3/6]y_n$](img1018.svg)

![$\displaystyle y_{n+1}=[1+h\lambda+(h\lambda)^2/2+(h\lambda)^3/6+(h\lambda)^4/24]y_n$](img1020.svg)

, i.e.,

the magnitudes of the corresponding polynomial of

, i.e.,

the magnitudes of the corresponding polynomial of  inside the

brackets must be smaller than 1. All points of

inside the

brackets must be smaller than 1. All points of  that satisfy

the inequality above form the RAS on the complex plane, as shown

here:

that satisfy

the inequality above form the RAS on the complex plane, as shown

here: