
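The perceptron network (F. Rosenblatt, 1957) is a two-layer learning network containing an input layer of $d$ nodes and an output layer of $m$ nodes. It is trained in a supervised manner on a set of $N$ samples ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$, each a $d$-dimensional vector ${\bf x}=[x_1,\cdots,x_d]^T$, labeled by ${\bf y}=[y_1,\cdots,y_N]^T$.

We first consider the special case where the output layer has only one node, with weight vector ${\bf w}=[w_1,\cdots,w_d]^T$ and bias $b$. The network is then a binary classifier with output $\hat{y}=\mbox{sign}({\bf w}^T{\bf x}+b)\in\{1,-1\}$:

$$\mbox{If}\;\;{\bf w}^T{\bf x}+b>0\;\;\mbox{then}\;\;\hat{y}=1,\;{\bf x}\in C_+;\qquad \mbox{if}\;\;{\bf w}^T{\bf x}+b<0\;\;\mbox{then}\;\;\hat{y}=-1,\;{\bf x}\in C_- \qquad (29)$$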

Dividing both sides of the inequalities by $||{\bf w}||>0$, we get

$$\frac{{\bf w}^T{\bf x}+b}{||{\bf w}||}=p_{\bf w}({\bf x})-b',\qquad p_{\bf w}({\bf x})=\frac{{\bf w}^T{\bf x}}{||{\bf w}||},\qquad b'=-\frac{b}{||{\bf w}||} \qquad (30)$$

Here $p_{\bf w}({\bf x})$ is the projection of ${\bf x}$ onto the normal direction ${\bf w}/||{\bf w}||$ of the partitioning hyperplane ${\bf w}^T{\bf x}+b=0$, and $b'\,{\bf w}/||{\bf w}||$ is the vector from the origin to the hyperplane ($|b'|=|b|/||{\bf w}||$ is the distance of the hyperplane to the origin). Now the classification above can be rewritten as

$$\mbox{If}\;\;p_{\bf w}({\bf x})>b'\;\;\mbox{then}\;\;{\bf x}\in C_+;\qquad \mbox{if}\;\;p_{\bf w}({\bf x})<b'\;\;\mbox{then}\;\;{\bf x}\in C_- \qquad (31)$$

i.e., ${\bf x}$ is classified into either of the two classes based on whether its projection $p_{\bf w}({\bf x})$ onto ${\bf w}$ is greater or smaller than the bias $b'$, that is, on whether ${\bf x}$ is on the positive or negative side of the plane.
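As a quick numerical check of Eqs. (29) to (31), the following MATLAB sketch uses a hypothetical hyperplane with ${\bf w}=[3,4]^T$ and $b=-10$ (values chosen only for illustration). It confirms that the sign of ${\bf w}^T{\bf x}+b$ agrees with comparing the projection $p_{\bf w}({\bf x})$ against $b'$, and that the point $b'\,{\bf w}/||{\bf w}||$ lies on the plane.

```matlab
w = [3; 4];  b = -10;            % hypothetical hyperplane w'*x + b = 0, ||w|| = 5
x = [2; 3];                      % hypothetical test point
p  = w'*x / norm(w);             % projection of x onto the unit normal w/||w||
bp = -b / norm(w);               % b' = -b/||w||, distance of the plane to the origin
x0 = bp * w / norm(w);           % vector from the origin to the plane
side29 = sign(w'*x + b);         % decision by rule (29)
side31 = sign(p - bp);           % decision by rule (31)
fprintf('p = %g, b'' = %g, rule (29): %d, rule (31): %d\n', p, bp, side29, side31);
```

Both rules put this point on the positive side, since $p_{\bf w}({\bf x})=3.6>b'=2$.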

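In all these binary classification methods, the parameters ${\bf w}$ and $b$ of the decision hyperplane are to be determined in the training process based on the labeled training samples.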

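We now consider specifically the training algorithm of the perceptron network as a binary classifier. As always, we redefine the data vector and the weight vector in augmented form as

$${\bf x}=[x_0=1,x_1,\cdots,x_d]^T,\qquad {\bf w}=[w_0=b,w_1,\cdots,w_d]^T$$

so that ${\bf w}^T{\bf x}+b$ becomes simply ${\bf w}^T{\bf x}$, and the output is $\hat{y}=\mbox{sign}({\bf w}^T{\bf x})$.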
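A minimal sketch of the augmentation, reusing the same hypothetical ${\bf w}$, $b$ and ${\bf x}$ as illustrative values. Prepending $x_0=1$ to the data and $w_0=b$ to the weights turns ${\bf w}^T{\bf x}+b$ into a single inner product, and the same holds for a whole data matrix with one sample per column.

```matlab
w = [3; 4];  b = -10;  x = [2; 3];   % hypothetical values
xa = [1; x];                         % augmented data vector, x_0 = 1
wa = [b; w];                         % augmented weight vector, w_0 = b
f1 = w'*x + b;                       % original form
f2 = wa'*xa;                         % augmented form, same value
X  = [x, [0; 0]];                    % two samples as columns
Xa = [ones(1, size(X, 2)); X];       % augment all samples at once
disp([f1 f2]); disp(wa'*Xa);
```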

The randomly initialized weight vector ${\bf w}$ is iteratively updated each time a training sample ${\bf x}$ labeled by $y$ is presented, according to the delta rule

$${\bf w}_{n+1}={\bf w}_n+\eta\,\delta\,{\bf x}={\bf w}_n+\eta\,(y-\hat{y})\,{\bf x} \qquad (32)$$

where $\eta>0$ is the step size, here called the learning rate, which is set to 1 in the code below for simplicity. We can show that by this iteration, ${\bf w}$ is always modified in the direction that reduces the error $\delta=y-\hat{y}$ for the current sample.

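When a training sample ${\bf x}$ labeled by $y$ is presented, the output $\hat{y}=\mbox{sign}({\bf w}^T{\bf x})$ and the error $\delta=y-\hat{y}$ fall into one of the following four cases:

$$\begin{array}{c|rr|r}
\mbox{case} & y & \hat{y} & \delta=y-\hat{y}\\\hline
1 & 1 & 1 & 0\\
2 & -1 & 1 & -2\\
3 & 1 & -1 & 2\\
4 & -1 & -1 & 0
\end{array}\qquad (33)$$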
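The table can be reproduced in a few lines. This sketch also checks that a sample is misclassified exactly when $y$ and $\hat{y}$ differ in sign, and that in those cases the error equals $2y$.

```matlab
% The four (y, yhat) combinations of table (33), one per row
y    = [ 1; -1;  1; -1];
yhat = [ 1;  1; -1; -1];
delta = y - yhat;                % error used by the delta rule
wrong = (y .* yhat) < 0;         % misclassified: y and yhat differ in sign
disp([y yhat delta])             % columns: y, yhat, delta
```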

In cases 1 and 4, $\hat{y}=y$, the error is $\delta=0$, and the weight vector ${\bf w}$ is not modified. But in cases 2 and 3, $\hat{y}\ne y$, the error is $\delta=\mp 2$, and the weight vector ${\bf w}$ is modified in either of the following two ways.

Case 2: if $y=-1$ but $\hat{y}=1$, then $\delta=-2$ and

$${\bf w}_{n+1}={\bf w}_n-2\eta\,{\bf x} \qquad (34)$$

When the same ${\bf x}$ is presented to the network again in the future, the function ${\bf w}^T{\bf x}$ is smaller than its previous value:

$${\bf w}_{n+1}^T{\bf x}={\bf w}_n^T{\bf x}-2\eta\,||{\bf x}||^2<{\bf w}_n^T{\bf x} \qquad (35)$$

i.e., the output $\hat{y}=\mbox{sign}({\bf w}^T{\bf x})$ is more likely to be the same as the desired $y=-1$.

Case 3: if $y=1$ but $\hat{y}=-1$, then $\delta=2$ and

$${\bf w}_{n+1}={\bf w}_n+2\eta\,{\bf x} \qquad (36)$$

When the same ${\bf x}$ is presented again, the function ${\bf w}^T{\bf x}$ is greater than its previous value:

$${\bf w}_{n+1}^T{\bf x}={\bf w}_n^T{\bf x}+2\eta\,||{\bf x}||^2>{\bf w}_n^T{\bf x} \qquad (37)$$

i.e., the output $\hat{y}$ is more likely to be the same as the desired $y=1$.
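A numerical sketch of both cases, using a hypothetical augmented sample ${\bf x}=[1,2,1]^T$ (so $||{\bf x}||^2=6$), made-up weight vectors and $\eta=1$. Each update changes ${\bf w}^T{\bf x}$ by exactly $\eta\,\delta\,||{\bf x}||^2=\mp 2\eta||{\bf x}||^2$, as in Eqs. (35) and (37). In this small example a single step already flips the output to the desired label; in general one step only moves ${\bf w}^T{\bf x}$ in the right direction.

```matlab
eta = 1;
x = [1; 2; 1];                   % hypothetical augmented sample (x_0 = 1), ||x||^2 = 6

% Case 2: y = -1 but yhat = 1
w2 = [0.5; 1; -1];               % w2'*x = 0.5 + 2 - 1 = 1.5 > 0
y2 = -1;
d2 = y2 - sign(w2'*x);           % delta = -2
w2new = w2 + eta*d2*x;           % Eq. (34): w - 2*eta*x

% Case 3: y = 1 but yhat = -1
w3 = [0.5; -1; 1];               % w3'*x = 0.5 - 2 + 1 = -0.5 < 0
y3 = 1;
d3 = y3 - sign(w3'*x);           % delta = 2
w3new = w3 + eta*d3*x;           % Eq. (36): w + 2*eta*x

fprintf('case 2: %g -> %g, case 3: %g -> %g\n', w2'*x, w2new'*x, w3'*x, w3new'*x);
```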

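Since $\delta=2y$ in both cases, Eq. (34) for $y=-1$ and Eq. (36) for $y=1$ can be combined to become

$${\bf w}_{n+1}={\bf w}_n+\eta\,y\,{\bf x} \qquad (38)$$

where the scaling constant 2 is dropped, as it can be absorbed into the learning rate if we let $2\eta$ be the new $\eta$. Also, as $\hat{y}=\mbox{sign}({\bf w}^T{\bf x})$, a sample is misclassified when $y\,{\bf w}^T{\bf x}<0$ (or ${\bf w}^T{\bf x}=0$, i.e., the sample lies on the plane). Now the learning rule can be rewritten as:

$$\mbox{If}\;\;y\,{\bf w}_n^T{\bf x}>0\;\;\mbox{then}\;\;{\bf w}_{n+1}={\bf w}_n;\qquad \mbox{if}\;\;y\,{\bf w}_n^T{\bf x}\le 0\;\;\mbox{then}\;\;{\bf w}_{n+1}={\bf w}_n+\eta\,y\,{\bf x} \qquad (39)$$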
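The rule in Eq. (39) can be sketched as a complete training loop. This is a minimal illustration, not the author's code: the six 2-D samples are hypothetical, ${\bf w}$ starts at zero rather than at random values, and the samples are presented in a fixed cyclic order so that the run is deterministic. Training stops after the first full pass with no updates.

```matlab
X = [2 3 2 -1 -2  0;
     2 1 3 -1  0 -2];            % hypothetical 2-D samples, one per column
y = [1 1 1 -1 -1 -1];            % labels in {+1,-1}
[d, N] = size(X);
X = [ones(1, N); X];             % augmentation: x_0 = 1, so w_0 plays the role of b
w = zeros(d+1, 1);               % zero start (the text uses random initialization)
eta = 1;                         % learning rate
for epoch = 1:100
    nerr = 0;
    for n = 1:N                  % present the samples in a fixed cyclic order
        if y(n) * (w' * X(:, n)) <= 0    % misclassified or on the plane: rule (39)
            w = w + eta * y(n) * X(:, n);
            nerr = nerr + 1;
        end
    end
    if nerr == 0                 % an error-free pass: every sample is classified correctly
        break
    end
end
yhat = sign(w' * X);             % outputs after training
fprintf('converged after %d epochs, w = [%g %g %g]\n', epoch, w);
```

Because these samples are linearly separable, the loop terminates; here a single update in the first pass already separates them.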

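In summary, the learning law guarantees that whenever a sample is misclassified, the weight vector ${\bf w}$ is modified so that the output for that sample moves toward the desired label. If the two classes are linearly separable, this process converges to a separating hyperplane after a finite number of updates (the perceptron convergence theorem).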

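This binary classifier with a single output node can be generalized to a multi-class classifier with $m$ output nodes, each with its own weight vector, forming the weight matrix ${\bf W}=[{\bf w}_1,\cdots,{\bf w}_m]$. When a sample ${\bf x}$ labeled by ${\bf y}=[y_1,\cdots,y_m]^T$ is presented, with error $\boldsymbol{\delta}={\bf y}-\hat{\bf y}$, all weights are updated by the same delta rule, written element-wise, node-wise, or in matrix form:

$$w_{ji}\leftarrow w_{ji}+\eta\,\delta_i\,x_j,\quad\mbox{or}\quad {\bf w}_i\leftarrow{\bf w}_i+\eta\,\delta_i\,{\bf x}\;\;(i=1,\cdots,m),\quad\mbox{or}\quad {\bf W}\leftarrow{\bf W}+\eta\,{\bf x}\,\boldsymbol{\delta}^T \qquad (40)$$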

The binary outputs $\hat{y}_i=\mbox{sign}({\bf w}_i^T{\bf x})$ of the $m$ output nodes form an $m$-dimensional binary vector $\hat{\bf y}=[\hat{y}_1,\cdots,\hat{y}_m]^T$, which is to be compared with the labeling ${\bf y}$ of the current input ${\bf x}$ to give the error $\boldsymbol{\delta}={\bf y}-\hat{\bf y}$. The labels of $K$ classes can be encoded either by one-hot encoding ($m=K$ output nodes) or by binary encoding ($m=\lceil\log_2 K\rceil$ output nodes). When the training is complete, an unlabeled input ${\bf x}$ is classified to the one of the $K$ classes whose label matches the perceptron's output. In the case of one-hot encoding, it is possible for the binary output $\hat{\bf y}$ not to match any of the $K$ one-hot encoded classes (e.g., $\hat{\bf y}=[-1\;1\;-1\;1]^T$). In this case, the input ${\bf x}$ can be classified to the class corresponding to the node with the greatest output value ${\bf w}_i^T{\bf x}$.
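A sketch of this decision rule for the one-hot case, assuming $K=m=4$ and a hypothetical $2\times 4$ weight matrix chosen so that $\hat{\bf y}=[-1\;1\;-1\;1]^T$ matches no one-hot code. The input is then assigned to the node with the greatest output value ${\bf w}_i^T{\bf x}$.

```matlab
% Hypothetical one-hot setting with K = m = 4 output nodes and d = 2 inputs
W = [-0.5 -0.2  0.1  0.3;
     -0.3  0.8 -0.6  0.4];       % d x m weight matrix, column i is w_i
x = [1; 1];
f = W' * x;                      % output values w_i'*x of the m nodes
yhat = sign(f);                  % binary output, here [-1; 1; -1; 1]
onehot = 2*eye(4) - 1;           % the K one-hot codes as columns (+1 at one node, -1 elsewhere)
matched = find(all(onehot == yhat, 1));   % class whose code equals yhat, if any
if isempty(matched)
    [~, k] = max(f);             % no exact match: take the node with the greatest output
else
    k = matched;
end
fprintf('assigned to class %d\n', k);
```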

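The Matlab code for the essential part of the algorithm is listed below. Array `X` holds the $N$ training samples as its columns, and array `Y` holds their $m$-dimensional labels (entries $\pm 1$), one column per sample.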

```matlab
[X Y]=DataOneHot;                  % get data: X is d x N, Y is m x N
K=length(unique(Y','rows'));       % number of classes
[d N]=size(X);                     % number of dimensions and number of samples
X=[ones(1,N); X];                  % data augmentation: x_0=1
d=d+1;                             % dimension of the augmented data
m=size(Y,1);                       % number of output nodes
W=2*rand(d,m)-1;                   % random initialization of weights in [-1,1]
eta=1;                             % learning rate
nt=10^4;                           % maximum number of iterations
for it=1:nt
    n=randi([1 N]);                % random index
    x=X(:,n);                      % pick a training sample x
    y=Y(:,n);                      % label of x
    yhat=sign(W'*x);               % binary output
    delta=y-yhat;                  % error between desired and actual outputs
    for i=1:m
        W(:,i)=W(:,i)+eta*delta(i)*x;   % update weights of all m output nodes
    end
    if ~mod(it,N)                  % test once every epoch (N iterations)
        er=test(X,Y,W);
        if er<10^(-9)              % no misclassification on the training set
            break
        end
    end
end
```
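The inner loop over the $m$ output nodes in the listing applies ${\bf w}_i\leftarrow{\bf w}_i+\eta\,\delta_i\,{\bf x}$ to each column of `W`, which is the same as the single matrix update ${\bf W}\leftarrow{\bf W}+\eta\,{\bf x}\,\boldsymbol{\delta}^T$. The sketch below, with hypothetical numbers ($d=3$, $m=2$), checks that the two forms agree.

```matlab
eta = 1;
W = [ 0.2 -0.5;
      0.1  0.3;
     -0.4  0.6];                 % hypothetical d x m weights (d = 3, m = 2)
x = [1; -2; 1];                  % hypothetical augmented sample
y = [1; -1];                     % desired m-dimensional label
yhat = sign(W' * x);             % here [-1; -1], so node 1 is wrong
delta = y - yhat;                % [2; 0]
W1 = W;                          % node-by-node form, as in the listing
for i = 1:size(W, 2)
    W1(:, i) = W1(:, i) + eta * delta(i) * x;
end
W2 = W + eta * x * delta';       % matrix form: outer product x*delta'
disp(max(abs(W1(:) - W2(:))));   % difference between the two forms
```

Only the column of the misclassifying node changes, since $\delta_i=0$ for every correct node.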

This is the function that tests the classifier on the training set based on the estimated weight vectors in `W`:

```matlab
function er=test(X,Y,W)            % test based on estimated W
    [d N]=size(X);
    Ne=0;                          % number of misclassifications
    for n=1:N
        x=X(:,n);
        yhat=sign(W'*x);
        delta=Y(:,n)-yhat;
        if any(delta)              % if misclassification occurs at some output nodes
            Ne=Ne+1;               % update number of misclassifications
        end
    end
    er=Ne/N;                       % error rate (fraction of misclassified samples)
end
```

This is the code that generates the training set, labeled by either the one-hot or the binary encoding method:

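```matlab
function [X,Y]=DataOneHot
    d=3;                           % dimension of the data
    K=8;                           % number of classes
    onehot=1;                      % onehot=0 for binary encoding
    Means=[ -1 -1 -1 -1  1  1  1  1;
            -1 -1  1  1 -1 -1  1  1;
            -1  1 -1  1 -1  1 -1  1];   % class means at the 8 corners of a cube
    Nk=50*ones(1,K);               % number of samples in each class
    N=sum(Nk);                     % total number of samples
    X=[];
    Y=[];
    s=0.2;                         % standard deviation of each class (s=0.4 gives more overlap)
    for k=1:K                      % for each of the K classes
        Xk=Means(:,k)+s*randn(d,Nk(k));
        if onehot
            Yk=-ones(K,Nk(k));     % one-hot: +1 at node k, -1 elsewhere
            Yk(k,:)=1;
        else                       % binary encoding
            dy=ceil(log2(K));      % number of bits, i.e., output nodes
            y=2*de2bi(k-1,dy)-1;   % bits of k-1 mapped from {0,1} to {-1,1} (Communications Toolbox)
            Yk=repmat(y',1,Nk(k));
        end
        X=[X Xk];
        Y=[Y Yk];
    end
    Visualize(X,Y)                 % plotting helper (not shown)
end
```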

Examples

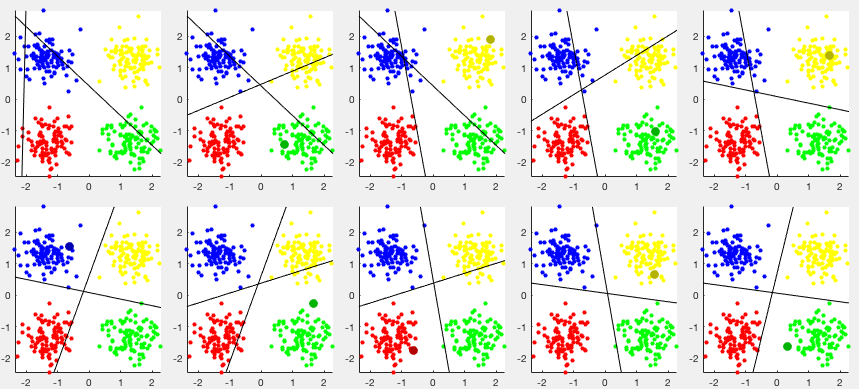

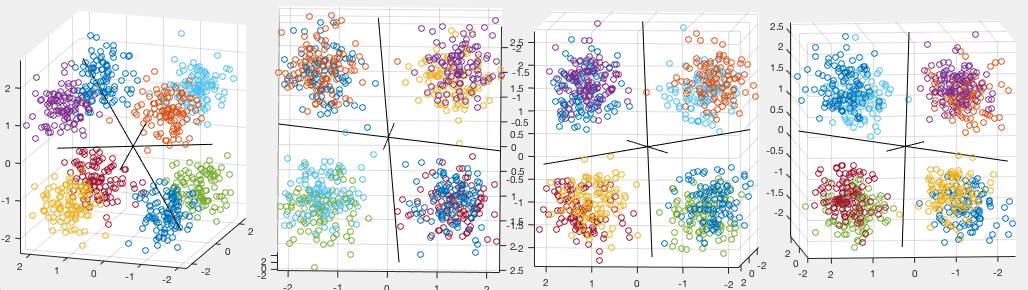

The figures show the classification results of a perceptron network with two output nodes, ${\bf y}=[y_1,\,y_2]$.

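The main constraint of the perceptron algorithm as a binary classifier, namely that the two classes must be linearly separable, can be removed by the kernel method, once the algorithm is modified in such a way that all data samples appear only in the form of inner products. Consider first the training process in which the weight vector is initialized to ${\bf w}={\bf 0}$ and updated by ${\bf w}\leftarrow{\bf w}+y_n{\bf x}_n$ (learning rate $\eta=1$) each time a sample ${\bf x}_n$ is misclassified. Then ${\bf w}$ is always a linear combination of the training samples:

$${\bf w}=\sum_{n=1}^N\alpha_n\,y_n\,{\bf x}_n \qquad (41)$$

where $\alpha_n$ is the number of times sample ${\bf x}_n$ labeled by $y_n$ is misclassified. Upon receiving a training sample ${\bf x}_j$ labeled by $y_j$, we have

$$f({\bf x}_j)={\bf w}^T{\bf x}_j=\sum_{n=1}^N\alpha_n\,y_n\,{\bf x}_n^T{\bf x}_j \quad\mbox{and}\quad \hat{y}_j=\mbox{sign}(f({\bf x}_j)) \qquad (42)$$

If ${\bf x}_j$ is misclassified, ${\bf w}$ is updated by the delta rule, which amounts to incrementing a single coefficient:

$$\mbox{If}\;\;y_j\,f({\bf x}_j)\le 0\;\;\mbox{then}\;\;{\bf w}\leftarrow{\bf w}+y_j{\bf x}_j,\;\;\mbox{i.e.,}\;\;\sum_{n=1}^N\alpha_n y_n{\bf x}_n\leftarrow\sum_{n=1}^N\alpha_n y_n{\bf x}_n+y_j{\bf x}_j,\;\;\mbox{i.e.,}\;\;\alpha_j\leftarrow\alpha_j+1 \qquad (43)$$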
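The following sketch checks this dual representation numerically for three hypothetical augmented samples and made-up counts $\alpha_n$. The value ${\bf w}^T{\bf x}$ computed from ${\bf w}=\sum_n\alpha_n y_n{\bf x}_n$ equals $\sum_n\alpha_n y_n{\bf x}_n^T{\bf x}$, which needs only inner products, and incrementing $\alpha_j$ has the same effect on ${\bf w}$ as ${\bf w}\leftarrow{\bf w}+y_j{\bf x}_j$.

```matlab
X = [1  1  1;
     2 -1  0;
     1  0 -2];                   % hypothetical augmented samples x_1..x_3 as columns
y = [1 -1 -1];                   % their labels
alpha = [2 1 3];                 % hypothetical misclassification counts alpha_n
w = X * (alpha .* y)';           % Eq. (41): w = sum_n alpha_n y_n x_n
x = [1; 0.5; -1];                % a new augmented input
f_primal = w' * x;               % uses the explicit weight vector
f_dual = sum(alpha .* y .* (X' * x)');   % uses only the inner products x_n'*x

% Dual form of update (43) for sample j, compared with the primal update
% (applied here without checking misclassification, only to compare the two forms)
j = 2;
alpha2 = alpha;  alpha2(j) = alpha2(j) + 1;
w2 = X * (alpha2 .* y)';
fprintf('f primal = %g, f dual = %g\n', f_primal, f_dual);
```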

Only the $N$ coefficients $\alpha_1,\cdots,\alpha_N$ need to be updated during the training process, while the weight vector ${\bf w}$ in Eq. (41) no longer needs to be explicitly calculated. Once the training process is complete, any unlabeled ${\bf x}$ can be classified into either of the two classes based on $f({\bf x})=\sum_{n=1}^N\alpha_n\,y_n\,{\bf x}_n^T{\bf x}$:

$$\mbox{If}\;\;f({\bf x})>0\;\;\mbox{then}\;\;{\bf x}\in C_+;\qquad \mbox{if}\;\;f({\bf x})<0\;\;\mbox{then}\;\;{\bf x}\in C_- \qquad (44)$$

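As all data samples appear in the form of inner products in both the training and testing phases, the kernel method can be applied to replace the inner product ${\bf x}_n^T{\bf x}$ by a kernel function $K({\bf x}_n,{\bf x})$, so that $f({\bf x})=\sum_{n=1}^N\alpha_n\,y_n\,K({\bf x}_n,{\bf x})$. The classifier is then linear in the feature space implicitly defined by the kernel, but in general nonlinear in the original space.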
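To illustrate the effect, the sketch below trains a single-output kernel perceptron on the four XOR points, which no hyperplane can separate. The Gaussian kernel $K({\bf x}_m,{\bf x}_n)=\exp(-||{\bf x}_m-{\bf x}_n||^2/2\sigma^2)$ with $\sigma=1$ is an assumption made for this example (the text does not fix a kernel), and the samples are visited in cyclic order to keep the run deterministic. No bias augmentation is used, as a constant extra component does not change this kernel.

```matlab
X = [-1 -1  1  1;
     -1  1 -1  1];               % XOR-type data: not linearly separable
y = [-1  1  1 -1];               % labels
N = size(X, 2);
sigma = 1;                       % Gaussian kernel width (an assumption)
D2 = sum(X.^2, 1)' + sum(X.^2, 1) - 2*(X'*X);   % squared distances ||x_m - x_n||^2
K = exp(-D2 / (2*sigma^2));      % kernel matrix, K(m,n) = K(x_m, x_n)
alpha = zeros(1, N);             % misclassification counts
for epoch = 1:100
    nerr = 0;
    for n = 1:N
        f = (alpha .* y) * K(:, n);   % f(x_n) = sum_m alpha_m y_m K(x_m, x_n)
        if y(n) * f <= 0              % misclassified: rule (43), alpha_n <- alpha_n + 1
            alpha(n) = alpha(n) + 1;
            nerr = nerr + 1;
        end
    end
    if nerr == 0                 % error-free pass
        break
    end
end
yhat = sign((alpha .* y) * K);   % outputs for all training samples
disp(yhat)
```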

Here is the Matlab code segment for the most essential parts of the kernel perceptron algorithm:

```matlab
[X Y]=Data;                        % get dataset
[d N]=size(X);                     % d: dimension, N: number of training samples
X=[ones(1,N); X];                  % augmented data
m=size(Y,1);                       % number of output nodes
A=zeros(m,N);                      % initialize alpha for all m output nodes and N samples
K=Kernel(X,X);                     % get kernel matrix of all N samples
nt=10^4;                           % maximum number of iterations
for it=1:nt
    n=randi([1 N]);                % random index
    y=Y(:,n);                      % label of the nth training sample
    yhat=sign((A.*Y)*K(:,n));      % yhat of the nth sample at all m output nodes
    delta=y-yhat;                  % error between desired and actual output
    for i=1:m                      % for each output node
        if delta(i)~=0             % if a misclassification
            A(i,n)=A(i,n)+1;       % update the corresponding alpha
        end
    end
    if ~mod(it,N)                  % test for every epoch
        er=test(X,Y,A);            % fraction of misclassified samples
        if er<10^(-9)
            break
        end
    end
end
```

```matlab
function er=test(X,Y,A)            % function for testing
    [d N]=size(X);                 % d: dimension, N: number of training samples
    Ne=0;                          % initialize number of misclassifications
    for n=1:N                      % for all N training samples
        x=X(:,n);                  % get the nth sample
        y=Y(:,n);                  % and its label
        f=(A.*Y)*Kernel(X,x);      % f(x) at all m output nodes
        yhat=sign(f);              % yhat=sign(f(x))
        if norm(y-yhat)~=0         % misclassification at some output nodes
            Ne=Ne+1;               % increase number of misclassifications
        end
    end
    er=Ne/N;                       % fraction of misclassified samples
end
```
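Both listings call a function `Kernel` that is not shown. A hypothetical stand-in, assuming a Gaussian (RBF) kernel with width $\sigma=1$, is sketched below as an anonymous function. `Kernel(A,B)` returns the matrix of kernel values between the columns of `A` and `B`, so `Kernel(X,X)` is $N\times N$ and `Kernel(X,x)` is $N\times 1$, matching how the listings use it.

```matlab
% Hypothetical Gaussian kernel: entry (i,j) is exp(-||a_i - b_j||^2 / (2*sigma^2))
sigma = 1;                       % kernel width (an assumption; tune for the data)
Kernel = @(A, B) exp(-(sum(A.^2, 1)' + sum(B.^2, 1) - 2*(A'*B)) / (2*sigma^2));

% Usage on three augmented 1-D samples
X = [1 1 1;
     0 1 2];
Kx = Kernel(X, X);               % 3 x 3 kernel matrix
kx = Kernel(X, X(:, 2));         % 3 x 1 column, as in the test function
disp(Kx)
```

An anonymous function defined in the workspace is not visible inside a separate function such as `test`; to be called there, the same expression would need to live in its own function file, e.g. `Kernel.m`.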

Example:

The kernel perceptron is applied to the dataset of handwritten

digits of

![$\displaystyle \left[\begin{array}{rrrrrrrrrr} 116 & 0 & 0 & 0 & 0 & 0 & 0 & 0 &... ... 1 & 3 & 93 & 4\\ 1 & 2 & 0 & 1 & 7 & 0 & 0 & 3 & 6 & 96\\ \end{array}\right]$](img231.svg) (45)

The constraint of the perceptron algorithm is the requirement that the classes be linearly separable, due to the fact that there is only one level of learning taking place, between the input and output layers. This constraint of linear separability will be removed when multi-layer networks are used, such as the back propagation network to be discussed in the next section, and more generally, the deep learning networks containing a large number of learning layers between the input and output layers.