
The Hopfield network is a supervised method based on the Hebbian learning rule. As a supervised method, the Hopfield network is trained on a set of $K$ patterns ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_K]$, where each pattern ${\bf x}_k=[x_1,\cdots,x_d]^T$ is a $d$-dimensional vector with binary components $x_i\in\{-1,1\}$.

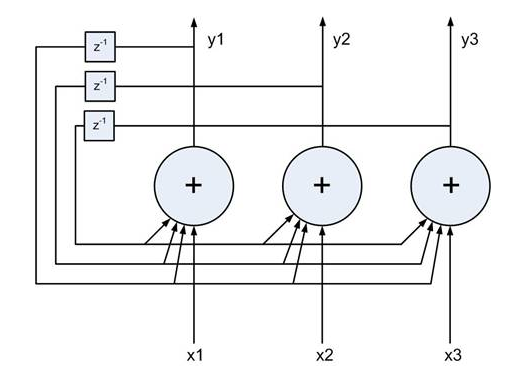

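Structurally, the Hopfield network is a recurrent network, in the sense that the outputs of its single layer of $d$ nodes are fed back as inputs to the same nodes, so the state of the network evolves iteratively.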

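The training process is essentially the same as Hebbian learning, except that here the two associated patterns in each pair are the same (self-association). The weight matrix of the network is obtained as the sum of the outer products of the $K$ patterns to be stored:

$$
{\bf W}_{d\times d}=\frac{1}{d}\sum_{k=1}^K {\bf x}_k {\bf x}_k^T
=\frac{1}{d}\sum_{k=1}^K \left[ \begin{array}{c} x_1^{(k)} \\ \vdots \\ x_d^{(k)} \end{array} \right]
[ x_1^{(k)}, \cdots, x_d^{(k)} ] \qquad (20)
$$

where $x_i^{(k)}$ denotes the $i$th component of the $k$th pattern ${\bf x}_k$. Element-wise, the weight of the connection between nodes $i$ and $j$ is

$$
w_{ij}=\frac{1}{d}\sum_{k=1}^K x_i^{(k)}x_j^{(k)} \qquad (21)
$$

The weights are symmetric, $w_{ij}=w_{ji}$. As is common for Hopfield networks, we also assume there are no self-connections, i.e., the diagonal is set to $w_{ii}=0$. The energy analysis below relies on both properties.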
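The outer-product rule of Eqs. (20) and (21) can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: the function name `train_hopfield`, the list-of-lists matrix representation, and the two example patterns are all hypothetical choices made here.

```python
# Minimal sketch of Hopfield training by outer products, Eqs. (20)-(21).
# Assumption: patterns are lists of +1/-1 values, all of the same length d.

def train_hopfield(patterns, zero_diagonal=True):
    """Return the d x d weight matrix W = (1/d) * sum_k x_k x_k^T."""
    d = len(patterns[0])
    W = [[0.0] * d for _ in range(d)]
    for x in patterns:                      # accumulate one outer product per pattern
        for i in range(d):
            for j in range(d):
                W[i][j] += x[i] * x[j] / d
    if zero_diagonal:                       # remove self-connections: w_ii = 0
        for i in range(d):
            W[i][i] = 0.0
    return W


# Two hypothetical (and mutually orthogonal) patterns with d = 4.
patterns = [[1, -1, 1, -1], [1, 1, -1, -1]]
W = train_hopfield(patterns)
for row in W:
    print(row)
```

The resulting matrix is symmetric with a zero diagonal, matching the assumptions stated above.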

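Once the weight matrix ${\bf W}$ has been obtained by training, the network can recall a pattern from an input ${\bf x}^{(0)}$ by iterating

$$
x_i^{(n+1)}=\mbox{sgn}\left(\sum_{j=1}^d w_{ij}x_j^{(n)}\right) \qquad (22)
$$

where $x_i^{(n)}$ and $x_i^{(n+1)}$ are the output of the $i$th node before and after the $n$th iteration, respectively. The nodes are updated one at a time (asynchronously), which is what the convergence argument below assumes; if all nodes are updated simultaneously, the state may instead oscillate between two configurations. As shown below, this iteration always converges, ideally to one of the $K$ pre-stored patterns.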
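A sketch of the asynchronous recall of Eq. (22) is given below. The helper names `sgn_keep` and `recall`, the sweep limit `max_sweeps`, and the tie-breaking rule (a node whose net input is exactly zero keeps its state) are assumptions introduced for illustration; the weight matrix is built inline from one hypothetical stored pattern so the block runs on its own.

```python
# Minimal sketch of asynchronous recall with Eq. (22).
# Assumptions: W is a symmetric list-of-lists with zero diagonal, and a node whose
# net input is exactly 0 keeps its current state (a common tie-breaking rule).

def sgn_keep(h, current):
    """Sign function; on a tie (h == 0) the node keeps its current state."""
    if h > 0:
        return 1
    if h < 0:
        return -1
    return current


def recall(W, x0, max_sweeps=100):
    """Update nodes one at a time until a full sweep changes nothing."""
    x = list(x0)
    d = len(x)
    for _ in range(max_sweeps):
        changed = False
        for i in range(d):                  # asynchronous: node i sees the latest states
            h = sum(W[i][j] * x[j] for j in range(d))
            new = sgn_keep(h, x[i])
            if new != x[i]:
                x[i] = new
                changed = True
        if not changed:                     # fixed point: no node wants to flip
            break
    return x


# Hypothetical example: store one pattern, then recall it from a corrupted copy.
stored = [1, -1, 1, 1, -1, -1]
d = len(stored)
W = [[0.0 if i == j else stored[i] * stored[j] / d for j in range(d)]
     for i in range(d)]
noisy = [-stored[0]] + stored[1:]           # first component flipped
print(recall(W, noisy))
```

With a single stored pattern the first sweep restores the flipped component and the second sweep confirms a fixed point, so the call returns `stored`.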

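We first define the energy of any two nodes $x_i$ and $x_j$ as

$$
E_{ij}=-w_{ij}x_ix_j \qquad (23)
$$

and the total energy $E$ of all $d$ nodes in the network as the sum of all pair-wise energies:

$$
E=\frac{1}{2}\sum_{i=1}^d\sum_{j=1}^d E_{ij}=-\frac{1}{2}\sum_{i=1}^d\sum_{j=1}^d w_{ij}x_ix_j=-\frac{1}{2}{\bf x}^T{\bf W}{\bf x} \qquad (24)
$$

where the factor $1/2$ compensates for each pair of nodes being counted twice, as $(i,j)$ and $(j,i)$, in the double sum.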
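Eqs. (23) and (24) translate directly into code. The sketch below uses hypothetical function names (`pair_energy`, `total_energy`) and an arbitrary 3-node weight matrix chosen only to show that different states can have very different energies.

```python
# Minimal sketch of the network energy, Eqs. (23)-(24).
# Assumption: W is a symmetric list-of-lists with zero diagonal, x a list of +1/-1.

def pair_energy(W, x, i, j):
    """E_ij = -w_ij * x_i * x_j (Eq. 23)."""
    return -W[i][j] * x[i] * x[j]


def total_energy(W, x):
    """E = (1/2) * sum_i sum_j E_ij = -(1/2) x^T W x (Eq. 24)."""
    d = len(x)
    return 0.5 * sum(pair_energy(W, x, i, j) for i in range(d) for j in range(d))


# Hypothetical 3-node weights, chosen for illustration only.
W = [[0.0, 1.0, -1.0],
     [1.0, 0.0, 1.0],
     [-1.0, 1.0, 0.0]]
print(total_energy(W, [1, 1, -1]))   # a lower-energy (more stable) state
print(total_energy(W, [1, -1, 1]))   # every pair is "unstable": higher energy
```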

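The interaction between these two nodes is summarized below:

| case | $x_i$ | $x_j$ | $E_{ij}$ when $w_{ij}>0$ | $E_{ij}$ when $w_{ij}<0$ |
|---|---|---|---|---|
| 1 | $-1$ | $-1$ | $<0$ | $>0$ |
| 2 | $-1$ | $1$ | $>0$ | $<0$ |
| 3 | $1$ | $-1$ | $>0$ | $<0$ |
| 4 | $1$ | $1$ | $<0$ | $>0$ |

(25)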

Consider the contribution $w_{ij}x_j$ of node $j$ to the net input of node $i$ in Eq. (22):

- $E_{ij}<0$:
  - $w_{ij}>0$ (in cases 1 and 4): $w_{ij}x_j$ has the same sign as $x_i$ and tends to keep $x_i$ in the same state, $x_i^{(n+1)}=x_i^{(n)}$, in the iteration.
  - $w_{ij}<0$ (in cases 2 and 3): $w_{ij}x_j$ again has the same sign as $x_i$ and tends to keep $x_i$ in the same state, $x_i^{(n+1)}=x_i^{(n)}$.
- $E_{ij}>0$:
  - $w_{ij}<0$ (in cases 1 and 4): $w_{ij}x_j$ has the opposite sign of $x_i$ and tends to reverse $x_i$ from its previous state $x_i^{(n)}$ to $x_i^{(n+1)}=-x_i^{(n)}$.
  - $w_{ij}>0$ (in cases 2 and 3): $w_{ij}x_j$ again has the opposite sign of $x_i$ and tends to reverse $x_i$ from its previous state $x_i^{(n)}$ to $x_i^{(n+1)}=-x_i^{(n)}$.

Low energy $E_{ij}<0$ corresponds to a stable interaction between $x_i$ and $x_j$, i.e., they tend to remain unchanged, and high energy $E_{ij}>0$ corresponds to an unstable interaction, i.e., they tend to change their states. As a result, a low total energy $E$ corresponds to a more stable condition of the network, while a high $E$ corresponds to a less stable condition.
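The four cases of table (25) and the keep/reverse rule can be enumerated mechanically. The sketch below uses arbitrary weights $\pm 0.5$ (only the sign matters) and checks that $E_{ij}<0$ exactly when the contribution $w_{ij}x_j$ agrees in sign with $x_i$; this follows from $w_{ij}x_jx_i=-E_{ij}$.

```python
# Sketch enumerating the four cases of table (25) for a positive and a negative
# weight. The magnitudes +0.5 / -0.5 are arbitrary; only their signs matter.

cases = {1: (-1, -1), 2: (-1, 1), 3: (1, -1), 4: (1, 1)}

rows = []
for w in (0.5, -0.5):
    for case, (xi, xj) in cases.items():
        e_ij = -w * xi * xj                 # Eq. (23)
        push = w * xj                       # node j's contribution to node i's net input
        effect = "keep" if push * xi > 0 else "reverse"
        rows.append((w, case, e_ij, effect))
        print(f"w={w:+.1f} case {case}: x_i={xi:+d} x_j={xj:+d} "
              f"E_ij={e_ij:+.1f} -> {effect}")
```

For $w_{ij}>0$ the "keep" cases are 1 and 4; for $w_{ij}<0$ they are 2 and 3, as listed above.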

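We further show that the total energy $E$ always decreases whenever the state of any node changes. Assume node $k$ has just changed its state, $x_k^{(n+1)}=-x_k^{(n)}$, while all other nodes remain the same, $x_i^{(n+1)}=x_i^{(n)}$ for $i\neq k$. Using the symmetry $w_{ik}=w_{ki}$ and $w_{kk}=0$, the energy before $x_k$ changes state is

$$
\begin{aligned}
E^{(n)} &= -\frac{1}{2}\sum_i\sum_j w_{ij}x_i^{(n)}x_j^{(n)} \\
&= -\frac{1}{2}\left[ \sum_{i\neq k}\sum_{j\neq k}w_{ij}x_i^{(n)}x_j^{(n)}
+\sum_i w_{ik}x_i^{(n)}x_k^{(n)}+\sum_j w_{kj}x_k^{(n)}x_j^{(n)} \right] \\
&= -\frac{1}{2}\sum_{i\neq k}\sum_{j\neq k}w_{ij}x_i^{(n)}x_j^{(n)}-\sum_i w_{ik}x_i^{(n)}x_k^{(n)}
\end{aligned}
$$

and the energy after $x_k$ changes state is

$$
E^{(n+1)}=-\frac{1}{2}\sum_{i\neq k}\sum_{j\neq k}w_{ij}x_i^{(n)}x_j^{(n)}-\sum_i w_{ik}x_i^{(n)}x_k^{(n+1)} \qquad (26)
$$

so the change in energy is

$$
\Delta E=E^{(n+1)}-E^{(n)}=-\left(x_k^{(n+1)}-x_k^{(n)}\right)\sum_i w_{ik}x_i^{(n)}=-\Delta x_k\sum_i w_{ik}x_i^{(n)} \qquad (27)
$$

where $\Delta x_k=x_k^{(n+1)}-x_k^{(n)}$ and $\sum_i w_{ik}x_i^{(n)}=\sum_j w_{kj}x_j^{(n)}$ is exactly the net input of node $k$ in Eq. (22).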

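Assuming a node whose net input is exactly zero keeps its state, there are only two ways $x_k$ can change:

- If $x_k^{(n)}=-1$ but $x_k^{(n+1)}=1$, then the net input satisfies $\sum_i w_{ik}x_i^{(n)}>0$ and $\Delta x_k=2$, so we have $\Delta E<0$.
- If $x_k^{(n)}=1$ but $x_k^{(n+1)}=-1$, then the net input satisfies $\sum_i w_{ik}x_i^{(n)}<0$ and $\Delta x_k=-2$, so we have $\Delta E<0$.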
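Eq. (27) can be checked numerically on a small network: flip each node in turn, compute the energy difference directly from Eq. (24), and compare it with $-\Delta x_k\sum_i w_{ik}x_i$. The random patterns, sizes, and seed below are arbitrary illustrative choices.

```python
# Numerical check of Eq. (27): flipping a single node k changes the energy by
# -(x_k_new - x_k_old) * sum_i w_ik x_i. Assumptions: symmetric W with zero
# diagonal, built from random +1/-1 patterns purely for illustration.
import random


def energy(W, x):
    d = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(d) for j in range(d))


random.seed(0)
d, K = 8, 3
pats = [[random.choice((-1, 1)) for _ in range(d)] for _ in range(K)]
W = [[0.0 if i == j else sum(p[i] * p[j] for p in pats) / d for j in range(d)]
     for i in range(d)]

x = [random.choice((-1, 1)) for _ in range(d)]
checks = []
for k in range(d):
    h = sum(W[i][k] * x[i] for i in range(d))   # net input of node k
    y = list(x)
    y[k] = -x[k]                                 # flip node k only
    dE_direct = energy(W, y) - energy(W, x)
    dE_formula = -(y[k] - x[k]) * h              # Eq. (27)
    checks.append(abs(dE_direct - dE_formula) < 1e-12)
    if h * x[k] < 0:                             # a flip Eq. (22) would actually make
        assert dE_direct < 0                     # ... must lower the energy
print(all(checks))
```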

Since $\Delta E<0$ holds for every state change throughout the iteration, and $E$ is bounded from below because the network has only a finite number ($2^d$) of possible states, the state cannot keep changing forever. We conclude that $E$ will eventually reach one of the minima of the energy landscape, a state in which no single-node change can lower $E$ any further, i.e., the iteration will always converge.
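The convergence argument can be observed directly by recording $E$ after every state change during asynchronous updates. In the sketch below the helper `run`, the random patterns, the sizes, and the seed are hypothetical; ties (zero net input) keep the current state, as assumed above.

```python
# Sketch: asynchronous updates (Eq. 22) from a random start, recording the energy
# after every state change. The trace should be strictly decreasing and the loop
# must end at a fixed point. Patterns, sizes, and seed are arbitrary choices.
import random


def energy(W, x):
    d = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(d) for j in range(d))


def run(W, x):
    x = list(x)
    trace = [energy(W, x)]
    changed = True
    while changed:                # terminates: E drops at each flip, finitely many states
        changed = False
        for i in range(len(x)):
            h = sum(W[i][j] * x[j] for j in range(len(x)))
            if h * x[i] < 0:      # net input disagrees with x_i: flip (ties keep state)
                x[i] = -x[i]
                trace.append(energy(W, x))
                changed = True
    return x, trace


random.seed(1)
d, K = 16, 2
pats = [[random.choice((-1, 1)) for _ in range(d)] for _ in range(K)]
W = [[0.0 if i == j else sum(p[i] * p[j] for p in pats) / d for j in range(d)]
     for i in range(d)]
final, trace = run(W, [random.choice((-1, 1)) for _ in range(d)])
print(len(trace) - 1, "state changes; energy trace:", trace)
```

The final state need not be a stored pattern; it is only guaranteed to be a state that no single-node flip can improve.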

We further show that each one of the $K$ stored patterns corresponds to one of the minima of the energy function. Substituting Eq. (21), with $w_{ii}=0$, into Eq. (24), the energy of the network in state ${\bf x}$ is

$$
E({\bf x})=-\frac{1}{2}\sum_{i\neq j}w_{ij}x_ix_j
=-\frac{1}{2d}\sum_{k=1}^K\sum_i\sum_j x_i^{(k)}x_j^{(k)}x_ix_j+\frac{K}{2}
=-\frac{1}{2d}\sum_{k=1}^K\left({\bf x}_k^T{\bf x}\right)^2+\frac{K}{2} \qquad (28)
$$

where the constant $K/2$ comes from the zeroed diagonal and does not affect where the minima are. If ${\bf x}$ is different from (ideally orthogonal to) all of the stored patterns, all $K$ terms of the summation will be small (ideally zero). But if ${\bf x}$ is the same as one of the stored patterns, say ${\bf x}={\bf x}_k$, their inner product reaches its maximum ${\bf x}_k^T{\bf x}_k=d$, driving the total energy down to one of its minima. In other words, the patterns stored in the network correspond to local minima of the energy function, i.e., these patterns become attractors.
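Eq. (28) and the attractor property can be verified exhaustively for a tiny network. The sketch below assumes three mutually orthogonal patterns (rows of an $8\times 8$ Hadamard matrix, the "ideal" case mentioned above); the helper names `energy_eq28` and `is_fixed_point` are hypothetical.

```python
# Check of Eq. (28) with zero diagonal: E(x) = -(1/(2d)) * sum_k (x_k^T x)^2 + K/2,
# over all 2^d states, plus a check that each stored pattern is a fixed point of
# Eq. (22). Assumption: three orthogonal patterns (rows of a Hadamard matrix).
from itertools import product


def energy(W, x):
    d = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(d) for j in range(d))


def energy_eq28(pats, x):
    d, K = len(x), len(pats)
    return -sum(sum(a * b for a, b in zip(p, x)) ** 2 for p in pats) / (2 * d) + K / 2


def is_fixed_point(W, x):
    d = len(x)
    return all(sum(W[i][j] * x[j] for j in range(d)) * x[i] > 0 for i in range(d))


pats = [[1, 1, 1, 1, 1, 1, 1, 1],
        [1, -1, 1, -1, 1, -1, 1, -1],
        [1, 1, -1, -1, 1, 1, -1, -1]]
d = len(pats[0])
W = [[0.0 if i == j else sum(p[i] * p[j] for p in pats) / d for j in range(d)]
     for i in range(d)]

# Eq. (28) agrees with Eq. (24) on every one of the 2^d states.
assert all(abs(energy(W, list(x)) - energy_eq28(pats, list(x))) < 1e-12
           for x in product((-1, 1), repeat=d))

print([is_fixed_point(W, p) for p in pats])
print(min(energy(W, list(x)) for x in product((-1, 1), repeat=d)),
      [energy(W, p) for p in pats])
```

For these orthogonal patterns each stored pattern is a fixed point and attains the lowest energy over all $2^8$ states.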

Note that it is possible to have other local minima, called spurious states, which do not represent any of the stored patterns, i.e., the associative memory is not perfect.
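Two well-known kinds of spurious state can be exhibited with the same hypothetical orthogonal patterns used to check Eq. (28). First, the negation $-{\bf x}_k$ of any stored pattern is also a fixed point, because ${\bf W}(-{\bf x})=-{\bf W}{\bf x}$. Second, a "mixture" state, here the component-wise sign of ${\bf x}_1+{\bf x}_2+{\bf x}_3$, can be a fixed point that matches none of the stored patterns. The sketch below only illustrates these cases for this particular example; it makes no general claim about when mixture states are stable.

```python
# Sketch of spurious states for three hypothetical orthogonal patterns:
# (1) negated patterns are fixed points, since W(-x) = -(W x);
# (2) the mixture sgn(x_1 + x_2 + x_3) is a fixed point that is not stored.

def is_fixed_point(W, x):
    d = len(x)
    return all(sum(W[i][j] * x[j] for j in range(d)) * x[i] > 0 for i in range(d))


pats = [[1, 1, 1, 1, 1, 1, 1, 1],
        [1, -1, 1, -1, 1, -1, 1, -1],
        [1, 1, -1, -1, 1, 1, -1, -1]]
d = len(pats[0])
W = [[0.0 if i == j else sum(p[i] * p[j] for p in pats) / d for j in range(d)]
     for i in range(d)]

negated = [[-v for v in p] for p in pats]
# The sum of three +1/-1 values is odd, so it is never zero and the sign is defined.
mixture = [1 if a + b + c > 0 else -1 for a, b, c in zip(*pats)]

print(all(is_fixed_point(W, n) for n in negated))
print(mixture, mixture in pats, is_fixed_point(W, mixture))
```

Started near `mixture`, the recall iteration can therefore settle in a state that represents none of the stored patterns.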