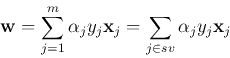

For a decision hyperplane $\mathbf{x}^T\mathbf{w}+b=0$ to separate the two classes $P=\{(\mathbf{x}_i,1)\}$ and $N=\{(\mathbf{x}_i,-1)\}$, it has to satisfy

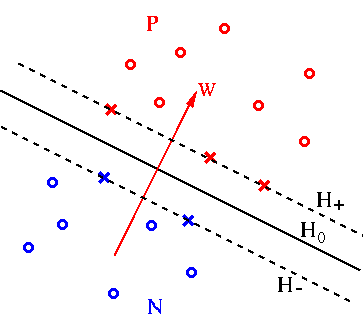

The optimal plane should be in the middle of the two classes, so that the distance from the plane to the closest point on either side is the same. We define two additional planes $H_+$ and $H_-$ that are parallel to the decision plane $H_0$ and go through the point closest to the plane on either side:

$$H_+:\ \mathbf{x}^T\mathbf{w}+b=1,\qquad H_-:\ \mathbf{x}^T\mathbf{w}+b=-1$$

Moreover, the distances from the origin to the three parallel planes ![]() ,

,

![]() and

and ![]() are, respectively,

are, respectively,

![]() ,

,

![]() ,

and

,

and

![]() , and the distance between planes

, and the distance between planes ![]() and

and ![]() is

is

![]() . Our goal is to find the optimal decision hyperplane in

terms of

. Our goal is to find the optimal decision hyperplane in

terms of ![]() and

and ![]() with maximal distance between

with maximal distance between ![]() and

and ![]() ,

or, equivalantly, minimal

,

or, equivalantly, minimal

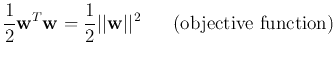

![]() . Now the

classification problem can be formulated as:

. Now the

classification problem can be formulated as:

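$$\min_{\mathbf{w},\,b}\ \frac{1}{2}\mathbf{w}^T\mathbf{w}\qquad\text{subject to}\qquad y_i(\mathbf{x}_i^T\mathbf{w}+b)\ge 1\quad\text{for all } i$$

(The factor $1/2$ is a convenience and does not change the minimizer.)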

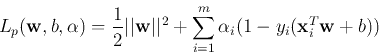

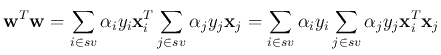
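The formulation above can be tried numerically. The following is a minimal sketch, not the method used in these notes: it assumes the standard hard-margin form (minimize $\frac{1}{2}\mathbf{w}^T\mathbf{w}$ subject to $y_i(\mathbf{x}_i^T\mathbf{w}+b)\ge 1$), uses a hypothetical four-point data set, and hands the problem to SciPy's general-purpose SLSQP solver rather than a dedicated QP solver.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical toy data (an assumption for illustration):
# two positive and two negative samples in 2-D.
X = np.array([[1.0, 1.0], [2.0, 3.0], [-1.0, -1.0], [-3.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])

def objective(p):
    # p packs the unknowns as [w_1, w_2, b]; only w enters the objective.
    w = p[:2]
    return 0.5 * w @ w

def margin_constraints(p):
    # SLSQP treats "ineq" constraints as fun(p) >= 0,
    # so this encodes y_i (x_i^T w + b) - 1 >= 0 for every sample.
    w, b = p[:2], p[2]
    return y * (X @ w + b) - 1.0

result = minimize(
    objective,
    x0=np.zeros(3),
    method="SLSQP",
    constraints=[{"type": "ineq", "fun": margin_constraints}],
)

w_opt, b_opt = result.x[:2], result.x[2]

# Distance between the two margin planes, 2 / ||w||.
margin_width = 2.0 / np.linalg.norm(w_opt)

print("w =", w_opt, "b =", b_opt, "margin width =", margin_width)
```

For this data the closest pair of opposite-class points is $(1,1)$ and $(-1,-1)$, so the margin width should come out close to their separation, $2\sqrt{2}$.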

Since the objective function is quadratic and the constraints are linear, this constrained optimization problem is called a quadratic programming (QP) problem. (If the objective function were linear instead, it would be a linear programming (LP) problem.) This QP problem can be solved by the method of Lagrange multipliers, minimizing the following Lagrangian with respect to $\mathbf{w}$ and $b$, where $\alpha_i\ge 0$ are the Lagrange multipliers:

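$$L_p(\mathbf{w},b,\boldsymbol{\alpha})=\frac{1}{2}\|\mathbf{w}\|^2-\sum_i\alpha_i\left[y_i(\mathbf{x}_i^T\mathbf{w}+b)-1\right]$$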

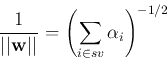

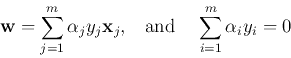

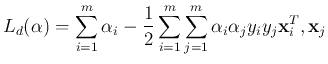

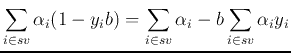

Solving this dual problem (an easier problem than the primal one), we get $\alpha_i$, from which $\mathbf{w}$ of the optimal plane can be found.
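As an illustration of this step, here is a minimal sketch under stated assumptions. It assumes the standard hard-margin dual (maximize $\sum_i\alpha_i-\frac{1}{2}\sum_{i,j}\alpha_i\alpha_j y_i y_j\mathbf{x}_i^T\mathbf{x}_j$ subject to $\alpha_i\ge 0$ and $\sum_i\alpha_i y_i=0$) and the standard recovery $\mathbf{w}=\sum_i\alpha_i y_i\mathbf{x}_i$. The data set is hypothetical and SciPy's SLSQP is a stand-in for a proper QP solver.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical toy data (an assumption for illustration).
X = np.array([[1.0, 1.0], [2.0, 3.0], [-1.0, -1.0], [-3.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])

# Q[i, j] = y_i y_j x_i^T x_j
Z = y[:, None] * X
Q = Z @ Z.T

def neg_dual(a):
    # Negated dual objective, so that minimizing it maximizes the dual.
    return 0.5 * a @ Q @ a - a.sum()

def neg_dual_grad(a):
    return Q @ a - np.ones_like(a)

result = minimize(
    neg_dual,
    x0=np.zeros(len(y)),
    jac=neg_dual_grad,
    method="SLSQP",
    bounds=[(0.0, None)] * len(y),                       # alpha_i >= 0
    constraints=[{"type": "eq", "fun": lambda a: a @ y}],  # sum_i alpha_i y_i = 0
    options={"ftol": 1e-12},
)

alpha = result.x

# Recover the normal vector of the optimal plane from the multipliers.
w = (alpha * y) @ X

print("alpha =", np.round(alpha, 4))
print("w =", np.round(w, 4))
```

For this data only the first and third samples should end up with multipliers noticeably above zero, which is what makes the dual attractive: the solution is expressed through a few samples rather than through $\mathbf{w}$ directly.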

Those points $\mathbf{x}_i$ on either of the two planes $H_+$ and $H_-$ (for which the equality $y_i(\mathbf{x}_i^T\mathbf{w}+b)=1$ holds) are called support vectors, and they correspond to positive Lagrange multipliers $\alpha_i>0$. The trained plane depends only on the support vectors; all other samples, which lie away from the planes $H_+$ and $H_-$, have $\alpha_i=0$ and do not affect the solution.
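A small sketch of how support vectors can be picked out once a plane is known. The data set is hypothetical, and the values of `w` and `b` are assumed to be the optimal plane for it (they satisfy every constraint $y_i(\mathbf{x}_i^T\mathbf{w}+b)\ge 1$, with equality for one sample on each side).

```python
import numpy as np

# Hypothetical toy data (an assumption for illustration).
X = np.array([[1.0, 1.0], [2.0, 3.0], [-1.0, -1.0], [-3.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])

# Assumed optimal plane for this data set.
w = np.array([0.5, 0.5])
b = 0.0

# y_i (x_i^T w + b): equals 1 on the margin planes, exceeds 1 farther away.
functional_margin = y * (X @ w + b)

# Support vectors are the samples for which the equality holds.
is_support = np.isclose(functional_margin, 1.0)
support_indices = np.flatnonzero(is_support)

print("functional margins:", functional_margin)
print("support vector indices:", support_indices)
```

Samples whose value is strictly greater than 1 could be moved, or removed, without changing the plane, as long as they stay outside the margin.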

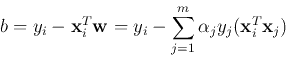

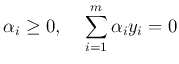

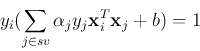

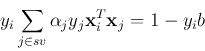

For a support vector $\mathbf{x}_i$ (on the $H_-$ or $H_+$ plane), the constraining condition is

$$y_i(\mathbf{x}_i^T\mathbf{w}+b)=1$$
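The equality $y_i(\mathbf{x}_i^T\mathbf{w}+b)=1$ for a support vector can be used to recover the offset: since $y_i=\pm 1$, multiplying both sides by $y_i$ gives $b=y_i-\mathbf{x}_i^T\mathbf{w}$. The sketch below assumes a hypothetical data set, an assumed set of multipliers `alpha` for it, and the standard relation $\mathbf{w}=\sum_i\alpha_i y_i\mathbf{x}_i$. Averaging over all support vectors is a common numerical safeguard, not something stated in these notes.

```python
import numpy as np

# Hypothetical toy data (an assumption for illustration).
X = np.array([[1.0, 1.0], [2.0, 3.0], [-1.0, -1.0], [-3.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])

# Assumed Lagrange multipliers for this data set;
# only the first and third samples are support vectors.
alpha = np.array([0.25, 0.0, 0.25, 0.0])

# Normal vector from the multipliers: w = sum_i alpha_i y_i x_i.
w = (alpha * y) @ X

# Support vectors are the samples with positive multipliers.
is_support = alpha > 1e-8

# Each support vector gives one estimate b = y_i - x_i^T w.
b_estimates = y[is_support] - X[is_support] @ w

# Average the estimates to reduce the effect of round-off.
b = b_estimates.mean()

print("w =", w, "b =", b)
```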