
An $M\times N$ matrix ${\bf A}$ can be considered as a particular kind of vector ${\bf a}\in\mathbb{C}^{MN}$. Its norm is any function that maps ${\bf A}$ to a real number $\|{\bf A}\|$ and satisfies the following required properties:

- Positivity:

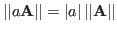

- Homogeneity:

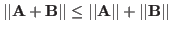

- Triangle inequality:

In addition to the three required properties for matrix norm, some of

them also satisfy these additional properties not required of all matrix

norms:

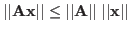

- Subordinance:

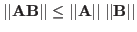

- Submultiplicativity:

We now consider some commonly used matrix norms.

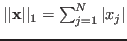

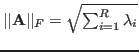

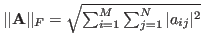

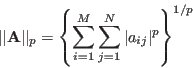

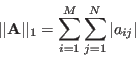

- Element-wise norms

If we treat the  elements of

elements of  are the elements of

an

are the elements of

an  -dimensional vector, then the p-norm of this vector can be used as

the p-norm of

-dimensional vector, then the p-norm of this vector can be used as

the p-norm of  :

:

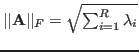

Specially, we consider the following three cases for

.

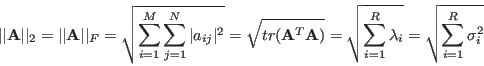

The Frobenius norm of a unitary (orthogonal if real) matrix

.

The Frobenius norm of a unitary (orthogonal if real) matrix  satisfying

satisfying

or

or

is:

is:

The Frobenius norm is the only one out of the above three matrix norms

that is unitary invariant, i.e., it is conserved or invariant

under a unitary transformation (such as a rotation)

:

:

where we have used the property of the trace

.

.

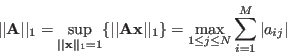
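The element-wise norms and the trace identity above can be checked numerically. The sketch below is a minimal pure-Python illustration, not the author's code. It assumes real matrices stored as lists of rows, and the helper names `elementwise_norms`, `matmul`, `transpose` and `frobenius_via_trace` are hypothetical. It rotates a small example matrix and compares the three element-wise norms before and after the rotation.

```python
import math

def elementwise_norms(A):
    """Element-wise 1-norm, Frobenius norm and maximum norm of a real matrix A."""
    entries = [abs(a) for row in A for a in row]
    return sum(entries), math.sqrt(sum(a * a for a in entries)), max(entries)

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def frobenius_via_trace(A):
    """||A||_F = sqrt(tr(A^T A)) for a real matrix A."""
    AtA = matmul(transpose(A), A)
    return math.sqrt(sum(AtA[i][i] for i in range(len(AtA))))

A = [[1.0, -2.0], [3.0, 4.0]]
theta = 0.7  # an arbitrary rotation angle
R = [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]

print(elementwise_norms(A))             # 1-norm 10, Frobenius sqrt(30), max 4
print(frobenius_via_trace(A))           # agrees with sqrt(30)
print(elementwise_norms(matmul(R, A)))  # Frobenius unchanged; 1-norm and max change
print(elementwise_norms(R)[1])          # sqrt(2) = sqrt(N) for this 2x2 orthogonal R
```

Only the middle entry of the rotated triple matches the unrotated one. This is the unitary invariance that singles out the Frobenius norm among the three.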

- Induced or operator norms

The induced (or operator) norm $\|{\bf A}\|$ of a matrix ${\bf A}$ is based on any vector norm $\|{\bf x}\|$:

$$\|{\bf A}\|=\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}\|}{\|{\bf x}\|}=\sup_{\|{\bf x}\|=1}\|{\bf Ax}\|$$

($\|{\bf A}\|$ is subordinate to the vector norm $\|{\bf x}\|$.) Here $\sup$ denotes the supremum of $\|{\bf Ax}\|/\|{\bf x}\|$. The supremum equals the maximum whenever the maximum is attained. This is the case for a continuous function on a closed and bounded set, such as the unit sphere $\|{\bf x}\|=1$ in a finite-dimensional space. Otherwise, the maximum does not exist and the supremum is the least upper bound of the function.

Note that the norm of the identity matrix is

$$\|{\bf I}\|=\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ix}\|}{\|{\bf x}\|}=1$$
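To make the supremum in this definition concrete, the following hypothetical pure-Python sketch approximates an induced p-norm of a real $2\times 2$ matrix. It scans unit directions ${\bf x}=[\cos t,\sin t]^T$ on a uniform grid. Because it only samples, it returns a lower bound on the supremum, which is exact when a maximizer lies on the grid. The function names and the grid size are illustrative assumptions.

```python
import math

def vec_pnorm(x, p):
    """Vector p-norm for p >= 1, with p = math.inf giving the maximum norm."""
    if p == math.inf:
        return max(abs(v) for v in x)
    return sum(abs(v) ** p for v in x) ** (1.0 / p)

def induced_norm_2d(A, p, samples=3600):
    """Lower-bound estimate of sup ||Ax||_p / ||x||_p for a real 2x2 matrix A,
    taken over the sampled directions x = (cos t, sin t)."""
    best = 0.0
    for k in range(samples):
        t = 2.0 * math.pi * k / samples
        x = (math.cos(t), math.sin(t))
        Ax = (A[0][0] * x[0] + A[0][1] * x[1], A[1][0] * x[0] + A[1][1] * x[1])
        best = max(best, vec_pnorm(Ax, p) / vec_pnorm(x, p))
    return best

A = [[1.0, -2.0], [3.0, 4.0]]
I = [[1.0, 0.0], [0.0, 1.0]]
for p in (1, 2, math.inf):
    print(p, induced_norm_2d(I, p), induced_norm_2d(A, p))
```

For the identity, every p gives 1. For this ${\bf A}$, the estimates approach 6 ($p=1$), about 5.117 ($p=2$) and 7 ($p=\infty$). A grid search like this scales poorly beyond two dimensions, so it only serves to illustrate the definition.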

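We now prove that the matrix norm defined above satisfies all the properties given above. (Recall that the vector norm satisfies $\|{\bf x}\|\ge 0$, $\|c{\bf x}\|=|c|\,\|{\bf x}\|$, and $\|{\bf x}+{\bf y}\|\le\|{\bf x}\|+\|{\bf y}\|$.)

- Positivity: $\|{\bf A}\|\ge 0$ because it is the supremum of non-negative quantities. Also, $\|{\bf A}\|=0$ if and only if ${\bf Ax}={\bf 0}$ for all ${\bf x}$, i.e., ${\bf A}={\bf 0}$; this is trivially obvious.

- Homogeneity:

$$\|c{\bf A}\|=\sup_{{\bf x}\ne{\bf 0}}\frac{\|c{\bf Ax}\|}{\|{\bf x}\|}=|c|\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}\|}{\|{\bf x}\|}=|c|\,\|{\bf A}\|$$

- Triangle inequality:

$$\|{\bf A}+{\bf B}\|=\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}+{\bf Bx}\|}{\|{\bf x}\|}\le\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}\|+\|{\bf Bx}\|}{\|{\bf x}\|}\le\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}\|}{\|{\bf x}\|}+\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Bx}\|}{\|{\bf x}\|}=\|{\bf A}\|+\|{\bf B}\|$$

- Subordinance: for any ${\bf y}\ne{\bf 0}$,

$$\|{\bf A}\|=\sup_{{\bf x}\ne{\bf 0}}\frac{\|{\bf Ax}\|}{\|{\bf x}\|}\ge\frac{\|{\bf Ay}\|}{\|{\bf y}\|},\qquad\mbox{i.e.,}\qquad\|{\bf Ay}\|\le\|{\bf A}\|\,\|{\bf y}\|$$

and the inequality holds trivially for ${\bf y}={\bf 0}$.

- Submultiplicativity: As ${\bf y}$ is arbitrary, we let ${\bf y}={\bf Bx}$ and get

$$\|{\bf ABx}\|\le\|{\bf A}\|\,\|{\bf Bx}\|\le\|{\bf A}\|\,\|{\bf B}\|\,\|{\bf x}\|$$

Dividing by $\|{\bf x}\|$ and taking the supremum over ${\bf x}\ne{\bf 0}$, we get $\|{\bf AB}\|\le\|{\bf A}\|\,\|{\bf B}\|$.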
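The properties proved above can be spot-checked numerically. This hypothetical sketch assumes real square matrices and uses the induced $\infty$-norm in its maximum-absolute-row-sum form, which is derived later in this section. The random matrices, seed and tolerances are arbitrary choices, and a passing check illustrates the properties rather than proving them.

```python
import random

def norm_inf(A):
    """Induced infinity-norm of a real matrix: maximum absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)

def vec_norm_inf(x):
    return max(abs(v) for v in x)

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def check_properties(trials=1000, n=4, seed=0):
    rng = random.Random(seed)
    eps = 1e-12  # slack for floating-point rounding
    for _ in range(trials):
        A = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
        B = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
        x = [rng.uniform(-1, 1) for _ in range(n)]
        c = rng.uniform(-3, 3)
        cA = [[c * a for a in row] for row in A]
        ApB = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]
        assert abs(norm_inf(cA) - abs(c) * norm_inf(A)) < 1e-9                   # homogeneity
        assert norm_inf(ApB) <= norm_inf(A) + norm_inf(B) + eps                  # triangle inequality
        assert vec_norm_inf(matvec(A, x)) <= norm_inf(A) * vec_norm_inf(x) + eps  # subordinance
        assert norm_inf(matmul(A, B)) <= norm_inf(A) * norm_inf(B) + eps         # submultiplicativity
    return True

print(check_properties())  # True when every check passes
```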

Specifically, the matrix p-norm  can be based on the vector

p-norm

can be based on the vector

p-norm  , as defined in the following for

, as defined in the following for

.

.

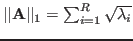

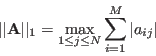

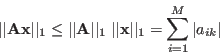

- When

,

,  is maximum absolute column sum:

is maximum absolute column sum:

In Matlab this norm is implemented by the function norm(A,1).

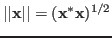

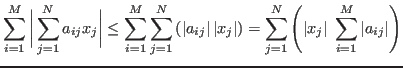

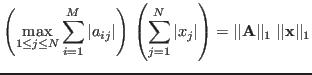

Proof: The 1-norm of vector  is

is

, we have

, we have

Assuming the kth column of  has the maximum absolute sum

and

has the maximum absolute sum

and  is normalized (as required in the definition) with

is normalized (as required in the definition) with

, we have

, we have

and

Now we show that the equality of the above can be achieved, i.e.,

is maximized, if we choose

is maximized, if we choose

![${\bf x}={\bf e}_k=[0,\cdots,1,\cdots,0]^T$](img606.png) , the kth unit

vector (normalized):

, the kth unit

vector (normalized):

i.e.,

is the vector among all other normalized

vectors that maximizes

is the vector among all other normalized

vectors that maximizes  as required in the definition,

and the resulting maximum

as required in the definition,

and the resulting maximum  is indeed

is indeed

. We therefore have

. We therefore have

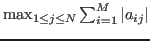

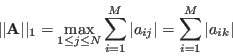

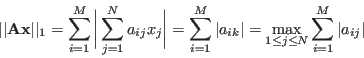

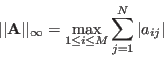

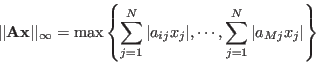

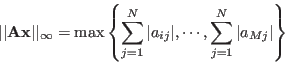

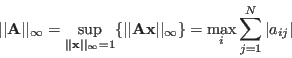

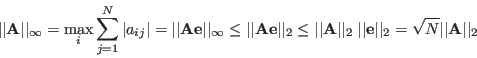
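The column-sum formula and its maximizer ${\bf e}_k$ can be sketched in pure Python as follows. It assumes real matrices stored as lists of rows, and the names are hypothetical; in Matlab one would simply call norm(A,1). The sketch mirrors the proof. It finds the column $k$ with the largest absolute sum, then confirms that $\|{\bf Ae}_k\|_1$ equals that sum.

```python
def norm_1(A):
    """Induced 1-norm of a real M x N matrix: maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))

def maximizer_1(A):
    """The unit vector e_k, where column k has the largest absolute sum."""
    N = len(A[0])
    sums = [sum(abs(row[j]) for row in A) for j in range(N)]
    k = sums.index(max(sums))
    return [1.0 if j == k else 0.0 for j in range(N)]

def vec_norm_1(x):
    return sum(abs(v) for v in x)

A = [[1.0, -2.0, 0.5], [3.0, 4.0, -1.0]]   # column sums 4, 6, 1.5
e = maximizer_1(A)
Ae = [sum(a * v for a, v in zip(row, e)) for row in A]
print(norm_1(A), e, vec_norm_1(Ae))        # 6.0 [0.0, 1.0, 0.0] 6.0
```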

- When $p=\infty$, $\|{\bf A}\|_\infty$ is the maximum absolute row sum:

$$\|{\bf A}\|_\infty=\max_{1\le i\le M}\sum_{j=1}^N|a_{ij}|$$

In Matlab this norm is implemented by the function norm(A,inf).

Proof: When  ,

,  is normalized if

is normalized if

. The norm of vector

. The norm of vector

is:

is:

which can be maximized by any normalized vector with

to become

to become

We therefore have

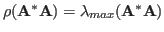

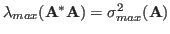

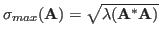

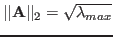
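Similarly, here is a minimal pure-Python sketch of the row-sum formula for real matrices. It uses the sign vector $x_j=\mathrm{sign}(a_{kj})$ from the proof as the maximizer, taking $\mathrm{sign}(0)=+1$. The helper names are hypothetical.

```python
def norm_inf(A):
    """Induced infinity-norm of a real matrix: maximum absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)

def maximizer_inf(A):
    """x_j = sign(a_kj), taking sign(0) = +1, where row k has the largest absolute sum."""
    sums = [sum(abs(a) for a in row) for row in A]
    k = sums.index(max(sums))
    return [1.0 if a >= 0 else -1.0 for a in A[k]]

A = [[1.0, -2.0, 0.5], [3.0, -4.0, -1.0]]       # row sums 3.5 and 8
x = maximizer_inf(A)
Ax = [sum(a * v for a, v in zip(row, x)) for row in A]
print(norm_inf(A), x, max(abs(v) for v in Ax))  # 8.0 [1.0, -1.0, -1.0] 8.0
```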

- When $p=2$, $\|{\bf A}\|_2$ is the spectral norm, the greatest singular value of ${\bf A}$. This equals the square root of the greatest eigenvalue of ${\bf A}^*{\bf A}$, i.e., of its spectral radius $\rho({\bf A}^*{\bf A})$:

$$\|{\bf A}\|_2=\sqrt{\lambda_{\max}}=\sqrt{\rho({\bf A}^*{\bf A})}=\sigma_{\max}$$

where $\lambda_{\max}$ is the maximal eigenvalue of ${\bf A}^*{\bf A}$, and $\sigma_{\max}$ is the maximal singular value of ${\bf A}$. In Matlab this norm is implemented by the function norm(A,2) or simply norm(A).

Proof: When $p=2$, $\|{\bf x}\|_2=\sqrt{{\bf x}^*{\bf x}}$ and the normalization requires $\|{\bf x}\|_2=1$. We have

$$\|{\bf Ax}\|_2^2={\bf x}^*{\bf A}^*{\bf Ax}={\bf x}^*{\bf V}{\bf\Lambda}{\bf V}^*{\bf x}={\bf y}^*{\bf\Lambda}{\bf y}=\sum_{i=1}^N\lambda_i|y_i|^2$$

Here we have used the eigen-decomposition ${\bf A}^*{\bf A}={\bf V}{\bf\Lambda}{\bf V}^*$. In it, ${\bf\Lambda}=\mathrm{diag}(\lambda_1,\cdots,\lambda_N)$ is the diagonal eigenvalue matrix and ${\bf V}=[{\bf v}_1,\cdots,{\bf v}_N]$ is the eigenvector matrix of ${\bf A}^*{\bf A}$, satisfying ${\bf A}^*{\bf AV}={\bf V\Lambda}$.

${\bf A}^*{\bf A}$ is a Hermitian (symmetric if real) positive semi-definite square matrix. Hence all of its eigenvalues are real and non-negative; we assume they are sorted, $\lambda_1\ge\lambda_2\ge\cdots\ge\lambda_N\ge 0$. All corresponding eigenvectors are orthogonal, and we assume they are normalized, i.e., ${\bf V}^*{\bf V}={\bf I}$, or ${\bf V}^{-1}={\bf V}^*$. Thus ${\bf V}$ is a unitary (orthogonal if real) matrix.

In the equation above, we have introduced a new vector ${\bf y}={\bf V}^*{\bf x}$ as a unitary transform of ${\bf x}$. ${\bf y}$ can be considered a rotated version of ${\bf x}$ with its Euclidean 2-norm conserved, $\|{\bf y}\|_2=\|{\bf x}\|_2=1$.

The right-hand side of the equation above is a weighted average of the $N$ eigenvalues $\lambda_1,\cdots,\lambda_N$, with weights $|y_i|^2$ that sum to $\|{\bf y}\|_2^2=1$. It is maximized by the normalized vector ${\bf y}=[1,0,\cdots,0]^T$, with $\|{\bf y}\|_2=1$. This vector puts all the weight on the greatest eigenvalue $\lambda_1$ and weights all others by 0. As ${\bf x}={\bf Vy}={\bf v}_1$, we therefore have

$$\|{\bf A}\|_2=\max_{\|{\bf x}\|_2=1}\|{\bf Ax}\|_2=\|{\bf Av}_1\|_2=\sqrt{\lambda_1}=\sigma_{\max}$$
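The proof identifies the maximizer as the top eigenvector ${\bf v}_1$ of ${\bf A}^*{\bf A}$. One simple way to approximate it is power iteration on ${\bf A}^T{\bf A}$ for a real matrix: repeatedly multiply by ${\bf A}^T{\bf A}$ and normalize. This method is an assumption of the sketch, not something taken from the text. The sketch also assumes that the starting vector is not orthogonal to ${\bf v}_1$ and that $\lambda_1>\lambda_2$, so that the iteration converges. In practice a library routine such as Matlab's norm(A,2) would be used instead.

```python
import math

def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def spectral_norm(A, iters=500, tol=1e-14):
    """Estimate ||A||_2 = sqrt(lambda_max(A^T A)) of a real matrix A by power
    iteration on A^T A. Returns (norm, unit vector x that maximizes ||Ax||_2)."""
    At = transpose(A)
    n = len(A[0])
    x = [1.0 / math.sqrt(n)] * n        # assumed not orthogonal to the top eigenvector
    lam = 0.0
    for _ in range(iters):
        y = matvec(At, matvec(A, x))    # y = A^T A x
        norm_y = math.sqrt(sum(v * v for v in y))
        if norm_y == 0.0:               # e.g. A = 0
            return 0.0, x
        x = [v / norm_y for v in y]
        if abs(norm_y - lam) <= tol * norm_y:  # eigenvalue estimate has settled
            break
        lam = norm_y
    Ax = matvec(A, x)
    return math.sqrt(sum(v * v for v in Ax)), x

A = [[1.0, -2.0], [3.0, 4.0]]   # A^T A = [[10, 10], [10, 20]], lambda_max = 15 + sqrt(125)
s, x = spectral_norm(A)
print(s, math.sqrt(15 + math.sqrt(125)))   # both about 5.1167
```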

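- Subordinance

Let the vector ${\bf x}$ be the eigenvector corresponding to the greatest eigenvalue $\lambda_{\max}$ of ${\bf A}^*{\bf A}$:

$${\bf A}^*{\bf Ax}=\lambda_{\max}{\bf x}$$

Then the subordinance property holds with equality, $\|{\bf Ax}\|_2=\|{\bf A}\|_2\,\|{\bf x}\|_2$. Consider

$$\|{\bf Ax}\|_2^2={\bf x}^*{\bf A}^*{\bf Ax}=\lambda_{\max}\,{\bf x}^*{\bf x}=\|{\bf A}\|_2^2\,\|{\bf x}\|_2^2$$

Taking the square root on both sides, we get $\|{\bf Ax}\|_2=\|{\bf A}\|_2\,\|{\bf x}\|_2$.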

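- Submultiplicativity

The submultiplicativity property holds with equality, $\|{\bf AB}\|_2=\|{\bf A}\|_2\,\|{\bf B}\|_2$, if ${\bf B}=c{\bf A}^*$ (${\bf B}$ and ${\bf A}^*$ are linearly dependent). Consider

$$\|{\bf AA}^*\|_2=\sqrt{\lambda_{\max}\left(({\bf AA}^*)^*({\bf AA}^*)\right)}=\sqrt{\lambda_{\max}\left(({\bf AA}^*)^2\right)}=\lambda_{\max}({\bf AA}^*)=\sigma_{\max}^2$$

and

$$\|{\bf A}\|_2\,\|{\bf A}^*\|_2=\sigma_{\max}\cdot\sigma_{\max}=\sigma_{\max}^2$$

Here ${\bf AA}^*$ and ${\bf A}^*{\bf A}$ have the same nonzero eigenvalues, so ${\bf A}$ and ${\bf A}^*$ have the same greatest singular value. Hence $\|{\bf AA}^*\|_2=\|{\bf A}\|_2\,\|{\bf A}^*\|_2$. By homogeneity, the scalar $c$ factors out of both sides.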
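The equality case can be checked numerically with the same power-iteration idea. The helper below is hypothetical and handles real matrices only. For ${\bf B}=c{\bf A}^T$ both sides agree, while a generic ${\bf B}$ gives a strict inequality. The matrices and $c=-2$ are arbitrary illustrative choices.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def spectral_norm(A, iters=200):
    """||A||_2 by a fixed number of power iterations on A^T A (real A)."""
    At = transpose(A)
    x = [1.0] * len(A[0])   # assumed not orthogonal to the top eigenvector of A^T A
    for _ in range(iters):
        y = matvec(At, matvec(A, x))
        norm_y = math.sqrt(sum(v * v for v in y))
        if norm_y == 0.0:
            return 0.0
        x = [v / norm_y for v in y]
    return math.sqrt(sum(v * v for v in matvec(A, x)))

A = [[3.0, 0.0], [4.0, 5.0]]
B = [[-2.0 * a for a in row] for row in transpose(A)]   # B = c A^T with c = -2
print(spectral_norm(matmul(A, B)), spectral_norm(A) * spectral_norm(B))  # both about 90

C = [[1.0, 0.0], [0.0, 0.0]]                            # not a multiple of A^T
print(spectral_norm(matmul(A, C)), spectral_norm(A) * spectral_norm(C))  # 5 < sqrt(45)
```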

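- Unitary invariance

Of the three induced matrix norms ($p=1,2,\infty$), only the spectral norm is unitary invariant. It is conserved under a unitary transform ${\bf R}$ (such as a rotation) with ${\bf R}^*{\bf R}={\bf RR}^*={\bf I}$:

$$\|{\bf RA}\|_2=\sqrt{\lambda_{\max}({\bf A}^*{\bf R}^*{\bf RA})}=\sqrt{\lambda_{\max}({\bf A}^*{\bf A})}=\|{\bf A}\|_2,\qquad
\|{\bf AR}\|_2=\sqrt{\lambda_{\max}({\bf R}^*{\bf A}^*{\bf AR})}=\sqrt{\lambda_{\max}({\bf A}^*{\bf A})}=\|{\bf A}\|_2$$

Here we have used the fact that the eigenvalues of ${\bf A}^*{\bf A}$ are invariant under the similarity transform ${\bf R}^*({\bf A}^*{\bf A}){\bf R}={\bf R}^{-1}({\bf A}^*{\bf A}){\bf R}$. Its eigenvectors ${\bf v}_i$, by contrast, are transformed to ${\bf R}^*{\bf v}_i$.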
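Here is a small numerical illustration, assuming a real $2\times 2$ example and a rotation by $\pi/4$. The spectral norm is estimated by power iteration with a hypothetical helper. It is unchanged by rotating on either side, while the induced 1-norm (maximum absolute column sum) changes.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def spectral_norm(A, iters=200):
    """||A||_2 by power iteration on A^T A (real A; start vector assumed generic)."""
    At = transpose(A)
    x = [1.0] * len(A[0])
    for _ in range(iters):
        y = matvec(At, matvec(A, x))
        norm_y = math.sqrt(sum(v * v for v in y))
        if norm_y == 0.0:
            return 0.0
        x = [v / norm_y for v in y]
    return math.sqrt(sum(v * v for v in matvec(A, x)))

def norm_1(A):
    """Induced 1-norm: maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))

A = [[3.0, 0.0], [4.0, 5.0]]
t = math.pi / 4
R = [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]   # rotation by 45 degrees
RA, AR = matmul(R, A), matmul(A, R)
print(spectral_norm(A), spectral_norm(RA), spectral_norm(AR))   # all about 6.708 = sqrt(45)
print(norm_1(A), norm_1(RA))                                    # 7.0 versus about 7.071
```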

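Example

Consider

$${\bf A}=\left[\begin{array}{rrr}3&-6&2\\ 2&5&1\\ -3&2&2\end{array}\right]\;\cdots\;\left[\begin{array}{rrr}3&2&1\\ 2&-3&0\\ 1&0&-1\end{array}\right]$$

In general, the eigenvalues of ${\bf A}$ differ from its singular values $\sigma_i=\sqrt{\lambda_i({\bf A}^T{\bf A})}$. The norm of ${\bf A}$ is its greatest singular value, $\|{\bf A}\|_2=\sigma_{\max}$. The eigenvector of ${\bf A}^T{\bf A}$ corresponding to its greatest eigenvalue $\lambda_{\max}$ is ${\bf x}=[0.285,\;-0.957,\;0.057]^T$. It satisfies the equality $\|{\bf Ax}\|_2=\|{\bf A}\|_2\,\|{\bf x}\|_2$.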
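The example can be reproduced with a short power-iteration sketch (pure Python, helper name hypothetical). It takes ${\bf A}$ to be the $3\times 3$ matrix with rows $[3,-6,2]$, $[2,5,1]$ and $[-3,2,2]$. An eigenvector is defined only up to sign, so the sign is fixed to match the text, and the printed values are rounded to three decimals. The final line checks that ${\bf x}$ is an eigenvector of ${\bf A}^T{\bf A}$ with eigenvalue $\sigma_{\max}^2$, which is exactly the condition for the subordinance equality.

```python
import math

def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def top_singular_pair(A, iters=500):
    """Power iteration on A^T A: returns (sigma_max, unit eigenvector x of A^T A)."""
    At = transpose(A)
    x = [1.0] * len(A[0])   # assumed not orthogonal to the top eigenvector
    for _ in range(iters):
        y = matvec(At, matvec(A, x))
        norm_y = math.sqrt(sum(v * v for v in y))
        x = [v / norm_y for v in y]
    sigma = math.sqrt(sum(v * v for v in matvec(A, x)))
    return sigma, x

A = [[3.0, -6.0, 2.0], [2.0, 5.0, 1.0], [-3.0, 2.0, 2.0]]
sigma, x = top_singular_pair(A)
if x[1] > 0:                  # fix the arbitrary sign of the eigenvector
    x = [-v for v in x]
print(round(sigma, 3), [round(v, 3) for v in x])   # 8.328 [0.285, -0.957, 0.057]

# residual of A^T A x = sigma^2 x, close to 0
AtAx = matvec(transpose(A), matvec(A, x))
print(max(abs(u - sigma ** 2 * v) for u, v in zip(AtAx, x)))
```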

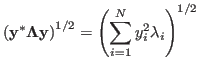

- The Schatten norms

The Shatten norm is defined based on the singular values  of

of

or the eigenvalues

or the eigenvalues

of

of

:

:

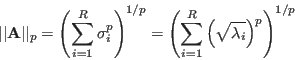

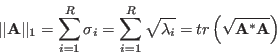

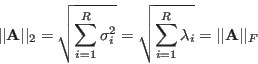

In particular, consider three common  values:

values:

is the nuclear or trace norm:

is the nuclear or trace norm:

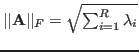

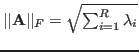

same as the Frobenius norm:

same as the Frobenius norm:

same as the spectral norm (the induced 2-norm),

the spectral radius of

same as the spectral norm (the induced 2-norm),

the spectral radius of

.

.

The eigenvalues of ${\bf A}^*{\bf A}$, and therefore the singular values of ${\bf A}$, are invariant under unitary transforms. Hence the Schatten norms are unitary invariant as well.
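Computing Schatten norms requires all singular values. The pure-Python sketch below, an illustration rather than a library routine, obtains them as square roots of the eigenvalues of ${\bf A}^T{\bf A}$. These eigenvalues are computed by the cyclic Jacobi eigenvalue method for symmetric matrices, a technique chosen here for brevity and not one used in the text. The sketch assumes a real matrix, and the function names are hypothetical. Forming ${\bf A}^T{\bf A}$ squares the condition number, so production code would call an SVD routine instead.

```python
import math

def gram(A):
    """A^T A for a real M x N matrix A given as a list of rows."""
    N = len(A[0])
    return [[sum(row[i] * row[j] for row in A) for j in range(N)] for i in range(N)]

def sym_eigenvalues(S, max_sweeps=100):
    """Eigenvalues of a real symmetric matrix by the cyclic Jacobi method."""
    n = len(S)
    a = [row[:] for row in S]
    scale = sum(v * v for row in S for v in row) or 1.0
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= 1e-30 * scale:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # rotation chosen so that the new a[p][q] is zero
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):   # a <- a J  (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):   # a <- J^T a  (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]

def singular_values(A):
    """sigma_i = sqrt(lambda_i(A^T A)), largest first."""
    return sorted((math.sqrt(max(lam, 0.0)) for lam in sym_eigenvalues(gram(A))), reverse=True)

def schatten_norm(A, p):
    sig = singular_values(A)
    if p == math.inf:
        return sig[0]
    return sum(s ** p for s in sig) ** (1.0 / p)

A = [[3.0, 0.0], [4.0, 5.0]]   # A^T A = [[25, 20], [20, 25]], eigenvalues 45 and 5
for p in (1, 2, math.inf):
    print(p, schatten_norm(A, p))
```

The three printed values are the nuclear norm $\sqrt{45}+\sqrt{5}$, the Frobenius norm $\sqrt{50}$ and the spectral norm $\sqrt{45}$.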

All matrix norms defined above are equivalent, by the theorem previously discussed: all norms on a finite-dimensional space are equivalent.

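- The Frobenius norm $\|{\bf A}\|_F$ and the induced 2-norm $\|{\bf A}\|_2$ are equivalent:

$$\|{\bf A}\|_2\le\|{\bf A}\|_F\le\sqrt{N}\,\|{\bf A}\|_2$$

This follows from $\|{\bf A}\|_2^2=\lambda_{\max}$ and $\|{\bf A}\|_F^2=\mathrm{tr}({\bf A}^*{\bf A})=\sum_{i=1}^N\lambda_i$, where $\lambda_i\ge 0$ are the $N$ eigenvalues of ${\bf A}^*{\bf A}$. The equality on the left holds when all eigenvalues $\lambda_i$ but one are zero. The equality on the right holds when all $\lambda_i$ are the same.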

- The Frobenius norm

and the Schatten 1-norm

and the Schatten 1-norm

are equivalent:

are equivalent:

The equality on the left holds when all eigenvalues  but

one are zero, and the equality on the right holds when all

but

one are zero, and the equality on the right holds when all  are the same.

are the same.

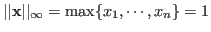

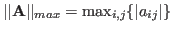

- The element-wise maximum norm $\|{\bf A}\|_{\max}=\max_{i,j}|a_{ij}|$ and the Frobenius norm $\|{\bf A}\|_F$ are equivalent:

$$\|{\bf A}\|_{\max}\le\|{\bf A}\|_F\le\sqrt{MN}\,\|{\bf A}\|_{\max}$$

The equality on the left holds when all elements $a_{ij}$ but one are zero. The equality on the right holds when all elements have the same absolute value.

-

Proof: Define an $N$-dimensional vector ${\bf e}=[1,\cdots,1]^T$; then the greatest absolute row sum of ${\bf A}$ is

i.e.,
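The three pairs of bounds stated above can be spot-checked on random real $2\times 2$ matrices ($M=N=2$), where the eigenvalues of ${\bf A}^T{\bf A}$ have a closed form. This is a hypothetical sketch; the seed, sample count and rounding slack are arbitrary. The last three lines exercise the equality cases stated in the text.

```python
import math
import random

def singular_values_2x2(A):
    """Singular values of a real 2x2 matrix from the closed-form eigenvalues of A^T A."""
    (a, b), (c, d) = A
    p, q, r = a * a + c * c, a * b + c * d, b * b + d * d   # A^T A = [[p, q], [q, r]]
    mean, rad = (p + r) / 2.0, math.hypot((p - r) / 2.0, q)
    return math.sqrt(mean + rad), math.sqrt(max(mean - rad, 0.0))

def norms(A):
    """(spectral, Frobenius, nuclear, element-wise max) norms of a real 2x2 matrix."""
    s1, s2 = singular_values_2x2(A)
    fro = math.sqrt(sum(v * v for row in A for v in row))
    mx = max(abs(v) for row in A for v in row)
    return s1, fro, s1 + s2, mx

def check_equivalences(trials=10000, seed=1):
    rng = random.Random(seed)
    M = N = 2
    eps = 1e-12   # slack for floating-point rounding
    for _ in range(trials):
        A = [[rng.uniform(-1, 1) for _ in range(N)] for _ in range(M)]
        two, fro, nuc, mx = norms(A)
        assert two <= fro + eps and fro <= math.sqrt(N) * two + eps
        assert fro <= nuc + eps and nuc <= math.sqrt(N) * fro + eps
        assert mx <= fro + eps and fro <= math.sqrt(M * N) * mx + eps
    return True

print(check_equivalences())
print(norms([[1.0, 0.0], [0.0, 0.0]]))   # one nonzero entry: left-hand equalities
print(norms([[1.0, 0.0], [0.0, 1.0]]))   # equal singular values: right-hand equalities
print(norms([[1.0, -1.0], [1.0, 1.0]]))  # equal |a_ij|: ||A||_F = 2 ||A||_max
```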

-

Theorem

Proof: Let  and

and  by the eigenvalue and the

corresponding eigenvector of

by the eigenvalue and the

corresponding eigenvector of  respectively, i.e.,

respectively, i.e.,

Taking norm on both sides we get

Dividing both sides by

we get

we get

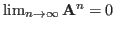
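Here is a quick numerical check of $\rho({\bf A})\le\|{\bf A}\|$ for real $2\times 2$ matrices. It uses the quadratic formula for the eigenvalues, which may be complex. The Frobenius norm is included as well. It is not an induced norm, but it satisfies subordinance with respect to the vector 2-norm, which is all the proof uses. The helper names and the test distribution are illustrative assumptions.

```python
import cmath
import random

def spectral_radius_2x2(A):
    """rho(A) = max |lambda_i| for a real 2x2 matrix (eigenvalues may be complex)."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return max(abs((tr + disc) / 2.0), abs((tr - disc) / 2.0))

def norm_1(A):     # maximum absolute column sum
    return max(abs(A[0][j]) + abs(A[1][j]) for j in range(2))

def norm_inf(A):   # maximum absolute row sum
    return max(abs(row[0]) + abs(row[1]) for row in A)

def norm_fro(A):   # Frobenius norm, compatible with the vector 2-norm
    return sum(v * v for row in A for v in row) ** 0.5

def check_bound(trials=10000, seed=2):
    rng = random.Random(seed)
    for _ in range(trials):
        A = [[rng.uniform(-2, 2) for _ in range(2)] for _ in range(2)]
        rho = spectral_radius_2x2(A)
        assert rho <= min(norm_1(A), norm_inf(A), norm_fro(A)) + 1e-9
    return True

A = [[0.5, 1.0], [0.0, 0.5]]
print(spectral_radius_2x2(A), norm_1(A), norm_inf(A))   # 0.5 1.5 1.5
print(check_bound())
```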

Theorem: A square matrix ${\bf A}$ is convergent, i.e., $\lim_{k\to\infty}{\bf A}^k={\bf 0}$, if and only if $\rho({\bf A})<1$.

The proof of this theorem can be found here.
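The theorem can be illustrated with two hypothetical $2\times 2$ examples. The matrix ${\bf A}$ below has $\rho({\bf A})=0.5<1$ even though $\|{\bf A}\|_1=\|{\bf A}\|_\infty=1.5>1$, and its powers still tend to zero. The matrix ${\bf C}$ has $\rho({\bf C})=1.2>1$, and its powers grow. The sketch uses plain repeated multiplication.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matrix_power(A, k):
    """A^k by repeated multiplication, starting from the identity."""
    P = [[1.0 if i == j else 0.0 for j in range(len(A))] for i in range(len(A))]
    for _ in range(k):
        P = matmul(P, A)
    return P

def max_abs(A):
    return max(abs(v) for row in A for v in row)

A = [[0.5, 1.0], [0.0, 0.5]]   # rho(A) = 0.5 < 1, although ||A||_1 = ||A||_inf = 1.5
C = [[0.6, 0.6], [0.6, 0.6]]   # eigenvalues 1.2 and 0, so rho(C) = 1.2 > 1
for k in (1, 5, 20, 60):
    print(k, max_abs(matrix_power(A, k)), max_abs(matrix_power(C, k)))
```

The entries of ${\bf A}^k$ are $0.5^k$ and $k\,0.5^{k-1}$, which tend to 0. Since ${\bf C}^2=1.2\,{\bf C}$, the powers ${\bf C}^k=1.2^{k-1}{\bf C}$ grow without bound.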


Ruye Wang

2015-04-27


