As one of the most important tasks in machine learning, pattern classification is to classify some objects of interest, generically referred to as patterns and described by a set of $d$ features or attributes that characterize the patterns, into one of $K$ classes or categories. Each pattern is represented by a vector (or a point) ${\bf x}=[x_1,\cdots,x_d]^T$ in a $d$-dimensional feature space, where $x_i\;(i=1,\cdots,d)$ is a variable for the measurement of the $i$th feature. Symbolically, the $K$ classes can be denoted by $C_1,\cdots,C_K$, and a pattern ${\bf x}$ belonging to the $k$th class is denoted by ${\bf x}\in C_k$. Pattern classification can therefore be considered as the process by which the $d$-dimensional feature space is partitioned into $K$ regions, each corresponding to one of the $K$ classes. The boundaries between these regions, called decision boundaries, are determined by the specific algorithm, called a classifier, used for the classification.
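
To make the partitioning of the feature space concrete, here is a minimal Python sketch of one possible classifier, assuming $K=3$ hypothetical linear discriminant functions $g_k({\bf x})={\bf w}_k^T{\bf x}+b_k$ in a 2-dimensional feature space. The weights, biases, and the names `WEIGHTS`, `BIASES`, `discriminant`, and `classify` are made up for illustration and do not come from a trained model. A pattern is assigned to the class whose discriminant is largest, so region $R_k$ is where $g_k$ dominates, and the decision boundary between $R_j$ and $R_k$ lies where $g_j({\bf x})=g_k({\bf x})$.

```python
from typing import List, Sequence

# Hypothetical discriminants g_k(x) = w_k . x + b_k for K = 3 classes in d = 2
# dimensions; the numbers are chosen only for illustration.
WEIGHTS: List[List[float]] = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]]
BIASES: List[float] = [0.0, 0.0, -0.5]


def discriminant(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Evaluate g(x) = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(x: Sequence[float]) -> int:
    """Return k (1-based, i.e. class C_k) whose discriminant g_k(x) is largest.

    All points mapped to k form region R_k; the boundary between R_j and R_k
    is the set of points where g_j(x) == g_k(x).
    """
    scores = [discriminant(w, b, x) for w, b in zip(WEIGHTS, BIASES)]
    return max(range(len(scores)), key=scores.__getitem__) + 1


print(classify([2.0, 0.0]))   # g = (2, -2, -0.5)  -> 1
print(classify([-2.0, 0.0]))  # g = (-2, 2, -0.5)  -> 2
print(classify([0.0, 3.0]))   # g = (0, 0, 2.5)    -> 3
```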

Pattern classification can be carried out as either a supervised or an unsupervised learning process, depending on the availability of a training set containing patterns of known class identities. Specifically, the training set contains a set of $N$ patterns in ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$, labeled respectively by the corresponding components in ${\bf y}=[y_1,\cdots,y_N]^T$, which represent the class identities of the corresponding patterns in some way. For example, we can use $y_n=k$ to indicate ${\bf x}_n\in C_k$. In the special case when $K=2$, there are only two classes $C_+$ and $C_-$, and the classifier becomes binary, based on $N$ training patterns ${\bf x}_n\;(n=1,\cdots,N)$, each labeled by $y_n=1$ if ${\bf x}_n\in C_+$ or $y_n=-1$ if ${\bf x}_n\in C_-$.
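
The labeling conventions above can be sketched as follows, assuming the class identities start out as strings. The helper names `encode_multiclass` and `encode_binary` and the example class names are hypothetical; the only point is the mapping $y_n=k$ for ${\bf x}_n\in C_k$ in the general case and $y_n=\pm 1$ in the binary case.

```python
from typing import Dict, List


def encode_multiclass(names: List[str], classes: List[str]) -> List[int]:
    """Map each class name to y_n = k, where classes[k - 1] plays the role of C_k."""
    index: Dict[str, int] = {c: k for k, c in enumerate(classes, start=1)}
    return [index[n] for n in names]


def encode_binary(names: List[str], positive: str) -> List[int]:
    """K = 2: y_n = +1 if the pattern is in C_+ (the positive class), else -1 (C_-)."""
    return [1 if n == positive else -1 for n in names]


print(encode_multiclass(["cat", "dog", "bird", "dog"], ["cat", "dog", "bird"]))  # [1, 2, 3, 2]
print(encode_binary(["spam", "ham", "spam"], positive="spam"))  # [1, -1, 1]
```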

We assume there are $N_k$ training samples ${\bf x}_1^{(k)},\cdots,{\bf x}_{N_k}^{(k)}$, all labeled as belonging to $C_k\;(k=1,\cdots,K)$, and in total $N=\sum_{k=1}^K N_k$ samples in the training set. If the training set is a fair representation of all patterns of different classes in the entire dataset, then $P_k=N_k/N$ can be treated as an estimate of the a priori probability that any randomly selected pattern ${\bf x}$ belongs to class $C_k$, without any prior knowledge of the pattern.
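
Estimating the priors $P_k=N_k/N$ amounts to counting labels. A minimal sketch, assuming integer labels $y_n=k$ and a hypothetical helper `estimate_priors`:

```python
from collections import Counter
from typing import Dict, List


def estimate_priors(y: List[int]) -> Dict[int, float]:
    """Estimate P_k = N_k / N from the training labels y_n = k."""
    n = len(y)
    counts = Counter(y)  # N_k for each class k present in y
    return {k: counts[k] / n for k in sorted(counts)}


# Hypothetical labels for N = 8 samples: N_1 = 4, N_2 = 2, N_3 = 2.
print(estimate_priors([1, 1, 2, 3, 1, 2, 1, 3]))  # {1: 0.5, 2: 0.25, 3: 0.25}
```

The estimates sum to one by construction, and they are only meaningful under the fair-representation assumption stated above.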

Once a classifier is properly trained according to a specific algorithm based on the training set, the feature space is partitioned into regions corresponding to the different classes, and any unlabeled pattern of unknown class, represented as a vector ${\bf x}$ in the feature space, can be classified into one of the $K$ classes.
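
As one simple example of training a classifier and then classifying an unlabeled ${\bf x}$, the sketch below uses a nearest-mean (minimum-distance) classifier: training computes the mean ${\bf m}_k$ of the samples in each class $C_k$, and a new pattern is assigned to the class with the closest mean. This particular classifier is chosen only for illustration and is not a method described in the text above; the function names and data are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from typing import Dict, List, Sequence


def train_nearest_mean(X: List[Sequence[float]], y: List[int]) -> Dict[int, List[float]]:
    """Training: compute the mean vector m_k of the samples labeled y_n = k."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, k in zip(X, y):
        if k not in sums:
            sums[k], counts[k] = [0.0] * len(x), 0
        sums[k] = [s + xi for s, xi in zip(sums[k], x)]
        counts[k] += 1
    return {k: [s / counts[k] for s in sums[k]] for k in sums}


def predict(means: Dict[int, List[float]], x: Sequence[float]) -> int:
    """Classification: assign x to the class whose mean is nearest (Euclidean distance)."""
    return min(means, key=lambda k: math.dist(x, means[k]))


# Hypothetical training set: N = 6 samples in d = 2 dimensions, K = 2 classes.
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
y = [1, 1, 1, 2, 2, 2]
means = train_nearest_mean(X, y)
print(predict(means, [0.5, 0.5]))  # 1
print(predict(means, [4.0, 4.0]))  # 2
```

The decision boundary of this classifier is the perpendicular bisector of the segment joining the two class means.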

Supervised classification can be considered as a process of establishing the relationship between the patterns in ${\bf X}$, treated as the independent or input variables to the classifier, and the classes in ${\bf y}$ to which the input patterns belong, treated as the dependent or output variables. Therefore regression and classification can be considered as the same supervised learning process: modeling the relationship between the data points in ${\bf X}$ and their corresponding labelings (or targets) in ${\bf y}$. This process is regression when the labelings take continuous real values, but it is classification when they are discrete and categorical, representing different classes. Some methods in the previous chapter on regression analysis, such as logistic and softmax regression, are actually used as classifiers, and the Gaussian process method can also be used for classification.
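
To illustrate how a regression model can serve as a classifier, here is a minimal sketch of binary logistic regression in plain Python, fitted by batch gradient ascent on the average log-likelihood. It assumes labels $y_n\in\{0,1\}$ rather than $\pm 1$, and a fixed learning rate and epoch count chosen for a toy example; a practical implementation would typically add regularization and a convergence check. A pattern ${\bf x}$ is assigned to the positive class when its estimated probability exceeds 0.5.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + e^{-z})."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Estimated p(y = 1 | x) = sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fit_logistic(X: List[Sequence[float]], y: List[int],
                 lr: float = 0.1, epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit w, b by batch gradient ascent on the average log-likelihood (labels in {0, 1})."""
    d, n = len(X[0]), len(X)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, t in zip(X, y):
            err = t - predict_proba(w, b, x)  # d(log-likelihood)/d(score) for this sample
            for i in range(d):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi + lr * g / n for wi, g in zip(w, grad_w)]
        b += lr * grad_b / n
    return w, b


# Hypothetical 1-D toy data: class 0 on the left, class 1 on the right.
X = [[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(X, y)
label = 1 if predict_proba(w, b, [2.0]) > 0.5 else 0
print(label)  # 1
```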

If training data of labeled patterns are unavailable, various unsupervised learning methods can be used to assign each unlabeled pattern to one of $K$ different groups, called clusters, according to its position in the feature space, based on the overall spatial structure and distribution of the dataset in the feature space. This process is called clustering analysis, or simply clustering.
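
K-means is one common clustering method; the sketch below shows Lloyd's algorithm, which alternates between assigning each point to its nearest center and moving each center to the mean of its assigned points. For reproducibility it assumes the first $K$ points as initial centers (random or k-means++ initialization is more usual), and it returns 0-based cluster indices; the function name `kmeans` and the data are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def kmeans(X: List[Sequence[float]], K: int, iters: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Cluster X into K groups with Lloyd's k-means; returns (assignments, centers)."""
    centers = [list(x) for x in X[:K]]  # simplifying assumption: first K points as seeds
    assign = [0] * len(X)
    for _ in range(iters):
        # Assignment step: each point joins the cluster with the nearest center.
        new_assign = [min(range(K), key=lambda k: math.dist(x, centers[k])) for x in X]
        # Update step: each center moves to the mean of its members (kept if empty).
        for k in range(K):
            members = [x for x, a in zip(X, new_assign) if a == k]
            if members:
                centers[k] = [sum(c) / len(members) for c in zip(*members)]
        if new_assign == assign:  # assignments stopped changing: converged
            break
        assign = new_assign
    return assign, centers


X = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0], [1.0, 0.0], [11.0, 10.0]]
assign, centers = kmeans(X, 2)
print(assign)  # [0, 0, 1, 1, 0, 1]
```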

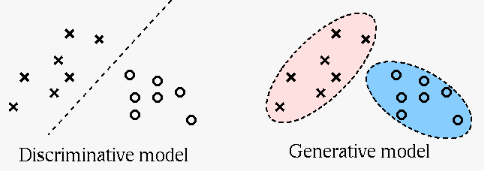

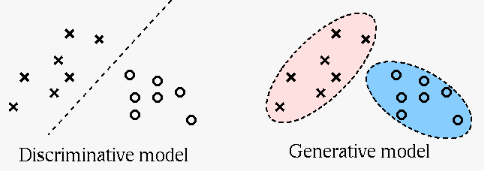

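There exist a variety of learning methods, for both regression and classification, based on different assumed models. One way to characterize these methods is to put them all in a probabilistic framework, in terms of the probabilities of the given dataset, including the data points in ${\bf X}$ and the corresponding labelings in ${\bf y}$. A method can then be categorized into one of the following two groups:

- Discriminative methods directly model the conditional probability $p({\bf y}|{\bf X})$ of the labeling given the data, or directly find the decision boundary without a probabilistic model of the data.
- Generative methods model the joint probability $p({\bf X},{\bf y})=p({\bf X}|{\bf y})\,p({\bf y})$ of the data and the labeling, from which $p({\bf y}|{\bf X})$ is obtained by Bayes' theorem.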

Here are some comparisons between the two approaches:

- The discriminative methods find the decision boundary in the feature space directly, based on the data points in the training set. In general, they
    - are simpler than the generative approach, which requires problem-specific knowledge for building models of the data,
    - are effective in producing accurate results when the dataset is large,
    - provide no insight or interpretation regarding the data, and no uncertainty estimate.
- The generative methods first establish a probabilistic model for the underlying structure of the data, as an effort to explain how the data were generated, and then find the decision boundary based on the model. In general, they
    - allow the use of problem-specific knowledge for building the model,
    - can provide explanation and interpretation of the data,
    - may be less prone to overfitting than a discriminative method,
    - can provide uncertainty estimates,
    - may not be as accurate as the discriminative methods if the model does not fit the dataset well.
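
The generative route can be sketched with a Gaussian naive Bayes classifier, chosen here only as a simple example: it models each class-conditional density $p({\bf x}|C_k)$ as a product of independent 1-D Gaussians, estimates $P_k=N_k/N$, and then applies Bayes' theorem $P(C_k|{\bf x})=p({\bf x}|C_k)P_k/\sum_j p({\bf x}|C_j)P_j$. The returned posterior probabilities play the role of the uncertainty estimate mentioned above. The feature-independence assumption, the small variance floor `eps`, the data, and all names are assumptions of this sketch.

```python
import math
from typing import Dict, List, Sequence, Tuple

# k -> (prior P_k, per-feature means, per-feature variances)
Model = Dict[int, Tuple[float, List[float], List[float]]]


def fit_gaussian_nb(X: List[Sequence[float]], y: List[int], eps: float = 1e-9) -> Model:
    """Generative step: estimate P_k = N_k / N and a Gaussian p(x_i | C_k) per feature."""
    model: Model = {}
    n = len(X)
    for k in sorted(set(y)):
        rows = [x for x, t in zip(X, y) if t == k]
        cols = list(zip(*rows))
        means = [sum(c) / len(c) for c in cols]
        variances = [sum((v - m) ** 2 for v in c) / len(c) + eps for c, m in zip(cols, means)]
        model[k] = (len(rows) / n, means, variances)
    return model


def posterior(model: Model, x: Sequence[float]) -> Dict[int, float]:
    """Bayes' theorem: P(C_k | x) = p(x | C_k) P_k / sum_j p(x | C_j) P_j."""
    log_joint = {}
    for k, (prior, means, variances) in model.items():
        log_lik = sum(-0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
                      for xi, m, v in zip(x, means, variances))
        log_joint[k] = math.log(prior) + log_lik
    top = max(log_joint.values())  # subtract the max before exp for numerical stability
    unnorm = {k: math.exp(s - top) for k, s in log_joint.items()}
    total = sum(unnorm.values())
    return {k: u / total for k, u in unnorm.items()}


X = [[0.0], [1.0], [2.0], [8.0], [9.0], [10.0]]
y = [1, 1, 1, 2, 2, 2]
model = fit_gaussian_nb(X, y)
print(posterior(model, [5.0]))  # {1: 0.5, 2: 0.5}
```

At $x=5$, halfway between the two class means with equal variances and priors, the posterior is split evenly, which signals maximal uncertainty for this two-class model.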
