
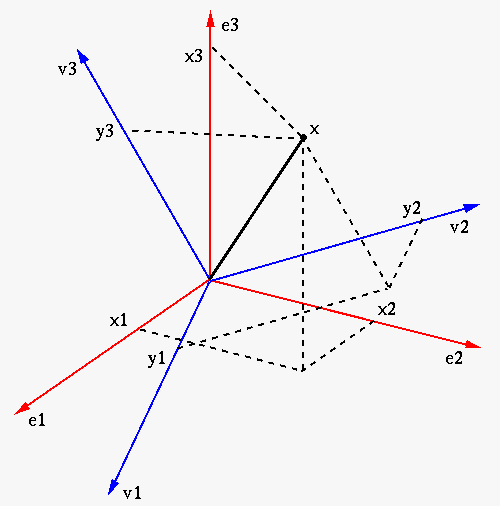

A data point in a $d$-dimensional space is represented by a vector ${\bf x}=[x_1,\cdots,x_d]^T$, which can be written as a linear combination of the $d$ standard basis vectors ${\bf e}_1,\cdots,{\bf e}_d$ (the $i$th of which has a 1 in its $i$th component and 0 elsewhere):

$$\begin{aligned}
{\bf x}&=\left[\begin{array}{c}x_1\\ x_2\\ \vdots\\ x_d\end{array}\right]
=x_1\left[\begin{array}{c}1\\ 0\\ \vdots\\ 0\end{array}\right]
+x_2\left[\begin{array}{c}0\\ 1\\ \vdots\\ 0\end{array}\right]+\cdots
+x_d\left[\begin{array}{c}0\\ \vdots\\ 0\\ 1\end{array}\right]
=\sum_{i=1}^d x_i {\bf e}_i\\
&=\left[\begin{array}{ccc}&&\\ {\bf e}_1&\cdots&{\bf e}_d\\ &&\end{array}\right]
\left[\begin{array}{c}x_1\\ \vdots\\ x_d\end{array}\right]
={\bf I}{\bf x}
\end{aligned}\tag{44}$$

where ${\bf I}=[{\bf e}_1,\cdots,{\bf e}_d]$ is the $d\times d$ identity matrix.
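Equation (44) can be checked numerically in a few lines. This is a minimal sketch that assumes NumPy (the notes themselves use no software) and an arbitrary example vector; the columns of `np.eye(d)` play the role of ${\bf e}_1,\cdots,{\bf e}_d$.

```python
import numpy as np

# Arbitrary example point in d = 4 dimensions (values chosen only for illustration).
x = np.array([3.0, -1.0, 0.5, 2.0])
d = x.size

I = np.eye(d)  # column i of I is the standard basis vector e_{i+1}

# Weighted sum of the standard basis vectors: sum_i x_i e_i
x_from_basis = sum(x[i] * I[:, i] for i in range(d))

assert np.allclose(x_from_basis, x)  # x = sum_i x_i e_i
assert np.allclose(I @ x, x)         # x = I x
```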

The space can also be spanned by any other orthonormal basis ${\bf a}_1,\cdots,{\bf a}_d$ satisfying

$$ {\bf a}_i^T{\bf a}_j=\delta_{ij}=\left\{\begin{array}{ll}1 & i=j\\ 0 & i\ne j\end{array}\right. \tag{45}$$

Vector ${\bf x}$ can also be represented as a weighted vector sum of these basis vectors:

$$ {\bf x}=\sum_{i=1}^d y_i {\bf a}_i
=\left[\begin{array}{ccc}&&\\ {\bf a}_1&\cdots&{\bf a}_d\\ &&\end{array}\right]
\left[ \begin{array}{c} y_1\\ \vdots \\ y_d \end{array} \right]
={\bf A}{\bf y} \tag{46}$$

where ${\bf A}=[{\bf a}_1,\cdots,{\bf a}_d]$, composed of $d$ orthonormal column vectors, is an orthogonal matrix satisfying ${\bf A}^T{\bf A}={\bf I}$, i.e., ${\bf A}^{-1}={\bf A}^T$, and ${\bf y}=[y_1,\cdots,y_d]^T$ is a vector containing all $d$ coordinates of ${\bf x}$ in the directions of the basis vectors ${\bf a}_1,\cdots,{\bf a}_d$. These coordinates can be found by premultiplying ${\bf A}^T={\bf A}^{-1}$ on both sides of the equation above, ${\bf x}={\bf A}{\bf y}$:

$$ {\bf A}^T{\bf A}{\bf y}={\bf y}
=\left[ \begin{array}{c} y_1\\ \vdots \\ y_d \end{array} \right]
={\bf A}^T{\bf x}
=\left[\begin{array}{c}{\bf a}_1^T{\bf x}\\ \vdots\\ {\bf a}_d^T{\bf x}\end{array}\right] \tag{47}$$

The $i$th coordinate $y_i={\bf a}_i^T{\bf x}$ is the projection of ${\bf x}$ onto the $i$th basis vector ${\bf a}_i$.
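A small NumPy sketch of (45) to (47) follows. The orthonormal basis is hypothetical: it is taken from the QR factorization of a random matrix, whose `Q` factor has orthonormal columns. The code computes the coordinates ${\bf y}={\bf A}^T{\bf x}$ both as a matrix product and as individual projections ${\bf a}_i^T{\bf x}$, then rebuilds ${\bf x}$ from them.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 3

# Hypothetical orthonormal basis a_1, ..., a_d: the Q factor of a random square matrix.
A, _ = np.linalg.qr(rng.standard_normal((d, d)))
assert np.allclose(A.T @ A, np.eye(d))  # a_i^T a_j = delta_ij, eq. (45)

x = np.array([1.0, 2.0, 3.0])

# Coordinates in the new basis, eq. (47): y = A^T x, i.e. y_i = a_i^T x
y = A.T @ x
y_by_projection = np.array([A[:, i] @ x for i in range(d)])

# Weighted sum of the basis vectors, eq. (46): x = sum_i y_i a_i = A y
x_rebuilt = sum(y[i] * A[:, i] for i in range(d))
```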

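The basis vectors in ${\bf A}=[{\bf a}_1,\cdots,{\bf a}_d]$ are a rotated (possibly reflected) version of the standard basis vectors in ${\bf I}=[{\bf e}_1,\cdots,{\bf e}_d]$, as each ${\bf a}_i$ is the image of ${\bf e}_i$ under ${\bf A}$:

$$ [{\bf a}_1,\cdots,{\bf a}_d]={\bf A}={\bf A}{\bf I}=[{\bf A}{\bf e}_1,\cdots,{\bf A}{\bf e}_d] \tag{48}$$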

Summarizing the above, we can define an orthogonal transform based on any orthogonal matrix ${\bf A}$:

$$\left\{\begin{array}{ll}{\bf y}={\bf A}^T{\bf x} & \mbox{(forward transform)}\\ {\bf x}={\bf A}{\bf y} & \mbox{(inverse transform)}\end{array}\right. \tag{49}$$

Vector ${\bf x}$, represented under the implicit standard basis (the column vectors of ${\bf I}=[{\bf e}_1,\cdots,{\bf e}_d]$), is transformed into ${\bf y}$, represented under the explicit basis (the column vectors of ${\bf A}=[{\bf a}_1,\cdots,{\bf a}_d]$).
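The transform pair (49) can be sketched as two one-line functions. The names `forward` and `inverse` are hypothetical, and storing the data points as the columns of a matrix is an assumption made so that one product transforms all of them at once. The orthogonal matrix used here is a 2-D rotation by 30 degrees.

```python
import numpy as np

def forward(A: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Forward transform y = A^T x, applied to every column of X."""
    return A.T @ X

def inverse(A: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Inverse transform x = A y, applied to every column of Y."""
    return A @ Y

# A 2-D rotation matrix is orthogonal: A^T A = I.
theta = np.pi / 6
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

X = np.array([[1.0, 0.0,  2.0],
              [0.0, 1.0, -1.0]])  # three 2-D points stored as columns

Y = forward(A, X)       # coordinates under the basis a_1, a_2 (columns of A)
X_back = inverse(A, Y)  # back to coordinates under e_1, e_2
```

Because ${\bf A}^{-1}={\bf A}^T$, the inverse needs no matrix inversion, only a product with ${\bf A}$.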

If ${\bf x}$ is a random vector with mean vector ${\bf m}_x=E[{\bf x}]$ and covariance matrix ${\bf\Sigma}_x$, then, for any orthogonal transform ${\bf y}={\bf A}^T{\bf x}$, the mean vector and covariance matrix of ${\bf y}$ are

$$\begin{aligned}
{\bf m}_y&=E[ {\bf y} ]=E[ {\bf A}^T{\bf x} ]={\bf A}^T E[ {\bf x} ]={\bf A}^T{\bf m}_x\\
{\bf\Sigma}_y&=E[ {\bf yy}^T ]-{\bf m}_y{\bf m}_y^T
=E[ {\bf A}^T{\bf x}{\bf x}^T{\bf A} ]-{\bf A}^T{\bf m}_x{\bf m}_x^T{\bf A}\\
&={\bf A}^T\left[ E[ {\bf x}{\bf x}^T ]-{\bf m}_x{\bf m}_x^T\right]{\bf A}
={\bf A}^T{\bf\Sigma}_x{\bf A}
\end{aligned}\tag{50}$$
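Relation (50) can be checked on sample statistics. In this sketch (NumPy assumed; the data are hypothetical correlated samples stored one per column) the sample mean and the sample covariance from `np.cov` stand in for $E[\cdot]$. Since both estimators are linear or bilinear in the data, ${\bf m}_y={\bf A}^T{\bf m}_x$ and ${\bf\Sigma}_y={\bf A}^T{\bf\Sigma}_x{\bf A}$ hold for them exactly, up to rounding.

```python
import numpy as np

rng = np.random.default_rng(1)
d, N = 3, 5000

# Hypothetical data: N samples of a correlated 3-D random vector, one sample per column,
# with a nonzero mean added by broadcasting a (3, 1) column.
M = rng.standard_normal((d, d))
X = M @ rng.standard_normal((d, N)) + np.array([[1.0], [-2.0], [0.5]])

A, _ = np.linalg.qr(rng.standard_normal((d, d)))  # some orthogonal matrix
Y = A.T @ X                                       # y = A^T x for every sample

m_x, m_y = X.mean(axis=1), Y.mean(axis=1)
Sigma_x, Sigma_y = np.cov(X), np.cov(Y)           # rows are variables, columns are samples

assert np.allclose(m_y, A.T @ m_x)                # m_y = A^T m_x
assert np.allclose(Sigma_y, A.T @ Sigma_x @ A)    # Sigma_y = A^T Sigma_x A
```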

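In particular, the Karhunen-Loeve Transform (KLT) is just one such orthogonal transform, based on the orthogonal matrix ${\bf V}=[{\bf v}_1,\cdots,{\bf v}_d]$ whose columns are the $d$ orthonormal eigenvectors of the covariance matrix ${\bf\Sigma}_x$. (As ${\bf\Sigma}_x$ is real and symmetric, such an orthonormal set of eigenvectors always exists.) The $d$ eigenequations

$$ {\bf\Sigma}_x{\bf v}_i=\lambda_i{\bf v}_i,\qquad i=1,\cdots,d \tag{51}$$

can be combined into a single matrix equation:

$$ {\bf\Sigma}_x{\bf V}={\bf\Sigma}_x[{\bf v}_1,\cdots,{\bf v}_d]
=[\lambda_1{\bf v}_1,\cdots,\lambda_d{\bf v}_d]
=[{\bf v}_1,\cdots,{\bf v}_d]\left[\begin{array}{ccc}\lambda_1&\cdots&0\\ \vdots&\ddots&\vdots\\ 0&\cdots&\lambda_d\end{array}\right]
={\bf V}{\bf\Lambda} \tag{52}$$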

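where ${\bf\Lambda}={\rm diag}(\lambda_1,\cdots,\lambda_d)$ is a diagonal matrix. Premultiplying ${\bf V}^T={\bf V}^{-1}$ on both sides, we get

$$ {\bf V}^T{\bf\Sigma}_x{\bf V}={\bf V}^{-1}{\bf\Sigma}_x{\bf V}={\bf\Lambda} \tag{53}$$

and, taking the inverse of both sides (assuming ${\bf\Sigma}_x$ is nonsingular, i.e., all $\lambda_i\ne 0$),

$$ {\bf V}^T{\bf\Sigma}_x^{-1}{\bf V}={\bf\Lambda}^{-1} \tag{54}$$

i.e., ${\bf\Sigma}_x$ and its inverse ${\bf\Sigma}_x^{-1}$ are diagonalized by the orthogonal eigenvector matrix ${\bf V}$ to become ${\bf\Lambda}$ and ${\bf\Lambda}^{-1}$, respectively.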
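The eigen-decomposition steps (51) to (54) can be verified numerically. This sketch assumes NumPy and a hypothetical $3\times 3$ covariance matrix; `np.linalg.eigh` is NumPy's solver for symmetric matrices, returning real eigenvalues in ascending order with orthonormal eigenvectors as the columns of `V`.

```python
import numpy as np

# Hypothetical covariance matrix: symmetric, and (diagonally dominant with a
# positive diagonal) positive definite, hence nonsingular.
Sigma_x = np.array([[4.0, 1.0, 0.5],
                    [1.0, 3.0, 0.2],
                    [0.5, 0.2, 2.0]])

lam, V = np.linalg.eigh(Sigma_x)  # ascending eigenvalues, orthonormal eigenvector columns
Lam = np.diag(lam)

# (51): each column satisfies Sigma_x v_i = lambda_i v_i
for i in range(lam.size):
    assert np.allclose(Sigma_x @ V[:, i], lam[i] * V[:, i])

# (52): all d eigenequations at once, Sigma_x V = V Lambda
assert np.allclose(Sigma_x @ V, V @ Lam)

# V is orthogonal, so V^T = V^{-1}
assert np.allclose(V.T @ V, np.eye(lam.size))

# (53): V^T Sigma_x V = Lambda
assert np.allclose(V.T @ Sigma_x @ V, Lam)

# (54): V^T Sigma_x^{-1} V = Lambda^{-1}
assert np.allclose(V.T @ np.linalg.inv(Sigma_x) @ V, np.diag(1.0 / lam))
```

`eigh` is preferred over the general `np.linalg.eig` here because it exploits the symmetry of ${\bf\Sigma}_x$ and guarantees real eigenvalues and orthonormal eigenvectors.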

Based on the orthogonal eigenvector matrix ${\bf V}$, the forward KLT of ${\bf x}$ is

$$ {\bf y}={\bf V}^T{\bf x}=\left[\begin{array}{c}{\bf v}_1^T{\bf x}\\ \vdots\\ {\bf v}_d^T{\bf x}\end{array}\right] \tag{55}$$

where $y_i={\bf v}_i^T{\bf x}$ is the projection of ${\bf x}$ onto the $i$th eigenvector ${\bf v}_i$. Premultiplying ${\bf V}$ on both sides of the forward transform ${\bf y}={\bf V}^T{\bf x}$, we get the inverse KLT, by which vector ${\bf x}$ is represented as a linear combination of the eigenvectors ${\bf v}_1,\cdots,{\bf v}_d$ as the basis vectors:

$$ {\bf x}={\bf V} {\bf y}=\left[\begin{array}{ccc}&&\\ {\bf v}_1&\cdots&{\bf v}_d\\ &&\end{array}\right]
\left[\begin{array}{c} y_1\\ \vdots \\ y_d \end{array} \right]
=\sum_{i=1}^d y_i {\bf v}_i \tag{56}$$
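The forward and inverse KLT, (55) and (56), can be sketched end to end on sample data. The function names `klt` and `inverse_klt` are hypothetical; ${\bf\Sigma}_x$ is estimated from the samples with `np.cov`, and sorting the eigenpairs by decreasing eigenvalue is a common convention assumed here rather than something required by (55).

```python
import numpy as np

def klt(X: np.ndarray):
    """Sketch of the forward KLT of data X (shape d x N, one sample per column).

    Returns (lam, V, Y): eigenvalues sorted in descending order (an assumed
    convention), the matching orthonormal eigenvectors as columns of V, and
    Y = V^T X, i.e. eq. (55) applied to every sample.
    """
    Sigma_x = np.cov(X)               # rows = variables, columns = samples
    lam, V = np.linalg.eigh(Sigma_x)  # ascending eigenvalues
    order = np.argsort(lam)[::-1]     # reorder to descending
    lam, V = lam[order], V[:, order]
    return lam, V, V.T @ X

def inverse_klt(V: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Inverse KLT, eq. (56): x = V y = sum_i y_i v_i for every column of Y."""
    return V @ Y

# Hypothetical correlated 2-D data: 1000 samples stored as columns.
rng = np.random.default_rng(2)
M = np.array([[2.0, 0.0],
              [1.5, 0.5]])
X = M @ rng.standard_normal((2, 1000))

lam, V, Y = klt(X)
X_back = inverse_klt(V, Y)
```

Because the same sample covariance is used throughout, `np.cov(Y)` equals `V.T @ np.cov(X) @ V`, which by (50) and (53) is the diagonal matrix with entries `lam`: the components of ${\bf y}$ are uncorrelated.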

Example:

$$ {\bf\Sigma}_1=\left[\begin{array}{rr}2.964 & 0.544\\ 0.544 & 4.68\ldots\end{array}\right],\quad\ldots,\quad
{\bf\Sigma}_3=\left[\begin{array}{rr}3.043 & -3.076\\ -3.076 & 3.930\end{array}\right] \tag{57}$$

(58)

(59)
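As a sketch (NumPy assumed), the KLT basis for ${\bf\Sigma}_3$ in (57) can be computed numerically and checked against the diagonalization in (53). The descending eigenvalue order is again an assumed convention.

```python
import numpy as np

# Sigma_3 from (57)
Sigma_3 = np.array([[ 3.043, -3.076],
                    [-3.076,  3.930]])

lam, V = np.linalg.eigh(Sigma_3)  # ascending eigenvalues, orthonormal columns
lam, V = lam[::-1], V[:, ::-1]    # descending order (assumed convention)

# Diagonalization as in (53): V^T Sigma_3 V should equal diag(lam)
Lam = V.T @ Sigma_3 @ V

print("eigenvalues:", lam)
print("eigenvectors (columns of V):")
print(V)
print("V^T Sigma_3 V:")
print(np.round(Lam, 6))
```

The eigenvalues come out at approximately 6.594 and 0.379; their sum equals the trace $3.043+3.930=6.973$ and their product equals $\det{\bf\Sigma}_3$.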