The goal of regression analysis is to model the relationship between a dependent variable $y$, typically a scalar, and a set of independent variables or predictors $\{x_1,\cdots,x_d\}$, represented as a column vector ${\bf x}=[x_1,\cdots,x_d]^T$ in a $d$-dimensional space. Here both $y$ and the components in ${\bf x}$ take numerical values.

Regression can be considered as a supervised learning method that learns the essential relationship between the dependent and independent variables, based on the training dataset containing $N$ observed data samples

$$
{\cal D}=\{({\bf x}_n,\,y_n),\;\;n=1,\cdots,N\} \tag{99}
$$

where each ${\bf x}_n$ is labeled by $y_n$, the ground truth value corresponding to ${\bf x}_n$. We also represent the training set as ${\cal D}=\{{\bf X},{\bf y}\}$ in terms of a $d\times N$ matrix ${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$ containing the $N$ sample points and an $N$-dimensional vector ${\bf y}=[y_1,\cdots,y_N]^T$ for their labelings.
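As a minimal sketch of this notation, the snippet below arranges a small made-up dataset into the $d\times N$ matrix ${\bf X}$ and the $N$-dimensional vector ${\bf y}$. The sample values and the variable name `samples` are illustrative assumptions, not data from the text.

```python
import numpy as np

# Hypothetical toy dataset: N = 5 samples (x_n, y_n) in a d = 2 dimensional space.
samples = [
    ((1.0, 2.0), 3.1),
    ((2.0, 0.5), 2.4),
    ((0.0, 1.5), 1.6),
    ((3.0, 3.0), 6.2),
    ((1.5, 1.0), 2.4),
]

# X stacks the sample points x_n as columns, giving a d x N matrix.
X = np.array([x for x, _ in samples]).T
# y collects the labels y_n into an N-dimensional vector.
y = np.array([label for _, label in samples])

d, N = X.shape
print(d, N)      # 2 5
print(X[:, 0])   # the first sample point x_1
```

With this layout, the $n$-th column `X[:, n-1]` is the sample ${\bf x}_n$ and `y[n-1]` is its label $y_n$.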

More specifically, a regression algorithm is to model the relationship between the independent variable ${\bf x}$ and the dependent variable $y$ by a hypothesized regression function $\hat{y}=f({\bf x},{\bf\theta})$, containing a set of parameters symbolically denoted by ${\bf\theta}=[\theta_1,\cdots,\theta_M]^T$. Geometrically this regression function represents a curve if $d=1$, a surface if $d=2$, or a hypersurface if $d>2$. Typically, the form of the function $f({\bf x},{\bf\theta})$ (e.g., linear, polynomial, or exponential) is assumed to be known based on prior knowledge, while the parameter ${\bf\theta}$ is to be estimated by the regression algorithm, so that the predicted value $\hat{y}$ matches the ground truth $y$ optimally in some sense, without being affected by the inevitable observation noise in the data. In other words, a regression algorithm should neither overfit nor underfit the data.
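To make the idea of a hypothesized function form concrete, here is a rough sketch of the three forms mentioned above written as functions of ${\bf x}$ and ${\bf\theta}$. The function names and the particular parameterizations are assumptions chosen for illustration; other parameterizations are equally valid.

```python
import numpy as np

def f_linear(x, theta):
    """Linear form: theta[0] + theta[1:] . x, so M = d + 1 parameters."""
    x = np.asarray(x, dtype=float)
    return theta[0] + np.dot(theta[1:], x)

def f_polynomial(x, theta):
    """Polynomial form for a scalar x (d = 1): sum over m of theta[m] * x**m."""
    return sum(t * x**m for m, t in enumerate(theta))

def f_exponential(x, theta):
    """Exponential form for a scalar x (d = 1): theta[0] * exp(theta[1] * x)."""
    return theta[0] * np.exp(theta[1] * x)

theta_lin = np.array([1.0, 2.0, -1.0])
y_hat = f_linear([3.0, 4.0], theta_lin)   # 1 + 2*3 - 1*4 = 3.0
print(y_hat)
```

In each case the form of the function is fixed in advance, and only the values in `theta` would be estimated from the data.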

Regression analysis can also be interpreted as system modeling/identification, when the independent variable ${\bf x}$ and the dependent variable $y$ are treated respectively as the input (stimuli) and output (responses) of a system, the behavior of which is described by the relationship between such input and output modeled by the regression function $f({\bf x},{\bf\theta})$.

Regression analysis is also closely related to pattern recognition/classification, when the independent vector variable ${\bf x}$ in the data is treated as a set of features that characterize a pattern or object of interest, and the corresponding dependent variable $y$ is treated as a categorical label indicating to which of a set of $K$ classes $\{C_1,\cdots,C_K\}$ a pattern ${\bf x}$ belongs. In this case, the modeling process of the relationship between ${\bf x}$ and $y$ becomes supervised pattern classification or recognition, to be discussed in a later chapter.

In general the regression problem can be addressed based on different philosophical viewpoints. In the frequentist point of view, the unknown model parameters in ${\bf\theta}$ are fixed deterministic variables that can be estimated based on the observed data, and a typical method based on this viewpoint is the least squares method.

Alternatively, in the Bayesian point of view, the model parameters in ${\bf\theta}$ are random variables. Their prior probability distribution $p({\bf\theta})$ before any data are observed can be estimated based on some prior knowledge. If no such prior knowledge is available, $p({\bf\theta})$ can be simply a uniform distribution, i.e., all possible values of ${\bf\theta}$ are equally likely. Once the training set ${\cal D}$ becomes available, we can further get the posterior probability $p({\bf\theta}|{\cal D})$ based on Bayes' theorem:

$$
p({\bf\theta}|{\cal D})=\frac{p({\cal D}|{\bf\theta})\,p({\bf\theta})}{p({\cal D})}
\propto p({\cal D}|{\bf\theta})\,p({\bf\theta}) \tag{100}
$$

In general, the posterior is a narrower distribution than the prior, and therefore a more accurate description of the model parameters. The output $\hat{y}=f({\bf x},{\bf\theta})$ of such a regression model based on Bayesian inference is no longer a deterministic value, but a random variable described by its mean and variance.
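The narrowing of the posterior relative to the prior can be illustrated numerically. The following is only a sketch under stated assumptions: a hypothetical one-parameter model $y=\theta x$, Gaussian observation noise with known standard deviation `sigma`, and a uniform prior over a finite grid of candidate values `theta_grid`. None of these choices come from the text.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical one-parameter model y = theta * x with Gaussian noise of known sigma.
theta_true, sigma = 2.0, 0.5
x = np.linspace(0.0, 1.0, 20)
y = theta_true * x + rng.normal(0.0, sigma, size=x.size)

# Candidate parameter values and a uniform prior over them.
theta_grid = np.linspace(-5.0, 5.0, 2001)
prior = np.full(theta_grid.size, 1.0 / theta_grid.size)

# Log likelihood of the data for each candidate theta (additive constants dropped).
residuals = y[None, :] - theta_grid[:, None] * x[None, :]
log_lik = -0.5 * np.sum(residuals**2, axis=1) / sigma**2

# Bayes' theorem: posterior is proportional to likelihood times prior.
unnormalized = np.exp(log_lik - log_lik.max()) * prior
posterior = unnormalized / unnormalized.sum()

def mean_std(p):
    """Mean and standard deviation of a discrete distribution over theta_grid."""
    m = np.sum(theta_grid * p)
    return m, np.sqrt(np.sum((theta_grid - m) ** 2 * p))

prior_mean, prior_std = mean_std(prior)
post_mean, post_std = mean_std(posterior)
print(prior_std, post_std)   # the posterior spread is much smaller than the prior spread
```

Subtracting `log_lik.max()` before exponentiating is a numerical safeguard against underflow and does not change the normalized posterior.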

Based on either of these two viewpoints, different regression algorithms can be used to find the parameters ${\bf\theta}$ for the model function $f({\bf x},{\bf\theta})$ to fit the observed dataset ${\cal D}=\{{\bf X},{\bf y}\}$ in some optimal way based on different criteria, as shown below.

- Least squares (LS) method:

This frequentist method measures how well the regression function models the training data by the residual $r_n=y_n-f({\bf x}_n,{\bf\theta})$, defined as the difference between the model prediction $\hat{y}_n=f({\bf x}_n,{\bf\theta})$ and the ground truth labeling value $y_n$ corresponding to ${\bf x}_n$, for each of the $N$ data points in the observed data:

$$
{\bf r}({\bf\theta})
=\left[\begin{array}{c}r_1\\ \vdots\\ r_N\end{array}\right]
=\left[\begin{array}{c}y_1-f({\bf x}_1,{\bf\theta})\\ \vdots\\ y_N-f({\bf x}_N,{\bf\theta})\end{array}\right]
={\bf y}-\hat{\bf y} \tag{101}
$$

Ideally, the residual should be zero, ${\bf r}({\bf\theta})={\bf 0}$, and we can find the $M$ model parameters by solving this system of $N$ equations of $M$ unknowns in ${\bf\theta}$. However, as in general $N>M$, this system is overconstrained without a solution. We therefore can only use the least squares method to find the optimal solution that minimizes the sum of squared errors (SSE):

$$
\varepsilon({\bf\theta})=\frac{1}{2}\sum_{n=1}^N r_n^2
=\frac{1}{2}||{\bf r}||^2
=\frac{1}{2}\sum_{n=1}^N \left(y_n-f({\bf x}_n,{\bf\theta})\right)^2 \tag{102}
$$

Here the coefficient $1/2$ is included for mathematical convenience. When divided by the total number of samples $N$, this SSE becomes the average of all $N$ squared errors, i.e., the mean squared error (MSE).

- Maximum likelihood estimate (MLE):

This Bayesian inference method measures how well the regression function models the training data ${\cal D}$ in terms of the likelihood $L({\bf\theta}|{\cal D})$ of the model parameter ${\bf\theta}$ based on the observed dataset ${\cal D}$, which is proportional to the conditional probability of ${\cal D}$ given ${\bf\theta}$:

$$
L({\bf\theta}|{\cal D})\propto p({\cal D}|{\bf\theta}) \tag{103}
$$

As we only need to maximize the likelihood, the proportionality is not a concern. The optimal model parameters in ${\bf\theta}$ can be found as those that maximize the likelihood function, or equivalently the log likelihood function, for computational convenience:

$$
{\bf\theta}^*=\arg\max_{\bf\theta} L({\bf\theta}|{\cal D})
=\arg\max_{\bf\theta} \log L({\bf\theta}|{\cal D}) \tag{104}
$$

Typically the residual $r=y-f({\bf x},{\bf\theta})$ is assumed to be a zero-mean random variable with a normal probability density function (pdf):

$$
p(r)={\cal N}(r;\,0,\sigma^2)=\frac{1}{\sqrt{2\pi\sigma^2}}\exp\left(-\frac{r^2}{2\sigma^2}\right)
$$

The justification for this assumed normal pdf is that it has the greatest entropy or maximum uncertainty among all possible pdfs with the same variance, i.e., based on $\sigma^2$ only, such an assumption of a normal pdf imposes the least amount of constraint and thereby minimum bias.

The zero-mean pdf of $r=y-f({\bf x},{\bf\theta})$ can also be considered as the pdf of $y$ with mean $f({\bf x},{\bf\theta})$, i.e., the conditional pdf $p(y|{\bf x},{\bf\theta})={\cal N}(y;\,f({\bf x},{\bf\theta}),\sigma^2)$ of $y$ given ${\bf x}$ as well as ${\bf\theta}$, based on the assumption that ${\bf x}$ and $y$ are indeed related by $y=f({\bf x},{\bf\theta})$. Then we can find the likelihood of the model parameter ${\bf\theta}$ given the $N$ samples in the training set, all assumed to be independent and identically distributed (i.i.d.):

$$
L({\bf\theta}|{\cal D})\propto p({\bf y}|{\bf X},{\bf\theta})
=\prod_{n=1}^N p(y_n|{\bf x}_n,{\bf\theta})
=\prod_{n=1}^N \frac{1}{\sqrt{2\pi\sigma^2}}
\exp\left(-\frac{(y_n-f({\bf x}_n,{\bf\theta}))^2}{2\sigma^2}\right)
$$

We can maximize this likelihood or, equivalently, minimize the negative log likelihood function:

$$
-\log p({\bf y}|{\bf X},{\bf\theta})
=\frac{N}{2}\log(2\pi\sigma^2)
+\frac{1}{\sigma^2}\,\frac{1}{2}\sum_{n=1}^N \left(y_n-f({\bf x}_n,{\bf\theta})\right)^2
$$

The first term and the coefficient $1/\sigma^2$ in the second term are both independent of ${\bf\theta}$ and can therefore be dropped. We note that what remains is the sum of squared errors of the least squares method, i.e., we can find the optimal ${\bf\theta}$ by either maximizing the likelihood $L({\bf\theta}|{\cal D})$, or equivalently minimizing the sum of squared errors $\varepsilon({\bf\theta})$.

- Maximum A Posteriori (MAP):

This is also a Bayesian inference method that measures how well the regression function models the training data by the posterior probability $p({\bf\theta}|{\cal D})$ of the parameter ${\bf\theta}$ given ${\cal D}$ in Eq. (100), proportional to the product of the likelihood $L({\bf\theta}|{\cal D})\propto p({\cal D}|{\bf\theta})$ and the prior $p({\bf\theta})$. If no prior knowledge about ${\bf\theta}$ is available, then $p({\bf\theta})$ is a uniform distribution and MAP is equivalent to MLE. However, if certain prior knowledge regarding ${\bf\theta}$ does exist, and the prior is not uniform, then the posterior better characterizes ${\bf\theta}$ than the likelihood, and MAP may produce a better result than MLE.
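The relationship among the three criteria above can be checked numerically on a small example. The sketch below is an illustration under several assumptions that are not part of the text: a linear model with $M=2$ parameters, synthetic data with a known noise level `sigma`, and, for MAP, a zero-mean Gaussian prior ${\bf\theta}\sim{\cal N}({\bf 0},\tau^2{\bf I})$. The names `A`, `sse`, `neg_log_likelihood`, and `map_estimate` are hypothetical helpers.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data: y = theta_0 + theta_1 * x plus zero-mean Gaussian noise.
N, sigma = 50, 0.3
theta_true = np.array([0.5, -2.0])
x = rng.uniform(-1.0, 1.0, size=N)
A = np.column_stack([np.ones(N), x])   # N x M matrix with M = 2 < N
y = A @ theta_true + rng.normal(0.0, sigma, size=N)

def sse(theta):
    """Sum of squared errors, including the factor 1/2."""
    r = y - A @ theta                  # residual vector r = y - y_hat
    return 0.5 * r @ r

def neg_log_likelihood(theta):
    """Negative log likelihood for i.i.d. zero-mean Gaussian residuals."""
    return 0.5 * N * np.log(2.0 * np.pi * sigma**2) + sse(theta) / sigma**2

def map_estimate(tau):
    """MAP estimate under an assumed prior theta ~ N(0, tau^2 I)."""
    lam = sigma**2 / tau**2
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ y)

# LS: the system A theta = y is overconstrained, so minimize the SSE instead.
theta_ls, *_ = np.linalg.lstsq(A, y, rcond=None)

# MLE: the negative log likelihood is the SSE scaled by 1/sigma^2 plus a
# constant, so theta_ls minimizes it as well.
nll_at_ls = neg_log_likelihood(theta_ls)
nll_nearby = neg_log_likelihood(theta_ls + np.array([0.01, -0.01]))

# MAP: a very broad (nearly uniform) prior reproduces the LS/MLE solution,
# while a narrower prior pulls the estimate toward the prior mean of zero.
theta_map_flat = map_estimate(tau=1e6)
theta_map = map_estimate(tau=1.0)

print(theta_ls, theta_map_flat, theta_map)
print(nll_at_ls < nll_nearby)
```

The closed form used in `map_estimate` follows from adding the negative log of the assumed Gaussian prior, $||{\bf\theta}||^2/2\tau^2$, to the negative log likelihood. A different prior would lead to a different MAP estimate.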

We see that regression analysis can be treated as an optimization

problem, in which either the sum of squares error is minimized or

the likelihood function is maximized. Also, the optimization problem

is in general over-determined, as there are typically many more

observed data points in the training data than the number of unknown

parameters. Algorithms based on these different methods are to be

considered in detail in later sections in this chapter.

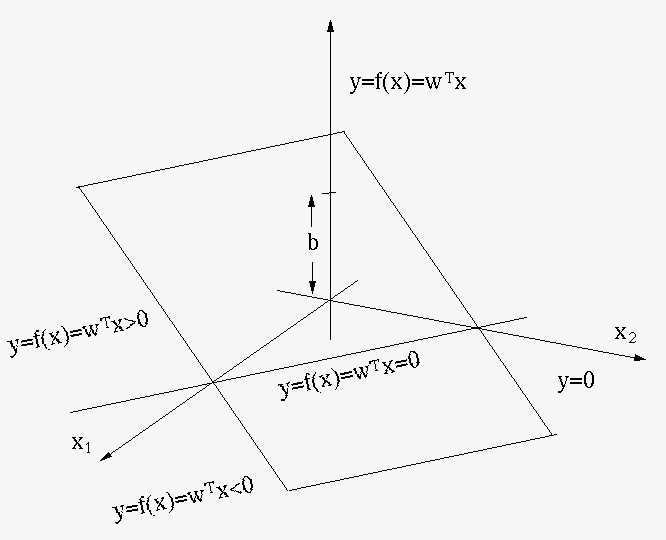

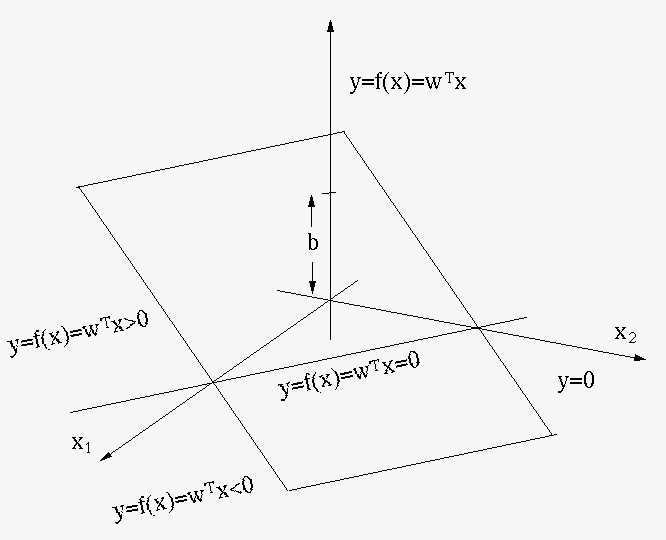

We further note that regression analysis can be considered as a binary classification, when the regression function $y=f({\bf x},{\bf\theta})$, as a hypersurface in a $d+1$ dimensional space spanned by $\{x_1,\cdots,x_d,y\}$, is thresholded by a constant $C$ (e.g., $C=0$). The resulting equation $f({\bf x},{\bf\theta})=C$ defines a hypersurface in the $d$ dimensional space spanned by $\{x_1,\cdots,x_d\}$, which partitions the space into two parts in such a way that all points on one side of the hypersurface satisfy $f({\bf x},{\bf\theta})>C$, while all points on the other side satisfy $f({\bf x},{\bf\theta})<C$. In other words, the regression function is a binary classifier that separates every point ${\bf x}$ in the $d$ dimensional space into two classes $C_+$ and $C_-$, depending on whether $f({\bf x},{\bf\theta})$ is greater or smaller than $C$. Now each $y_n$ corresponding to ${\bf x}_n$ in the given dataset ${\cal D}$ can be treated as a label indicating that ${\bf x}_n$ belongs to class $C_+$ if $y_n>C$, or $C_-$ if $y_n<C$, and the regression problem becomes a binary classification problem.
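The thresholding described above can be sketched in a few lines. This example assumes a linear regression function with hand-picked parameters and a threshold of zero. The names `f` and `classify`, the parameter values, and the encoding of the two classes as $+1$ and $-1$ are all illustrative assumptions.

```python
import numpy as np

def f(X, theta):
    """Hypothetical linear regression function applied to each column of the d x N matrix X."""
    return theta[0] + theta[1:] @ X

def classify(X, theta, C=0.0):
    """Return +1 where f(x) > C and -1 where f(x) < C (0 for points exactly on the boundary)."""
    return np.sign(f(X, theta) - C).astype(int)

theta = np.array([-1.0, 1.0, 1.0])   # with C = 0 the boundary is the line x_1 + x_2 = 1
X = np.array([[2.0, 0.0, 0.5],       # columns are the points (2, 2), (0, 0), (0.5, 0.5)
              [2.0, 0.0, 0.5]])
print(classify(X, theta))            # [ 1 -1  0]
```

The third point lies exactly on the hypersurface $f({\bf x})=C$, so it belongs to neither side and is mapped to 0 here.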
