The golden section search algorithm can be used for finding a minimum (or

maximum) of a single-variable function  . If it is known that the

function has a minimum between some two points, then we can search for it

iteratively, in a manner similar to the bisection search for the root of

an equation

. If it is known that the

function has a minimum between some two points, then we can search for it

iteratively, in a manner similar to the bisection search for the root of

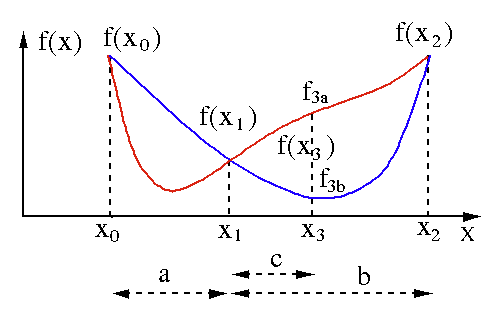

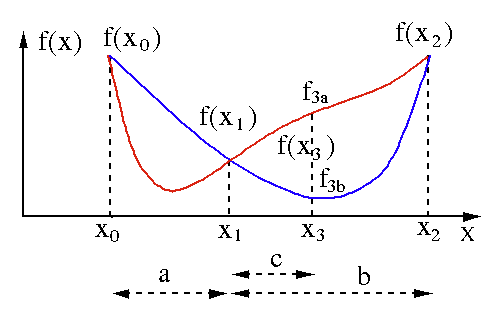

an equation  . Specifically, if in the neighborhood of the minimum

we can find three points

. Specifically, if in the neighborhood of the minimum

we can find three points

corresponding to

corresponding to

,

then there exists a minimum between

,

then there exists a minimum between  and

and  . To search for this

minimum, we can choose another point

. To search for this

minimum, we can choose another point  between

between  and

and  as shown

in the figure below, with the following two possible outcomes:

as shown

in the figure below, with the following two possible outcomes:

- If

, the minimum is inside the interval

, the minimum is inside the interval

associated with three new points

associated with three new points

, i.e.,

, i.e.,  is replaced by

is replaced by

.

.

- If

, the minimum is inside the interval

, the minimum is inside the interval  associated with three new points

associated with three new points

, i.e.,

, i.e.,  is replaced by

is replaced by

.

.

In either case, the new search interval

or

or  is

smaller than the old one

is

smaller than the old one

, i.e., such an iteration is guaranteed

to converge. Of course

, i.e., such an iteration is guaranteed

to converge. Of course  can also be between

can also be between  and

and  , the

iteration can be similarly carried out accordingly.

, the

iteration can be similarly carried out accordingly.

Similar to the bisection search for the root of  , here to search

for the minimum of

, here to search

for the minimum of  , we also want to avoid the worst possible case

in which the minimum always happens to be in the larger one of the two

sections

, we also want to avoid the worst possible case

in which the minimum always happens to be in the larger one of the two

sections  and

and  . We therefore choose

. We therefore choose  in such a way that the

two resulting search intervals will always be the same:

in such a way that the

two resulting search intervals will always be the same:

i.e. i.e. |

(10) |

In other words, through out the iteration, the search interval should

always be partitioned into two sections with the same ratio:

or or |

(11) |

In either case, we get

|

(12) |

Solving this quadratic equation for  in terms of

in terms of  we get

we get

|

(13) |

We keep the positive result and get

|

(14) |

This is the golden ratio between the two sections  and

and  of

the search interval.

of

the search interval.

The iteration of the golden section search can terminate when the size

of the search brackets  is small enough, compared with some

tolerance, such as

is small enough, compared with some

tolerance, such as  , pre-determined based on some prior knowledge

of the scale of the problem. However, this scale may be unknown, or it may

vary drastically, and an absolute tolerance may be too large for a small

scale problem but too small for a large scale problem. This problem can

be resolved by using a relative tolerance with a scale comparable with

that of the size of the problem:

, pre-determined based on some prior knowledge

of the scale of the problem. However, this scale may be unknown, or it may

vary drastically, and an absolute tolerance may be too large for a small

scale problem but too small for a large scale problem. This problem can

be resolved by using a relative tolerance with a scale comparable with

that of the size of the problem:

|

(15) |

where  is a small value such as

is a small value such as  , and

, and  represents the size of the search interval and

represents the size of the search interval and

represents

the scale of the problem. When the inequality is satisfied, the iteration

is terminated.

represents

the scale of the problem. When the inequality is satisfied, the iteration

is terminated.

It is possible that the three initial points chosen are such that either

or

or

, then we have to search

toward the descending direction of

, then we have to search

toward the descending direction of  until it starts to ascend.

For example, if

until it starts to ascend.

For example, if

, they can be iteratively updated

by the following

, they can be iteratively updated

by the following

|

(16) |

where  (e.g.,

(e.g.,  ), until we reach a point

), until we reach a point  at which

at which

. Obviously the distance

. Obviously the distance  grows exponentially

grows exponentially

.

.

, the minimum is inside the interval

, the minimum is inside the interval

associated with three new points

associated with three new points

, i.e.,

, i.e.,  is replaced by

is replaced by

.

.

, the minimum is inside the interval

, the minimum is inside the interval  associated with three new points

associated with three new points

, i.e.,

, i.e.,  is replaced by

is replaced by

.

.

or

or  is

smaller than the old one

is

smaller than the old one

, i.e., such an iteration is guaranteed

to converge. Of course

, i.e., such an iteration is guaranteed

to converge. Of course  can also be between

can also be between  and

and  , the

iteration can be similarly carried out accordingly.

, the

iteration can be similarly carried out accordingly.

i.e.

i.e.

or

or

in terms of

in terms of  we get

we get

and

and  of

the search interval.

of

the search interval.

is a small value such as

is a small value such as  , and

, and  represents the size of the search interval and

represents the size of the search interval and

represents

the scale of the problem. When the inequality is satisfied, the iteration

is terminated.

represents

the scale of the problem. When the inequality is satisfied, the iteration

is terminated.

(e.g.,

(e.g.,  ), until we reach a point

), until we reach a point  at which

at which

. Obviously the distance

. Obviously the distance  grows exponentially

grows exponentially

.

.