
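The basic problem in linear programming (LP) is to minimize or maximize a linear objective function of $N$ variables $x_1,\dots,x_N$, subject to $M$ linear inequality constraints:

$$\max_{x_1,\dots,x_N}\; f(x_1,\dots,x_N)=\sum_{j=1}^N c_jx_j\quad\text{s.t.}\quad \sum_{j=1}^N a_{ij}x_j\le b_i\;\;(i=1,\dots,M),\qquad x_j\ge 0\;\;(j=1,\dots,N) \tag{209}$$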

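The LP problem can be more concisely represented in matrix form (inequalities between vectors hold component-wise):

$$\max_{\bf x}\;{\bf c}^T{\bf x}\quad\text{s.t.}\quad {\bf A}{\bf x}\le{\bf b},\quad {\bf x}\ge{\bf 0} \tag{210}$$

where

$${\bf x}=\left[\begin{array}{c}x_1\\ \vdots\\ x_N\end{array}\right],\;\; {\bf c}=\left[\begin{array}{c}c_1\\ \vdots\\ c_N\end{array}\right],\;\; {\bf b}=\left[\begin{array}{c}b_1\\ \vdots\\ b_M\end{array}\right],\;\; {\bf A}=\left[\begin{array}{ccc}a_{11}&\cdots&a_{1N}\\ \vdots & \ddots & \vdots \\ a_{M1}&\cdots&a_{MN}\end{array}\right] \tag{211}$$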

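For example, the objective function could be the total profit, and the constraints could be limits on some resources. Specifically, $x_j$ could be the quantity of the $j$th product, $c_j$ its profit per unit, $b_i$ the available amount of the $i$th resource, and $a_{ij}$ the amount of the $i$th resource consumed per unit of the $j$th product.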
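The matrix form (210) translates directly into code. The sketch below, with hypothetical helper names `objective` and `is_feasible` and made-up numbers for two products sharing two resources, evaluates ${\bf c}^T{\bf x}$ and checks ${\bf A}{\bf x}\le{\bf b}$, ${\bf x}\ge{\bf 0}$ component-wise for one candidate plan:

```python
from typing import Sequence


def objective(c: Sequence[float], x: Sequence[float]) -> float:
    """Linear objective f(x) = c^T x."""
    return sum(cj * xj for cj, xj in zip(c, x))


def is_feasible(A: Sequence[Sequence[float]], b: Sequence[float],
                x: Sequence[float], tol: float = 1e-9) -> bool:
    """Check x >= 0 and A x <= b row by row, up to a small tolerance."""
    if any(xj < -tol for xj in x):
        return False
    return all(sum(aij * xj for aij, xj in zip(row, x)) <= bi + tol
               for row, bi in zip(A, b))


# Hypothetical instance: 2 products, 2 resources (numbers made up)
A = [[1.0, 2.0],   # amount of resource 1 used per unit of each product
     [3.0, 1.0]]   # amount of resource 2 used per unit of each product
b = [8.0, 9.0]     # available amount of each resource
c = [5.0, 4.0]     # profit per unit of each product
x = [2.0, 3.0]     # a candidate production plan

print(is_feasible(A, b, x), objective(c, x))
```

Here both resources are used up exactly ($2+6=8$, $6+3=9$), so the plan is feasible with profit 22. The tolerance guards against floating-point rounding on tight constraints.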

Here all variables are assumed to be non-negative. If a variable is not restricted in sign, it can be eliminated: we solve one of the equality constraints for that variable and use the resulting expression to replace all of its appearances in the problem. For example:

(212)

Solving the equality constraint for the unrestricted variable, we get … . Substituting this into the objective function and the other constraint, we get … and … , and the problem can be reformulated as:

(213)
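The substitution step can be sketched generically. The hypothetical function `eliminate_free_variable` below (not from the text) assumes one equality constraint `eq_row . x = eq_rhs` that contains the unrestricted variable $x_k$ with a non-zero coefficient, plus a set of inequality constraints; it uses exact `Fraction` arithmetic to avoid rounding:

```python
from fractions import Fraction


def eliminate_free_variable(c, eq_row, eq_rhs, ineq_rows, ineq_rhs, k):
    """Eliminate the unrestricted variable x_k using eq_row . x = eq_rhs.

    Returns (new_c, const, new_rows, new_rhs) over the remaining variables:
    objective new_c . x' + const, constraints new_rows x' <= new_rhs.
    """
    ak = Fraction(eq_row[k])
    if ak == 0:
        raise ValueError("x_k does not appear in the equality constraint")
    keep = [j for j in range(len(c)) if j != k]
    # x_k = eq_rhs / a_k - sum_{j != k} (a_j / a_k) x_j
    ratio = [Fraction(eq_row[j]) / ak for j in keep]
    xk_const = Fraction(eq_rhs) / ak
    # Substitute x_k into the objective.
    new_c = [Fraction(c[j]) - Fraction(c[k]) * r for j, r in zip(keep, ratio)]
    const = Fraction(c[k]) * xk_const
    # Substitute x_k into every remaining inequality constraint.
    new_rows, new_rhs = [], []
    for row, h in zip(ineq_rows, ineq_rhs):
        new_rows.append([Fraction(row[j]) - Fraction(row[k]) * r
                         for j, r in zip(keep, ratio)])
        new_rhs.append(Fraction(h) - Fraction(row[k]) * xk_const)
    return new_c, const, new_rows, new_rhs


# Hypothetical problem: max x1 + 2 x2 + 3 x3
#   s.t. x1 + x2 + x3 = 4,  x1 - x3 <= 1,  x1, x2 >= 0,  x3 unrestricted
new_c, const, rows, rhs = eliminate_free_variable(
    c=[1, 2, 3], eq_row=[1, 1, 1], eq_rhs=4,
    ineq_rows=[[1, 0, -1]], ineq_rhs=[1], k=2)
print(new_c, const, rows, rhs)
```

For this made-up problem, substituting $x_3=4-x_1-x_2$ gives the reduced problem $\max\,-2x_1-x_2+12$ subject to $2x_1+x_2\le 5$, $x_1,x_2\ge 0$, in which every variable is again non-negative.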

Given the primal LP problem above, we can further find its dual problem. We first construct the Lagrangian of the primal problem:

$$L({\bf x},{\bf y})={\bf c}^T{\bf x}-{\bf y}^T({\bf A}{\bf x}-{\bf b}) \tag{214}$$

where ${\bf y}=[y_1,\cdots,y_M]^T$ contains the Lagrange multipliers for the inequality constraints ${\bf A}{\bf x}\le{\bf b}$, and ${\bf y}\ge{\bf 0}$ according to Table 188. We define the dual function as the maximum of $L({\bf x},{\bf y})$ over ${\bf x}$:

$$f_d({\bf y})=\max_{\bf x} L({\bf x},{\bf y})=\max_{\bf x}\left[{\bf c}^T{\bf x}-{\bf y}^T({\bf A}{\bf x}-{\bf b})\right]=\max_{\bf x}\left[({\bf c}-{\bf A}^T{\bf y})^T{\bf x}+{\bf y}^T{\bf b}\right] \tag{215}$$

To find the ${\bf x}$ that maximizes $L({\bf x},{\bf y})$, we set its gradient to zero and get:

$$\bigtriangledown_{\bf x}L({\bf x},{\bf y})=\bigtriangledown_{\bf x}\left[({\bf c}-{\bf A}^T{\bf y})^T{\bf x}+{\bf y}^T{\bf b}\right]={\bf c}-{\bf A}^T{\bf y}={\bf 0} \tag{216}$$

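Substituting this back into Eq. (215), we get $f_d({\bf y})={\bf y}^T{\bf b}={\bf b}^T{\bf y}$, which is an upper bound of the primal objective $f_p({\bf x})={\bf c}^T{\bf x}$ under the constraint ${\bf A}^T{\bf y}\ge{\bf c}$, so that $({\bf c}-{\bf A}^T{\bf y})^T{\bf x}$ contributes negatively to $L({\bf x},{\bf y})$ (as ${\bf x}\ge{\bf 0}$). Because $L$ is linear in ${\bf x}\ge{\bf 0}$, its maximum over ${\bf x}$ is finite only if ${\bf c}-{\bf A}^T{\bf y}\le{\bf 0}$, in which case it equals ${\bf b}^T{\bf y}$. For any primal-feasible ${\bf x}$ and any ${\bf y}\ge{\bf 0}$ satisfying this constraint:

$$f_p({\bf x})={\bf c}^T{\bf x}\le{\bf c}^T{\bf x}+{\bf y}^T({\bf b}-{\bf A}{\bf x})=({\bf c}-{\bf A}^T{\bf y})^T{\bf x}+{\bf b}^T{\bf y}\le{\bf b}^T{\bf y}=f_d({\bf y}) \tag{217}$$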
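The bound in Eq. (217) can be checked numerically. The sketch below (hypothetical function name `dual_function`, made-up data) evaluates $f_d({\bf y})=\max_{{\bf x}\ge{\bf 0}}L({\bf x},{\bf y})$ directly, assuming ${\bf y}\ge{\bf 0}$: it returns $+\infty$ when some component of ${\bf c}-{\bf A}^T{\bf y}$ is positive, and ${\bf b}^T{\bf y}$ otherwise.

```python
import math


def dual_function(A, b, c, y):
    """f_d(y) = max over x >= 0 of (c - A^T y)^T x + y^T b, assuming y >= 0.

    If some component of c - A^T y is positive, the matching x_j can grow
    without bound, so f_d(y) = +inf; otherwise the maximum is at x = 0
    and f_d(y) = b^T y.
    """
    M, N = len(A), len(c)
    reduced = [c[j] - sum(A[i][j] * y[i] for i in range(M)) for j in range(N)]
    if any(r > 0 for r in reduced):
        return math.inf
    return sum(bi * yi for bi, yi in zip(b, y))


# Hypothetical primal: max 5 x1 + 4 x2  s.t.  x1 + 2 x2 <= 8,  3 x1 + x2 <= 9
A = [[1, 2], [3, 1]]
b = [8, 9]
c = [5, 4]
print(dual_function(A, b, c, [1, 1]))   # A^T y = [4, 3] does not cover c
print(dual_function(A, b, c, [1, 2]))   # A^T y = [7, 4] >= c, so b^T y
```

For this instance the feasible plan ${\bf x}=[2,\;3]^T$ gives ${\bf c}^T{\bf x}=22$, which is indeed below $f_d([1,\;2]^T)=26$.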

We now minimize $f_d({\bf y})={\bf b}^T{\bf y}$ with respect to ${\bf y}$ so that it becomes the tightest upper bound; i.e., the original primal problem of maximization in Eq. (210) is converted into the following dual problem of minimization:

$$\min_{\bf y}\;{\bf b}^T{\bf y}\quad\text{s.t.}\quad {\bf A}^T{\bf y}\ge{\bf c},\quad {\bf y}\ge{\bf 0} \tag{218}$$

or, in component form,

$$\min_{y_1,\dots,y_M}\;\sum_{i=1}^M b_iy_i\quad\text{s.t.}\quad \sum_{i=1}^M a_{ij}y_i\ge c_j\;\;(j=1,\dots,N),\qquad y_i\ge 0\;\;(i=1,\dots,M) \tag{219}$$
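Forming the dual in Eq. (218) amounts to swapping the roles of ${\bf b}$ and ${\bf c}$ and transposing ${\bf A}$. The sketch below uses a hypothetical helper `dual_lp` and made-up data, and checks the weak-duality inequality ${\bf c}^T{\bf x}\le{\bf b}^T{\bf y}$ for one primal-feasible and one dual-feasible point:

```python
def dual_lp(c, A, b):
    """Primal  max c^T x  s.t. A x <= b,   x >= 0   ->
    dual       min b^T y  s.t. A^T y >= c, y >= 0.
    Returns the dual's (objective, constraint matrix, right-hand side)."""
    At = [list(col) for col in zip(*A)]   # transpose: N x M
    return list(b), At, list(c)


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


# Hypothetical primal data
A = [[1, 2], [3, 1]]
b = [8, 9]
c = [5, 4]
obj_d, At, rhs_d = dual_lp(c, A, b)

x = [2, 3]   # primal feasible: A x = [8, 9] <= b, x >= 0
y = [2, 2]   # dual feasible:  A^T y = [8, 6] >= c, y >= 0
print(dot(c, x), "<=", dot(obj_d, y))   # weak duality: c^T x <= b^T y
```

This prints `22 <= 34`: any dual-feasible ${\bf y}$ bounds every primal-feasible objective value from above, and minimizing over ${\bf y}$ tightens that bound.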

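If either the primal or the dual problem is feasible and bounded, so is the other, and strong duality holds: the two optimal values are equal, ${\bf c}^T{\bf x}^*={\bf b}^T{\bf y}^*$, where ${\bf x}^*$ and ${\bf y}^*$ are the primal and dual solutions.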
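For a small hypothetical instance (not from the text), strong duality can be verified by hand. The optimal points below were found by comparing the objective at every vertex of each feasible region; both optima equal 22:

$$
\begin{aligned}
&\text{Primal: }\max\;5x_1+4x_2\;\;\text{s.t.}\;\;x_1+2x_2\le 8,\;\;3x_1+x_2\le 9,\;\;x_1,x_2\ge 0\\
&\text{Dual: }\min\;8y_1+9y_2\;\;\text{s.t.}\;\;y_1+3y_2\ge 5,\;\;2y_1+y_2\ge 4,\;\;y_1,y_2\ge 0\\
&{\bf x}^*=[2,\;3]^T:\quad {\bf c}^T{\bf x}^*=5(2)+4(3)=22\\
&{\bf y}^*=[7/5,\;6/5]^T:\quad {\bf b}^T{\bf y}^*=8(7/5)+9(6/5)=22
\end{aligned}
$$

Both primal constraints are tight at ${\bf x}^*$ and both dual variables are positive at ${\bf y}^*$, as complementary slackness requires.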

An inequality constrained LP problem can be converted to an equality constrained problem by introducing slack variables:

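$$\sum_{j=1}^N a_{ij}x_j+s_i=b_i,\qquad s_i\ge 0\qquad (i=1,\dots,M) \tag{220}$$

$$\max_{\bf x}\;{\bf c}^T{\bf x}\quad\text{s.t.}\quad {\bf A}{\bf x}={\bf b},\quad {\bf x}\ge{\bf 0} \tag{221}$$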

where ${\bf x}$ is an $(N+M)$-dimensional augmented variable vector that includes the $M$ slack variables $s_1,\cdots,s_M$ as well as the $N$ original variables $x_1,\cdots,x_N$:

$${\bf x}=[x_1,\;x_2,\cdots,x_N,\,s_1,\;s_2,\cdots,s_M]^T \tag{222}$$

and ${\bf A}$ is redefined as an $M\times(N+M)$ augmented coefficient matrix that includes coefficients for both types of variables:

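$${\bf A}=\left[\begin{array}{cccc|cccc}
a_{11} & a_{12} & \cdots & a_{1N} & 1 & 0 & \cdots & 0\\
a_{21} & a_{22} & \cdots & a_{2N} & 0 & 1 & \cdots & 0\\
\vdots & \vdots & \ddots & \vdots & \vdots & \vdots & \ddots & \vdots\\
a_{M1} & a_{M2} & \cdots & a_{MN} & 0 & 0 & \cdots & 1
\end{array}\right]_{M\times (N+M)}=[\;{\bf A}_{M\times N}\;|\;{\bf I}_{M\times M}\;] \tag{223}$$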
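The augmentation in Eqs. (220) to (223) can be sketched as follows, assuming plain Python lists for matrices; the helper name `augment_with_slacks` and the data are hypothetical. The slack values are $s_i=b_i-\sum_j a_{ij}x_j$, so a point satisfies ${\bf A}{\bf x}\le{\bf b}$ exactly when all its slacks are non-negative:

```python
def augment_with_slacks(A, b, c, x):
    """Build the equality form: A_aug = [A | I], c_aug = [c, 0, ..., 0],
    and the augmented point [x, s] with slacks s_i = b_i - (A x)_i."""
    M = len(A)
    # Append the i-th column of the M x M identity to row i.
    A_aug = [list(row) + [1 if k == i else 0 for k in range(M)]
             for i, row in enumerate(A)]
    # Slack variables do not appear in the objective.
    c_aug = list(c) + [0] * M
    s = [bi - sum(aij * xj for aij, xj in zip(row, x))
         for row, bi in zip(A, b)]
    return A_aug, c_aug, list(x) + s


# Hypothetical data
A = [[1, 2], [3, 1]]
b = [8, 9]
c = [5, 4]
A_aug, c_aug, x_aug = augment_with_slacks(A, b, c, [1, 1])
print(A_aug, c_aug, x_aug)
```

For ${\bf x}=[1,\;1]^T$ the slacks are $[5,\;5]$, and the augmented point satisfies the equality ${\bf A}_{aug}{\bf x}_{aug}={\bf b}$.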

where the $a_{ij}$ in ${\bf A}_{M\times N}$ are the coefficients for the $N$ original variables $x_1,\cdots,x_N$, and the identity matrix ${\bf I}_{M\times M}=[{\bf e}_1,\cdots,{\bf e}_M]$ holds the unit coefficients of the $M$ slack variables $s_1,\cdots,s_M$, each of which appears in only one of the $M$ constraints. Correspondingly, ${\bf c}$ is padded with $M$ zeros, since the slack variables do not enter the objective function.

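Now the LP problem can be expressed in the standard form (the original form on the left, and the form with slack variables on the right):

$$\max_{\bf x}\;{\bf c}^T{\bf x}\;\;\text{s.t.}\;\;{\bf A}{\bf x}\le{\bf b},\;\;{\bf x}\ge{\bf 0}\qquad\text{or}\qquad\max_{\bf x}\;{\bf c}^T{\bf x}\;\;\text{s.t.}\;\;{\bf A}{\bf x}={\bf b},\;\;{\bf x}\ge{\bf 0} \tag{224}$$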

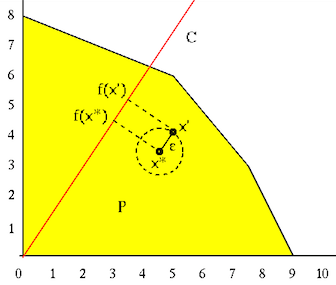

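An LP problem can be viewed geometrically. If we normalize each row ${\bf a}_i=[a_{i1},\cdots,a_{iN}]^T$ of ${\bf A}$ to unit length, $\|{\bf a}_i\|=1$ (dividing $b_i$ by the same positive factor), then each of the $M$ equations below represents a hyper-plane in the N-D space that is perpendicular to ${\bf a}_i$ and at distance $|b_i|$ from the origin:

$${\bf a}_i^T{\bf x}=\sum_{j=1}^N a_{ij}x_j=b_i\qquad (i=1,\dots,M) \tag{225}$$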
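The normalization can be sketched in a few lines, assuming every row of ${\bf A}$ is non-zero; the function name `normalize_rows` and the sample constraint are hypothetical:

```python
import math


def normalize_rows(A, b):
    """Scale each constraint a_i^T x <= b_i by 1/||a_i|| (a positive factor,
    so the inequality direction is unchanged). Afterwards ||a_i|| = 1 and
    |b_i| is the distance from the origin to the hyper-plane a_i^T x = b_i.
    Assumes no row of A is all zeros."""
    A_n, b_n = [], []
    for row, bi in zip(A, b):
        norm = math.sqrt(sum(a * a for a in row))
        A_n.append([a / norm for a in row])
        b_n.append(bi / norm)
    return A_n, b_n


# Hypothetical constraint 3 x1 + 4 x2 <= 10
A_n, b_n = normalize_rows([[3, 4]], [10])
print(A_n, b_n)
```

The line $3x_1+4x_2=10$ becomes $0.6x_1+0.8x_2=2$, so it lies at distance 2 from the origin.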

Each of the $N$ non-negativity conditions $x_j\ge 0$ in ${\bf x}\ge{\bf 0}$ corresponds to a hyper-plane $x_j=0$ perpendicular to the $j$th standard basis vector ${\bf e}_j$. In general, in an N-D space, if the hyper-planes are in general position (any $N$ of them have linearly independent normal vectors, and no $N+1$ of them share a common point), then any $N$ of them intersect at exactly one point, and no more than $N$ hyper-planes intersect at one point. For example, in a 2-D or 3-D space, two straight lines or three planes intersect at a point. Here for the LP problem in the N-D space, the total number of intersection points formed by the $M+N$ hyper-planes is

$$C_{M+N}^N=\binom{M+N}{N}=\frac{(M+N)!}{N!\,M!} \tag{226}$$
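Eq. (226) can be evaluated with Python's `math.comb`; the sketch below also enumerates the index subsets with `itertools.combinations` to confirm the count, and shows how quickly it grows (the function name `num_intersections` is hypothetical):

```python
from itertools import combinations
from math import comb


def num_intersections(M, N):
    """Number of ways to choose N of the M + N hyper-planes; in general
    position each choice meets at exactly one point."""
    return comb(M + N, N)


M, N = 3, 2
print(num_intersections(M, N))                    # candidate points
print(len(list(combinations(range(M + N), N))))   # same count, enumerated
print(num_intersections(50, 50) > 10**29)         # combinatorial growth
```

This prints 10, 10 and `True`: with only 50 variables and 50 constraints there are already more than $10^{29}$ intersection points.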

The polytope enclosed by these $M+N$ hyper-planes is the feasible region of the LP problem …

Fundamental theorem of linear programming

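The optimal solution ${\bf x}^*$ of an LP problem, if it exists, lies on the surface of the feasible polytope $P$, either at one of its vertices or on one of its faces.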

Proof:

Assume the optimal solution ${\bf x}^*$ is an interior point of $P$, …

(227)

(228)

Then ${\bf x}^*$ cannot be the optimal solution as assumed. This contradiction indicates that an optimal solution ${\bf x}^*$ must be on the surface of $P$, either at one of its vertices or on one of its faces. Q.E.D.
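One standard way to spell out the contradiction, assuming ${\bf c}\ne{\bf 0}$ (if ${\bf c}={\bf 0}$ every feasible point is optimal) and writing $\operatorname{int}P$ for the interior of $P$; this may differ in detail from Eqs. (227) and (228):

$$
\begin{aligned}
&{\bf x}^*\in\operatorname{int}P\;\Rightarrow\;\exists\,\epsilon>0:\;{\bf x}^*+\epsilon{\bf c}\in P,\\
&{\bf c}^T({\bf x}^*+\epsilon{\bf c})={\bf c}^T{\bf x}^*+\epsilon\|{\bf c}\|^2>{\bf c}^T{\bf x}^*
\end{aligned}
$$

So a small step from ${\bf x}^*$ in the direction of ${\bf c}$ stays feasible and strictly increases the objective.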

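Based on this theorem, an LP problem could be solved by exhaustively checking each of the $\binom{M+N}{N}$ intersection points: discard those that violate any constraint, and keep the feasible vertex with the largest objective value. Checking the vertices alone suffices, because if the optimum of a bounded problem is attained on a face of $P$, it is also attained at one of the vertices of that face.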

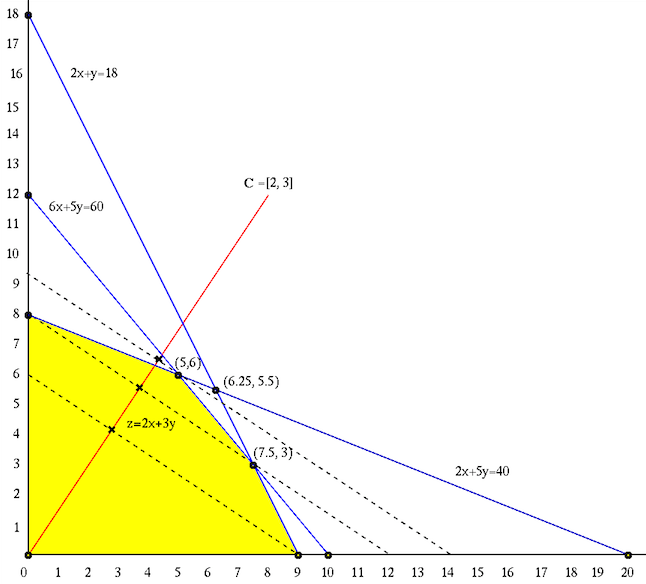
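A minimal sketch of this exhaustive vertex search, assuming the feasible region is a bounded, non-empty polytope (otherwise the result is meaningless or `None`). It illustrates the brute-force idea only, not the simplex algorithm; the names `solve_square` and `brute_force_lp` and the test data are hypothetical. Each choice of $N$ of the $M+N$ hyper-planes is solved exactly with `Fraction` arithmetic, infeasible intersections are discarded, and the best objective value is kept:

```python
from fractions import Fraction
from itertools import combinations


def solve_square(rows, rhs):
    """Solve an n x n linear system exactly (Gauss-Jordan over Fractions).
    Returns None if the matrix is singular."""
    n = len(rows)
    aug = [[Fraction(v) for v in r] + [Fraction(h)] for r, h in zip(rows, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            return None                     # singular: no unique intersection
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def brute_force_lp(A, b, c):
    """Maximize c^T x s.t. A x <= b, x >= 0 by visiting every intersection
    of N of the M + N bounding hyper-planes (assumes a bounded polytope)."""
    M, N = len(A), len(c)
    # Stack A x <= b and -x <= 0 into a single system G x <= h.
    G = [list(row) for row in A] + [[-1 if k == j else 0 for k in range(N)]
                                    for j in range(N)]
    h = list(b) + [0] * N
    best_x, best_f = None, None
    for idx in combinations(range(M + N), N):
        x = solve_square([G[i] for i in idx], [h[i] for i in idx])
        if x is None:
            continue                        # hyper-planes do not meet in one point
        if any(sum(g * xj for g, xj in zip(row, x)) > hi
               for row, hi in zip(G, h)):
            continue                        # intersection lies outside the polytope
        f = sum(cj * xj for cj, xj in zip(c, x))
        if best_f is None or f > best_f:
            best_x, best_f = x, f
    return best_x, best_f


# Hypothetical instance: max 5 x1 + 4 x2  s.t.  x1 + 2 x2 <= 8,  3 x1 + x2 <= 9
x_opt, f_opt = brute_force_lp([[1, 2], [3, 1]], [8, 9], [5, 4])
print([str(v) for v in x_opt], f_opt)
```

This prints `['2', '3'] 22`. The work grows as $\binom{M+N}{N}$ linear solves, which is why exhaustive checking is only practical for small problems.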

Example:

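$$\max_{\bf x}\;{\bf c}^T{\bf x}\quad\text{s.t.}\quad{\bf A}{\bf x}\le{\bf b},\quad{\bf x}\ge{\bf 0},\qquad\text{where}\quad {\bf x}=\left[\begin{array}{c}x_1\\ x_2\end{array}\right],\;\;{\bf c}=\left[\begin{array}{c}2\\ 3\end{array}\right],\;\;\ldots,\;\;{\bf A}=\left[\begin{array}{cc}2&1\\ 6&5\\ 2&5\end{array}\right]$$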

This problem has $N=2$ variables ${\bf x}=[x_1,\;x_2]^T$ with objective coefficients ${\bf c}=[2,\;3]^T$, and $M=3$ inequality constraints.

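This LP problem can be converted into the standard form:

$$\max\;2x_1+3x_2\quad\text{s.t.}\quad\left\{\begin{array}{l}2x_1+x_2+s_1=b_1\\ 6x_1+5x_2+s_2=b_2\\ 2x_1+5x_2+s_3=b_3\end{array}\right.,\qquad x_1,x_2,s_1,s_2,s_3\ge 0$$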

Listed in the table below are the $\binom{5}{2}=10$ intersection points formed by the $M+N=5$ lines (hyper-planes in 2-D) …

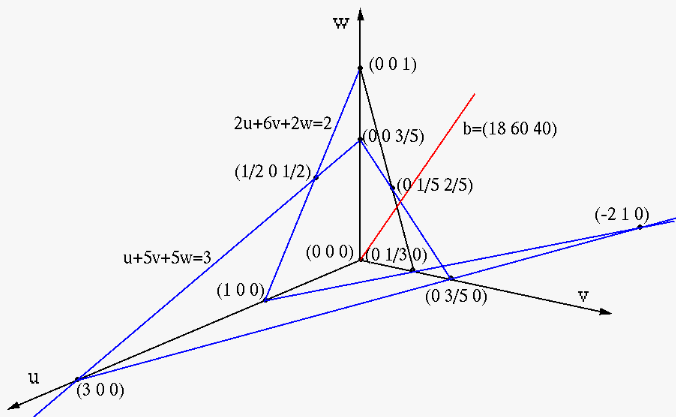

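This primal maximization problem can be converted into the dual minimization problem:

$$\min_{\bf y}\;{\bf b}^T{\bf y}\quad\text{s.t.}\quad{\bf A}^T{\bf y}=\left[\begin{array}{ccc}2&6&2\\ 1&5&5\end{array}\right]\left[\begin{array}{c}y_1\\ y_2\\ y_3\end{array}\right]\ge\left[\begin{array}{c}2\\ 3\end{array}\right],\quad{\bf y}\ge{\bf 0}$$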
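The dual constraints of this example can be generated mechanically by transposing ${\bf A}$. Since ${\bf b}$ is not restated here, the sketch below builds only the constraint side ${\bf A}^T{\bf y}\ge{\bf c}$ and prints one inequality per primal variable:

```python
# Example data from the text; b is not used here.
A = [[2, 1], [6, 5], [2, 5]]   # primal constraint coefficients (M = 3, N = 2)
c = [2, 3]                     # primal objective coefficients

At = [list(col) for col in zip(*A)]   # dual constraint matrix A^T (N x M)
for row, cj in zip(At, c):
    lhs = " + ".join(f"{a}*y{i + 1}" for i, a in enumerate(row))
    print(f"{lhs} >= {cj}")            # one dual constraint per primal variable
```

This prints `2*y1 + 6*y2 + 2*y3 >= 2` and `1*y1 + 5*y2 + 5*y3 >= 3`, matching the matrix form above.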

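The five planes (the two dual constraints and the three non-negativity conditions in the 3-D space of $y_1,y_2,y_3$), …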

We see that at the feasible point

Homework:

Develop the code to find all