The optimization problem subject to equality constraints can be generally formulated as:

$$\begin{array}{ll}\text{extremize:} & f({\bf x})=f(x_1,\cdots,x_N)\\ \text{subject to:} & h_i({\bf x})=0,\;\;\;(i=1,\cdots,m)\end{array} \tag{173}$$

where $f({\bf x})$ is the objective function and $h_i({\bf x})=0$ is the ith constraint. Here $m\le N$, as in general there does not exist a solution that satisfies more than $N$ equations in the N-D space.

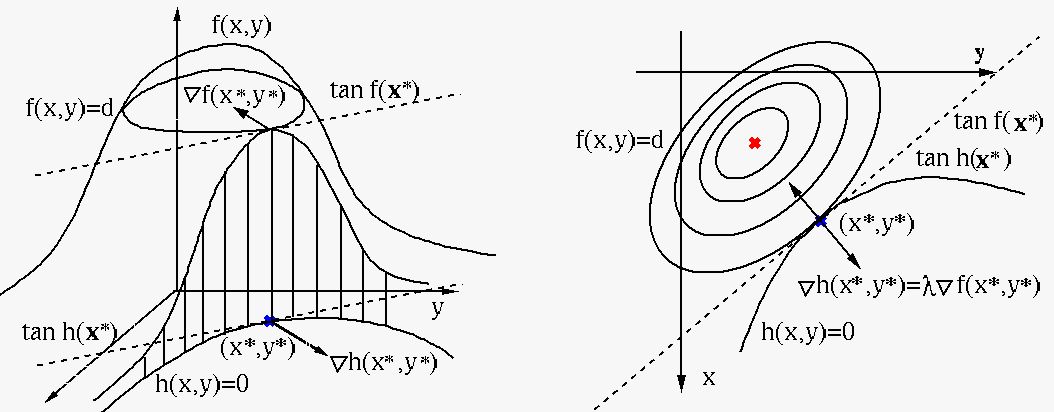

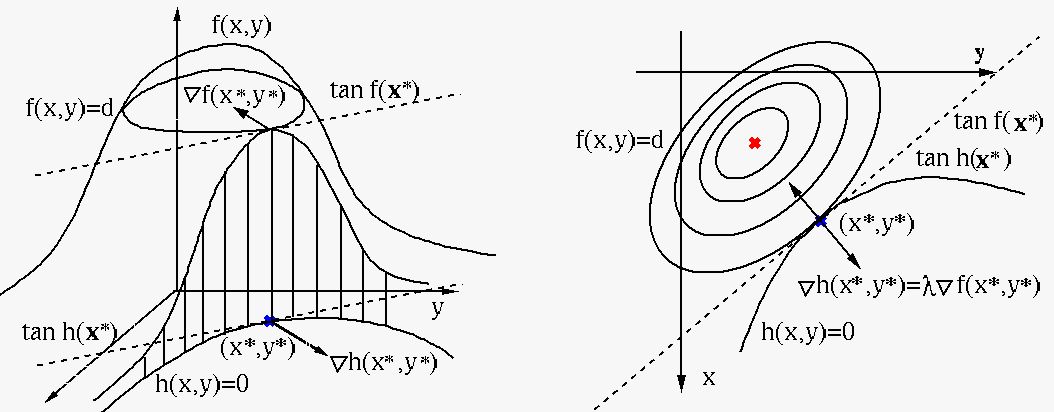

This problem can be visualized in the special case with $N=2$ and $m=1$, where both $f(x_1,x_2)$ and $h(x_1,x_2)$ are surfaces defined over the 2-D space spanned by $x_1$ and $x_2$, and $h(x_1,x_2)=0$ is the intersection line of the surface $h(x_1,x_2)$ and the 2-D plane. The solution ${\bf x}^*$ must satisfy the following two conditions:

- ${\bf x}^*$ is on the intersection line, i.e., $h({\bf x}^*)=0$;

- ${\bf x}^*$ is on the contour line $f({\bf x})=c$ (for some constant $c$, as shown in the figure), so that $f({\bf x})$ neither increases nor decreases (no point is higher or lower) in the neighborhood of ${\bf x}^*$ along the contour line $h({\bf x})=0$.

For both conditions to be satisfied, the two contours $f({\bf x})=c$ and $h({\bf x})=0$ must touch at ${\bf x}^*$, i.e., they must have the same tangent there and therefore parallel gradients (each gradient is perpendicular to the tangent of its contour):

$$\bigtriangledown_{\bf x}f({\bf x}^*)=\lambda\,\bigtriangledown_{\bf x}h({\bf x}^*) \tag{174}$$

where $\bigtriangledown_{\bf x}f({\bf x})=[\partial f/\partial x_1,\cdots,\partial f/\partial x_N]^T$ is the gradient of $f({\bf x})$, and $\lambda$ is a constant scaling factor, which is positive if the two gradients are in the same direction and negative if they are in opposite directions. As we are only interested here in whether the two curves are tangent to each other, the sign of $\lambda$ is not restricted.
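The parallel-gradient condition can be checked numerically. The sketch below assumes an illustrative objective $f(x_1,x_2)=x_1+x_2$ and constraint $h(x_1,x_2)=x_1^2+x_2^2-2=0$ (neither comes from the text); the helper names are hypothetical. It compares a constrained extremum with another feasible point that is not an extremum.

```python
def grad_f(x1, x2):
    # Gradient of the assumed objective f(x1, x2) = x1 + x2
    return (1.0, 1.0)

def grad_h(x1, x2):
    # Gradient of the assumed constraint h(x1, x2) = x1^2 + x2^2 - 2
    return (2.0 * x1, 2.0 * x2)

def cross_2d(u, v):
    # z-component of the 2-D cross product; it vanishes when u and v are parallel
    return u[0] * v[1] - u[1] * v[0]

def scaling_factor(gf, gh):
    # Least-squares lambda in gf = lambda * gh (exact when the two are parallel)
    return (gf[0] * gh[0] + gf[1] * gh[1]) / (gh[0] ** 2 + gh[1] ** 2)

x_star = (1.0, 1.0)          # constrained maximum of f on the circle
x_other = (2.0 ** 0.5, 0.0)  # feasible (up to rounding) but not an extremum

at_star = cross_2d(grad_f(*x_star), grad_h(*x_star))
at_other = cross_2d(grad_f(*x_other), grad_h(*x_other))
lam = scaling_factor(grad_f(*x_star), grad_h(*x_star))

print(at_star, at_other, lam)
```

At the extremum the cross product vanishes and the scaling factor is $\lambda=1/2$; at the other feasible point the gradients are not parallel, so no such $\lambda$ exists.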

To find such a point ${\bf x}^*$, we first construct a new objective function, called the Lagrange function (or simply Lagrangian):

$$L({\bf x},\lambda)=f({\bf x})-\lambda\,h({\bf x}) \tag{175}$$

where $\lambda$ is the Lagrange multiplier, which can be either positive or negative (i.e., the sign in front of $\lambda$ can be either plus or minus), and then set the gradient of this objective function with respect to ${\bf x}$ as well as $\lambda$ to zero:

$$\bigtriangledown_{{\bf x},\lambda} L({\bf x},\lambda)=\bigtriangledown_{{\bf x},\lambda} [ f({\bf x})-\lambda\, h({\bf x}) ]={\bf 0} \tag{176}$$

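This equation contains two parts:

$$\left\{\begin{array}{l}\bigtriangledown_{\bf x}L({\bf x},\lambda)=\bigtriangledown_{\bf x}f({\bf x})-\lambda\,\bigtriangledown_{\bf x}h({\bf x})={\bf 0}\\ \partial L({\bf x},\lambda)/\partial\lambda=-h({\bf x})=0\end{array}\right. \tag{177}$$

This is an equation system containing three equations for the same number of unknowns $x_1,\,x_2,\,\lambda$:

$$\left\{\begin{array}{l}\partial f/\partial x_1=\lambda\,\partial h/\partial x_1\\ \partial f/\partial x_2=\lambda\,\partial h/\partial x_2\\ h(x_1,x_2)=0\end{array}\right. \tag{178}$$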
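As a small worked illustration of such a three-equation system, assume the objective $f(x_1,x_2)=x_1+x_2$ and the constraint $h(x_1,x_2)=x_1^2+x_2^2-2=0$ (both chosen here for illustration only, not taken from the text). Setting the gradient of $L=f-\lambda h$ to zero gives

$$\left\{\begin{array}{l}1=2\lambda x_1\\ 1=2\lambda x_2\\ x_1^2+x_2^2-2=0\end{array}\right.$$

The first two equations give $x_1=x_2=1/(2\lambda)$. Substituting into the third yields $1/(2\lambda^2)=2$, i.e., $\lambda=\pm 1/2$, so that

$${\bf x}^*=[1,\,1]^T,\;\lambda^*=1/2,\;f({\bf x}^*)=2 \;\;\;\;\mbox{or}\;\;\;\; {\bf x}^*=[-1,\,-1]^T,\;\lambda^*=-1/2,\;f({\bf x}^*)=-2$$

The first is the constrained maximum and the second the constrained minimum; the sign of $\lambda^*$ differs between them, consistent with the multiplier being allowed either sign.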

The solution ${\bf x}^*=[x_1^*,\,x_2^*]^T$ and $\lambda^*$ of this equation system satisfies the two desired relationships: (a) $\bigtriangledown_{\bf x}f({\bf x}^*)=\lambda^*\,\bigtriangledown_{\bf x}h({\bf x}^*)$, and (b) $h({\bf x}^*)=0$.
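When the three equations cannot be solved by hand, they can be solved numerically. The following is a minimal sketch using Newton's method, again assuming the illustrative functions $f=x_1+x_2$ and $h=x_1^2+x_2^2-2$ (not from the text). The function names, the starting point and the tolerance are all assumptions made for this example.

```python
def solve_linear(A, b):
    """Solve A y = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [A | b], copied so the inputs are not modified
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        # Move the row with the largest pivot candidate into place
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution
    y = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * y[j] for j in range(i + 1, n))
        y[i] = (M[i][n] - s) / M[i][i]
    return y

def residual(z):
    # Left-hand sides of df/dx1 - lam*dh/dx1 = 0, df/dx2 - lam*dh/dx2 = 0, h = 0
    x1, x2, lam = z
    return [1.0 - 2.0 * lam * x1,
            1.0 - 2.0 * lam * x2,
            x1 * x1 + x2 * x2 - 2.0]

def jacobian(z):
    # Partial derivatives of the residual with respect to (x1, x2, lam)
    x1, x2, lam = z
    return [[-2.0 * lam, 0.0, -2.0 * x1],
            [0.0, -2.0 * lam, -2.0 * x2],
            [2.0 * x1, 2.0 * x2, 0.0]]

def newton(z, tol=1e-12, max_iter=50):
    # Repeatedly solve J * step = -F and update z until the residual is small
    for _ in range(max_iter):
        F = residual(z)
        if max(abs(v) for v in F) < tol:
            break
        step = solve_linear(jacobian(z), [-v for v in F])
        z = [zi + si for zi, si in zip(z, step)]
    return z

x1, x2, lam = newton([1.5, 0.5, 1.0])  # assumed starting guess
print(x1, x2, lam)
```

From this starting guess the iteration approaches $x_1=x_2=1$ and $\lambda=1/2$. Newton's method only finds a stationary point near its starting guess, so a different guess may lead to the other solution $x_1=x_2=-1$, $\lambda=-1/2$.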

In particular, consider the following two possible cases:

- If $\lambda^*=0$, then $\bigtriangledown_{\bf x}f({\bf x}^*)={\bf 0}$, indicating $f({\bf x}^*)$ is an extremum independent of the constraint, i.e., the constraint $h({\bf x})=0$ is inactive, and the optimization is unconstrained.

- If $\lambda^*\ne 0$, then $\bigtriangledown_{\bf x}f({\bf x}^*)=\lambda^*\,\bigtriangledown_{\bf x}h({\bf x}^*)\ne{\bf 0}$ (as in general $\bigtriangledown_{\bf x}h({\bf x}^*)\ne{\bf 0}$), indicating $f({\bf x}^*)$ is not an extremum without the constraint, i.e., the constraint is active, and the optimization is indeed constrained.
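Both cases can be seen in a small example, assuming the objective $f(x_1,x_2)=(x_1-1)^2+(x_2-1)^2$ and a linear constraint $h(x_1,x_2)=x_1+x_2-b=0$ (chosen for illustration only, not from the text). Setting the gradient of $L=f-\lambda h$ to zero gives

$$2(x_1-1)=\lambda,\;\;\;\;2(x_2-1)=\lambda,\;\;\;\;x_1+x_2=b$$

so that $x_1^*=x_2^*=b/2$ and $\lambda^*=b-2$. With $b=2$ we get $\lambda^*=0$ and ${\bf x}^*=[1,\,1]^T$, which is also the unconstrained minimum of $f$, so the constraint is inactive. With $b=4$ we get $\lambda^*=2$ and ${\bf x}^*=[2,\,2]^T$, where $\bigtriangledown_{\bf x}f({\bf x}^*)=[2,\,2]^T\ne{\bf 0}$, so the constraint is active.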

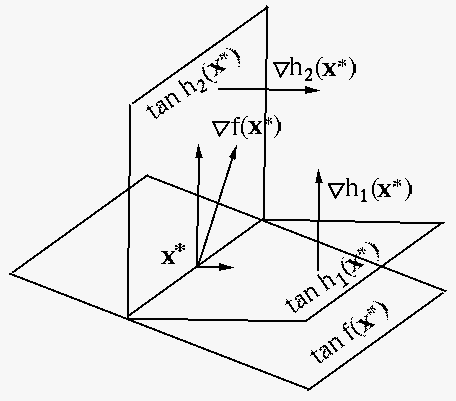

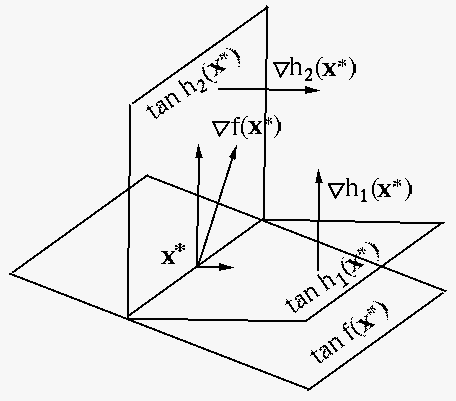

The discussion above can be generalized from 2-D to an $N$-dimensional space, in which the optimal solution ${\bf x}^*$ is to be found to extremize the objective $f({\bf x})$ subject to $m$ equality constraints $h_i({\bf x})=0,\;(i=1,\cdots,m)$, each representing a contour surface in the N-D space. The solution ${\bf x}^*$ must satisfy the following two conditions:

- ${\bf x}^*$ is on all $m$ contour surfaces so that $h_i({\bf x}^*)=0,\;(i=1,\cdots,m)$, i.e., it is on the intersection of these surfaces, a curve or a lower-dimensional surface in the space (e.g., the intersection of two surfaces in 3-D space is a curve);

- ${\bf x}^*$ is on a contour surface $f({\bf x})=c$ (for some value $c$) so that $f({\bf x}^*)$ is an extremum, i.e., it does not increase or decrease in the neighborhood of ${\bf x}^*$ along the intersection.

Combining these conditions, we see that the intersection (a curve or a surface) of the $m$ surfaces $h_i({\bf x})=0$ must touch the contour surface $f({\bf x})=c$ at ${\bf x}^*$, i.e., the intersection of the tangent surfaces of $h_i({\bf x})=0$ through ${\bf x}^*$ must lie within the tangent surface of $f({\bf x})=c$ at ${\bf x}^*$. Therefore the gradient $\bigtriangledown_{\bf x}f({\bf x}^*)$ must be a linear combination of the $m$ gradients $\bigtriangledown_{\bf x}h_i({\bf x}^*)$:

$$\bigtriangledown_{\bf x}f({\bf x}^*)=\sum_{i=1}^m\lambda_i\,\bigtriangledown_{\bf x}h_i({\bf x}^*) \tag{179}$$

To find such a solution ${\bf x}^*$ of the optimization problem, we first construct the Lagrange function:

$$L({\bf x},{\bf\lambda})=f({\bf x})-\sum_{i=1}^m\lambda_i\,h_i({\bf x}) \tag{180}$$

where ${\bf\lambda}=[\lambda_1,\cdots,\lambda_m]^T$ is a vector of $m$ Lagrange multipliers, and then set its gradient to zero:

$$\bigtriangledown_{{\bf x},{\bf\lambda}} L({\bf x},{\bf\lambda})=\bigtriangledown_{{\bf x},{\bf\lambda}}\left[ f({\bf x})-\sum_{i=1}^m \lambda_i h_i({\bf x}) \right]={\bf 0} \tag{181}$$

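to get the following two equation systems of $N$ and $m$ equations respectively:

$$\bigtriangledown_{\bf x}L({\bf x},{\bf\lambda})=\bigtriangledown_{\bf x}f({\bf x})-\sum_{i=1}^m\lambda_i\,\bigtriangledown_{\bf x}h_i({\bf x})={\bf 0},\;\;\;\;\mbox{i.e.}\;\;\;\;\bigtriangledown_{\bf x}f({\bf x})=\sum_{i=1}^m\lambda_i\,\bigtriangledown_{\bf x}h_i({\bf x}) \tag{182}$$

and

$$\frac{\partial L({\bf x},{\bf\lambda})}{\partial\lambda_i}=-h_i({\bf x})=0,\;\;\;\;\mbox{i.e.}\;\;\;\;h_i({\bf x})=0,\;\;\;(i=1,\cdots,m) \tag{183}$$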

The first set of $N$ equations indicates that the gradient $\bigtriangledown_{\bf x}f({\bf x}^*)$ at ${\bf x}^*$ is a linear combination of the $m$ gradients $\bigtriangledown_{\bf x}h_i({\bf x}^*)$, while the second set of $m$ equations guarantees that ${\bf x}^*$ also satisfies the $m$ equality constraints. Solving these equations we get the desired solution ${\bf x}^*$ together with the Lagrange multipliers $\lambda_1^*,\cdots,\lambda_m^*$. This is the Lagrange multiplier method.
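When the objective is quadratic and the constraints are linear, the $N+m$ equations form a linear system that can be solved directly. The sketch below assumes the illustrative problem of minimizing $f({\bf x})=x_1^2+x_2^2+x_3^2$ subject to $h_1({\bf x})=x_1+x_2+x_3-1=0$ and $h_2({\bf x})=x_1-2x_2=0$ (not from the text). The variable names and the small Gaussian-elimination helper are likewise assumptions made for this example.

```python
def solve_linear(A, b):
    """Solve A y = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    y = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * y[j] for j in range(i + 1, n))
        y[i] = (M[i][n] - s) / M[i][i]
    return y

# Linear constraints written as a_i . x = b_i (assumed example data)
A_con = [[1.0, 1.0, 1.0],    # h_1: x1 + x2 + x3 = 1
         [1.0, -2.0, 0.0]]   # h_2: x1 - 2*x2 = 0
b_con = [1.0, 0.0]
N, m = 3, 2

# Unknown vector is (x_1, ..., x_N, lambda_1, ..., lambda_m)
K = [[0.0] * (N + m) for _ in range(N + m)]
rhs = [0.0] * (N + m)
for j in range(N):
    K[j][j] = 2.0                     # d/dx_j of x_j^2
    for i in range(m):
        K[j][N + i] = -A_con[i][j]    # -lambda_i * dh_i/dx_j
for i in range(m):
    for j in range(N):
        K[N + i][j] = A_con[i][j]     # constraint row a_i . x = b_i
    rhs[N + i] = b_con[i]

sol = solve_linear(K, rhs)
x_star, lam_star = sol[:N], sol[N:]
print(x_star, lam_star)
```

For this example the solution is ${\bf x}^*=[3/7,\,3/14,\,5/14]^T$ with $\lambda_1^*=5/7$ and $\lambda_2^*=1/7$; both multipliers are nonzero, so both constraints are active. For nonlinear $f$ or $h_i$ the system is no longer linear and an iterative solver would be needed instead.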

In particular, if $\lambda_i^*=0$, then the ith constraint is not active. More specifically, if $\lambda_i^*=0$ for all $i=1,\cdots,m$, then

$$\bigtriangledown_{\bf x}L({\bf x}^*,{\bf\lambda}^*)=\bigtriangledown_{\bf x}f({\bf x}^*)={\bf 0} \tag{184}$$

indicating $f({\bf x}^*)$ is an extremum independent of any of the constraints, i.e., ${\bf x}^*$ is an unconstrained solution, and the optimization problem is unconstrained.
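As an illustration of a single inactive constraint, assume the problem of minimizing $f({\bf x})=x_1^2+x_2^2+x_3^2$ subject to $h_1({\bf x})=x_1+x_2+x_3-1=0$ and $h_2({\bf x})=x_1-x_2=0$ (chosen for illustration only, not from the text). The stationarity equations are

$$2x_1=\lambda_1+\lambda_2,\;\;\;\;2x_2=\lambda_1-\lambda_2,\;\;\;\;2x_3=\lambda_1$$

The constraint $x_1=x_2$ forces $\lambda_2^*=0$, and the constraint $x_1+x_2+x_3=1$ then gives $3\lambda_1/2=1$, i.e.,

$$\lambda_1^*=2/3,\;\;\;\;\lambda_2^*=0,\;\;\;\;{\bf x}^*=[1/3,\,1/3,\,1/3]^T$$

Here the second constraint is not active: dropping it and keeping only $h_1({\bf x})=0$ yields the same solution, while the first constraint, with $\lambda_1^*\ne 0$, is active.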
