Next: Radial-Basis Function (RBF) Networks

Up: Back Propagation Network (BPN)

Previous: Error back propagation

The following is for one randomly chosen training pattern pair $({\bf x}_p, {\bf y}_p)$.

- Apply ${\bf x}_p=[x_{p1}, x_{p2}, ... , x_{pn}]^T$ to the input nodes;

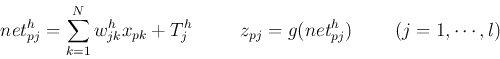

- Compute net input to and output from all nodes in the hidden layer:

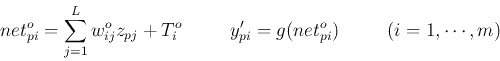

- Compute net input to and output from all nodes in the output layer:

- Compare the output

with the desired output

with the desired output

![${\bf y}_p=[y_{p1},y_{p2},...,y_{pm}]^T$](img248.png) corresponding to the input

corresponding to the input  ,

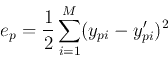

to find the error terms for all output nodes (not quite the same as defined

previously):

,

to find the error terms for all output nodes (not quite the same as defined

previously):

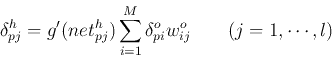

- Find error terms for all hidden nodes (not quite the same as defined previously)

- Update weights to output nodes

- Update weights to hidden nodes

- Compute

This process is then repeated with another pattern pair from the training set. When the error is acceptably small for all of the training pattern pairs, training can be terminated.
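The steps above can be sketched in code. This is only a minimal illustration under stated assumptions, not the notes' own implementation: it assumes a sigmoid activation, a constant bias input on each layer, the squared error $\frac{1}{2}\sum_k (y_{pk}-\hat{y}_{pk})^2$ for one pattern, and output error terms of the form (desired output minus actual output) times the derivative of the activation. The learning rate `eta`, the tolerance `tol`, and all function names are hypothetical.

```python
import math
import random


def sigmoid(a):
    """Logistic activation (an assumed choice)."""
    return 1.0 / (1.0 + math.exp(-a))


def forward(x, w_hid, w_out):
    """Forward pass: inputs and hidden outputs (with bias), and network outputs."""
    xb = x + [1.0]  # append a constant bias input
    z = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_hid]
    zb = z + [1.0]  # append a constant bias input for the output layer
    y_hat = [sigmoid(sum(w * v for w, v in zip(row, zb))) for row in w_out]
    return xb, zb, y_hat


def pattern_error(x, y, w_hid, w_out):
    """Squared error 0.5 * sum_k (y_k - y_hat_k)^2 for one pattern pair."""
    _, _, y_hat = forward(x, w_hid, w_out)
    return 0.5 * sum((t - o) ** 2 for t, o in zip(y, y_hat))


def train_step(x, y, w_hid, w_out, eta=0.5):
    """One back propagation update (in place) for a single pattern pair."""
    xb, zb, y_hat = forward(x, w_hid, w_out)
    # Error terms for output nodes: (desired - actual) * sigmoid derivative.
    d_out = [(t - o) * o * (1.0 - o) for t, o in zip(y, y_hat)]
    # Error terms for hidden nodes, computed with the not-yet-updated output weights.
    d_hid = []
    for j in range(len(w_hid)):
        back = sum(d_out[k] * w_out[k][j] for k in range(len(w_out)))
        d_hid.append(zb[j] * (1.0 - zb[j]) * back)
    # Update weights to output nodes.
    for k, row in enumerate(w_out):
        for j in range(len(row)):
            row[j] += eta * d_out[k] * zb[j]
    # Update weights to hidden nodes.
    for j, row in enumerate(w_hid):
        for i in range(len(row)):
            row[i] += eta * d_hid[j] * xb[i]
    # Compute the error for this pattern after the update.
    return pattern_error(x, y, w_hid, w_out)


def init_weights(n_rows, n_cols, rng):
    """Small random initial weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_cols)] for _ in range(n_rows)]


def train(patterns, n_hidden=3, eta=0.5, tol=0.01, max_steps=200000, seed=0):
    """Repeat single-pattern updates until every pattern error is below tol."""
    rng = random.Random(seed)
    n_in, n_out = len(patterns[0][0]), len(patterns[0][1])
    w_hid = init_weights(n_hidden, n_in + 1, rng)
    w_out = init_weights(n_out, n_hidden + 1, rng)
    for step in range(max_steps):
        x, y = rng.choice(patterns)  # one randomly chosen pattern pair
        train_step(x, y, w_hid, w_out, eta)
        if step % 100 == 0 and all(
            pattern_error(px, py, w_hid, w_out) < tol for px, py in patterns
        ):
            break
    return w_hid, w_out


if __name__ == "__main__":
    # Toy training set: the logical OR function.
    or_patterns = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
                   ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]
    w_hid, w_out = train(or_patterns)
    for x, y in or_patterns:
        print(x, y, forward(x, w_hid, w_out)[2])
```

Note that the hidden error terms are computed before the output weights are changed, matching the order of the steps in the list.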

The training process of a BP network can be considered as a data modeling problem: the goal is to find the optimal parameters, i.e., the weights of both the hidden and output layers, based on the observed dataset, i.e., the training pattern pairs. The Levenberg-Marquardt algorithm discussed previously can be used to obtain the parameters, as done by the Matlab function trainlm.
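As a rough sketch of this view (the notation here is assumed for illustration and may differ from that of the earlier discussion), let ${\bf w}$ collect all weights, let ${\bf f}({\bf x}_p,{\bf w})$ denote the network output for input ${\bf x}_p$, let ${\bf r}$ stack the residuals ${\bf y}_p-{\bf f}({\bf x}_p,{\bf w})$ over all training pairs, and let ${\bf J}$ be the Jacobian of the stacked network outputs with respect to ${\bf w}$. The least-squares problem and one Levenberg-Marquardt step with damping parameter $\lambda$ can then be written as:

```latex
\begin{align*}
{\bf w}^* &= \arg\min_{\bf w}\; \frac{1}{2}\sum_{p}
             \left\| {\bf y}_p - {\bf f}({\bf x}_p,{\bf w}) \right\|^2 \\
\Delta{\bf w} &= \left( {\bf J}^T{\bf J} + \lambda\,{\bf I} \right)^{-1} {\bf J}^T\,{\bf r},
\qquad {\bf w} \leftarrow {\bf w} + \Delta{\bf w}
\end{align*}
```

For large $\lambda$ the step approaches a small gradient-descent step, while for small $\lambda$ it approaches a Gauss-Newton step.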

Applications of BP networks include:

- Pattern recognition/classification

- Medical diagnosis (symptoms, diagnosis, drugs)

- Time series analysis (curve-fitting, interpolation and extrapolation, e.g., stock-market prediction)

- Data compression

- Automation (robots, autonomous vehicles)

- .....

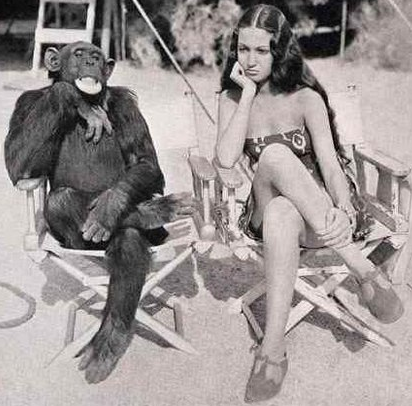

A story related (maybe) to neural networks

An elementary school teacher asked her class to give examples of the great technological inventions in the 20th century. One kid mentioned it was the telephone, as it would let you talk to someone far away. Another kid said it was the airplane, because an airplane could take you to anywhere in the world. Then the teacher saw little Johnny eagerly waving his hand in the back of the classroom. "What do you think is the greatest invention, Johnny?" "The thermos!" The teacher was very puzzled, "Why do you think the thermos? All it can do is to keep hot things hot and cold things cold." "But", Johnny answered, "how does it know when to keep things hot and when to keep them cold?"

Don't you sometimes wonder "How does a neural network know ...?" Well, it does not. It is just a monkey-see-monkey-do type of learning, like this...


Ruye Wang

2015-08-13
