While the two-sample t-test (based on Student's t-distribution) tests whether the means of two groups are the same, the one-way ANOVA (based on the F-distribution) tests whether the means of $K$ groups are the same. The null hypothesis is that all samples are drawn from populations with the same mean. For example, we want to find out if any of the $K$ different treatments for a disease is on average superior or inferior to the others.

Specifically, let $C_1,\cdots,C_K$ be $K$ groups, each containing $n_k$ samples $(k=1,\cdots,K)$. The total number of samples is $N=\sum_{k=1}^K n_k$. The null hypothesis is $H_0:\;\mu_1=\mu_2=\cdots=\mu_K$.

The method is based on the assumption that samples in each of the $K$ groups have normal distributions $N(\mu_k,\sigma^2)$ of possibly different unknown means $\mu_k$ but the same unknown variance $\sigma^2$.

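We first find the following:

- Group means:

  $$\bar{x}_k=\frac{1}{n_k}\sum_{x\in C_k} x,\qquad (k=1,\cdots,K) \tag{48}$$

- Total mean:

  $$\bar{x}=\frac{1}{N}\sum_{k=1}^K\sum_{x\in C_k} x=\frac{1}{N}\sum_{k=1}^K n_k\,\bar{x}_k \tag{49}$$

  where $N=\sum_{k=1}^K n_k$.

- Total sum of squares with $N-1$ degrees of freedom:

  $$SST=\sum_{k=1}^K\sum_{x\in C_k}(x-\bar{x})^2 \tag{50}$$

- Within-group sum of squares (error, unexplained) with $N-K$ degrees of freedom:

  $$SSW=\sum_{k=1}^K\sum_{x\in C_k}(x-\bar{x}_k)^2 \tag{51}$$

- Between-group sum of squares (information, explained) with $K-1$ degrees of freedom:

  $$SSB=\sum_{k=1}^K\sum_{x\in C_k}(\bar{x}_k-\bar{x})^2=\sum_{k=1}^K n_k(\bar{x}_k-\bar{x})^2 \tag{52}$$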

We see that the three degrees of freedom and the three sums of squares are similarly related by

$$N-1=(N-K)+(K-1),\qquad SST=SSW+SSB \tag{53}$$

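While the first equation is obvious, the second equation can be proven as follows:

$$\begin{aligned}
SST &= \sum_{k=1}^K \sum_{x \in C_k} (x-\bar{x})^2
     = \sum_{k=1}^K \sum_{x \in C_k} [(x-\bar{x}_k)+(\bar{x}_k-\bar{x})]^2 \\
    &= \sum_{k=1}^K \sum_{x \in C_k} [(x-\bar{x}_k)^2+2(x-\bar{x}_k)(\bar{x}_k-\bar{x})+(\bar{x}_k-\bar{x})^2] \\
    &= \sum_{k=1}^K \sum_{x \in C_k} [(x-\bar{x}_k)^2+(\bar{x}_k-\bar{x})^2]=SSW+SSB
\end{aligned} \tag{54}$$

The last equality is due to the fact that the summation of the middle term is equal to zero: within each group $\bar{x}_k-\bar{x}$ is a constant and $\sum_{x\in C_k}(x-\bar{x}_k)=0$. These sums of squares measure the variations (relative to the means) in the data set. While SST represents the total variation, SSB and SSW represent the variations from two different sources: variation between the groups, directly related to the question of whether these groups have the same mean, and variation within each of the groups, treated as error.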
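The decomposition above can be checked numerically. The following is a minimal Python sketch, assuming small made-up data: the three lists in `groups` and the helper name `sums_of_squares` are illustrative and do not come from the text. It computes the group means, the total mean and the three sums of squares, and confirms that SST equals SSW + SSB up to rounding.

```python
# Hypothetical data: three groups of unequal size (not taken from the text).
groups = [
    [4.0, 5.0, 6.0],
    [6.0, 7.0, 8.0, 9.0],
    [1.0, 2.0, 3.0],
]


def sums_of_squares(groups):
    """Return (SST, SSW, SSB) for a list of groups of numbers."""
    n_total = sum(len(g) for g in groups)                 # N
    total_mean = sum(sum(g) for g in groups) / n_total    # total mean
    group_means = [sum(g) / len(g) for g in groups]       # group means

    # Total variation of every sample around the total mean.
    sst = sum((x - total_mean) ** 2 for g in groups for x in g)
    # Variation of every sample around its own group mean.
    ssw = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    # Variation of the group means around the total mean, weighted by n_k.
    ssb = sum(len(g) * (m - total_mean) ** 2
              for g, m in zip(groups, group_means))
    return sst, ssw, ssb


sst, ssw, ssb = sums_of_squares(groups)
print("SST =", sst)
print("SSW + SSB =", ssw + ssb)
```

For this made-up data the within-group part is 9 and the between-group part is 51.9, so most of the total variation comes from differences between the group means.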

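We further define the following mean squares (MS) of $\chi^2$ distributions, each a sum of squares divided by its degrees of freedom:

$$MSB=\frac{SSB}{K-1},\qquad MSW=\frac{SSW}{N-K} \tag{55}$$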

Now we can finally define the test statistic:

$$F=\frac{MSB}{MSW}=\frac{SSB/(K-1)}{SSW/(N-K)} \tag{56}$$

which has an F-distribution with $K-1$ numerator d.f. and $N-K$ denominator d.f.
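To make the definition concrete, here is a short Python sketch that evaluates the two mean squares and the test statistic. The balanced data and the function name `f_statistic` are assumptions chosen for illustration only.

```python
def f_statistic(groups):
    """Return (F, numerator d.f., denominator d.f.) for one-way ANOVA."""
    k = len(groups)                          # number of groups K
    n_total = sum(len(g) for g in groups)    # total number of samples N
    total_mean = sum(sum(g) for g in groups) / n_total

    ssb = 0.0  # between-group sum of squares
    ssw = 0.0  # within-group sum of squares
    for g in groups:
        mean_k = sum(g) / len(g)
        ssb += len(g) * (mean_k - total_mean) ** 2
        ssw += sum((x - mean_k) ** 2 for x in g)

    df_between = k - 1
    df_within = n_total - k
    msb = ssb / df_between   # mean square between groups
    msw = ssw / df_within    # mean square within groups
    return msb / msw, df_between, df_within


# Hypothetical data: three groups of three samples each.
data = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
F, df_between, df_within = f_statistic(data)
print(F, df_between, df_within)
```

For these values SSB is 26 with 2 d.f. and SSW is 6 with 6 d.f., so the statistic is 13 up to floating-point rounding.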

If all samples are drawn from populations having the same mean, SSB for between-group variation will be small; MSB and MSW then both estimate the common variance, and $F$ is likely to be close to or less than 1. But if the samples are drawn from populations of different means, MSB will be larger than MSW for within-group variation, and $F$ is likely to be greater than 1. Also, if the sample size $N$ is large, the same differences between the group means yield a larger SSB relative to MSW, i.e., there is stronger evidence for different group means; then $F$ is large and $H_0$ is likely to be rejected.
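As a supplementary note, the behavior of the statistic can be read off from the expected values of the two mean squares. This is a standard result under the stated assumptions (normal groups with a common variance); the symbols $\mu_k$ for the population mean of group $k$, $\sigma^2$ for the common variance and $\bar{\mu}$ for the weighted average of the $\mu_k$ are notation assumed here:

$$E[MSW]=\sigma^2,\qquad E[MSB]=\sigma^2+\frac{1}{K-1}\sum_{k=1}^K n_k(\mu_k-\bar{\mu})^2,\qquad \bar{\mu}=\frac{1}{N}\sum_{k=1}^K n_k\,\mu_k$$

When all $\mu_k$ are equal the second term vanishes and both mean squares estimate $\sigma^2$, so the ratio is expected to be near 1. When the means differ, the second term is positive and grows with the group sizes $n_k$, which is why larger samples make the same differences easier to detect.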

Specifically, substituting the values obtained from the data set into the expression above, we get the value $F$ and the corresponding p-value $p$ from the F-distribution table (Matlab function `1-fcdf(f,DFG,DFE)`), which are then compared with the critical value $F_\alpha$ corresponding to the given significance level $\alpha$ (Matlab function `finv(1-alpha,DFG,DFE)`). If $F>F_\alpha$, or equivalently $p<\alpha$, then we reject the null hypothesis $H_0$ and conclude that the $K$ means are significantly different. Otherwise, we do not reject $H_0$, as there is no significant evidence against it.
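The decision rule can be sketched in Python using only the standard library, as a rough stand-in for the Matlab calls `1-fcdf(f,DFG,DFE)` and `finv(1-alpha,DFG,DFE)`. The sketch relies on the identity $P(F>f)=I_x(d_2/2,\,d_1/2)$ with $x=d_2/(d_2+d_1 f)$, where $I_x$ is the regularized incomplete beta function, evaluated here by a continued fraction. The helper names `betainc`, `f_sf` and `f_critical`, and the input values ($F=13$ with 2 and 6 d.f., $\alpha=0.05$), are assumptions made for illustration.

```python
import math


def _betacf(a, b, x, max_iter=200, eps=3e-14):
    """Continued fraction for the incomplete beta function (Lentz's method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    if abs(d) < tiny:
        d = tiny
    d = 1.0 / d
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the recurrence.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = tiny if abs(d) < tiny else d
        c = 1.0 + aa / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        h *= d * c
        # Odd step of the recurrence.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = tiny if abs(d) < tiny else d
        c = 1.0 + aa / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f, d1, d2):
    """Upper-tail probability P(F > f) for an F-distribution with (d1, d2) d.f."""
    if f <= 0.0:
        return 1.0
    return betainc(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def f_critical(alpha, d1, d2):
    """Critical value with upper-tail area alpha, found by bisection."""
    lo, hi = 0.0, 1.0
    while f_sf(hi, d1, d2) > alpha:   # expand until the tail area drops below alpha
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if f_sf(mid, d1, d2) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical inputs: a statistic of 13 with 2 and 6 degrees of freedom.
F, df_between, df_within, alpha = 13.0, 2, 6, 0.05
p_value = f_sf(F, df_between, df_within)
f_alpha = f_critical(alpha, df_between, df_within)
reject = F > f_alpha          # equivalent to p_value < alpha
print(p_value, f_alpha, reject)
```

With a numerical library available, the two helpers would normally be replaced by the library's F-distribution routines; the hand-written version is used here only to keep the sketch self-contained.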

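These can be summarized by the ANOVA table below:

$$\begin{array}{l|c|c|c|c|c}
\text{Source} & \text{SS} & \text{d.f.} & \text{MS} & F & p\text{-value} \\ \hline
\text{Between groups} & SSB & K-1 & MSB=SSB/(K-1) & F=MSB/MSW & p \\
\text{Within groups} & SSW & N-K & MSW=SSW/(N-K) & & \\
\text{Total} & SST & N-1 & & &
\end{array} \tag{57}$$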

Example: Given $n$ samples of each of the $K$ groups below, find if their means are the same, $H_0:\;\mu_1=\cdots=\mu_K$, for the significance level $\alpha$.

(58)

The total number of samples is $N$, and the degrees of freedom are

(59)

The group means are:

(60)

The total mean is $\bar{x}$. The sums of squares are

(61)

The value of the test statistic $F$ and the corresponding p-value are:

(62)

This result is summarized in the table below:

(63)

As $F$ is greater than the critical value $F_\alpha$ corresponding to $\alpha$, and equivalently $p<\alpha$, the null hypothesis is rejected, i.e., the means of the $K$ groups are not all the same.

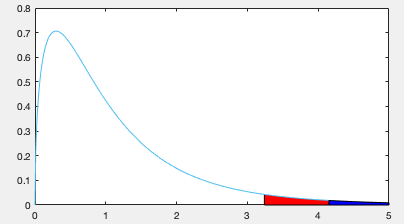

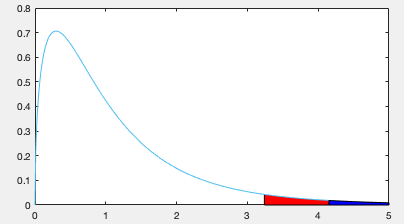

This result is also illustrated in the plot, where the area to the right of the critical value $F_\alpha$ (red) is $\alpha$, while the area to the right of $F$ is the p-value $p<\alpha$. As $F$ is inside the critical region, $H_0$ is rejected.


