We first consider binary classification based on the same

linear model

used in

linear regression considered before. Any test sample

used in

linear regression considered before. Any test sample  is classified into one of the two classes

is classified into one of the two classes

depending on whether

depending on whether

is greater or smaller than

zero:

is greater or smaller than

zero:

if then then |

(275) |

Here  is a model parameter to be determined based on

the training set

is a model parameter to be determined based on

the training set

,

where

,

where

![${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$](img9.svg) ,

,

![${\bf y}=[ y_1,\cdots,y_N]^T$](img10.svg) ,

,

if

if  and

and

if

if  , so

that

, so

that  fits all data points optimally in certain sense.

fits all data points optimally in certain sense.

While in the previously considered least square classification

method, we find the optimal  that minimizes the squared

error

that minimizes the squared

error

, here we find the optimal

, here we find the optimal

based on a probabilistic model. Specifically, we now

convert the linear function

based on a probabilistic model. Specifically, we now

convert the linear function

into

the probability for

into

the probability for  to belong to either class:

to belong to either class:

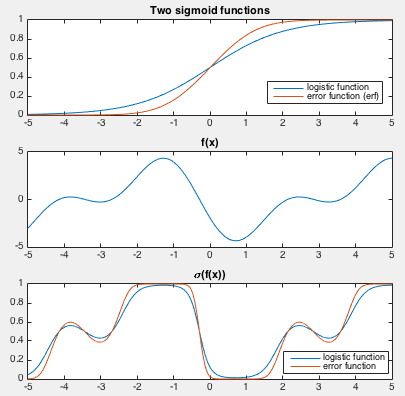

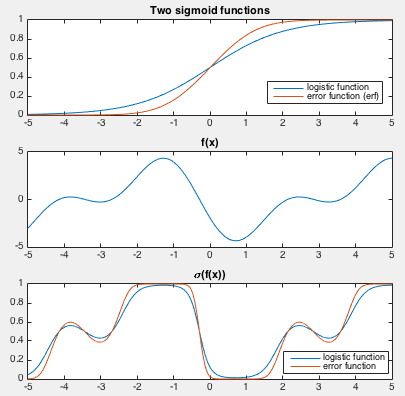

This is done by a sigmoid function defined as either a logistic or

cumulative Gaussian (error function, erf):

In either case, we have

and and |

(278) |

Using this sigmoid function,

in the range of

in the range of

is mapped to

is mapped to

in the range

of

in the range

of  , which can be used as the probability for

, which can be used as the probability for  to

belong to either class:

to

belong to either class:

The two cases can be combined:

or or |

(280) |

The binary classification problem can now be treated as a regression

problem to find the model parameter  that best fits the data

in the training set

that best fits the data

in the training set

. Such a regression

problem is called logistic regression if

. Such a regression

problem is called logistic regression if  is used, or

probit regression if

is used, or

probit regression if  is used.

is used.

Same as in the case of Bayesian regression, we assume the prior

distribution of  to be a zero-mean Gaussian

to be a zero-mean Gaussian

, and for simplicity

we further assume

, and for simplicity

we further assume

, and find the likelihood of

, and find the likelihood of

based on the linear model applied to the observed data set

based on the linear model applied to the observed data set

:

:

|

(281) |

Note that this likelihood is not Gaussian (as in the case of

Eq. (![[*]](crossref.png) )), because

)), because

is the binary class

labeling of the training samples in

is the binary class

labeling of the training samples in  , instead of a continuous

function as in the case of regression.

, instead of a continuous

function as in the case of regression.

The posterior of  can now be expressed in terms of the

prior

can now be expressed in terms of the

prior

and the likelihood

and the likelihood

:

:

where the denominator

is dropped as

it is a constant independent of the variable

is dropped as

it is a constant independent of the variable  of interest.

We can further find the log posterior denoted by

of interest.

We can further find the log posterior denoted by

:

:

|

(283) |

The optimal  that best fits the training set

that best fits the training set

can now be found as the one that

maximizes this posterior

can now be found as the one that

maximizes this posterior

, or, equivalently,

the log posterior

, or, equivalently,

the log posterior

, by setting the derivative of

, by setting the derivative of

to zero and solving the resulting equation below

by Newton's method or conjugate gradient ascent method:

to zero and solving the resulting equation below

by Newton's method or conjugate gradient ascent method:

Having found the optimal  , we can classify any test pattern

, we can classify any test pattern

in terms of the posterior of its corresponding labeling

in terms of the posterior of its corresponding labeling  :

:

|

(285) |

In a multi-class case with

, we can still use a vector

, we can still use a vector

to represent each class

to represent each class  , the direction

of the class with respect to the origin in the feature space, and the inner

product

, the direction

of the class with respect to the origin in the feature space, and the inner

product

proportional to the projection of

proportional to the projection of  onto vector

onto vector  measures the extent to which

measures the extent to which  belongs to

belongs to

. Similar to the logistic function used in the two-class case, here

the soflmax function defined below is used to convert

. Similar to the logistic function used in the two-class case, here

the soflmax function defined below is used to convert

into the probability

that

into the probability

that  belongs to

belongs to  :

:

|

(286) |

where

![${\bf W}=[{\bf w}_1,\cdots,{\bf w}_K]$](img1022.svg) .

.

then

then

is a model parameter to be determined based on

the training set

is a model parameter to be determined based on

the training set

,

where

,

where

![${\bf X}=[{\bf x}_1,\cdots,{\bf x}_N]$](img9.svg) ,

,

![${\bf y}=[ y_1,\cdots,y_N]^T$](img10.svg) ,

,

if

if  and

and

if

if  , so

that

, so

that  fits all data points optimally in certain sense.

fits all data points optimally in certain sense.

and

and

in the range of

in the range of

is mapped to

is mapped to

in the range

of

in the range

of  , which can be used as the probability for

, which can be used as the probability for  to

belong to either class:

to

belong to either class:

![[*]](crossref.png) )), because

)), because

is the binary class

labeling of the training samples in

is the binary class

labeling of the training samples in  , instead of a continuous

function as in the case of regression.

, instead of a continuous

function as in the case of regression.

is dropped as

it is a constant independent of the variable

is dropped as

it is a constant independent of the variable  of interest.

We can further find the log posterior denoted by

of interest.

We can further find the log posterior denoted by

:

:

![${\bf W}=[{\bf w}_1,\cdots,{\bf w}_K]$](img1022.svg) .

.