The Jacobi method solves the eigenvalue problem of a real symmetric matrix ${\bf A}={\bf A}^T$, of which all eigenvalues are real and all eigenvectors are orthogonal to each other (as shown here). This algorithm produces the eigenvalue matrix ${\bf\Lambda}$ and eigenvector matrix ${\bf V}$ satisfying ${\bf V}^T{\bf A}{\bf V}={\bf\Lambda}$.

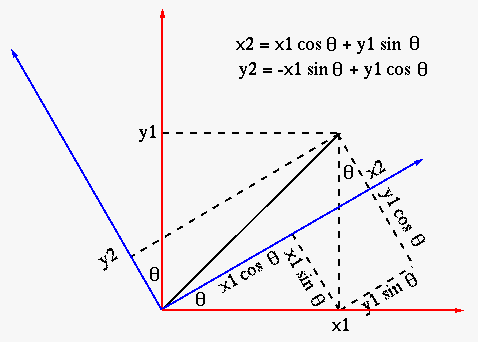

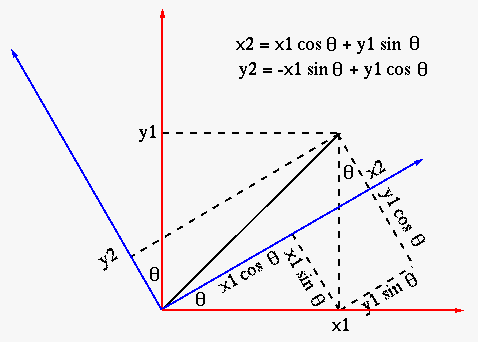

We first review the rotation of a vector in both 2-D and 3-D space by an orthogonal rotation matrix. In a 2-D space, the rotation matrix is

$$
{\bf R}(\theta)
=\left[\begin{array}{rr}\cos\theta&-\sin\theta\\ \sin\theta&\cos\theta\end{array}\right]
=\left[\begin{array}{rr}c & -s\\ s & c\end{array}\right]
\tag{15}
$$

where $\theta$ is the rotation angle, $c=\cos\theta$ and $s=\sin\theta$. This rotation matrix is orthogonal, satisfying ${\bf R}^T{\bf R}={\bf R}{\bf R}^T={\bf I}$, i.e., ${\bf R}^{-1}={\bf R}^T$. Pre-multiplying a column vector ${\bf v}_1=[x_1,\,y_1]^T$ by ${\bf R}$, we get a rotated version of the vector:

$$
{\bf v}_2=\left[\begin{array}{c}x_2\\ y_2\end{array}\right]
={\bf R}{\bf v}_1
=\left[\begin{array}{rr}c&-s\\ s&c\end{array}\right]
\left[\begin{array}{c}x_1\\ y_1\end{array}\right]
=\left[\begin{array}{r}cx_1-sy_1\\ sx_1+cy_1\end{array}\right]
\tag{16}
$$

Taking the transpose of this equation, we get the rotation of the row vector ${\bf v}_1^T=[x_1,\;y_1]$ by post-multiplying it by ${\bf R}^T$:

$$
{\bf v}^T_2=[x_2,\,y_2]=({\bf Rv}_1)^T={\bf v}^T_1{\bf R}^T
=[x_1,\;y_1]\;\left[\begin{array}{rr}c&s\\ -s&c\end{array}\right]
=[cx_1-sy_1,\;\;sx_1+cy_1]
\tag{17}
$$

As ${\bf R}$ is an orthogonal matrix, the norm of the column or row vector is preserved, i.e., $\|{\bf v}_2\|=\|{\bf R}{\bf v}_1\|=\|{\bf v}_1\|$. The rotation is clockwise if $\theta<0$ or counterclockwise if $\theta>0$.
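As a quick numerical illustration of the 2-D rotation, here is a minimal Python sketch. The function name `rotate2d` and the sample vector are chosen here for illustration only; the sketch rotates a vector by a positive and a negative quarter turn and prints the norm of each result.

```python
import math

def rotate2d(theta, v):
    """Rotate the column vector v = (x, y) by the angle theta (in radians)."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = v
    return (c * x - s * y, s * x + c * y)

v1 = (3.0, 4.0)
v2 = rotate2d(math.pi / 2, v1)    # theta > 0: counterclockwise quarter turn
v3 = rotate2d(-math.pi / 2, v1)   # theta < 0: clockwise quarter turn

print(v2, math.hypot(*v2))        # close to (-4, 3), norm 5
print(v3, math.hypot(*v3))        # close to (4, -3), norm 5
```

Up to roundoff, both results have the same norm as the original vector.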

In a 3-D space, a rotation by an angle $\theta$ around each of the three principal axes can be carried out by each of the following three rotation matrices:

$$
{\bf R}_x(\theta)
=\left[\begin{array}{rrr}1&0&0\\ 0&c&-s\\ 0&s&c\end{array}\right],\;\;\;\;
{\bf R}_y(\theta)
=\left[\begin{array}{rrr}c&0&s\\ 0&1&0\\ -s&0&c\end{array}\right],\;\;\;\;
{\bf R}_z(\theta)
=\left[\begin{array}{rrr}c&-s&0\\ s&c&0\\ 0&0&1\end{array}\right]
\tag{18}
$$
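The following short Python sketch applies a rotation around the $z$ axis to a 3-D vector. The helper names `rot_z` and `apply` are hypothetical; the point is only that the component along the rotation axis is left unchanged.

```python
import math

def rot_z(theta):
    """Rotation by theta (radians) around the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s,  c, 0.0],
            [0.0, 0.0, 1.0]]

def apply(R, v):
    """Matrix-vector product R v for a 3 x 3 matrix stored as a list of rows."""
    return [sum(R[p][k] * v[k] for k in range(3)) for p in range(3)]

v = apply(rot_z(math.pi / 2), [1.0, 0.0, 2.0])
print(v)   # approximately [0, 1, 2]: x is turned into y, z is untouched
```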

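In general, in an N-D space, the rotation in the plane spanned by the $i$th and $j$th standard basis vectors ${\bf e}_i$ and ${\bf e}_j$ (in 3-D, a rotation around the normal direction of this plane, i.e., the direction of the cross-product of ${\bf e}_i$ and ${\bf e}_j$), referred to as a Givens rotation, can be carried out by the following matrix:

$$
{\bf R}_{ij}(\theta)=\left[\begin{array}{rrrrrrr}
1&\cdots&0&\cdots&0&\cdots&0\\
\vdots&\ddots&\vdots&&\vdots&&\vdots\\
0&\cdots&c&\cdots&-s&\cdots&0\\
\vdots&&\vdots&\ddots&\vdots&&\vdots\\
0&\cdots&s&\cdots&c&\cdots&0\\
\vdots&&\vdots&&\vdots&\ddots&\vdots\\
0&\cdots&0&\cdots&0&\cdots&1\end{array}\right]\begin{array}{c}\\ \\ i\\ \\ j\\ \\ \\ \end{array}
\tag{19}
$$

This is an identity matrix with four of its elements modified, $r_{ii}=r_{jj}=c$ and $r_{ji}=-r_{ij}=s$ (with $i<j$). As all rows and columns are orthonormal, ${\bf R}_{ij}$ is orthogonal.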
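The orthogonality of a Givens rotation can be checked numerically. The sketch below is an illustration only: the helper names `givens`, `matmul` and `transpose` are made up here, indices are 0-based, and the sign convention $r_{ij}=-s$, $r_{ji}=s$ is the one of the 2-D matrix ${\bf R}(\theta)$ above.

```python
import math

def givens(n, i, j, theta):
    """n x n identity with r_ii = r_jj = c, r_ij = -s, r_ji = s (0-based i, j)."""
    c, s = math.cos(theta), math.sin(theta)
    R = [[1.0 if p == q else 0.0 for q in range(n)] for p in range(n)]
    R[i][i] = c
    R[j][j] = c
    R[i][j] = -s
    R[j][i] = s
    return R

def transpose(X):
    return [list(row) for row in zip(*X)]

def matmul(X, Y):
    return [[sum(X[p][k] * Y[k][q] for k in range(len(Y)))
             for q in range(len(Y[0]))] for p in range(len(X))]

R = givens(5, 1, 3, 0.3)         # rotation in the plane of the 2nd and 4th axes
RRt = matmul(R, transpose(R))    # should equal the identity up to roundoff
print(max(abs(RRt[p][q] - (1.0 if p == q else 0.0))
          for p in range(5) for q in range(5)))
```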

The Jacobi method finds the eigenvalues of a symmetric matrix ${\bf A}$ by iteratively rotating its rows and columns in such a way that the off-diagonal elements are eliminated one at a time, so that eventually the resulting matrix becomes the diagonal eigenvalue matrix ${\bf\Lambda}$, and the product of all rotation matrices used in the process becomes the orthogonal eigenvector matrix ${\bf V}$. (An element set to zero by one rotation may become nonzero again in a later rotation, but the sum of squares of all off-diagonal elements decreases with every rotation.) Specifically, in each iteration, we pre- and post-multiply ${\bf A}$ by ${\bf R}={\bf R}_{ij}(\theta)$ and ${\bf R}^T$ respectively, so that its $i$th and $j$th columns and rows are modified, while all other elements remain the same (only the modified elements are shown):

$$
{\bf A}'={\bf R}{\bf A}{\bf R}^T
=\left[\begin{array}{ccccccc}
&\cdots&a'_{1i}&\cdots&a'_{1j}&\cdots&\\
\vdots&&\vdots&&\vdots&&\vdots\\
a'_{i1}&\cdots&a'_{ii}&\cdots&a'_{ij}&\cdots&a'_{in}\\
\vdots&&\vdots&&\vdots&&\vdots\\
a'_{j1}&\cdots&a'_{ji}&\cdots&a'_{jj}&\cdots&a'_{jn}\\
\vdots&&\vdots&&\vdots&&\vdots\\
&\cdots&a'_{ni}&\cdots&a'_{nj}&\cdots&\end{array}\right]
\tag{20}
$$

As ${\bf A}={\bf A}^T$ is symmetric, the resulting matrix is also symmetric:

$$
{\bf A}'^T=({\bf R}{\bf A}{\bf R}^T)^T={\bf R}{\bf A}^T{\bf R}^T={\bf R}{\bf A}{\bf R}^T={\bf A}'
\tag{21}
$$

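For example, the 2nd and 4th columns and rows of a $5\times 5$ matrix ${\bf A}$ are modified by ${\bf R}={\bf R}_{24}(\theta)$:

$$
{\bf A}'={\bf R}{\bf A}{\bf R}^T=\left[\begin{array}{ccccc}
1 & 0 & 0 & 0 & 0\\ 0 & c & 0 & -s& 0\\ 0 & 0 & 1 & 0 & 0\\ 0 & s & 0 & c & 0\\ 0 & 0 & 0 & 0 & 1
\end{array}\right]
\left[\begin{array}{ccccc}
a_{11} & a_{12} & a_{13} & a_{14} & a_{15}\\
a_{21} & a_{22} & a_{23} & a_{24} & a_{25}\\
a_{31} & a_{32} & a_{33} & a_{34} & a_{35}\\
a_{41} & a_{42} & a_{43} & a_{44} & a_{45}\\
a_{51} & a_{52} & a_{53} & a_{54} & a_{55}
\end{array}\right]
\left[\begin{array}{ccccc}
1 & 0 & 0 & 0 & 0\\ 0 & c & 0 & s & 0\\ 0 & 0 & 1 & 0 & 0\\ 0 &-s & 0 & c & 0\\ 0 & 0 & 0 & 0 & 1
\end{array}\right]
$$

$$
=\left[\begin{array}{ccccc}
a_{11} & ca_{12}-sa_{14} & a_{13} & sa_{12}+ca_{14} & a_{15}\\
ca_{21}-sa_{41} & a'_{22} & ca_{23}-sa_{43} & a'_{24} & ca_{25}-sa_{45}\\
a_{31} & ca_{32}-sa_{34} & a_{33} & sa_{32}+ca_{34} & a_{35}\\
sa_{21}+ca_{41} & a'_{42} & sa_{23}+ca_{43} & a'_{44} & sa_{25}+ca_{45}\\
a_{51} & ca_{52}-sa_{54} & a_{53} & sa_{52}+ca_{54} & a_{55}\\
\end{array}\right]
$$

where

$$
\begin{aligned}
a'_{22}&=c^2a_{22}-sc\,(a_{24}+a_{42})+s^2a_{44}, &
a'_{44}&=s^2a_{22}+sc\,(a_{24}+a_{42})+c^2a_{44},\\
a'_{24}&=c^2a_{24}-s^2a_{42}+sc\,(a_{22}-a_{44}), &
a'_{42}&=c^2a_{42}-s^2a_{24}+sc\,(a_{22}-a_{44})
\end{aligned}
$$

We can verify that as ${\bf A}$ is symmetric, i.e., $a_{ij}=a_{ji}$, the resulting ${\bf A}'$ is also symmetric. In general, the $i$th and $j$th rows and columns are updated as shown below (for all $k\ne i,\,j$):

$$
\begin{aligned}
a'_{ik}&=a'_{ki}=c\,a_{ik}-s\,a_{jk}\\
a'_{jk}&=a'_{kj}=s\,a_{ik}+c\,a_{jk}\\
a'_{ii}&=c^2a_{ii}-2sc\,a_{ij}+s^2a_{jj}\\
a'_{jj}&=s^2a_{ii}+2sc\,a_{ij}+c^2a_{jj}\\
a'_{ij}&=a'_{ji}=(c^2-s^2)\,a_{ij}+sc\,(a_{ii}-a_{jj})
\end{aligned}
$$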
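To make the row and column update concrete, here is a small Python sketch that forms ${\bf A}'={\bf R}{\bf A}{\bf R}^T$ by touching only rows and columns $i$ and $j$. The function name `rotate_rows_cols`, the test matrix and the angle are arbitrary choices made for this illustration, indices are 0-based, and the convention $r_{ii}=r_{jj}=c$, $r_{ij}=-s$, $r_{ji}=s$ is assumed.

```python
import math

def rotate_rows_cols(A, i, j, c, s):
    """Return A' = R A R^T, modifying only rows/columns i and j of a copy of A."""
    n = len(A)
    B = [row[:] for row in A]
    for k in range(n):                    # rows i and j of R A
        B[i][k] = c * A[i][k] - s * A[j][k]
        B[j][k] = s * A[i][k] + c * A[j][k]
    C = [row[:] for row in B]
    for k in range(n):                    # columns i and j of (R A) R^T
        C[k][i] = c * B[k][i] - s * B[k][j]
        C[k][j] = s * B[k][i] + c * B[k][j]
    return C

theta = 0.3
c, s = math.cos(theta), math.sin(theta)
# Arbitrary symmetric 5 x 5 test matrix
A = [[float(min(p, q) + 1 + p * q) for q in range(5)] for p in range(5)]
A1 = rotate_rows_cols(A, 1, 3, c, s)      # 2nd and 4th rows/columns

# Largest asymmetry of the result; expected to be at roundoff level
print(max(abs(A1[p][q] - A1[q][p]) for p in range(5) for q in range(5)))
```

Elements outside rows and columns 1 and 3 (0-based) are copied unchanged, which is why a rotation costs only $O(n)$ operations when done this way.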

As ${\bf R}$ is an orthogonal matrix, the transformation ${\bf A}'={\bf R}{\bf A}{\bf R}^T$ preserves the norm of ${\bf A}$, i.e., $\|{\bf A}'\|_F=\|{\bf A}\|_F$ (the sum of squares of all elements is unchanged). Therefore, when an off-diagonal element $a_{ij}=a_{ji}$ is eliminated to zero, the sum of squares of the diagonal elements $a_{ii}$ and $a_{jj}$ is increased by $2a_{ij}^2$ (an individual diagonal element may still decrease). We desire to find the rotation angle $\theta$ so that after the rotation the off-diagonal element becomes zero:

$$
a'_{ij}=a'_{ji}=(c^2-s^2)\,a_{ij}+sc\,(a_{ii}-a_{jj})=0
\tag{24}
$$

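This equation can be written as

$$
\frac{a_{jj}-a_{ii}}{2a_{ij}}=\frac{c^2-s^2}{2sc}=\frac{1-t^2}{2t}=w,
\;\;\;\;\;\mbox{i.e.,}\;\;\;\;\;t^2+2wt-1=0
\tag{25}
$$

where we have defined

$$
t=\frac{s}{c}=\tan\theta,\;\;\;\;\;\;w=\frac{a_{jj}-a_{ii}}{2a_{ij}}
\tag{26}
$$

Solving the equation above for $t$, we get

$$
t=-w\pm\sqrt{w^2+1}
\tag{27}
$$

We will always choose the root with the smaller absolute value for better precision:

$$
t=\left\{\begin{array}{ll}-w+\sqrt{w^2+1} & \mbox{if } w\ge 0\\
-w-\sqrt{w^2+1} & \mbox{if } w<0\end{array}\right.
\tag{28}
$$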

Having found $t=s/c$, we can further find $c$ and $s$ in the rotation matrix ${\bf R}$:

$$
c=\frac{1}{\sqrt{t^2+1}},\;\;\;\;\;\;s=t\,c
\tag{29}
$$

by which $a_{ij}$ will be eliminated. This process is repeated to eliminate the off-diagonal component with the maximum absolute value, the pivot, until eventually the matrix becomes diagonal, containing all eigenvalues.
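The computation of the rotation parameters can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a reference implementation: the function name `jacobi_rotation` is made up here, the pivot $a_{ij}$ is assumed to be nonzero, and $w=(a_{jj}-a_{ii})/(2a_{ij})$, $t=s/c$ with $t^2+2wt-1=0$ is the convention that matches ${\bf A}'={\bf R}{\bf A}{\bf R}^T$ with $r_{ij}=-s$, $r_{ji}=s$.

```python
import math

def jacobi_rotation(a_ii, a_jj, a_ij):
    """Return (t, c, s) of the rotation that zeroes the pivot a_ij (a_ij != 0)."""
    w = (a_jj - a_ii) / (2.0 * a_ij)
    root = math.sqrt(w * w + 1.0)
    t = -w + root if w >= 0 else -w - root   # root of smaller absolute value
    c = 1.0 / math.sqrt(t * t + 1.0)
    s = t * c
    return t, c, s

a_ii, a_jj, a_ij = 4.0, 1.0, 2.0             # sample 2 x 2 block
t, c, s = jacobi_rotation(a_ii, a_jj, a_ij)

# Off-diagonal element after the rotation; should vanish up to roundoff
new_a_ij = (c * c - s * s) * a_ij + s * c * (a_ii - a_jj)
print(t, new_a_ij)
```

Because the two roots of the quadratic multiply to $-1$, the root chosen this way always satisfies $|t|\le 1$, i.e., $|\theta|\le 45^\circ$.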

Note that in each iteration only the $i$th and $j$th rows and columns of the matrix are affected. We can therefore update these rows and columns alone based on the equations given above, without actually carrying out the matrix multiplication, to reduce the computational cost. Moreover, to minimize the roundoff error, we prefer to update the elements of these rows and columns in an iterative fashion, by adding a term to the old value. To do so, we first solve the equation $a'_{ij}=(c^2-s^2)\,a_{ij}+sc\,(a_{ii}-a_{jj})=0$ above for $a_{ii}$ and $a_{jj}$ to get

$$
a_{ii}=a_{jj}-\frac{c^2-s^2}{sc}\,a_{ij},\;\;\;\;\;\;
a_{jj}=a_{ii}+\frac{c^2-s^2}{sc}\,a_{ij}
$$

Substituting these into the expressions $a'_{jj}=s^2a_{ii}+2sc\,a_{ij}+c^2a_{jj}$ and $a'_{ii}=c^2a_{ii}-2sc\,a_{ij}+s^2a_{jj}$ respectively, we get the following iterations:

$$
a'_{ii}=a_{ii}-t\,a_{ij}
\tag{30}
$$

and

$$
a'_{jj}=a_{jj}+t\,a_{ij}
\tag{31}
$$
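For readers who want the intermediate algebra, the substitution for $a'_{ii}$ can be written out as below. This is a sketch that assumes the convention ${\bf A}'={\bf R}{\bf A}{\bf R}^T$ with $r_{ii}=r_{jj}=c$, $r_{ij}=-s$, $r_{ji}=s$, and uses only $t=s/c$ and $c^2+s^2=1$; the case of $a'_{jj}$ is analogous.

```latex
\begin{aligned}
a'_{ii} &= c^2 a_{ii} - 2sc\,a_{ij} + s^2 a_{jj}
         = c^2 a_{ii} - 2sc\,a_{ij}
           + s^2\left(a_{ii} + \frac{c^2-s^2}{sc}\,a_{ij}\right) \\
        &= (c^2+s^2)\,a_{ii}
           + \frac{s}{c}\left(-2c^2 + c^2 - s^2\right) a_{ij}
         = a_{ii} - \frac{s}{c}\,a_{ij}
         = a_{ii} - t\,a_{ij}
\end{aligned}
```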

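We then rewrite the update equations for $a'_{ik}$ and $a'_{jk}$ ($k\ne i,\,j$) as:

$$
\begin{aligned}
a'_{ik}&=c\,a_{ik}-s\,a_{jk}=a_{ik}-s\,(a_{jk}+\tau\,a_{ik})\\
a'_{jk}&=s\,a_{ik}+c\,a_{jk}=a_{jk}+s\,(a_{ik}-\tau\,a_{jk})
\end{aligned}
$$

where

$$
\tau=\frac{s}{1+c}=\tan\frac{\theta}{2}
\tag{32}
$$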
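The equivalence of the direct and the incremental form of the update can be checked numerically. The values below are arbitrary sample numbers chosen for this sketch; $\tau=s/(1+c)$ and the forms $a_{ik}-s(a_{jk}+\tau a_{ik})$, $a_{jk}+s(a_{ik}-\tau a_{jk})$ are assumed, which follow from $c=1-s\tau$.

```python
import math

theta = 0.4
c, s = math.cos(theta), math.sin(theta)
tau = s / (1.0 + c)
a_ik, a_jk = 1.7, -0.6                      # arbitrary sample entries

direct_i = c * a_ik - s * a_jk              # direct form
direct_j = s * a_ik + c * a_jk
incr_i = a_ik - s * (a_jk + tau * a_ik)     # old value plus a correction term
incr_j = a_jk + s * (a_ik - tau * a_jk)

print(direct_i - incr_i, direct_j - incr_j)  # both differences are at roundoff level
```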

If we set an off-diagonal element to zero, $a'_{ij}=0$, by ${\bf A}'={\bf R}{\bf A}{\bf R}^T$, with $c$ and $s$ in ${\bf R}$ defined above, then the sum of squares of the diagonal elements $a'_{ii}$ and $a'_{jj}$ of the resulting matrix ${\bf A}'$ will be increased, $a'^2_{ii}+a'^2_{jj}=a^2_{ii}+a^2_{jj}+2a^2_{ij}$. If we iteratively carry out such rotations to set the off-diagonal elements to zero one at a time

$$
{\bf R}_n\cdots{\bf R}_2{\bf R}_1\,{\bf A}\,{\bf R}_1^T{\bf R}_2^T\cdots{\bf R}_n^T={\bf V}^T{\bf A}{\bf V}
\tag{33}
$$

until eventually the resulting matrix ${\bf\Lambda}={\bf V}^T{\bf A}{\bf V}$ becomes diagonal, it contains the eigenvalues of ${\bf A}$, and ${\bf V}={\bf R}_1^T{\bf R}_2^T\cdots{\bf R}_n^T$ contains the corresponding eigenvectors.

In summary, here are the steps in each iteration of the Jacobi algorithm:

- Find the off-diagonal element $a_{ij}$ ($i\ne j$) of the greatest absolute value, the pivot, and find $w=(a_{jj}-a_{ii})/(2a_{ij})$.

- Solve the quadratic equation $t^2+2wt-1=0$ for $t=s/c=\tan\theta$, taking the root of the smaller absolute value.

- Obtain $c=1/\sqrt{t^2+1}$ and $s=t\,c$.

- Update all elements in the $i$th and $j$th rows and columns.

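The iteration terminates when all off-diagonal elements are close enough to zero, and we get a diagonal matrix as the eigenvalue matrix:

$$
{\bf\Lambda}={\bf R}_n\cdots{\bf R}_1\,{\bf A}\,{\bf R}_1^T\cdots{\bf R}_n^T={\bf V}^T{\bf A}{\bf V}
\tag{34}
$$

where ${\bf V}={\bf R}_1^T\cdots{\bf R}_n^T$ is the eigenvector matrix.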

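Example: Find the eigenvalue matrix and eigenvector matrix of the following matrix:

$$
{\bf A}=\left[\begin{array}{cc}3&2\\ 2&1\end{array}\right]
$$

- Find $w$:

$$
w=\frac{a_{22}-a_{11}}{2a_{12}}=\frac{1-3}{2\times 2}=-0.5
\tag{35}
$$

- Find $t$. As $w<0$, we have

$$
t=-w-\sqrt{w^2+1}=0.5-\sqrt{1.25}=-0.618
\tag{36}
$$

The rotation angle is $\theta=\tan^{-1}t=-31.7^\circ$.

- Find $c$ and $s$:

$$
c=\frac{1}{\sqrt{t^2+1}}=0.8507,\;\;\;\;\;\;s=t\,c=-0.5257
\tag{37}
$$

- Find $a'_{11}$ and $a'_{22}$:

$$
a'_{11}=a_{11}-t\,a_{12}=3+1.2361=4.2361,\;\;\;\;\;\;
a'_{22}=a_{22}+t\,a_{12}=1-1.2361=-0.2361
\tag{38}
$$

- Find the eigenvector and eigenvalue matrices:

$$
{\bf V}={\bf R}^T=\left[\begin{array}{rr}c&s\\ -s&c\end{array}\right]
=\left[\begin{array}{rr}0.8507&-0.5257\\ 0.5257&0.8507\end{array}\right],
\;\;\;\;\;\;
{\bf\Lambda}={\bf V}^T{\bf A}{\bf V}
=\left[\begin{array}{cc}4.2361&0\\ 0&-0.2361\end{array}\right]
$$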
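The numbers of this example can be reproduced, and the relation ${\bf A}{\bf V}={\bf V}{\bf\Lambda}$ checked, with the short Python sketch below. The variable names are chosen here for illustration, and the formulas for $w$, $t$, $c$, $s$ follow the convention assumed in this section.

```python
import math

A = [[3.0, 2.0], [2.0, 1.0]]

w = (A[1][1] - A[0][0]) / (2.0 * A[0][1])       # -0.5
t = -w - math.sqrt(w * w + 1.0)                 # w < 0, root of smaller magnitude
c = 1.0 / math.sqrt(t * t + 1.0)
s = t * c

V = [[c, s], [-s, c]]                           # eigenvector matrix V = R^T
lam = [A[0][0] - t * A[0][1],                   # a'_11
       A[1][1] + t * A[0][1]]                   # a'_22

# Largest entry of A V - V Lambda; should be at roundoff level
residual = max(abs(sum(A[p][k] * V[k][q] for k in range(2)) - V[p][q] * lam[q])
               for p in range(2) for q in range(2))

print([round(x, 4) for x in lam], round(math.degrees(math.atan(t)), 1))
print(residual)
```

The exact eigenvalues of this matrix are $2\pm\sqrt{5}$, which agree with the rounded values $4.2361$ and $-0.2361$.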







