Like the perceptron network, the back propagation network (BPN) is a typical supervised learning network. Unlike the perceptron network, however, BPN is composed of three layers of nodes: the input, hidden, and output layers, each with its own number of nodes. Each node is fully connected to all nodes in the previous layer.

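Here is a minimal sketch of this architecture. It assumes NumPy, assumes bias terms at the hidden and output nodes, and uses our own names d, l, and m for the numbers of input, hidden, and output nodes. Full connectivity means the weights between consecutive layers form dense matrices. The helper `init_bpn` is hypothetical:

```python
import numpy as np

def init_bpn(d, l, m, seed=0):
    """Create weights for a fully connected d-l-m network (hypothetical helper).

    Every hidden node connects to all d input nodes and every output node
    connects to all l hidden nodes, so each layer's weights are a dense matrix.
    Bias vectors are an assumption of this sketch.
    """
    rng = np.random.default_rng(seed)
    return {
        "W_h": rng.normal(scale=0.1, size=(l, d)),  # input -> hidden weights
        "b_h": np.zeros(l),                         # hidden-node biases
        "W_o": rng.normal(scale=0.1, size=(m, l)),  # hidden -> output weights
        "b_o": np.zeros(m),                         # output-node biases
    }

params = init_bpn(d=4, l=5, m=3)
n_params = sum(p.size for p in params.values())
print(n_params)  # 4*5 + 5 + 5*3 + 3 = 43
```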

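Because it has two levels of learning (the input-to-hidden and the hidden-to-output weights, with nonlinear activation functions at the hidden nodes), BPN is much more powerful than the perceptron network. It can handle nonlinear classification problems, in which the classes cannot be separated by a linear decision boundary.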
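XOR is the standard illustration. Its two classes cannot be separated by a straight line, so a single-layer perceptron cannot classify them, but a network with one hidden layer can. The sketch below assumes sigmoid activations. Its weights are picked by hand rather than learned, and serve only to show that suitable hidden-layer weights exist:

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

# XOR patterns and class labels: not linearly separable.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0])

# Hand-picked (not trained) weights for a 2-2-1 network.
# Hidden node 1 approximates OR, hidden node 2 approximates AND.
W_h = np.array([[20.0, 20.0],
                [20.0, 20.0]])
b_h = np.array([-10.0, -30.0])
# The output node approximates "OR and not AND", which is XOR.
W_o = np.array([[20.0, -20.0]])
b_o = np.array([-10.0])

hidden = sigmoid(X @ W_h.T + b_h)          # shape (4, 2)
output = sigmoid(hidden @ W_o.T + b_o).ravel()
pred = (output > 0.5).astype(int)
print(pred)  # [0 1 1 0]
```

The training phase of BPN finds weights like these automatically from labeled examples.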

Classification with BPN takes place in two phases. In the training phase, the following two steps are repeated for all input patterns, which are presented to the input layer in random order:

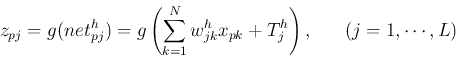

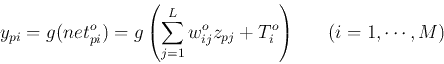
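The following is a minimal sketch of one training epoch. It rests on assumptions that go beyond the text: sigmoid activations in both layers, bias terms, a squared-error cost, and per-pattern gradient descent with a hypothetical learning rate `eta`. Each pattern is visited in random order. It goes through a forward pass, followed by a backward pass that propagates the output error back to the hidden layer and updates both weight matrices:

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_epoch(X, T, W_h, b_h, W_o, b_o, eta, rng):
    """One pass over all patterns in random order; weights updated in place.

    Assumes sigmoid activations and E = 0.5 * ||t - y||^2 per pattern.
    """
    for n in rng.permutation(len(X)):
        x, t = X[n], T[n]
        # Forward pass: input -> hidden -> output.
        z = sigmoid(W_h @ x + b_h)
        y = sigmoid(W_o @ z + b_o)
        # Backward pass: output error terms, then hidden error terms
        # (computed with W_o before it is updated).
        delta_o = (t - y) * y * (1 - y)
        delta_h = (W_o.T @ delta_o) * z * (1 - z)
        # Gradient-descent updates: step against dE/dW.
        W_o += eta * np.outer(delta_o, z)
        b_o += eta * delta_o
        W_h += eta * np.outer(delta_h, x)
        b_h += eta * delta_h

def total_error(X, T, W_h, b_h, W_o, b_o):
    Z = sigmoid(X @ W_h.T + b_h)
    Y = sigmoid(Z @ W_o.T + b_o)
    return 0.5 * np.sum((T - Y) ** 2)

rng = np.random.default_rng(0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
T = np.array([[0], [1], [1], [0]], dtype=float)  # XOR desired outputs
W_h, b_h = rng.normal(size=(3, 2)), np.zeros(3)
W_o, b_o = rng.normal(size=(1, 3)), np.zeros(1)

before = total_error(X, T, W_h, b_h, W_o, b_o)
for epoch in range(2000):
    train_epoch(X, T, W_h, b_h, W_o, b_o, eta=0.5, rng=rng)
after = total_error(X, T, W_h, b_h, W_o, b_o)
print(before, after)
```

Gradient descent on this cost can stall in a local minimum on XOR. The sketch therefore only compares the total error before and after training and does not claim a perfect fit.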

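The forward pass consists of two levels of computation: first from the input layer to the hidden layer, then from the hidden layer to the output layer. The first level, from the input to the hidden layer, is: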

The calculation above can also be expressed in matrix form:
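The sketch below makes the two forms concrete. It assumes a sigmoid activation g, bias terms, and hypothetical layer sizes d, l, and m. It computes the input-to-hidden level node by node and then as a single matrix-vector product, and checks that the two agree. It then applies the same structure to the hidden-to-output level:

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(1)
d, l, m = 4, 3, 2                      # hypothetical layer sizes
x = rng.normal(size=d)                 # one input pattern
W_h, b_h = rng.normal(size=(l, d)), rng.normal(size=l)
W_o, b_o = rng.normal(size=(m, l)), rng.normal(size=m)

# Input -> hidden, one node at a time: z_j = g(sum_i w_ji * x_i + b_j)
z_loop = np.empty(l)
for j in range(l):
    a_j = sum(W_h[j, i] * x[i] for i in range(d)) + b_h[j]
    z_loop[j] = sigmoid(a_j)

# The same computation in matrix form: z = g(W_h x + b_h)
z = sigmoid(W_h @ x + b_h)
assert np.allclose(z, z_loop)

# Second level, hidden -> output, has the same structure: y = g(W_o z + b_o)
y = sigmoid(W_o @ z + b_o)
print(y.shape)  # (2,)
```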

For example, in supervised classification, the number of output nodes can be set equal to the number of classes. The desired output for an input belonging to class c is then a vector in which all output nodes output 0 except the c-th one, which outputs 1 (one-hot encoding).
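Here is a sketch of building these one-hot desired outputs with NumPy. Class labels in the sketch are 0-based, so a 1-based class c corresponds to column c - 1. `predict_class` assigns an input to the class of its largest output node. It is a common decision rule assumed here for illustration, not one stated in the text:

```python
import numpy as np

def one_hot(labels, num_classes):
    """Desired outputs: row n is all zeros except a 1 at column labels[n]."""
    T = np.zeros((len(labels), num_classes))
    T[np.arange(len(labels)), labels] = 1.0
    return T

def predict_class(outputs):
    """Assumed decision rule: pick the output node with the largest value."""
    return np.argmax(outputs, axis=-1)

T = one_hot(np.array([2, 0, 1]), num_classes=3)
print(T)
# [[0. 0. 1.]
#  [1. 0. 0.]
#  [0. 1. 0.]]
```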