A random experiment may have binary (e.g., rain or dry) or multiple outcomes. For example, a die has six possible outcomes with equal probability, and a pixel in a digital image takes one of the $256$ gray levels (from 0 to 255), not necessarily with the same probability.

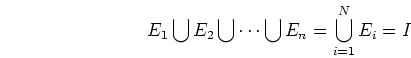

In general, these multiple outcomes can be considered as $N$ events $E_i$ with corresponding probabilities $P(E_i)$ ($i = 1, \dots, N$), which are

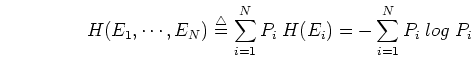

The uncertainty about the outcome of such a random experiment is the sum of the uncertainty $H(E_i)$ associated with each individual event $E_i$, weighted by the probability $P(E_i)$ of the event:

$$H = \sum_{i=1}^{N} P(E_i)\, H(E_i)$$

For example, the weather can have two possible outcomes: rain $E_1$ with probability $P(E_1)$ or dry $E_2$ with probability $P(E_2)$. The uncertainty of the weather is therefore the sum of the uncertainty of rainy weather and the uncertainty of dry weather, weighted by their probabilities:

$$H = P(E_1)\, H(E_1) + P(E_2)\, H(E_2)$$
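The probability-weighted sum described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the function names are hypothetical, the weather probabilities (0.25 for rain, 0.75 for dry) are made up for the example, and the per-event uncertainty is assumed to be the Shannon self-information $-\log_2 P(E_i)$, which this section does not itself define.

```python
import math


def weighted_uncertainty(probabilities, uncertainties):
    """Return the sum of per-event uncertainties weighted by event probabilities."""
    if len(probabilities) != len(uncertainties):
        raise ValueError("need one uncertainty value per event")
    # The events are assumed to cover all outcomes, so probabilities sum to 1.
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * h for p, h in zip(probabilities, uncertainties))


def self_information(p):
    """Assumed per-event uncertainty in bits: -log2(p), valid for 0 < p <= 1."""
    return -math.log2(p)


# Hypothetical weather example: rain with probability 0.25, dry with 0.75.
weather_probs = [0.25, 0.75]
weather_h = weighted_uncertainty(
    weather_probs, [self_information(p) for p in weather_probs]
)

# A fair die: six outcomes with equal probability.
die_probs = [1 / 6] * 6
die_h = weighted_uncertainty(die_probs, [self_information(p) for p in die_probs])

print(f"weather: {weather_h:.4f} bits, die: {die_h:.4f} bits")
```

Keeping the per-event uncertainty as a separate argument means the weighting step stays the same if a different uncertainty measure is substituted for the assumed one.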